Introduction

FastAPI is quickly becoming the framework of choice for many Python developers because it is simple to learn and use. Its Dependency Injection system is powerful yet intuitive, and it makes it easy to build plugins that handle requests and augment path operations. In this tutorial, I am going to show how this system can be used to build a simple file storage plugin. Let's start by looking at how FastAPI handles files and how advanced dependency injection works.

Handling Files

To handle files, first make sure that python-multipart is installed. Then declare file parameters in your route function just as you would for body or form parameters. To mark these parameters as file fields, declare them with File or UploadFile. UploadFile has several advantages over File. For example, it spools large files to disk instead of holding them entirely in memory, and it exposes metadata such as the filename and content type. You can read about the rest in the official docs. From here on I will use UploadFile exclusively. A sample route function handling a file upload is shown in the code block below.

```python
from typing import Union

from fastapi import FastAPI, UploadFile

app = FastAPI()


@app.post("/uploadfile/")
async def create_upload_file(file: Union[UploadFile, None] = None):
    if not file:
        return {"message": "No upload file sent"}
    else:
        return {"filename": file.filename}
```

Advanced Dependency Injection

In Python, an object instance can be made callable by implementing the `__call__` special method on its class. FastAPI dependencies are just callables. This means we can create a class instance configured with whatever parameters the situation calls for and then use that instance as a path operation dependency. Using this concept, I am going to build three classes for handling file storage: one for local storage, another for memory storage, and the last for AWS S3 storage.

A Base Class

The three classes share a lot of behavior, so I will start by building a common abstract base class for them to inherit from. This class implements the `__call__` method, which makes its instances callable and hence usable as dependencies. It has two abstract methods that subclasses must implement: upload and multi_upload, for single and multiple file uploads respectively. The result of each file storage operation is saved to a pydantic model, FileData. The code is shown in the block below.

```python
from abc import ABC, abstractmethod
from logging import getLogger
from pathlib import Path

from fastapi import UploadFile
from pydantic import BaseModel, HttpUrl

logger = getLogger(__name__)


class FileData(BaseModel):
    """
    Represents the result of an upload operation

    Attributes:
        file (bytes): File saved to memory
        path (Path | str): Path to file in local storage
        url (HttpUrl | str): A URL for accessing the object.
        size (int): Size of the file in bytes.
        filename (str): Name of the file.
        content_type (str): MIME type of the file.
        status (bool): True if the upload is successful else False.
        error (str): Error message for failed upload.
        message (str): Response message.
    """
    file: bytes = b''
    path: Path | str = ''
    url: HttpUrl | str = ''
    size: int = 0
    filename: str = ''
    content_type: str = ''
    status: bool = True
    error: str = ''
    message: str = ''


class CloudUpload(ABC):
    """
    Methods:
        upload: Uploads a single object to the cloud
        multi_upload: Upload multiple objects to the cloud

    Attributes:
        config: A config dict
    """

    def __init__(self, config: dict | None = None):
        """
        Keyword Args:
            config (dict): A dictionary of config settings
        """
        self.config = config or {}

    async def __call__(self, file: UploadFile | None = None,
                       files: list[UploadFile] | None = None) -> FileData | list[FileData]:
        # FastAPI injects the 'file' or 'files' form fields; dispatch on whichever was sent
        try:
            if file:
                return await self.upload(file=file)
            elif files:
                return await self.multi_upload(files=files)
            else:
                return FileData(status=False, error='No file or files provided',
                                message='No file or files provided')
        except Exception as err:
            return FileData(status=False, error=str(err), message='File upload was unsuccessful')

    @abstractmethod
    async def upload(self, *, file: UploadFile) -> FileData:
        """Upload a single file."""

    @abstractmethod
    async def multi_upload(self, *, files: list[UploadFile]) -> list[FileData]:
        """Upload multiple files."""
```

Local Storage

My local storage implementation simply saves the file to disk on the server. It has one optional config parameter, dest, which is the directory on disk where files are saved. If it is not provided, files are saved to a folder named uploads relative to the current working directory. Below is the implementation of local storage.

```python
import asyncio
from logging import getLogger
from pathlib import Path

from fastapi import UploadFile

from .main import CloudUpload, FileData

logger = getLogger(__name__)


class Local(CloudUpload):
    """
    Local storage for FastAPI.
    """

    async def upload(self, *, file: UploadFile) -> FileData:
        """
        Upload a file to the destination.

        Args:
            file (UploadFile): File to upload

        Returns:
            FileData: Result of file upload
        """
        try:
            # 'dest' is a directory; default to ./uploads and create it if it is missing
            dest_dir = Path(self.config.get('dest') or 'uploads')
            dest_dir.mkdir(parents=True, exist_ok=True)
            # Keep only the final path component so the client cannot write outside dest_dir
            dest = dest_dir / Path(file.filename).name
            file_object = await file.read()
            # Write in a worker thread so the event loop is not blocked by disk I/O
            await asyncio.to_thread(dest.write_bytes, file_object)
            await file.close()
            return FileData(path=dest, message=f'{file.filename} saved successfully',
                            content_type=file.content_type, size=file.size, filename=file.filename)
        except Exception as err:
            logger.error(f'Error uploading file: {err} in {self.__class__.__name__}')
            return FileData(status=False, error=str(err), message='Unable to save file')

    async def multi_upload(self, *, files: list[UploadFile]) -> list[FileData]:
        """
        Upload multiple files to the destination.

        Args:
            files (list[UploadFile]): The files to upload.

        Returns:
            list[FileData]: A list of uploaded file data
        """
        res = await asyncio.gather(*[self.upload(file=file) for file in files])
        return list(res)
```

Memory Storage

Memory storage reads the file into memory and returns its contents in the file attribute of the FileData object. It is quite simple, as shown in the code below.

```python
"""
Memory storage for FastStore. This storage is used to store files in memory.
"""
import asyncio
from logging import getLogger

from fastapi import UploadFile

from .main import CloudUpload, FileData

logger = getLogger(__name__)


class Memory(CloudUpload):
    """
    Memory storage for FastAPI.

    This storage keeps files in memory and returns their contents as bytes.
    """

    async def upload(self, *, file: UploadFile) -> FileData:
        try:
            file_object = await file.read()
            return FileData(size=file.size, filename=file.filename, content_type=file.content_type,
                            file=file_object, message=f'{file.filename} saved successfully')
        except Exception as err:
            logger.error(f'Error uploading file: {err} in {self.__class__.__name__}')
            return FileData(status=False, error=str(err), message='Unable to save file')

    async def multi_upload(self, *, files: list[UploadFile]) -> list[FileData]:
        # Read all files concurrently; results come back in the same order as 'files'
        return list(await asyncio.gather(*[self.upload(file=file) for file in files]))
```
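The multi_upload methods rely on asyncio.gather. It runs the uploads concurrently but returns their results in the order the files were passed in, not the order in which they finish. The sketch below demonstrates this with a hypothetical fake_upload coroutine that sleeps to stand in for a real upload.

```python
import asyncio

finished: list[str] = []


async def fake_upload(name: str, delay: float) -> str:
    """Hypothetical stand-in for an upload coroutine: sleeps to simulate slow I/O."""
    await asyncio.sleep(delay)
    finished.append(name)  # records completion order
    return name


async def multi_upload(jobs: list[tuple[str, float]]) -> list[str]:
    # Same pattern as multi_upload above: run all uploads concurrently
    return list(await asyncio.gather(*(fake_upload(name, delay) for name, delay in jobs)))


results = asyncio.run(multi_upload([('a.txt', 0.03), ('b.txt', 0.01), ('c.txt', 0.02)]))
```

Here `finished` ends up as b, c, a, while `results` stays a, b, c. A caller can therefore zip the returned FileData list back against the uploaded files.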

AWS S3

To create a storage service dependency using AWS S3, make sure boto3, the AWS SDK for Python, is installed. I placed the needed credentials in my .env file under the following keys:

- AWS_ACCESS_KEY_ID

- AWS_SECRET_ACCESS_KEY

- AWS_DEFAULT_REGION

- AWS_BUCKET_NAME

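The bucket and region names can also be set through the config parameter, using the bucket and region keys, and read back from the config attribute. Any extra_args entry in the config is passed to boto3 as ExtraArgs. My implementation of the AWS storage service is shown in the code block below.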

S3 Implementation:

```python
import os
import asyncio
import logging
from functools import cached_property
from urllib.parse import quote

import boto3

from .main import CloudUpload, FileData, UploadFile

logger = logging.getLogger(__name__)


class S3(CloudUpload):
    @cached_property
    def client(self):
        # Build the boto3 client once per instance, on first access
        key_id = os.environ.get('AWS_ACCESS_KEY_ID')
        access_key = os.environ.get('AWS_SECRET_ACCESS_KEY')
        region_name = self.config.get('region') or os.environ.get('AWS_DEFAULT_REGION')
        return boto3.client('s3', region_name=region_name, aws_access_key_id=key_id,
                            aws_secret_access_key=access_key)

    async def upload(self, *, file: UploadFile) -> FileData:
        try:
            extra_args = self.config.get('extra_args', {})
            bucket = self.config.get('bucket') or os.environ.get('AWS_BUCKET_NAME')
            region = self.config.get('region') or os.environ.get('AWS_DEFAULT_REGION')
            # boto3 is synchronous, so run the upload in a worker thread
            await asyncio.to_thread(self.client.upload_fileobj, file.file, bucket, file.filename,
                                    ExtraArgs=extra_args)
            url = f"https://{bucket}.s3.{region}.amazonaws.com/{quote(file.filename)}"
            return FileData(url=url, message=f'{file.filename} uploaded successfully',
                            filename=file.filename, content_type=file.content_type, size=file.size)
        except Exception as err:
            logger.error(err)
            return FileData(status=False, error=str(err), message='File upload was unsuccessful')

    async def multi_upload(self, *, files: list[UploadFile]) -> list[FileData]:
        tasks = [asyncio.create_task(self.upload(file=file)) for file in files]
        return list(await asyncio.gather(*tasks))
```

A Simple App

Let's look at a simple app demonstrating how the storage services are used. I have written a simple HTML page, saved as home.html, with forms that post to the endpoints backed by each storage service.

```html
<!DOCTYPE html>
<html>
<head>
    <title>File Upload</title>
</head>
<body>
<main style="margin:20%">
    <div style="margin: auto">
        <h1>S3 File Upload</h1>
        <form action="{{url_for('s3_upload')}}" method="post" style="margin:5px 5px" enctype="multipart/form-data">
            <div style="margin: 5px 0">
                <input type="file" name="file">
            </div>
            <button type="submit" style="display: block">Upload</button>
        </form>
    </div>
    <div style="margin: auto">
        <h1>Local File Upload</h1>
        <form action="{{url_for('local_upload')}}" method="post" style="margin:5px 5px"
              enctype="multipart/form-data">
            <div style="margin: 5px 0">
                <input type="file" name="files" multiple>
            </div>
            <button type="submit" style="display: block">Upload</button>
        </form>
    </div>
    <div style="margin: auto">
        <h1>Memory File Upload</h1>
        <form action="{{url_for('memory_upload')}}" method="post" style="margin:5px 5px"
              enctype="multipart/form-data">
            <div style="margin: 5px 0">
                <input type="file" name="file">
            </div>
            <button type="submit" style="display: block">Upload</button>
        </form>
    </div>
</main>
</body>
</html>
```

The sample app in the code below uses the three storage services in three different endpoints.

```python
from fastapi import FastAPI, Request, Depends
from fastapi.templating import Jinja2Templates
from dotenv import load_dotenv

from fastfiles import S3, Local, Memory, FileData

# Load the AWS credentials and bucket settings from .env
load_dotenv()

app = FastAPI()
templates = Jinja2Templates(directory='.')

# The key must match what S3.upload reads ('extra_args').
# ACLs only work if the bucket allows them; new S3 buckets disable ACLs by default.
s3 = S3(config={'extra_args': {'ACL': 'public-read'}})
local = Local()
memory = Memory()


@app.get('/')
async def home(req: Request):
    return templates.TemplateResponse('home.html', {'request': req})


@app.post('/s3_upload', name='s3_upload')
async def s3_upload(file: FileData = Depends(s3)) -> FileData:
    return file


@app.post('/local_upload', name='local_upload')
async def local_upload(files: list[FileData] = Depends(local)) -> list[FileData]:
    return files


@app.post('/memory_upload', name='memory_upload')
async def memory_upload(file: FileData = Depends(memory)) -> FileData:
    return file
```

Testing with OpenAPI and Swagger

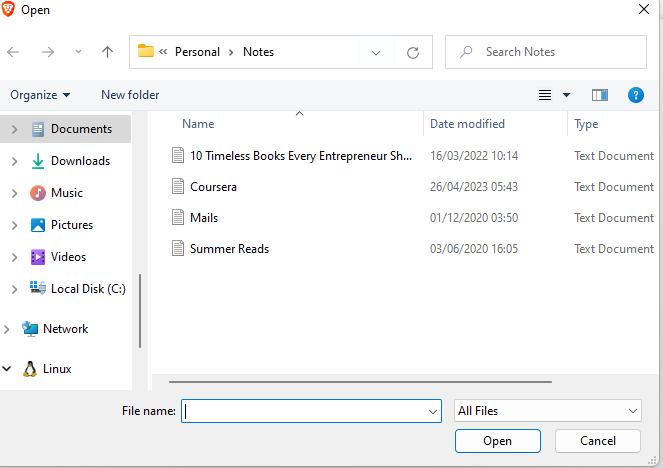

Multiple Files Local Storage With Swagger:

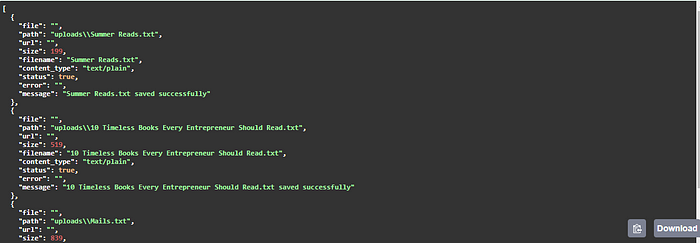

Response of Files Storage Request:

Testing With the HTML Form

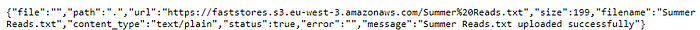

S3 File Storage With HTML Form:

JSON Response:

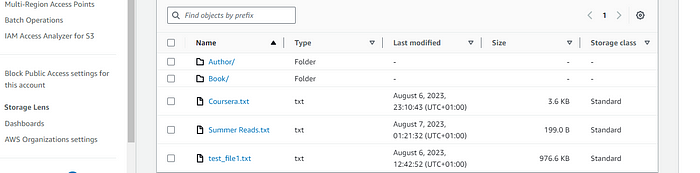

File In AWS S3:

Conclusion

The sample code shown here can easily be adapted to your own use case; you can go through it at this repo. Express.js was my first love in backend development, and I felt that something as comprehensive as Multer would not be amiss in FastAPI. So, combining the ideas in this project with my understanding of Multer, I have built filestore, which is basically Multer for FastAPI. I will go into detail on how it works in a future article. If you enjoyed this article or found it useful, follow me on this platform and buy me a coffee!
