Generative AI had a defining moment in the early 2020s: it made knowledge work feel instantly more productive. You could draft, summarize, brainstorm, translate, code, and create in seconds. The world watched systems “talk” fluently, and the natural assumption followed: if a model can write like a human, it can think like a human. That assumption produced thousands of pilots, internal chatbots, and glossy demos that looked impressive in meetings and underwhelming in operations. In 2026, the conversation is changing fast—not because generative AI stopped being useful, but because businesses have grown tired of “helpful” outputs that can’t be trusted to drive real decisions.

Decision-grade intelligence is the next step: AI that doesn’t just generate text, but produces recommendations you can defend, audit, and act on. It’s the difference between an assistant that suggests “you might reduce churn by improving onboarding” and a system that identifies the top churn drivers for your cohorts, quantifies impact, proposes the best intervention, shows confidence and evidence, and logs why it chose that path. It’s not just smarter AI. It’s AI integrated into how organizations measure truth, assign accountability, and execute change.

This shift is happening because organizations are converging on a hard reality: decision-making is where value is created or destroyed. Content generation saves time, but decision quality compounds. A better decision made consistently—pricing, inventory, risk, hiring, fraud, capacity planning, patient triage, incident response—moves margins, revenue, and resilience. Decision-grade intelligence aims to accomplish two objectives simultaneously: improve the decision and minimize the cost of making it. That requires a deeper architecture than prompts and chat interfaces. It requires systems that treat data, constraints, uncertainty, and governance as first-class citizens.

Why generative AI hits a ceiling in real operations

Generative AI is incredible at producing plausible language, code, and imagery. But the moment you ask it to operate inside a business context, three problems surface.

First, truth is not guaranteed. Large language models are probabilistic systems trained to predict tokens, not to certify facts. Even when they’re “mostly right,” a small number of confident errors can be catastrophic in high-stakes environments: finance, healthcare, security, compliance, and safety. Many teams discovered this the hard way when internal copilots gave answers that sounded authoritative but couldn’t be traced back to sources.

Second, context is messy and distributed. The information needed for a decision isn’t in a single document. It lives across databases, metrics tools, Slack threads, customer tickets, CRM records, contracts, product telemetry, and tribal knowledge. A chat interface can retrieve some of this, but retrieval alone doesn’t reconcile conflicting sources, check data freshness, or apply business rules. “Which number is correct?” becomes a workflow problem, not a model problem.

Third, a decision is more than an answer. A decision requires options, constraints, risk tolerance, and accountability. Who is the owner of this decision? What’s the acceptable error rate? What tradeoffs are allowed? What’s the cost of being late? Generative AI can produce suggestions, but operational decisions need structure, repeatability, and audit trails.

In 2026, the market is less impressed by “AI that can write” and more impressed by “AI that can be trusted.” That is the foundation of decision-grade intelligence: reliability, traceability, and outcomes.

What “decision-grade intelligence” actually means

Decision-grade intelligence is not a single model. It’s a capability: the ability to generate recommendations or actions that meet a standard of quality appropriate for real-world decisions.

A decision-grade system typically includes:

- Grounding in authoritative data (with provenance)

- Explicit uncertainty (confidence, probability, risk bounds)

- Constraints and policies (what is allowed vs. not allowed)

- Reasoning over structured outcomes (not just narrative text)

- Validation loops (tests, evaluations, monitoring, feedback)

- Auditability (logs, sources, rationale, and change history)

- Operational integration (approval flows, ticketing, automation, rollback)

In other words, decision-grade intelligence is a product and platform shift. It’s closer to flight software than a creative writing tool. It may still use generative AI—often heavily—but it wraps it in an engineering discipline that treats the model as one component in a larger system.

“Decision-grade intelligence isn’t about sounding confident. It’s about being defensible. If a system can’t explain where an answer came from, how reliable it is, and what happens when it’s wrong, it’s not ready to support real decisions,” says Kos Chekanov, CEO of Artkai.

The five forces driving the shift in 2026

A) Executive Decision Fatigue Is Reaching a Breaking Point

Leaders today are drowning in information but starving for clarity. Dashboards, AI summaries, reports, and alerts provide endless insights, yet few offer clear direction. Generative AI added speed to content creation, but it often increased cognitive load rather than reducing it. In 2026, executives expect AI to narrow options, prioritize actions, and surface the most defensible decision, not just present more data. Decision-grade intelligence responds by focusing on relevance, timing, and confidence—helping leaders decide what matters now.

B) The Cost of Wrong Decisions Is Rising Sharply

Market volatility, tighter margins, and regulatory pressure have made mistakes far more expensive. A flawed pricing change, delayed risk response, or incorrect forecast can ripple across the organization. Generative AI is useful for ideation, but it struggles in high-stakes scenarios where precision matters. Decision-grade intelligence introduces probability modeling, scenario evaluation, and risk thresholds, allowing teams to understand not just possible outcomes—but likely ones.

“As volatility increases, the tolerance for ‘close enough’ decisions disappears. Organizations are realizing that speed without accuracy is just a faster way to compound risk,” says Ankit Kanoria, Chief Growth Officer at Hiver.

C) Trust, Accountability, and Explainability Are Now Non-Negotiable

As AI moves deeper into finance, hiring, healthcare, and compliance workflows, organizations must be able to explain how decisions were made. Generative systems often lack traceability, which creates friction with auditors, regulators, and boards. Decision-grade intelligence is designed for scrutiny. It logs inputs, records rationale, cites data sources, and preserves decision history—making accountability a built-in feature rather than an afterthought.

D) AI Is Moving Closer to Execution, Not Just Assistance

In 2026, AI is no longer confined to chat interfaces or advisory roles. It is embedded directly into operational systems such as ERPs, CRMs, and deployment pipelines. When AI outputs trigger real actions—refunds, approvals, re-routing, or deployments—suggestive language and ambiguity become liabilities. Decision-grade intelligence prioritizes structured outputs, defined constraints, and safe automation, ensuring AI recommendations are ready for execution.

E) Organizations Are Measuring AI by Outcomes, Not Activity

Early AI adoption rewarded novelty and usage: prompt counts, chatbot engagement, or time saved. That mindset is fading. Leaders now evaluate AI by its impact on revenue, risk reduction, efficiency, and resilience. Decision-grade intelligence aligns naturally with this shift because it is built around measurable decision quality. The question in 2026 is no longer “What can the AI generate?” but “Did this AI help us make a better decision?”

“In my opinion, AI value is no longer proven by how often it’s used, but by whether it consistently improves decisions that move the business forward,” says William Fletcher, CEO at Car.co.uk.

The architecture: from prompts to decision systems

A typical “GenAI phase” looks like this:

- A chat UI

- Prompt templates

- Some retrieval over docs

- A few guardrails (sometimes)

- Lots of manual copy/paste into real systems

A decision-grade phase looks like this:

- A defined decision workflow (inputs → logic → outputs → actions)

- Data pipelines with documented ownership and freshness

- A retrieval layer with provenance and citations

- A reasoning layer that combines models, rules, and constraints

- A validation layer (unit tests, evals, red-team cases)

- A monitoring layer (drift, accuracy, latency, cost)

- A governance layer (access control, audit logs, approvals)

- A human-in-the-loop design with clear escalation and override rules

This shift is similar to the evolution of analytics. Organizations didn’t stop using spreadsheets; they built data warehouses, BI layers, metric definitions, and governance. Generative AI is the spreadsheet moment. Decision-grade intelligence is the modern data stack moment—except now it’s about decisions, not dashboards.

Evidence becomes a product feature

One of the most underappreciated changes in 2026 is that evidence is becoming part of the user experience.

A decision-grade system doesn’t just say “Do X.” It says:

- Do X

- Because (key drivers, logic, assumptions)

- Based on (data sources, time windows, segments)

- With confidence (probability or risk score)

- Tradeoffs (what you gain and what you give up)

- What to watch (leading indicators and failure modes)

This makes the system usable by leaders who must justify decisions. A CFO doesn’t need a poetic explanation. They need defensible reasoning with traceable inputs. The same is true for an SRE on call deciding whether to roll back, or a risk team deciding whether to block a transaction.

Decision intelligence is not “one AI”—it’s a layered approach

A practical decision-grade system rarely relies on a single model or technique. Instead, it blends multiple layers of intelligence, each designed to handle a specific aspect of decision-making. This layered approach reflects a hard-earned lesson from early AI adoption: different problems require different tools, and no single AI model can reliably handle truth, uncertainty, policy, and communication simultaneously.

A) Data and signal layer

Decision intelligence begins with grounding. Systems must pull from trusted, authoritative sources such as databases, metric stores, policy documents, incident runbooks, and operational logs. Crucially, the system must record exactly what data was used, from where, and at what point in time. This provenance is essential for trust, audits, and post-decision analysis. Without grounding, AI outputs may be fluent but unreliable, especially in environments where data changes rapidly.
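As a rough illustration of recording what data was used, from where, and when, the sketch below fingerprints the rows behind a decision. The names `ProvenanceRecord` and `record_provenance` and the example source string are assumptions made for this example, not part of any particular product.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ProvenanceRecord:
    source: str          # hypothetical source name, e.g. "warehouse.churn_daily"
    query: str           # the exact query or document id that was read
    retrieved_at: str    # ISO-8601 UTC timestamp of the read
    content_sha256: str  # fingerprint of the data actually used


def record_provenance(source: str, query: str, rows: list) -> ProvenanceRecord:
    """Fingerprint the rows a decision used so they can be re-verified later."""
    # sort_keys makes the serialization, and therefore the hash, deterministic
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return ProvenanceRecord(
        source=source,
        query=query,
        retrieved_at=datetime.now(timezone.utc).isoformat(),
        content_sha256=hashlib.sha256(payload).hexdigest(),
    )
```

Storing a hash rather than the raw rows keeps the record small while still letting an auditor confirm that a later re-read matches what the system saw at decision time.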

“A decision system is only as trustworthy as its data lineage. If you cannot trace an output back to the exact inputs and time context, you cannot rely on it when decisions are scrutinized,” says Brandy Hastings, SEO Strategist at SmartSites.

B) Structured reasoning

Unlike generative chat systems that produce free-form narratives, decision-grade systems output structured results. These typically include a recommended action, confidence level, key drivers, and supporting references. Structured reasoning reduces ambiguity, makes decisions easier to evaluate, and allows downstream systems to act on outputs programmatically rather than relying on human interpretation.
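A structured result of this kind might look like the following minimal sketch. The `Recommendation` class and its field names are illustrative assumptions; the point is only that a downstream system can validate and act on fields instead of parsing prose.

```python
from dataclasses import dataclass, field


@dataclass
class Recommendation:
    action: str        # the recommended action
    drivers: list      # key drivers and assumptions behind it
    sources: list      # supporting references: data sources, time windows
    confidence: float  # probability in [0, 1]
    tradeoffs: list = field(default_factory=list)
    watch: list = field(default_factory=list)  # leading indicators to monitor

    def __post_init__(self) -> None:
        # Reject outputs that cannot be evaluated or traced
        if not 0.0 <= self.confidence <= 1.0:
            raise ValueError("confidence must be between 0 and 1")
        if not self.sources:
            raise ValueError("a recommendation needs at least one cited source")
```

Validating at construction time means a recommendation without a cited source never reaches a reviewer or an automation step.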

C) Rules and constraints

Some aspects of decision-making should never be left to probabilistic inference. Compliance requirements, safety thresholds, financial limits, and approval policies must be enforced deterministically. These constraints act as guardrails, ensuring that AI recommendations remain within acceptable boundaries regardless of model behavior or prompt quality.
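To make the idea of deterministic enforcement concrete, here is a small sketch of a rule check that runs regardless of what a model suggests. The refund scenario, the `RefundPolicy` class, and its limits are invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RefundPolicy:
    max_auto_refund: float = 200.0    # assumed limit, for illustration only
    max_refunds_per_30_days: int = 2  # assumed limit, for illustration only


def check_refund(amount: float, recent_refunds: int, policy: RefundPolicy) -> list:
    """Return the violated rules; an empty list means the action is allowed."""
    violations = []
    if amount <= 0:
        violations.append("amount must be positive")
    if amount > policy.max_auto_refund:
        violations.append("amount exceeds automatic refund limit")
    if recent_refunds >= policy.max_refunds_per_30_days:
        violations.append("customer reached refund count limit")
    return violations
```

Returning every violated rule, rather than stopping at the first one, gives a reviewer the full picture of why an action was refused.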

D) Probabilistic models and forecasts

Many critical decisions depend on prediction: demand forecasts, churn likelihood, fraud risk, or incident probability. These tasks are often better handled by specialized statistical or machine learning models trained for precision, rather than by general-purpose language models alone. Their outputs provide quantitative signals that strengthen overall decision confidence.
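One simple way such a quantitative signal can feed a decision is an expected-value calculation. The sketch below assumes a churn model supplies a probability before and after an intervention; the function name and the numbers in the usage note are hypothetical.

```python
def intervention_value(p_before: float, p_after: float,
                       customer_value: float, cost: float) -> float:
    """Expected net value of an intervention that lowers churn probability."""
    for p in (p_before, p_after):
        if not 0.0 <= p <= 1.0:
            raise ValueError("probabilities must be between 0 and 1")
    # Expected retained value minus what the intervention costs
    return (p_before - p_after) * customer_value - cost
```

For example, lowering churn probability from 0.30 to 0.20 for a customer worth 1,000 at a cost of 50 yields an expected net value of about 50, while a drop from 0.30 to only 0.28 would be negative and so not worth the spend.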

“When decisions hinge on likelihood and impact, probabilities matter more than prose. Forecasting models provide the quantitative backbone that lets teams reason about risk instead of guessing at it,” says Raphael Yu, CMO at LeadsNavi.

E) Generative layers for communication and coordination

Generative AI still plays a vital role—but its strength lies in communication. It excels at summarizing evidence, drafting action plans, explaining rationale, and translating complex insights for different stakeholders. In decision-grade systems, generative models communicate decisions rather than invent them.

By 2026, the most effective systems stop debating “LLM vs. classic ML” and instead combine these layers intentionally—creating intelligence that is accurate, explainable, and operationally safe.

The new KPI: decision quality

If decision-grade intelligence is the goal, organizations need ways to measure decision quality. This is harder than measuring output volume, but it’s doable.

Common approaches include:

- Accuracy against known outcomes (forecast vs. actual, prediction vs. ground truth)

- Regret / counterfactual analysis (how did this decision compare to alternatives?)

- Time-to-decision and time-to-action

- Error rate and severity (how often did it require override, rollback, or escalation?)

- Business outcome movement (margin, churn, NPS, incident frequency, fraud loss)

- Trust metrics (adoption by senior decision-makers, approval rates, repeat usage)

The key is to treat AI like an operational system: define what “good” looks like and instrument it.
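A few of these measures are straightforward to instrument. The following sketch shows one possible way to compute forecast error, override rate, and a simple regret figure. The function names are assumptions, and in practice the counterfactual outcomes fed into `regret` usually have to be estimated, not observed.

```python
def mape(forecast, actual):
    """Mean absolute percentage error; pairs with a zero actual are skipped."""
    pairs = [(f, a) for f, a in zip(forecast, actual) if a != 0]
    if not pairs:
        raise ValueError("need at least one nonzero actual value")
    return sum(abs(f - a) / abs(a) for f, a in pairs) / len(pairs)


def override_rate(overridden):
    """Share of AI recommendations that a human overrode (list of booleans)."""
    if not overridden:
        raise ValueError("need at least one decision")
    return sum(overridden) / len(overridden)


def regret(chosen_outcome, alternative_outcomes):
    """Gap between the best alternative and the chosen option, floored at zero."""
    best = max(alternative_outcomes, default=chosen_outcome)
    return max(best - chosen_outcome, 0.0)
```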

Human-in-the-loop becomes “human-as-governor”

Early AI systems used humans as editors: “Here’s a draft, please fix it.” Decision-grade intelligence changes the role. Humans become governors and exception handlers.

A healthy pattern in 2026 looks like:

- AI proposes actions within a defined scope

- AI routes high-risk cases to humans

- Humans review decisions with evidence attached

- The system learns from overrides (where appropriate)

- The organization continuously updates policies and thresholds

This is closer to how credit risk systems and fraud engines evolved than how content tools evolved. The mindset is operational: let automation handle the predictable 80%, and elevate humans for the ambiguous 20%.
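The routing step in this pattern can be sketched as a small function. The thresholds, the `Route` names, and the choice to block outright on any rule violation are assumptions for illustration; a real system would take these from its own policies.

```python
from enum import Enum


class Route(Enum):
    AUTO_EXECUTE = "auto_execute"
    HUMAN_REVIEW = "human_review"
    BLOCK = "block"


def route_decision(confidence: float, impact_usd: float, violations: list,
                   min_confidence: float = 0.9,
                   max_auto_impact_usd: float = 500.0) -> Route:
    """Decide whether a proposed action runs automatically or goes to a person."""
    if violations:
        # Hard rule violations are never automated (assumed policy)
        return Route.BLOCK
    if confidence < min_confidence or impact_usd > max_auto_impact_usd:
        # Ambiguous or high-impact cases are escalated to a human
        return Route.HUMAN_REVIEW
    return Route.AUTO_EXECUTE
```

Keeping the thresholds as parameters makes them easy to version and adjust as the organization updates its policies.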

“In mature AI systems, humans are no longer there to clean up outputs. They exist to set boundaries, approve exceptions, and intervene when risk crosses an acceptable threshold,” says Beni Avni, founder of New York Gates.

Industry examples: what decision-grade looks like in practice

A) Customer support and success

Instead of summarizing tickets, decision-grade intelligence identifies root causes, predicts escalations, recommends next-best actions, and measures whether interventions reduced churn.

B) Finance and procurement

Rather than drafting emails, decision-grade systems flag anomalies, predict cash flow risk, recommend payment timing strategies, and ensure decisions align with policy and audit requirements.

C) Engineering and reliability

Beyond incident summaries, decision-grade systems offer automated diagnosis suggestions, safe rollback recommendations, and performance impact projections, paired with runbooks and telemetry evidence.

D) Sales and revenue operations

Beyond call notes, decision-grade intelligence prioritizes accounts, predicts deal risk, recommends outreach sequences, and shows which actions historically moved the needle for similar segments.

E) Security and fraud

Decision-grade systems correlate signals, rank threats, recommend containment steps, and track false positives/negatives over time with tight governance.

Across these domains, the pattern is consistent: decisions become repeatable workflows, not one-off chats.

The hard part: trust engineering

The real work of decision-grade intelligence is not the model prompt. It’s trust engineering—building the conditions under which stakeholders will rely on AI outputs.

Trust engineering includes:

- Clear data ownership and definitions

- Versioning of prompts, policies, and models

- Testing against edge cases and adversarial inputs

- Monitoring for drift and failure modes

- Controls for access and privacy

- Audit trails for investigations

- Playbooks for when AI is wrong

In 2026, organizations that win with AI aren’t those that “use the newest model.” They’re the ones who build the strongest trust layer.

“The hardest part of using AI is not generating answers. It is earning trust at the moment a real decision is on the line. That trust is engineered through controls, evidence, and accountability, not confidence alone,” says Sharon Amos, Director at Air Ambulance 1.

The decision stack: a simple framework teams can use

As organizations move from experimenting with AI to operationalizing it, one of the most common failure patterns is fragmentation. Teams deploy isolated copilots, build one-off automations, and experiment with models without a shared structure. The decision stack provides a practical framework to prevent this sprawl by organizing AI initiatives around the decisions that matter, rather than the tools themselves. It gives teams a repeatable way to design, deploy, and scale decision-grade intelligence.

“Teams do not fail with AI because the models are weak. They fail because decisions are scattered across tools with no shared structure. A clear decision stack turns experimentation into an operating system,” says Tom Bukevicius, Principal at Scube Marketing.

Layer 1: Decision inventory

The foundation of the stack is clarity. Teams begin by listing the decisions that materially affect outcomes—pricing changes, refunds, dispatching, risk approvals, incident response, or hiring screens. Each decision should have a clearly defined owner and scope. This step forces organizations to prioritize impact over novelty and ensures accountability from day one.

Layer 2: Data readiness

For each decision, identify:

- Inputs required

- Source of truth

- Freshness requirements

- Definitions and metric contracts
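
The items above could be captured in a small record per metric. This is a minimal sketch in which the `MetricContract` class, its fields, and the example values in the usage note are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass(frozen=True)
class MetricContract:
    name: str
    version: str
    owner: str
    source_of_truth: str      # the single table or system the number comes from
    definition: str           # how the number is calculated
    max_staleness: timedelta  # freshness requirement

    def is_fresh(self, last_updated: datetime,
                 now: Optional[datetime] = None) -> bool:
        """True if the data is recent enough to be used for this decision."""
        now = now or datetime.now(timezone.utc)
        return now - last_updated <= self.max_staleness
```

A decision workflow could refuse to produce a recommendation when `is_fresh` returns `False`, for instance when a churn metric with a 24-hour staleness limit was last refreshed two days ago.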

Layer 3: Policy and constraints

Every operational decision operates within boundaries. Compliance requirements, risk thresholds, approval hierarchies, and safety limits must be explicitly documented. This layer ensures that AI recommendations respect organizational rules and regulatory obligations rather than relying on informal guidance or prompts.

Layer 4: Intelligence

Choose the right mix:

- Forecast models

- LLM reasoning and summarization

- Retrieval over internal knowledge

- Rules engines

Layer 5: Workflow integration

Decisions should surface where work actually happens—CRMs, ticketing systems, ERPs, CI/CD pipelines, or messaging platforms. Integrating AI into existing workflows eliminates friction and increases adoption.

Layer 6: Evaluation and monitoring

Finally, teams define success metrics, test cases, and rollback procedures. Cost, latency, accuracy, and override rates are tracked continuously to ensure decisions remain reliable at scale.

This framework stops AI initiatives from turning into scattered copilots and forces them to become decision systems.

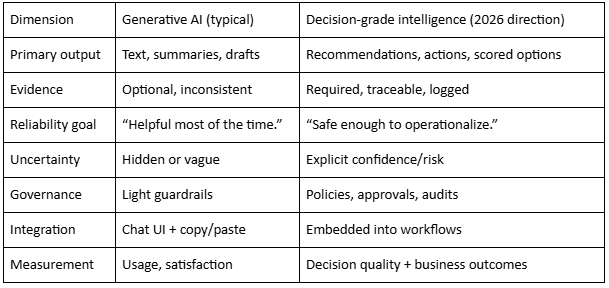

Generative AI vs. Decision-grade intelligence

Common failure modes (and how 2026 teams avoid them)

As organizations push AI deeper into operational workflows, many discover that early enthusiasm masks recurring structural problems. By 2026, high-performing teams have learned to anticipate these failure modes and design around them. The difference between stalled pilots and scalable decision intelligence often comes down to how these issues are addressed.

Failure mode 1: “We built a chatbot, and nobody used it.”

This is one of the most common outcomes of prompt-first AI initiatives. Teams launch a standalone chatbot expecting adoption, only to find that users revert to existing tools. The issue is not capability but placement. In 2026, successful teams integrate AI directly into the environments where decisions already occur—CRMs, ticketing systems, ERPs, or incident dashboards. By embedding intelligence into workflows, AI becomes part of the job rather than an extra step.

Failure mode 2: “It’s fast, but wrong sometimes.”

Speed without reliability quickly erodes trust. Early generative systems often produced confident but incorrect answers, making them unsuitable for high-stakes use. Mature teams address this by requiring evidence for every recommendation, constraining outputs with policies, and continuously evaluating performance against known outcomes. High-risk decisions are automatically routed to humans, ensuring AI accelerates work without introducing unacceptable risk.

“Speed only creates value when it is paired with reliability. In high-stakes decisions, a fast wrong answer is worse than no answer at all.”

Failure mode 3: “The AI says one number, finance says another.”

Conflicting metrics undermine credibility. This failure typically stems from unclear definitions and multiple sources of truth. In 2026, teams establish metric contracts that clearly define how numbers are calculated, where they come from, and how they are versioned. AI systems are explicitly bound to these contracts, eliminating ambiguity.

Failure mode 4: “We can’t explain why it made that recommendation.”

Opacity is a deal-breaker in regulated or executive environments. Leading teams design for auditability from the start, capturing decision logs, data sources, rationale, and thresholds. This allows decisions to be reviewed, challenged, and improved over time.
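One possible way to make a decision log tamper-evident is to chain entries by hash, so that editing an earlier record invalidates everything after it. This is a sketch of that general technique, not something the teams described here are known to use. The function names and entry fields are assumptions.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def _digest(prev_hash: str, entry: dict) -> str:
    body = json.dumps(entry, sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


def append_entry(log: list, entry: dict) -> dict:
    """Append a decision record that is linked to the previous one by hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    record = {"entry": entry, "prev_hash": prev_hash,
              "hash": _digest(prev_hash, entry)}
    log.append(record)
    return record


def verify(log: list) -> bool:
    """Recompute the chain; any edited or reordered record makes this False."""
    prev_hash = GENESIS
    for record in log:
        if record["prev_hash"] != prev_hash:
            return False
        if record["hash"] != _digest(prev_hash, record["entry"]):
            return False
        prev_hash = record["hash"]
    return True
```

Each entry would typically carry the action, rationale, data sources, and thresholds in effect, so a later review can see both what was decided and under which rules.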

Failure mode 5: “Costs exploded.”

Uncontrolled AI usage can quickly inflate infrastructure and model costs. Cost-aware teams cache retrievals, use smaller models where appropriate, and reserve advanced intelligence for decisions with measurable business impact.
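Two of these tactics, caching retrievals and reserving larger models for higher-impact decisions, can be sketched with the standard library. The `fetch_from_store` function stands in for an expensive retrieval call, and the model tier names and dollar threshold are placeholders, not real services.

```python
from functools import lru_cache


def fetch_from_store(query: str) -> tuple:
    """Stand-in for an expensive retrieval call; hypothetical, not a real API."""
    return (f"document matching '{query}'",)


@lru_cache(maxsize=1024)
def retrieve(query: str) -> tuple:
    # Results are cached per query string; tuples keep cached values immutable
    return fetch_from_store(query)


def pick_model(impact_usd: float,
               large_model_threshold_usd: float = 10_000.0) -> str:
    """Send low-impact decisions to a cheaper tier (names are placeholders)."""
    if impact_usd >= large_model_threshold_usd:
        return "large-model"
    return "small-model"
```

An in-process cache like this never expires entries on its own, so data with freshness requirements would need a time-based invalidation strategy on top of it.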

Avoiding these failures turns AI from an experimental tool into a dependable decision system.

What to prioritize in 2026 if you’re building toward decision-grade

By 2026, the biggest mistake organizations make with AI is trying to do too much, too fast. Decision-grade intelligence rewards focus, discipline, and sequencing. An 80/20 roadmap is not about deploying the most advanced models. It is about building the minimum system that reliably improves a real decision and then scaling from there.

- Start with one high-impact decision domain: Avoid the temptation to roll out “AI everywhere.” Instead, identify a decision that is frequent, costly, or high-risk—such as pricing adjustments, refund approvals, fraud detection, incident response, or demand planning. Choosing a narrow domain creates clarity, limits blast radius, and makes success measurable. Decision-grade intelligence is earned one decision at a time.

- Define what “good” actually means: Before introducing AI, teams must agree on success criteria. Is the goal faster decisions, fewer errors, lower risk, higher conversion, or improved forecast accuracy? Measurable outcomes anchor the system and prevent debates after deployment. If you can’t define what a better decision looks like, AI will only add noise.

- Fix data definitions and sources of truth early: Most AI failures trace back to inconsistent data. In 2026, mature teams resolve this upfront by aligning on metric definitions, ownership, freshness requirements, and versioning. Decision quality cannot exceed data quality, and scaling broken definitions only amplifies problems.

- Design for trust with evidence-first UX: Users must see why a recommendation exists. Evidence-first interfaces surface inputs, assumptions, confidence levels, and tradeoffs. Trust is not built by accuracy alone—it’s built by transparency.

- Add evaluation and monitoring before wide rollout: Testing, confidence thresholds, override tracking, and rollback procedures should exist before scaling. Waiting to evaluate after deployment is how silent failures spread.

- Integrate into workflows with safeguards: Decisions should surface where work happens, with approvals, escalation paths, and rollback mechanisms built in.

You don’t need perfection to start, but you do need discipline. Decision-grade intelligence is a systems game.

AI value shifts from content to outcomes

Generative AI will remain part of modern work the same way email and spreadsheets remain part of modern work. But in 2026, the differentiator is shifting. The organizations that pull ahead will be the ones that transform AI from a writing assistant into a decision advantage—faster decisions, better decisions, and decisions you can defend.

Decision-grade intelligence doesn’t mean removing humans. It means making human judgment more scalable. It replaces “AI said so” with “AI showed the evidence, quantified the risk, respected the constraints, and improved the outcome.”

That’s the real shift: from generating words to generating confidence.

