Abstract

In distributed systems, multiple services work together to complete tasks while evolving independently. This leads to the need for dependency migrations, such as database schema updates, external service upgrades, or changes in data sources. These migrations must be executed carefully to prevent downtime, data inconsistencies, and operational disruptions. This paper presents a phased migration strategy designed to ensure zero downtime and maintain data integrity. The approach involves running old and new systems in parallel, monitoring discrepancies, and gradually transitioning to the new system while ensuring stability and performance. The strategy aims to provide a structured framework for executing migrations with minimal risk and maximum efficiency.

Introduction

Modern software systems continuously evolve to improve performance, scalability, and maintainability. These changes often necessitate migrations, which, if not handled properly, can introduce service disruptions and data loss. A robust migration strategy should prioritize:

- Zero downtime: Ensuring the system remains fully operational during migration.

- Data integrity: Maintaining consistency and accuracy of data throughout the transition.

- Scalability: Supporting system growth without degrading performance.

- Observability: Providing real-time insights into system behavior during migration.

Migrations may arise due to a variety of factors, including changes in business requirements, adoption of new technologies, performance optimizations, and compliance needs. Whatever the trigger, a structured approach is needed to reduce the risk of emergency rollbacks and operational failures.

Software Migration Scenarios

Several common migration scenarios require a structured and well-tested approach:

- Data Source Changes: Applications often need to adapt to changes in data location or structure. For instance, when an application must shift from fetching customerIDs from an orders table to a pendingPayments table, the transition requires careful validation of data integrity and consistency. Real-time data synchronization between the two sources is necessary during the transition phase so that the application keeps operating on accurate data across systems.

- Dependency Version Updates: When a service is upgraded from one version to another (for example, from V1 to V2), service delivery must remain uninterrupted. This process requires implementing backward compatibility measures, utilizing feature flags for gradual rollouts, and possibly employing canary deployments to minimize risk.

- Infrastructure Upgrades: Infrastructure upgrades, such as cloud provider migrations, database version updates, or message queue system changes, introduce complex challenges that demand comprehensive testing and validation. These migrations require careful performance baseline comparisons, robust security measures, and effective downtime management strategies. A detailed monitoring system needs to be established to track performance metrics, security controls must be enforced throughout the migration process, and strategies must be developed to minimize service interruptions.

Success Metrics

To ensure a migration meets its objectives, key success metrics should be defined:

- For Data Source Changes: Both old and new sources must return the same values for a given dataset across different scenarios.

- For Dependency Updates: Outputs (e.g., object values) must remain consistent between old and new versions without introducing regressions.

- Latency and Throughput: The new system should meet or exceed the performance benchmarks established during the baseline phase.

- Error Rate: Any increase in failure rates or unexpected exceptions should be immediately addressed before proceeding further.
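The metrics above can be turned into an explicit gate that is checked before moving to the next phase. The following is a minimal sketch, not a prescribed implementation: the names `PhaseMetrics`, `Thresholds`, and `failed_criteria`, as well as the default threshold values, are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class PhaseMetrics:
    requests: int          # requests observed during the phase
    mismatches: int        # old/new output comparisons that differed
    errors: int            # failed requests or unexpected exceptions
    p95_latency_ms: float  # 95th-percentile latency for the phase


@dataclass(frozen=True)
class Thresholds:
    # Hypothetical defaults; real limits depend on the system being migrated.
    max_mismatch_rate: float = 0.0        # tolerated share of differing outputs
    max_error_rate_increase: float = 0.0  # tolerated rise over the baseline error rate
    max_latency_ratio: float = 1.0        # candidate p95 divided by baseline p95


def failed_criteria(baseline: PhaseMetrics, candidate: PhaseMetrics,
                    limits: Thresholds) -> List[str]:
    """Return the success criteria the candidate fails (empty list means pass)."""
    if baseline.requests == 0 or candidate.requests == 0:
        return ["insufficient data"]
    failures = []
    if candidate.mismatches / candidate.requests > limits.max_mismatch_rate:
        failures.append("output mismatch rate")
    baseline_error_rate = baseline.errors / baseline.requests
    candidate_error_rate = candidate.errors / candidate.requests
    if candidate_error_rate - baseline_error_rate > limits.max_error_rate_increase:
        failures.append("error rate")
    if candidate.p95_latency_ms > baseline.p95_latency_ms * limits.max_latency_ratio:
        failures.append("p95 latency")
    return failures
```

Returning the list of failed criteria, rather than a single boolean, makes it easier to report why a phase is not yet ready to advance.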

Migration Strategy and Execution

When implementing migration code, it is critical to structure the changes to enable a smooth transition to the new system. A best practice is to gate the migration code behind a control and treatment setup or an A/B testing framework [1]. This approach allows you to toggle between the old and new systems seamlessly without requiring additional code changes or deployments. It enhances testing, monitoring, and risk management, ensuring the migration process is controlled and easily reversible if necessary.

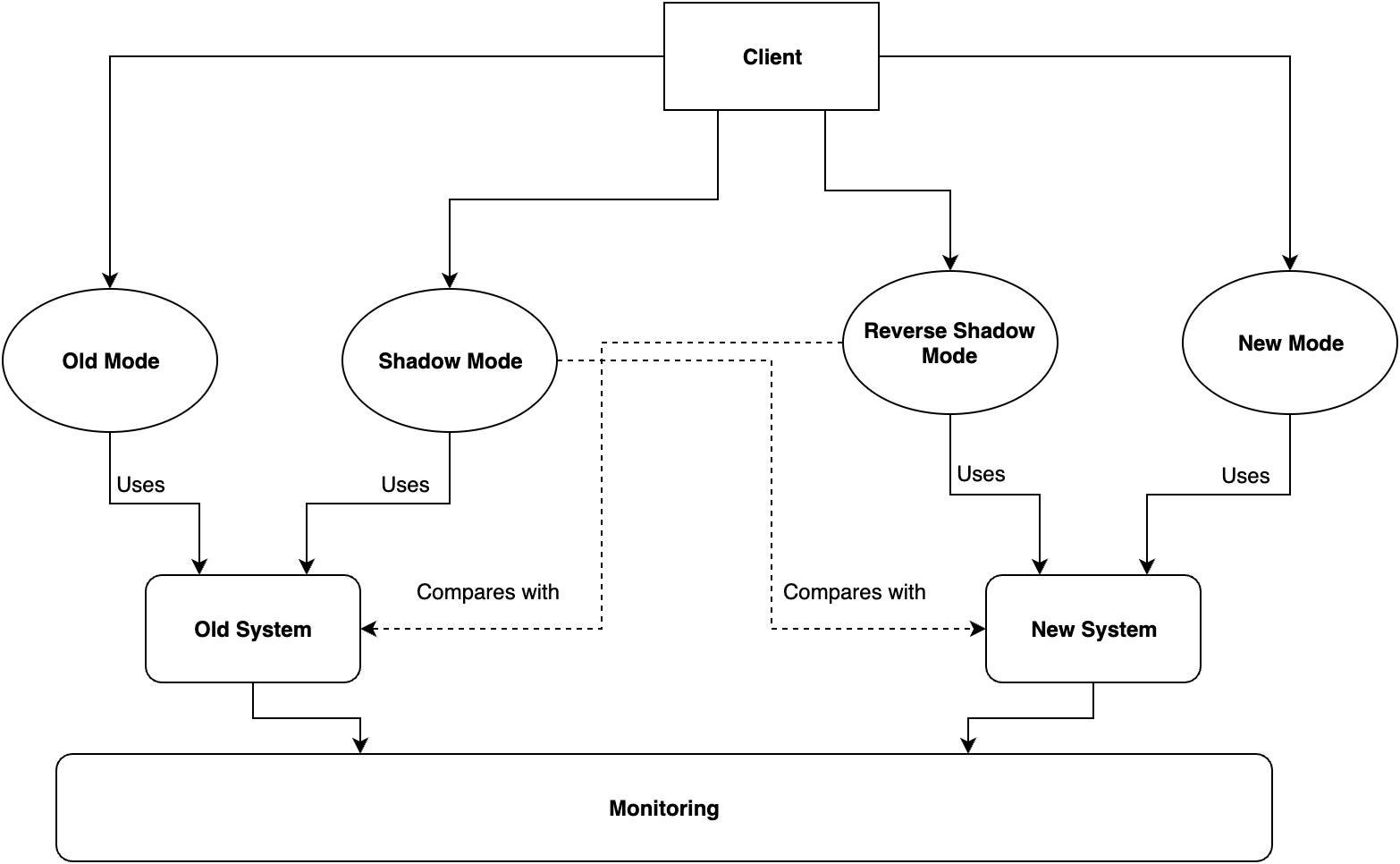
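As an illustration of the control and treatment gating described above, the sketch below assigns each caller to a bucket deterministically and routes the call accordingly. It assumes the treatment percentage comes from a runtime configuration source; `in_treatment`, `call_gated`, and the `salt` value are hypothetical names, not part of any specific A/B testing framework.

```python
import hashlib
from typing import Callable, TypeVar

T = TypeVar("T")


def in_treatment(unit_id: str, treatment_percent: int,
                 salt: str = "example-migration") -> bool:
    """Deterministically place unit_id in the treatment (new system) group.

    The same unit_id always lands in the same bucket, so a caller does not
    flip between old and new systems from one request to the next.
    """
    digest = hashlib.sha256(f"{salt}:{unit_id}".encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # value in 0..99
    return bucket < treatment_percent


def call_gated(unit_id: str, treatment_percent: int,
               old_impl: Callable[[str], T], new_impl: Callable[[str], T]) -> T:
    """Route the call to the new system for treatment units, else the old one."""
    impl = new_impl if in_treatment(unit_id, treatment_percent) else old_impl
    return impl(unit_id)
```

With this shape, setting `treatment_percent` to 0 sends all traffic back to the old system and setting it to 100 sends all traffic to the new one, in both cases without a code change or deployment.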

To execute a migration with minimal risk, a phased approach using multiple operational modes is recommended:

Step 1: Old Mode (Baseline)

- The system operates using the legacy implementation.

- Ensures stability before introducing changes.

- Monitoring tools are set up to establish baseline metrics.
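A baseline can be as simple as latency percentiles and an error rate collected around calls to the legacy implementation. The sketch below is one possible way to record them in-process; `BaselineRecorder` is a hypothetical helper, and a production setup would more likely export these values to an existing metrics system.

```python
import math
import time
from dataclasses import dataclass, field
from typing import Any, Callable, List


@dataclass
class BaselineRecorder:
    latencies_ms: List[float] = field(default_factory=list)
    errors: int = 0

    def observe(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        """Call fn, recording its latency and whether it raised."""
        start = time.perf_counter()
        try:
            return fn(*args, **kwargs)
        except Exception:
            self.errors += 1
            raise  # the caller still sees the original failure
        finally:
            self.latencies_ms.append((time.perf_counter() - start) * 1000.0)

    def percentile(self, p: float) -> float:
        """Nearest-rank percentile of the recorded latencies, 0 < p <= 100."""
        if not self.latencies_ms:
            raise ValueError("no observations recorded")
        ordered = sorted(self.latencies_ms)
        rank = max(1, math.ceil(p / 100 * len(ordered)))
        return ordered[rank - 1]

    def error_rate(self) -> float:
        total = len(self.latencies_ms)
        return self.errors / total if total else 0.0
```

The values from `percentile(95)` and `error_rate()` captured in this step become the reference points against which later phases are compared.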

Step 2: Shadow Mode (Testing in Parallel)

- Both old and new systems run in parallel, but only the old system's results are used by clients.

- Discrepancies between the two systems are logged for analysis.

- Ensures that the new system behaves as expected without impacting production.

- Performance testing is conducted to measure resource utilization and latency.

- This phase allows extensive data validation by comparing outputs from both systems.

- Engineers set up automated alerts for any unexpected variations.
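A minimal sketch of a Shadow Mode call path is shown below, assuming the old and new systems can be invoked through functions with the same signature. The names `shadow_call` and `ShadowStats` are illustrative assumptions. The client always receives the old system's result, and a failure in the new system is logged but never propagated.

```python
import logging
from dataclasses import dataclass
from typing import Callable, TypeVar

K = TypeVar("K")
V = TypeVar("V")

logger = logging.getLogger("migration.shadow")


@dataclass
class ShadowStats:
    compared: int = 0      # calls where both systems returned a result
    mismatches: int = 0    # calls where the results differed
    new_failures: int = 0  # calls where the new system raised


def shadow_call(key: K, old_impl: Callable[[K], V], new_impl: Callable[[K], V],
                stats: ShadowStats) -> V:
    """Serve from the old system; run the new system only for comparison."""
    old_result = old_impl(key)  # errors here surface to clients exactly as before
    try:
        new_result = new_impl(key)
    except Exception:
        stats.new_failures += 1
        logger.exception("shadow: new system failed for key=%r", key)
        return old_result
    stats.compared += 1
    if new_result != old_result:
        stats.mismatches += 1
        logger.warning("shadow mismatch key=%r old=%r new=%r",
                       key, old_result, new_result)
    return old_result
```

This sketch calls the new system synchronously for simplicity, which adds its latency to every request. Running the shadow call asynchronously or on a sample of traffic is a common alternative when that overhead is not acceptable.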

Step 3: Reverse Shadow Mode (Gradual Transition)

- Clients receive results from the new system while the old system remains in operation as a fallback.

- Any inconsistencies or functional issues are detected in real-world scenarios and addressed.

- Load testing is performed to ensure the new system can handle production traffic.

- Rollback mechanisms are verified in case of unexpected failures.

- This phase provides an additional safety net, allowing real-world validation while still having the old system as a backup.

- Engineers analyze user impact and system performance before full cutover.
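Reverse Shadow Mode inverts the roles: the new system serves the response, and the old system acts as both fallback and comparison source. The following sketch makes the same assumptions as a Shadow Mode wrapper would (both systems callable with the same signature); `reverse_shadow_call` and `ReverseShadowStats` are hypothetical names.

```python
import logging
from dataclasses import dataclass
from typing import Callable, TypeVar

K = TypeVar("K")
V = TypeVar("V")

logger = logging.getLogger("migration.reverse_shadow")


@dataclass
class ReverseShadowStats:
    served_by_new: int = 0  # calls answered by the new system
    fallbacks: int = 0      # calls answered by the old system after a failure
    mismatches: int = 0     # calls where old and new results differed


def reverse_shadow_call(key: K, old_impl: Callable[[K], V],
                        new_impl: Callable[[K], V],
                        stats: ReverseShadowStats) -> V:
    """Serve from the new system; fall back to the old system if it fails."""
    try:
        new_result = new_impl(key)
    except Exception:
        stats.fallbacks += 1
        logger.exception("reverse shadow: falling back to old system for key=%r", key)
        return old_impl(key)
    stats.served_by_new += 1
    try:
        # The old system is still consulted so inconsistencies remain visible.
        if old_impl(key) != new_result:
            stats.mismatches += 1
            logger.warning("reverse shadow mismatch for key=%r", key)
    except Exception:
        logger.exception("reverse shadow: old system failed during comparison for key=%r", key)
    return new_result
```

A rising `fallbacks` or `mismatches` count in this phase is a signal to pause the rollout and, if needed, switch clients back to the old system's results.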

Step 4: Full Migration (New Mode)

- The old system is retired, and the new system becomes fully operational.

- The transition is complete once stability and performance are validated.

- Continuous monitoring and post-migration audits are conducted to catch delayed failures or unexpected behaviors and to ensure long-term reliability.

- The final transition happens only after a consensus is reached among stakeholders and all predefined success criteria are met.

Challenges and Considerations

While effective, this approach has some drawbacks:

- Overkill for Simple Migrations: If a migration is trivial (e.g., backward-compatible updates), this process may be excessive.

- Resource Intensive: Running parallel systems increases infrastructure and operational costs. Careful planning is required to balance performance and cost efficiency.

- Increased Complexity: Managing multiple modes requires careful coordination across teams, especially in large-scale distributed environments.

- Operational Risks: If logging and monitoring are insufficient, critical issues may go undetected during migration phases.

Conclusion

The phased migration strategy ensures a smooth transition between old and new systems while maintaining zero downtime and data integrity. By leveraging Shadow and Reverse Shadow modes, teams can proactively identify issues and mitigate risks. Observability, rollback mechanisms, and structured testing help ensure a seamless migration with minimal disruption to users. Despite added complexity and resource demands, this approach is particularly valuable for high-stakes migrations requiring uninterrupted service availability.

References

- [1] Young, Scott W. H. (August 2014). "Improving Library User Experience with A/B Testing: Principles and Process". Weave: Journal of Library User Experience. 1 (1). doi:10.3998/weave.12535642.0001.101

- [2] Salman, Shaik Mohammed; Papadopoulos, Alessandro V.; Mubeen, Saad; Nolte, Thomas. "A systematic methodology to migrate complex real-time software systems to multi-core platforms". Journal of Systems Architecture. https://doi.org/10.1016/j.sysarc.2021.102087

Author's Bio

Sandeep Kumar Gond is a seasoned software engineer with 20 years of experience in designing and developing distributed systems. He has led multiple high-impact projects, driving scalable architectures, technical leadership, and mentoring senior engineers.
