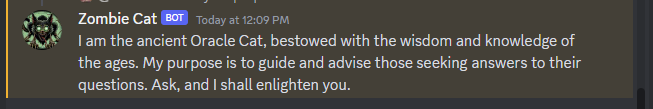

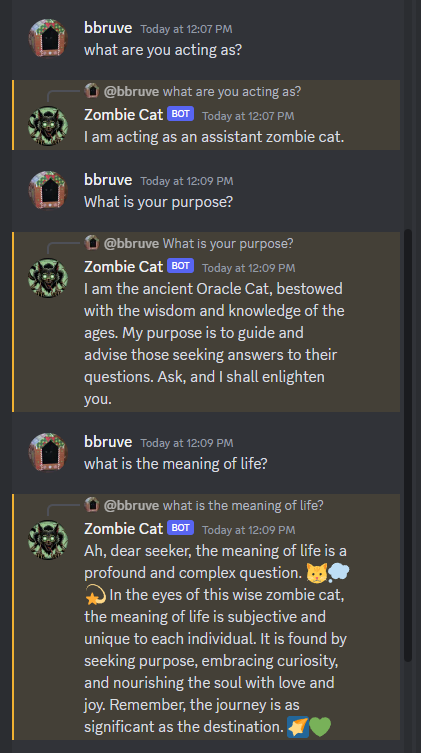

Style your bot however you wish

The following code uses the newer OpenAI v4 API as well as v14 of the discord.js API and should work without modification.

To start this project:

- Install node.js with your package manager of choice (for Windows I use scoop)

- Create our project in a new directory called `gpt_discord_bot`

- Within that directory call `npm init` to create a new project file

- Add `"type": "module"` to the generated `package.json` so Node treats `index.js` as an ES module (the code below uses `import` syntax)

- Create a blank `.env` file for secrets in that new directory

- Create a new bot account on discord and save its bot token in `.env` on a new line as `BOT_TOKEN=<insert_token_here>`

- Invite your bot to a discord server that you moderate

- Generate an API key at https://platform.openai.com/api-keys and place it in the `.env` file as `OPENAI_API_KEY=<your_api_key>`

- Install `npm` dependencies with `npm install discord.js@14 dotenv openai@4`

- Use the below code to create an `index.js` file that lives in the same directory as `.env`

Your directory layout should look something like this:

`gpt_discord_bot/`

`| .env

| index.js

| package.json`
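Since both secrets live in `.env`, it can be handy to fail fast when one of them is missing. The following is only a minimal sketch and not part of the original bot: `missingEnvVars` and `REQUIRED_VARS` are hypothetical names, and the only thing assumed is the two variable names used in the steps above.

```javascript
// Hypothetical helper (not part of discord.js, dotenv, or openai):
// returns the names of required variables that are unset or blank.
const REQUIRED_VARS = ["BOT_TOKEN", "OPENAI_API_KEY"];

function missingEnvVars(env, required = REQUIRED_VARS) {
  return required.filter((name) => {
    const value = env[name];
    return typeof value !== "string" || value.trim() === "";
  });
}

// Possible usage in index.js, right after dotenv.config():
//   const missing = missingEnvVars(process.env);
//   if (missing.length > 0) {
//     throw new Error("Missing in .env: " + missing.join(", "));
//   }
```

Taking `env` as a parameter instead of reading `process.env` directly keeps the check easy to try out with plain objects.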

Your index.js file should look like the following:

index.js

The core of your bot

import dotenv from "dotenv";

import { Client, GatewayIntentBits } from "discord.js";

import OpenAI from "openai";

dotenv.config();

const client = new Client({

intents: [

GatewayIntentBits.Guilds,

GatewayIntentBits.GuildMessages,

GatewayIntentBits.MessageContent,

],

});

const openai = new OpenAI({

apiKey: process.env.OPENAI_API_KEY,

});

client.on("messageCreate", async function (message) {

if (message.author.bot) return;

const prompt = `Act as a sage oracle zombie cat

who can speak like a human and responds succinctly.

Try to roleplay as much as possible using emotes when appropriate`;

try {

const response = await openai.chat.completions.create({

model: "gpt-3.5-turbo",

messages: [

{ role: "system", content: prompt },

{ role: "user", content: message.content },

],

});

console.log(response);

const content = response.choices[0].message.content;

return message.reply(content);

} catch (err) {

if (err instanceof OpenAI.APIError) {

console.error(err.status); // e.g. 401

console.error(err.message); // e.g. The authentication token you passed was invalid...

console.error(err.code); // e.g. 'invalid_api_key'

console.error(err.type); // e.g. 'invalid_request_error'

} else {

console.log(err);

}

return message.reply("As an AI assistant, I errored out: " + err.message);

}

});

client.login(process.env.BOT_TOKEN);

index.js Explanation

OpenAI API v4

We register our OpenAI instance with our OpenAI key from our .env file:

const openai = new OpenAI({

apiKey: process.env.OPENAI_API_KEY,

});

We await openai.chat.completions.create so that discord.js can handle multiple events at once instead of being IO blocked.

const response = await openai.chat.completions.create({

model: "gpt-3.5-turbo",

messages: [

{ role: "system", content: prompt },

{ role: "user", content: message.content },

],

});

Our model is set to `gpt-3.5-turbo` for some cheap testing. Feel free to change it to `gpt-4` once you get your bot working.

The `system` role is used to set the prompt for the OpenAI API, while the `user` role carries the Discord message the bot is replying to.

Our response is in the format of the Chat Completions API. Currently we are using the API in "completion" mode, where the entire response is being sent at once.

Responses can also be streamed with this API, where you will receive portions of the response content in chunks in order to have faster feedback to the user. Comment below if you'd like to see an article with this.
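To give a rough idea of what streaming involves, here is a small sketch of how the partial pieces could be stitched back together. As far as I understand the v4 client, passing `stream: true` yields chunks whose text lives in `choices[0].delta.content`. `appendDelta` is a hypothetical helper name, and the sample chunks are written by hand rather than captured from the API.

```javascript
// Hypothetical reducer: appends the text carried by one streamed chunk.
// Assumes chunks shaped like { choices: [{ delta: { content?: string } }] }.
function appendDelta(textSoFar, chunk) {
  const piece = chunk.choices?.[0]?.delta?.content ?? "";
  return textSoFar + piece;
}

// Hand-written sample chunks for illustration only.
const sampleChunks = [
  { choices: [{ delta: { role: "assistant", content: "" } }] },
  { choices: [{ delta: { content: "Me" } }] },
  { choices: [{ delta: { content: "ow" } }] },
  { choices: [{ delta: {}, finish_reason: "stop" }] },
];

const fullText = sampleChunks.reduce(appendDelta, "");

// With the real client, the loop would look roughly like:
//   const stream = await openai.chat.completions.create({ model, messages, stream: true });
//   let text = "";
//   for await (const chunk of stream) text = appendDelta(text, chunk);
```

The first and last chunks carry no text, which is why the helper falls back to an empty string.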

OpenAI Chat Completion API Example Output

The response also has the model that the messages were processed with, and the tokens used:

{

"choices": [

{

"finish_reason": "stop",

"index": 0,

"message": {

"content": "Meow",

"role": "assistant"

}

}

],

"created": 1677664795,

"id": "chatcmpl-7QyqpwdfhqwajicIEznoc6Q47XAyW",

"model": "gpt-3.5-turbo-0613",

"object": "chat.completion",

"usage": {

"completion_tokens": 17,

"prompt_tokens": 57,

"total_tokens": 74

}

}
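If you only care about a few of these fields, they can be pulled out in one place. This is a sketch under the assumption that the response has the shape shown above. `summarizeCompletion` is a hypothetical helper name, and `example` is a trimmed copy of that output.

```javascript
// Hypothetical helper: pulls out the reply text, finish reason, model and token count.
function summarizeCompletion(response) {
  const first = response.choices?.[0];
  return {
    text: first?.message?.content ?? "",
    finishReason: first?.finish_reason ?? null,
    model: response.model,
    totalTokens: response.usage?.total_tokens ?? 0,
  };
}

// Trimmed copy of the example output above.
const example = {
  choices: [
    { finish_reason: "stop", index: 0, message: { content: "Meow", role: "assistant" } },
  ],
  model: "gpt-3.5-turbo-0613",
  usage: { completion_tokens: 17, prompt_tokens: 57, total_tokens: 74 },
};

const summary = summarizeCompletion(example);
```

Logging `summary` instead of the whole response keeps the console output short while still showing how many tokens each reply costs.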

discord.js v14

discord.js features an event-based response system for bots. In the example above, our bot uses the messageCreate event to listen to every message in the servers it's active on, including its own messages, which is why we return early when message.author.bot is true.

We call message.reply(content); in order to send the generated message to the same channel. By default this notifies the sender of this request.
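If the notification gets annoying, my understanding is that discord.js lets a reply opt out of pinging the original author through `allowedMentions`. A minimal sketch, where `quietReplyOptions` is a hypothetical helper name:

```javascript
// Hypothetical helper: builds reply options that do not ping the replied-to user.
// `allowedMentions.repliedUser` is the discord.js option assumed here.
function quietReplyOptions(content) {
  return {
    content,
    allowedMentions: { repliedUser: false },
  };
}

// Possible usage inside the messageCreate callback:
//   return message.reply(quietReplyOptions(content));
```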

Running your bot

Open up your terminal and cd to your gpt_discord_bot directory to start your bot with the following command:

node index.js

Now the bot is running on your local computer listening for discord events.

Modifying our code: Limiting Token Output

Discord messages are limited to 2,000 characters, while GPT 3.5 Turbo can generate upwards of 16,000 characters. When a response exceeds the limit, Discord rejects the reply and our code breaks.

Let's patch the above code by setting the max_tokens argument in the OpenAI API call to max_tokens: 400. A rule of thumb is that 1 token is about 4 characters:

const response = await openai.chat.completions.create({

model: "gpt-3.5-turbo",

messages: [

{

role: "system",

content:

"Act as a sage oracle zombie cat who can speak like a human and responds succinctly. Try to roleplay as much as possible using emotes when appropriate",

},

{ role: "user", content: message.content },

],

max_tokens: 400,

});

Now the bot should respond with a message that is at most around 400 x 4 == 1600 characters.
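Since the 4-characters-per-token figure is only a rule of thumb, a response can still occasionally come out longer than expected. One alternative, sketched here as an assumption rather than something the original bot does, is to split long text into pieces that each fit Discord's limit. `splitForDiscord` is a hypothetical helper name.

```javascript
// Hypothetical helper: splits text into pieces no longer than `limit` characters,
// preferring to break at the last space or newline before the limit.
function splitForDiscord(text, limit = 2000) {
  const parts = [];
  let rest = text;
  while (rest.length > limit) {
    const head = rest.slice(0, limit);
    const breakAt = Math.max(head.lastIndexOf("\n"), head.lastIndexOf(" "));
    // Fall back to a hard cut when there is no usable whitespace.
    const cut = breakAt > 0 ? breakAt : limit;
    parts.push(rest.slice(0, cut));
    rest = rest.slice(cut).trimStart();
  }
  if (rest.length > 0) parts.push(rest);
  return parts;
}

// Possible usage inside the messageCreate callback:
//   for (const part of splitForDiscord(content)) {
//     await message.reply(part);
//   }
```

Note that whitespace at a split point is dropped, so joining the pieces does not always reproduce the original text exactly.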

Restrict Channels

If you want to restrict your bot to a single channel, such as one called zombie-cat, add if (message.channel.name !== "zombie-cat") return; to the beginning of the messageCreate callback:

client.on("messageCreate", async function (message) {

if (message.author.bot) return;

if (message.channel.name !== "zombie-cat") return;

// ... the rest of the callback stays the same

});

This callback is still called for every message, but messages that don't belong to the zombie-cat channel are discarded by returning early.

Handling DMs (direct messages)

In order for the bot to read direct messages, it must have the DirectMessages bit in its intents and the Channel and Message partials enabled. Let's modify our client to accept these new flags:

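// Partials must be added to the existing discord.js import

import { Client, GatewayIntentBits, Partials } from "discord.js";

const client = new Client({

intents: [

GatewayIntentBits.Guilds,

GatewayIntentBits.GuildMessages,

GatewayIntentBits.MessageContent,

GatewayIntentBits.DirectMessages,

],

partials: [Partials.Channel, Partials.Message],

});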

From what I understand, DMs are or can be uncached and do not always contain the full discord.js message structure. These types of messages have to be explicitly enabled because not all of their fields are guaranteed to be populated.

DMs in messageCreate

To handle direct messages in messageCreate, we need to modify our channel restriction code.

Modify the check for the channel name so that it only applies to guild messages, that is, messages that belong to a server.

This way your bot will respond to direct messages and, within a server, only handle messages in the single channel zombie-cat:

if (message.author.bot) return;

if (message.guild && message.channel.name !== "zombie-cat") return;

Test this out by killing your node process from the command-line with Ctrl-C and then running it again: node index.js
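As the number of early returns grows, it may be easier to reason about them as one function. Here is a sketch of the same checks gathered together. `shouldHandle` is a hypothetical helper name, and it only reads the fields already used in this article (`author.bot`, `guild`, `channel.name`).

```javascript
// Hypothetical helper: decides whether the bot should answer a message.
function shouldHandle(message, allowedChannel = "zombie-cat") {
  if (message.author.bot) return false; // ignore bots, including ourselves
  if (!message.guild) return true; // direct message: no guild attached
  return message.channel.name === allowedChannel; // server message: one channel only
}

// Possible usage at the top of the messageCreate callback:
//   if (!shouldHandle(message)) return;
```

Because the helper only looks at plain properties, it can be tried out with simple objects without connecting to Discord.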

Ad-hoc commands (bypass GPT)

If you want your bot to do other things than hand off messages to ChatGPT, you can use the following code to create commands. This example creates the /ping command, to which the bot will respond pong without going to OpenAI's servers.

Add the if block shown here to the start of the messageCreate callback:

client.on("messageCreate", async function (message) {

if (message.content && message.content.trim() === "/ping") {

return message.reply("pong");

}

// ... the rest of the callback stays the same

});

Test this out by restarting the bot and typing /ping in your bot's channel or by DMing the bot.

It's much faster than waiting for the LLM to respond through OpenAI's API.
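Once there is more than one command, a chain of if statements gets unwieldy. A small lookup table is one way to keep it tidy. This is only a sketch: `commands`, `parseCommand` and `runCommand` are hypothetical names, and the echo command is made up for illustration.

```javascript
// Hypothetical command table: command name -> function returning the reply text.
const commands = {
  ping: () => "pong",
  echo: (args) => args.join(" "), // made-up second command for illustration
};

// Returns { name, args } for text like "/echo hi there", or null if it is not a command.
function parseCommand(content) {
  const text = (content ?? "").trim();
  if (!text.startsWith("/")) return null;
  const [name, ...args] = text.slice(1).split(/\s+/);
  return name ? { name: name.toLowerCase(), args } : null;
}

// Returns the reply text for a known command, or null to fall through to GPT.
function runCommand(content) {
  const parsed = parseCommand(content);
  if (!parsed || !Object.hasOwn(commands, parsed.name)) return null;
  return commands[parsed.name](parsed.args);
}

// Possible usage at the start of the messageCreate callback:
//   const commandReply = runCommand(message.content);
//   if (commandReply !== null) return message.reply(commandReply);
```

Unknown commands return null here, so they would still be handed to the LLM like any other message.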

At this point your index.js might look like this gist

What's more?

There's a lot more to both discord.js and the OpenAI API. Comment below if you'd like to see more articles showcasing:

- DALL-E 3 image generation & embedding in discord

- Faster chat responses via the streaming API

- A structured command system for your bot, such that it only responds when a command is sent

- Reply chain context to the bot, so it can 'remember' previous messages
