Photo: Shutterstock

AI has quickly moved from a futuristic concept to an essential component of nearly every digital product. Yet despite the abundance of AI tools and models, the real challenge lies not in the technology itself but in integrating AI into products that deliver real value. Studies indicate that a large majority of AI projects never move beyond pilots into full production; by some estimates cited by the RAND Corporation, over 80% of AI initiatives never reach successful deployment. The gap isn't a lack of innovation. It's the difficulty of aligning cross-functional teams and executing with precision while the technology keeps changing.

The consequences are significant: organizations miss out on the efficiency gains and revenue growth that successful AI at scale can provide. For example, research by Boston Consulting Group found only 11% of companies reap substantial value from AI, with the majority failing to scale beyond initial pilots. Notably, those that scale AI effectively achieve 3× higher revenue impacts (up to 20% of total revenue) and significantly higher profit margins than those stuck at the pilot stage.

My name is Akhil Kumar Pandey. For over 16 years, I've led product initiatives across ERP, procurement, and compliance platforms, designing and scaling AI features in complex, high-stakes enterprise environments.

Traditional development approaches don't work well with AI. Agile practices often fall short when they aren't deeply embedded in day-to-day workflows, and applying Agile to AI/ML projects is not straightforward: naive adoption of Scrum or similar frameworks can lead to significant friction.

AI product development often involves a research-oriented, experimental workflow that doesn't neatly fit into fixed-length sprints or produce a shippable feature every two weeks.

What drives success is integrating agile with cross-functional collaboration, rapid feedback loops, and continuous iteration to create AI-driven solutions that solve real-world problems.

In this article, I'll outline a practical framework for building AI-powered products that deliver measurable business outcomes and do so with the speed and adaptability that agile provides.

The key is adapting Agile processes to fit AI/ML needs, rather than forcing AI work into a traditional Agile mold. Researchers and practitioners have proposed various approaches. For instance, using Kanban instead of Scrum can better accommodate continuous, flexible workflows that handle research spikes and variable cycle times.

We'll also explore why AI is no longer optional for modern products, and how agile can help teams move faster, manage uncertainty, and deliver solutions that continuously improve and scale.

Why Agile Is Essential to Operationalizing AI

AI isn't a 'nice-to-have' anymore; it's critical. The question is how fast you can integrate it without disrupting the user experience or damaging trust. A study by Zadeh, Khoulenjani, and Safaei (2024), covering 50 enterprise projects, found that companies embedding AI into core product workflows reported a 32% improvement in decision-making speed and a 27% increase in alignment with user needs.

However, many teams still stumble. Often, it's because AI is treated like a bolt-on feature instead of a core product function. That's where agile becomes critical. AI thrives in environments fueled by cross-functional collaboration, accelerated learning loops, and relentless validation.

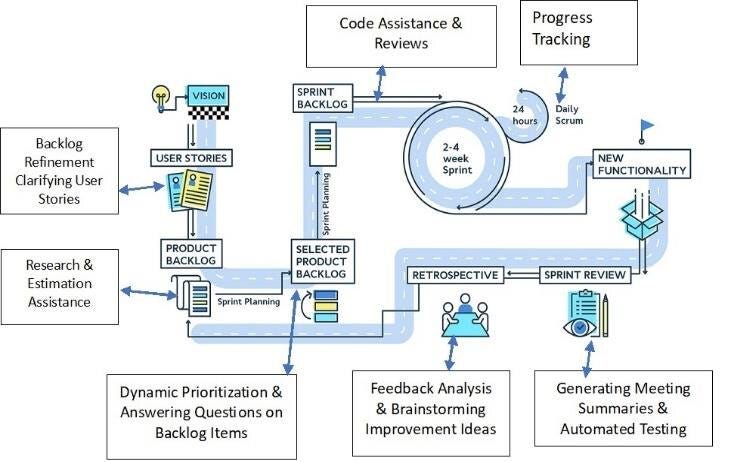

This is especially true for generative AI. It's already reshaping sprint rituals - automating backlog refinement, suggesting user stories, and assisting with code reviews.

Fei-Fei Li, Co-Director of Stanford's Institute for Human-Centered AI, frames the mindset shift clearly:

"Artificial intelligence is not a substitute for human intelligence; it is a tool to amplify human creativity and ingenuity."

That perspective matters. The focus isn't just on what AI can do - it's on how it changes how teams think, work, and ship. Agile, in turn, allows teams to manage the uncertainty that comes with evolving models and emerging use cases.

A 2024 paper by Bahi, Gharib, and Gahi in the International Journal of Advanced Computer Science and Applications outlines how generative AI improves development workflows through automated story generation, AI-assisted code validation, and backlog curation. These tools help teams move faster and avoid unnecessary complexity. They work best when embedded inside agile routines, not tacked on afterward.

The point is simple: agile and AI reinforce each other. Together, they shorten learning loops and increase the quality of what gets shipped.

The Framework for Building AI-Enabled Products in an Agile Environment

Think of AI as the engine and agile as the transmission. One generates output; the other determines how well that output translates to velocity and user value. This framework aligns three stages of development with agile practices to ensure each solution is viable, practical, and built for scale.

Stage 1: Ideation & Use Case Prioritization

The first step in building is identifying the right problem. In my work leading AI initiatives, the strongest use cases emerge when technical leads and business stakeholders co-create from the beginning. Early alignment doesn't just reduce rework - it helps avoid building polished solutions to the wrong problems.

One effective trick is to insert dedicated "research sprints" or discovery phases. The goal here is purely exploratory - whether it's testing different modeling approaches, feature engineering, or algorithm research - without the expectation of delivering production features.

These research sprints allow data scientists to experiment within an Agile framework, after which findings can be incorporated into implementation sprints. This process ensures a more informed transition from exploration to execution that is aligned with real-world needs.

We begin by identifying data-heavy workflows that are also repetitive - think forecasting, anomaly detection, or procurement risk scoring. But we don't stop at feasibility. We evaluate:

- Does this meaningfully improve the user experience?

- Does it resolve a real operational constraint?

A preprint posted on arXiv (Cabrero-Daniel, 2023) supports this data-first approach, showing how natural language processing and clustering techniques can help teams make smarter use case decisions.

We use design sprints and collaborative data discovery sessions for initial input, and these sessions include both product and engineering voices. For enterprise apps, we also bring compliance and data governance teams in early, especially when sensitive or regulated data is involved.

Success hinges on co-creation and prioritization based on constraints. Siloed brainstorming sessions often lead to ideas that collapse under real-world conditions. Cross-functional input ensures ideas aren't just technically interesting but operationally sound.
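The Stage 1 filters (data-heavy, repetitive, improves the user experience, resolves a real operational constraint) can be captured in a lightweight scoring sheet for prioritization workshops. This is an illustrative sketch, not the author's method: the criterion names, the 1-to-5 rating scale, and the weights are assumptions, and use cases that touch regulated data are simply tagged so compliance and governance can join early.

```python
from dataclasses import dataclass
from typing import Dict, List

# Hypothetical weights for the Stage 1 criteria; tune them per organization.
WEIGHTS: Dict[str, float] = {
    "data_availability": 0.25,  # is the workflow data-heavy, with usable data?
    "repetitiveness": 0.20,     # does the task recur often enough to automate?
    "user_impact": 0.30,        # does it meaningfully improve the user experience?
    "constraint_relief": 0.25,  # does it resolve a real operational constraint?
}


@dataclass
class UseCase:
    name: str
    ratings: Dict[str, int]           # workshop ratings, 1 (low) to 5 (high)
    uses_regulated_data: bool = False


def priority(case: UseCase) -> float:
    """Weighted score between 1 and 5; rejects missing or out-of-range ratings."""
    missing = set(WEIGHTS) - set(case.ratings)
    if missing:
        raise ValueError(f"{case.name}: unrated criteria {sorted(missing)}")
    for criterion, value in case.ratings.items():
        if not 1 <= value <= 5:
            raise ValueError(f"{case.name}: {criterion}={value} is outside 1..5")
    return sum(weight * case.ratings[c] for c, weight in WEIGHTS.items())


def shortlist(cases: List[UseCase]) -> List[str]:
    """Rank by score and tag cases that need compliance/governance review up front."""
    ranked = sorted(cases, key=priority, reverse=True)
    return [
        f"{c.name} ({priority(c):.2f})"
        + (" [governance review]" if c.uses_regulated_data else "")
        for c in ranked
    ]


if __name__ == "__main__":
    # Illustrative ratings only; not data from a real project.
    cases = [
        UseCase("demand forecasting", {"data_availability": 5, "repetitiveness": 5,
                                       "user_impact": 3, "constraint_relief": 4}),
        UseCase("procurement risk scoring", {"data_availability": 4, "repetitiveness": 4,
                                             "user_impact": 5, "constraint_relief": 5},
                uses_regulated_data=True),
        UseCase("invoice anomaly detection", {"data_availability": 3, "repetitiveness": 2,
                                              "user_impact": 2, "constraint_relief": 2}),
    ]
    for line in shortlist(cases):
        print(line)
```

The weights matter less than the conversation they force: a use case that scores high on data availability but low on user impact is exactly the "technically interesting but operationally unsound" idea the stage is meant to filter out.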

Stage 2: Prototyping & Testing AI Solutions

Once a use case is locked in, the goal shifts to quickly identifying risks and testing assumptions. The objective isn't speed for its own sake - it's faster learning.

In a recent engagement involving public cloud integration for project-led services, we used generative AI to streamline workforce planning. After just a few sprints, we had a working prototype running in a 'shadow' environment, which let us test it against real data without affecting anyone's work. The result was fewer planning errors and significant time savings on manual tasks.
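A shadow setup like the one described can be reduced to a small wrapper: every request is answered by the incumbent process, the candidate model sees the same input, and only the comparison is recorded. This is a minimal synchronous sketch; the `ShadowRunner` class, the agreement rule, and the toy headcount functions are hypothetical, and a production setup would typically run the candidate asynchronously so it adds no latency.

```python
import logging
from typing import Any, Callable

logger = logging.getLogger("shadow")


class ShadowRunner:
    """Serve the incumbent's answer; run the candidate on the same input and only record it."""

    def __init__(self,
                 incumbent: Callable[[Any], Any],
                 candidate: Callable[[Any], Any],
                 agree: Callable[[Any, Any], bool]) -> None:
        self.incumbent = incumbent
        self.candidate = candidate
        self.agree = agree
        self.comparisons = 0
        self.disagreements = 0
        self.candidate_errors = 0

    def __call__(self, request: Any) -> Any:
        served = self.incumbent(request)  # users only ever receive this value
        try:
            shadow = self.candidate(request)
        except Exception:  # a broken candidate must never affect users
            self.candidate_errors += 1
            logger.exception("shadow candidate failed on %r", request)
            return served
        self.comparisons += 1
        if not self.agree(served, shadow):
            self.disagreements += 1
            logger.info("disagreement on %r: served=%r shadow=%r", request, served, shadow)
        return served

    @property
    def disagreement_rate(self) -> float:
        return self.disagreements / self.comparisons if self.comparisons else 0.0


if __name__ == "__main__":
    # Hypothetical example: planned headcount derived from forecast hours.
    def current_rule(hours: float) -> int:
        return round(hours / 160)  # existing rule, assuming 160 hours per person-month

    def candidate_model(hours: float) -> int:
        return round(hours / 150)  # stand-in for the new model's estimate

    runner = ShadowRunner(current_rule, candidate_model, agree=lambda a, b: abs(a - b) <= 1)
    for hours in (800, 1600, 4800):
        runner(hours)
    print(f"disagreement rate: {runner.disagreement_rate:.2f}")
```

The logged disagreements are the useful artifact: they are the cases worth reviewing with planners before the candidate is allowed to serve real decisions.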

We prioritized explainability from the start. Tools like SHAP helped us understand how features contributed to predictions. We used TensorBoard to monitor model drift in real time. Jupyter dashboards helped us visualize false positives during retrospectives, refining the training data with each cycle.
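One common way to put a number on drift, offered here as a swapped-in technique rather than a description of the team's TensorBoard setup, is the Population Stability Index (PSI), which compares the distribution of a feature or model score in recent traffic against a baseline. The sketch below uses equal-width bins taken from the baseline; the bin count, the epsilon floor, and the rule-of-thumb thresholds in the docstring are conventional choices, not values from the article.

```python
import math
from typing import List, Sequence


def psi(baseline: Sequence[float], recent: Sequence[float],
        bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index of `recent` against `baseline`.

    Equal-width bins span the baseline's range; out-of-range values are clamped
    into the first or last bin. Commonly quoted rule of thumb: below 0.1 stable,
    0.1 to 0.25 moderate shift, above 0.25 significant shift.
    """
    if not baseline or not recent:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant baseline

    def shares(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            index = int((v - lo) / width)
            counts[min(max(index, 0), bins - 1)] += 1
        # Floor empty bins at eps so the log term stays finite.
        return [max(c / len(values), eps) for c in counts]

    expected, actual = shares(baseline), shares(recent)
    return sum((a - e) * math.log(a / e) for e, a in zip(expected, actual))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]       # e.g. last quarter's model scores
    shifted = [0.5 + i / 200 for i in range(100)]  # recent scores bunched higher
    print(f"no drift: {psi(baseline, baseline):.3f}")
    print(f"drift:    {psi(baseline, shifted):.3f}")
```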

What matters here is integrating model validation inside the sprint, not after deployment. We review accuracy, edge-case behavior, and feedback from test users every cycle. Transparency builds trust across both technical and non-technical teams.
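Validation inside the sprint can be made concrete as a gate the candidate model must pass before the sprint review: overall accuracy on a realistic holdout set, plus a list of named edge cases that must all behave correctly. In this sketch the `sprint_gate` function, the 0.9 threshold, and the toy invoice-routing model are assumptions for illustration; a real gate would track whichever metrics the team has agreed on.

```python
from typing import Any, Callable, Dict, List, Sequence, Tuple

Example = Tuple[Any, Any]  # (input, expected output)


def sprint_gate(predict: Callable[[Any], Any],
                holdout: Sequence[Example],
                edge_cases: Dict[str, Example],
                min_accuracy: float = 0.9) -> Tuple[bool, Dict[str, Any]]:
    """Return (passed, report) for one sprint's candidate model."""
    if not holdout:
        raise ValueError("holdout set must not be empty")
    correct = sum(1 for x, expected in holdout if predict(x) == expected)
    accuracy = correct / len(holdout)
    failed: List[str] = [name for name, (x, expected) in edge_cases.items()
                         if predict(x) != expected]
    report = {"accuracy": accuracy, "failed_edge_cases": failed}
    return accuracy >= min_accuracy and not failed, report


def route_invoice(amount: float) -> str:
    """Hypothetical stand-in model: send large invoices to manual review."""
    return "review" if amount > 10_000 else "approve"


if __name__ == "__main__":
    holdout = [(500, "approve"), (20_000, "review"), (9_000, "approve"), (15_000, "review")]
    edge_cases = {
        "zero amount": (0, "approve"),
        "negative amount (credit note)": (-50, "review"),  # the toy model misses this one
    }
    passed, report = sprint_gate(route_invoice, holdout, edge_cases)
    print(passed, report)
```

Here the model scores perfectly on the holdout set yet still fails the gate because of one named edge case, which is the kind of result that is easy to discuss with non-technical stakeholders at a sprint demo.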

As Craig Larman, co-creator of Large-Scale Scrum (LeSS), puts it:

"Any management approach that doesn't include AI as a central part of the future workforce is of the past."

In agile, testing isn't about hitting a perfect number - it's about creating a system that improves with every cycle, just like the team does.

Stage 3: Scaling AI Solutions Across the Lifecycle

Once an AI solution proves itself, the challenge shifts from building to scaling. That means retraining pipelines, model performance monitoring, version control for code and data, and production-grade deployment - all wired into the delivery pipeline.
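Version control for code and data can start with something as small as recording, for every training run, which code version and which exact dataset produced the model, and retraining only when one of them changes. The sketch below fingerprints a dataset with SHA-256 and keeps an in-memory run registry; the class and function names and the commit strings are hypothetical, and dedicated tools (for example DVC or the MLflow Model Registry) cover this far more thoroughly.

```python
import hashlib
import json
from dataclasses import dataclass
from typing import Dict, Iterable, List, Optional


def dataset_fingerprint(rows: Iterable[Dict]) -> str:
    """SHA-256 over a canonical JSON encoding of each row (row order matters, key order doesn't)."""
    digest = hashlib.sha256()
    for row in rows:
        digest.update(json.dumps(row, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


@dataclass(frozen=True)
class TrainingRun:
    model_name: str
    code_version: str  # e.g. the git commit that produced the model
    data_hash: str
    f1: float


class RunRegistry:
    """Append-only, in-memory stand-in for a real model registry."""

    def __init__(self) -> None:
        self._runs: List[TrainingRun] = []

    def record(self, run: TrainingRun) -> None:
        self._runs.append(run)

    def latest(self, model_name: str) -> Optional[TrainingRun]:
        for run in reversed(self._runs):
            if run.model_name == model_name:
                return run
        return None

    def needs_retraining(self, model_name: str, code_version: str, data_hash: str) -> bool:
        """Retrain if there is no run yet, or if the code or data changed since the last one."""
        last = self.latest(model_name)
        return last is None or (last.code_version, last.data_hash) != (code_version, data_hash)


if __name__ == "__main__":
    registry = RunRegistry()
    week1 = dataset_fingerprint([{"supplier": "A", "late_days": 3}])
    registry.record(TrainingRun("risk-scorer", "abc1234", week1, f1=0.81))  # illustrative values
    week2 = dataset_fingerprint([{"supplier": "A", "late_days": 3},
                                 {"supplier": "B", "late_days": 0}])
    print(registry.needs_retraining("risk-scorer", "abc1234", week1))  # False: nothing changed
    print(registry.needs_retraining("risk-scorer", "abc1234", week2))  # True: new data arrived
```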

Scaling an AI-enabled product requires a robust technical architecture that can accommodate AI's data and computational needs while allowing for frequent iterative changes.

In an Agile context, the architecture must be scalable, reliable, modular and extensible, so that new features or models can be integrated continuously without needing significant refactoring. A key principle is to design the system to isolate the AI/ML components behind well-defined interfaces, enabling the rest of the product to evolve or be deployed independently.
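Isolating the ML component behind a well-defined interface can be as simple as a small protocol that the rest of the product depends on, so that a rules-based version and a trained model are interchangeable. The sketch below uses Python's `typing.Protocol`; the `RiskScorer` name, the 0.5 threshold, and both scorer implementations (including the made-up weights) are hypothetical.

```python
import math
from typing import Protocol, Sequence


class RiskScorer(Protocol):
    """The only contract the rest of the product depends on."""

    version: str

    def score(self, features: Sequence[float]) -> float:
        """Return a risk score between 0 and 1."""
        ...


class RuleBasedScorer:
    version = "rules-1"

    def score(self, features: Sequence[float]) -> float:
        return 1.0 if sum(features) > 10 else 0.0


class LogisticScorer:
    version = "logistic-2"

    def __init__(self, weights: Sequence[float], bias: float = 0.0) -> None:
        self.weights = list(weights)
        self.bias = bias

    def score(self, features: Sequence[float]) -> float:
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        # Numerically stable logistic function: maps z into (0, 1) without overflow.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)


def flag_supplier(scorer: RiskScorer, features: Sequence[float], threshold: float = 0.5) -> bool:
    """Product code: knows the interface, not which model sits behind it."""
    return scorer.score(features) >= threshold


if __name__ == "__main__":
    features = [6.0, 6.0]
    for scorer in (RuleBasedScorer(), LogisticScorer([0.5, 0.5], bias=-5.0)):
        print(scorer.version, flag_supplier(scorer, features))
```

Because `flag_supplier` only sees the protocol, swapping the scorer is a deployment decision rather than a refactoring task, which is the property the paragraph above asks for.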

Modern best practices suggest using a microservices architecture for AI-enabled systems, which aligns well with Agile development. Microservice patterns emphasize the separation of concerns - for AI, this often means having distinct services or pipelines for data processing, model training, and model inference (serving).

By decoupling these components, teams can scale and update each part on its own schedule, for example retraining models or updating the feature extraction service, without disrupting the entire application.

We create internal enablement kits (decision trees, architectural guides, and validation protocols) so other teams can use, extend, or adapt the solution. These kits document model assumptions, input constraints, error-handling flows, and test results.

From a software design perspective, the architecture should allow for easy integration of AI components into the broader product. Many legacy products were not originally designed with AI in mind, so integrating a new machine learning module can be intrusive. A helpful design pattern in this case is the "Sidecar" pattern: deploying AI functionality as an adjacent service or process that runs alongside the main application.

This approach avoids rewriting large portions of the existing system. Instead, the AI model runs in its own container or service, and the main application interacts with it (for instance, via an API request to a prediction service). The sidecar encapsulates the complexity of the AI, so the model can be updated independently, and if it fails, it doesn't crash the entire system.

This loose coupling is crucial for agility: it allows AI features to be added, removed, or modified with minimal impact on other parts of the system, in line with Agile's embrace of change.
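From the main application's side, the sidecar is just another local endpoint, and the key design choice is what happens when it is slow or down. The sketch below posts JSON to a prediction service with a short timeout and falls back to a deterministic rule if the call fails, so a model outage degrades the feature instead of crashing it. The endpoint URL, payload shape, timeout, and fallback rule are all hypothetical; the transport is injectable so the behavior can be exercised without a running sidecar.

```python
import json
import urllib.request
from typing import Any, Callable, Dict

Payload = Dict[str, Any]
Transport = Callable[[Payload, float], Any]

# Hypothetical sidecar endpoint on the same host; not specified in the article.
SIDECAR_URL = "http://127.0.0.1:8500/predict"


def http_transport(payload: Payload, timeout: float) -> Any:
    """POST the payload as JSON to the sidecar and decode its JSON reply."""
    request = urllib.request.Request(
        SIDECAR_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.load(response)


class SidecarClient:
    def __init__(self, fallback: Callable[[Payload], Payload],
                 transport: Transport = http_transport, timeout: float = 0.3) -> None:
        self.fallback = fallback
        self.transport = transport
        self.timeout = timeout

    def predict(self, payload: Payload) -> Payload:
        try:
            result = self.transport(payload, self.timeout)
            if not isinstance(result, dict):
                raise ValueError("sidecar reply is not a JSON object")
        except (OSError, ValueError):
            # OSError covers refused connections, timeouts and urllib's URLError/HTTPError;
            # ValueError covers malformed or unexpected JSON replies.
            return {**self.fallback(payload), "source": "fallback"}
        return {**result, "source": "model"}


def manual_review_rule(payload: Payload) -> Payload:
    """Hypothetical deterministic fallback: route everything to a human."""
    return {"decision": "manual_review"}


def sidecar_down(payload: Payload, timeout: float) -> Payload:
    """Stand-in transport that simulates an unreachable sidecar."""
    raise ConnectionRefusedError("sidecar not running")


if __name__ == "__main__":
    client = SidecarClient(manual_review_rule, transport=sidecar_down)
    print(client.predict({"invoice_amount": 12_500}))
    # -> {'decision': 'manual_review', 'source': 'fallback'}
```

Tagging each response with its `source` also makes it easy to count how often users are being served by the fallback rather than the model.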

Other architecture patterns that benefit AI scalability include asynchronous messaging (using queues or streaming platforms like Kafka), which decouples data ingestion from processing. This enables the system to handle spikes in data or long-running model computations without slowing down user-facing features.
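The decoupling a broker like Kafka provides can be illustrated in-process with a bounded `asyncio.Queue`: the ingestion side only enqueues events, while a pool of workers runs the slower model calls at their own pace. This is a single-process stand-in for a real streaming platform, not Kafka client code; the worker count, queue size, and the sleep used to simulate model latency are arbitrary assumptions.

```python
import asyncio
from typing import Any, Callable, Dict, Iterable, List, Tuple

Model = Callable[[Any], Any]


async def ingest(queue: asyncio.Queue, events: Iterable[Any]) -> None:
    """Producer: hands events off and returns; it never waits on inference itself."""
    for event in events:
        await queue.put(event)  # suspends only when the bounded queue is full (back-pressure)


async def score_worker(queue: asyncio.Queue, model: Model,
                       results: Dict[Any, Any], errors: List[Tuple[Any, Exception]]) -> None:
    """Consumer: pulls events off the queue and runs the slower model call."""
    while True:
        event = await queue.get()
        try:
            await asyncio.sleep(0.01)  # simulated inference latency
            results[event] = model(event)
        except Exception as exc:  # one bad event must not kill the worker
            errors.append((event, exc))
        finally:
            queue.task_done()


async def run_pipeline(events: Iterable[Any], model: Model,
                       workers: int = 3, maxsize: int = 100):
    queue: asyncio.Queue = asyncio.Queue(maxsize=maxsize)
    results: Dict[Any, Any] = {}
    errors: List[Tuple[Any, Exception]] = []
    tasks = [asyncio.create_task(score_worker(queue, model, results, errors))
             for _ in range(workers)]
    await ingest(queue, events)
    await queue.join()  # resumes once every enqueued event has been marked done
    for task in tasks:
        task.cancel()  # workers loop forever, so stop them explicitly
    await asyncio.gather(*tasks, return_exceptions=True)
    return results, errors


if __name__ == "__main__":
    scored, failed = asyncio.run(run_pipeline(range(10), lambda x: x * x))
    print(f"{len(scored)} scored, {len(failed)} failed")  # -> 10 scored, 0 failed
```

With a real broker, the producer and the workers would live in separate services and the queue would be durable, but the division of responsibility is the same.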

Scaling is about maintaining clarity and adaptability as conditions change. Even highly accurate models break without strong infrastructure and consistent retraining.

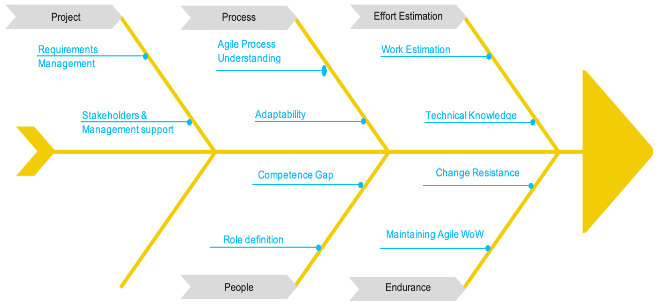

Overcoming Challenges in AI Product Development within Agile Frameworks


AI efforts rarely fail because of weak models. They fail when organizations treat AI like an isolated toolset instead of a cross-functional capability.

In regulated environments, I've seen promising AI projects stall due to the usual suspects: unclear requirements, inaccessible data, role ambiguity, or models no one could explain. These aren't technical problems - they're coordination problems.

Agile helps resolve these issues. We've adapted our agile processes to include AI-specific steps. Model updates are tracked as backlog items. Sprint demos showcase model accuracy trends and user-facing implications. Retrospectives include failure case analysis, not just velocity metrics.

We don't just publish F1 scores - we clarify their meaning in the context of user experience.

Metrics for Measuring Success in AI-Enabled Product Development

A single metric cannot define success in AI product development. We evaluate success across three layers:

- Model Metrics: Precision, recall, and F1 score - used to assess algorithmic performance.

- Product Metrics: Task completion rates, user satisfaction scores, adoption curves.

- Business Metrics: Efficiency gains, ROI, compliance improvements.
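For reference, the model-layer metrics are defined from true positives (TP), false positives (FP), and false negatives (FN). The worked numbers below are purely illustrative, not results from the projects described here, and show how each metric can be restated in user-experience terms:

```latex
\text{Precision} = \frac{TP}{TP + FP}, \qquad
\text{Recall} = \frac{TP}{TP + FN}, \qquad
F_1 = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}
    = \frac{2\,TP}{2\,TP + FP + FN}

% Illustrative case: an anomaly detector flags 50 invoices, 40 of them correctly
% (TP = 40, FP = 10), and misses 20 real anomalies (FN = 20).
\text{Precision} = \frac{40}{50} = 0.80, \qquad
\text{Recall} = \frac{40}{60} \approx 0.67, \qquad
F_1 = \frac{80}{80 + 10 + 20} = \frac{80}{110} \approx 0.73
```

In product terms, a precision of 0.80 means one in five flags costs a reviewer time for nothing, and a recall of about 0.67 means roughly one in three real anomalies still slips through. Because F1 is a harmonic mean, swapping the two values would give the same score with a very different user experience.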

In our Total Workforce Management rollout, generative AI reduced manual planning time by 40%, improved forecast accuracy by 25%, and strengthened audit traceability. Agile feedback loops helped us detect when user sentiment and model accuracy weren't aligned so that we could adjust in real time.

A blog post from MSOE Online supports this three-layer approach, noting that agile enables teams to adjust success criteria as models and markets shift.

Working Smarter with AI and Agile

AI and agile are a perfect fit, speeding up learning and boosting quality. Agile gives AI teams the feedback structure they need, while AI offers agile teams smarter tools, better insights, and shorter cycles.

The teams that figure out how to make them work together will ship better products and define modern product development. And it starts now - with one use case, one sprint, and a team aligned on building something that matters.

Integrating AI into agile frameworks isn't just a technical challenge; it's an organizational one. By aligning teams early, prototyping quickly, and scaling with precision, organizations can create AI-powered products that deliver measurable outcomes.

About the Author

Akhil Kumar Pandey is a Principal Product Manager focused on AI-driven enterprise software. He's spent over 16 years leading initiatives across ERP, procurement, and compliance platforms, designing and scaling AI features for global teams. He holds a Master's in Business Analytics and certifications in machine learning and product strategy. His work centers on building AI systems that perform in real-world conditions and are grounded in cross-functional accountability.

References:

- Bahi, A., Gharib, J. and Gahi, Y. (2024, March). Integrating Generative AI for Advancing Agile Software Development. International Journal of Advanced Computer Science and Applications, 15(3). https://thesai.org/Downloads/Volume15No3/Paper_6-Integrating_Generative_AI_for_Advancing_Agile_Software.pdf

- Lukic, V. et al. (2023, January). Scaling AI Pays Off. Boston Consulting Group. https://www.bcg.com/publications/2023/scaling-ai-pays-off

- Cabrero-Daniel, B. (2023, May 14). Enhancing Agile Project Management with AI: A Preprint Study. arXiv preprint. https://arxiv.org/pdf/2305.08093

- DZone. (2024, January). Microservice Design Patterns for AI.

- MSOE Online. (2024, January 16). Agile Product Development: Best Practices for Speed and Innovation. MSOE Business Blog. https://online.msoe.edu/business/blog/agile-product-development-best-practices-for-speed-and-innovation

- RAND Corporation. (2024, August). More Than 80% of AI Projects Fail to Move Beyond Pilots. https://www.rand.org/pubs/research_reports/RRA2680-1.html

- Zadeh, E.K., Khoulenjani, A.B. and Safaei, M. (2024, July 14). Integrating AI for Agile Project Management: Innovations, Challenges, and Benefits. International Journal of AI and Project Management. https://www.researchgate.net/publication/382241441_Integrating_AI_for_Agile_Project_Management_Innovations_Challenges_and_Benefits
