
Fastify — the name is the motto. At least that is what the framework for Node.js wants to stand for — Speed.

In the last article we saw that Express.js is clearly slower than bare Node.js. That made me ask what else can be used to build fast web servers without working directly with Node's own HTTP module.

Fastify came to my mind — so I wrote the same API three times again — in pure Node.js, in Express.js, and in Fastify. And now let me show you how Fastify performs against the competition.

Let's start by writing the APIs.

Let's install Fastify first

As usual, we do this with NPM: npm install fastify

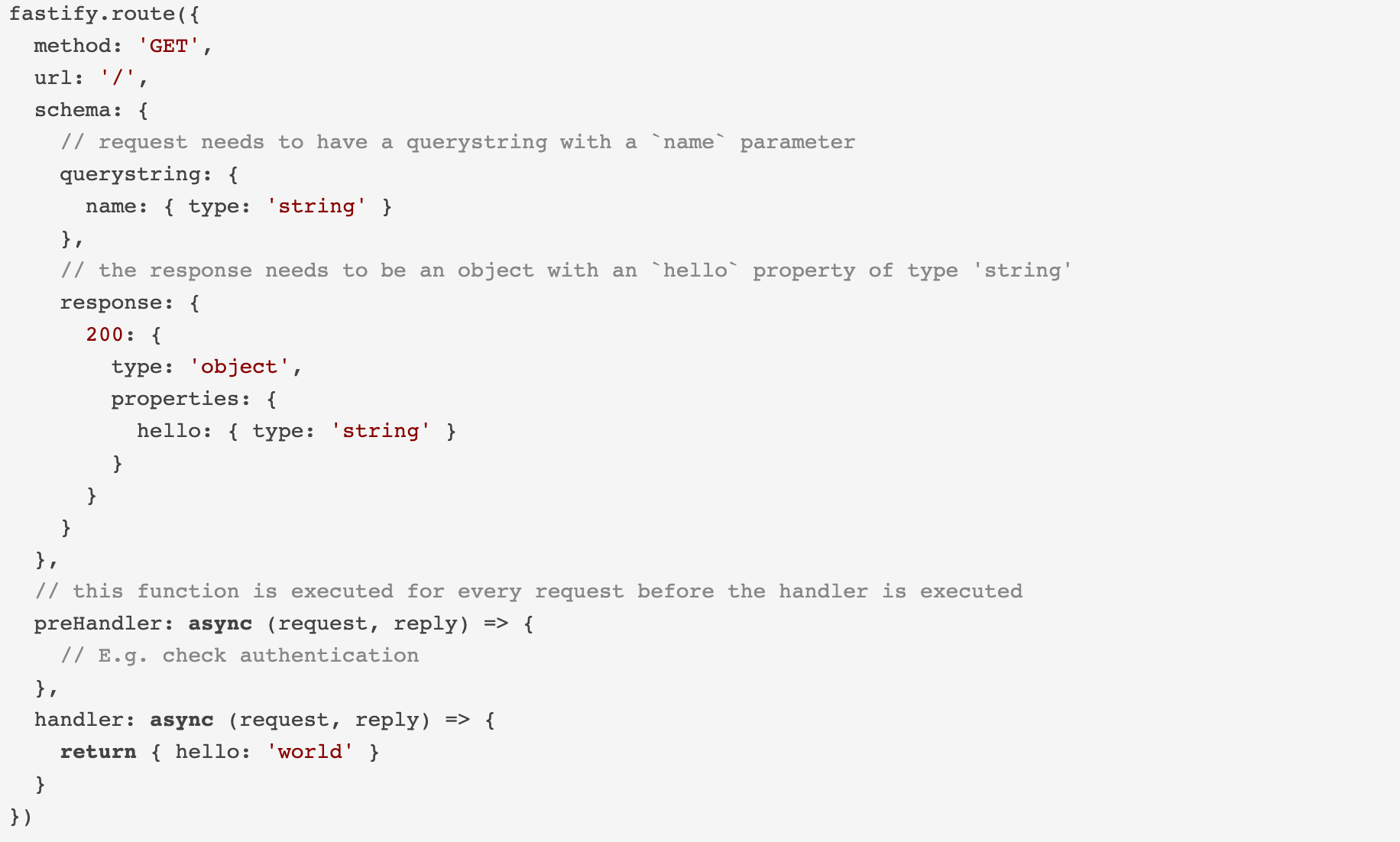

Now we can write the Fastify server. There are two ways to develop a web application in this framework. First, there is the possibility to build a schema that is used to do input and output validation. On the official website of Fastify you can see this quite well:

Source: Fastify

Here you can define what you want to receive, for example as query parameters. You can also use a preHandler function, which works a bit like middleware: it runs before the route handler on every request to that route.
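The snippet from the Fastify site is not reproduced in this text. As a rough sketch of the schema-based style, the route below is written as a plain options object of the kind that `fastify.route()` accepts. The schema contents, the empty `preHandler` body, and the response format are assumptions chosen to match the benchmark API, not the example from the Fastify site.

```javascript
// Sketch of a schema-based Fastify route, written as a plain options object.
// The schema, the hook body, and the response text are illustrative assumptions.
const routeOptions = {
  method: 'GET',
  url: '/api',
  schema: {
    // Input validation: both query parameters must be present and be strings.
    querystring: {
      type: 'object',
      required: ['name', 'lastname'],
      properties: {
        name: { type: 'string' },
        lastname: { type: 'string' }
      }
    },
    // Output description for a 200 response.
    response: {
      200: { type: 'string' }
    }
  },
  // Runs before the handler for requests to this route; done() continues.
  preHandler: (request, reply, done) => {
    done();
  },
  handler: (request, reply) => {
    const { name, lastname } = request.query;
    reply.send(`${name} ${lastname}`);
  }
};

// Registering it would look like this (requires `npm install fastify`):
//   const fastify = require('fastify')();
//   fastify.route(routeOptions);
```

Keeping the options in a plain object means the handler and the hook can be exercised with simple stand-in `request` and `reply` objects before a server is started.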

The other option is a bit less complex and more reminiscent of Express.js. I used it for the benchmark because, on paper, the schema variant is even a bit slower, depending on its complexity.

So, don't be put off by the code shown above, here is our Fastify API:
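The original listing is not included in this text, so what follows is only a minimal sketch of how such a Fastify API could look. The function names `apiHandler`, `registerApi`, and `start` and the response format are assumptions. The object form of `listen` is the one used by current Fastify releases; older releases also accept the port as the first argument.

```javascript
// Minimal sketch of the Fastify variant of the benchmark API.
// The response format ("<name> <lastname>") is an assumption.
function apiHandler(request, reply) {
  const { name, lastname } = request.query;
  // Fastify sends strings as text/plain with a utf-8 charset by default.
  reply.send(`${name} ${lastname}`);
}

// Registers the route on a Fastify instance passed in by the caller.
function registerApi(fastify) {
  fastify.get('/api', apiHandler);
  return fastify;
}

// Starts the server on port 4000 (requires `npm install fastify`).
function start() {
  const fastify = registerApi(require('fastify')());
  return fastify.listen({ port: 4000 });
}
```

Calling `start()` would bring the server up on port 4000, the port used in the benchmark request.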

All three APIs respond to requests on the api-route. They get a first name and a last name as query parameters and return both in the response.

The request I will take for the benchmark is: localhost:4000/api?name=john&lastname=doe.

The Express.js API

Not so complicated at all. And here is the Express.js API, which looks almost the same:
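The original Express listing is likewise missing from this text. A minimal sketch, under the assumption that the two disabled headers are X-Powered-By and ETag and that the response is simply both names joined by a space; `registerApi` and `start` are hypothetical helper names.

```javascript
// Minimal sketch of the Express variant of the benchmark API.
// Which two headers were disabled is an assumption: X-Powered-By and ETag.
function registerApi(app) {
  app.disable('x-powered-by');
  app.disable('etag');

  app.get('/api', (req, res) => {
    const { name, lastname } = req.query;
    // Express defaults to text/html for strings, so set text/plain explicitly.
    res.set('Content-Type', 'text/plain');
    res.send(`${name} ${lastname}`);
  });
  return app;
}

// Starts the server on port 4000 (requires `npm install express`).
function start() {
  const app = registerApi(require('express')());
  return app.listen(4000);
}
```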

Comments: With app.disable I turned off two of the default response headers. Before sending the response, I set the Content-Type header to text/plain, which is the default in Fastify. With these settings, we get nearly identical headers in all three APIs.

Native/Bare Node.js API

Last but not least the bare Node.js server, completely without any framework.
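The original listing is not part of this text. The sketch below shows one way to write the same API with only Node's built-in `http` module. Parsing with `URL`, the response format, and the 404 fallback for other paths are assumptions; `handleRequest` and `start` are hypothetical names.

```javascript
const http = require('http');

// Minimal sketch of the framework-free variant of the benchmark API.
// The response format and the 404 fallback are assumptions.
function handleRequest(req, res) {
  // req.url is relative, so a base is needed to parse it.
  const url = new URL(req.url, 'http://localhost');

  // Route check, so that only /api is answered with the names.
  if (url.pathname === '/api') {
    const name = url.searchParams.get('name');
    const lastname = url.searchParams.get('lastname');
    res.writeHead(200, { 'Content-Type': 'text/plain; charset=utf-8' });
    res.end(`${name} ${lastname}`);
    return;
  }

  res.writeHead(404);
  res.end();
}

const server = http.createServer(handleRequest);

// Starts the server on port 4000.
function start() {
  return server.listen(4000);
}
```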

Comments: In bare Node.js, there is no res.send. I added the if-statement to make it fair. After all, the other two APIs check the route on every request, and without the if-statement this Node server would answer every request regardless of its path. For the content type I had to specify utf-8 manually, whereas Express and Fastify put it in the header automatically. This way, all headers have the same size.

The Benchmark

For the benchmark, I use the MacBook Pro 13 inch 2018, with the 4-core Intel i5 processor. I made sure that the same conditions prevailed in all tests. Each server was tested individually, all other request sources except the load-testing tool were switched off.

The Node.js version is 14.2.0. I simply executed the APIs with the node command, no PM2 or nodemon was used.

The load tester

In the last article, I used ApacheBench (ab). However, the HTTP version it uses causes problems with Fastify, and ab is very old anyway. Therefore I decided to use wrk.

On macOS, it can be installed with brew install wrk. On Debian-based Linux distributions, it can be installed with sudo apt-get install wrk.

This is the command I used for each API:

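wrk -t12 -c400 -d10s "http://localhost:4000/api?name=john&lastname=doe"

-t12 sets the number of threads to 12, -c400 the number of concurrent connections to 400, and -d10s the duration to 10 seconds. The URL is quoted so that the shell does not treat the & as its background operator and drop the lastname parameter.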

The results

Of course, I bombarded each API several times with the load tester. Here are the most representative results.

Express.js

Thread Stats Avg Stdev Max +/- Stdev

Latency 17.03ms 4.10ms 109.13ms 90.16%

Req/Sec 1.86k 353.95 2.89k 88.92%

222514 requests in 10.02s, 32.47MB read

Socket errors: connect 0, read 248, write 0, timeout 0

Requests/sec: 22207.75

Transfer/sec: 3.24MB

Bare/Native

Thread Stats Avg Stdev Max +/- Stdev

Latency 11.03ms 3.09ms 77.64ms 85.72%

Req/Sec 2.77k 542.65 4.38k 86.17%

331028 requests in 10.01s, 48.62MB read

Socket errors: connect 0, read 257, write 0, timeout 0

Requests/sec: 33067.32

Transfer/sec: 4.86MB

Fastify

Thread Stats Avg Stdev Max +/- Stdev

Latency 9.75ms 3.17ms 79.39ms 87.88%

Req/Sec 3.13k 729.47 11.94k 87.78%

375177 requests in 10.10s, 54.74MB read

Socket errors: connect 0, read 263, write 0, timeout 0

Requests/sec: 37147.37

Transfer/sec: 5.42MB

The results are clear — Fastify is faster than Express.js and even faster than Bare Node.js. Especially the latter has surprised me. But I also read it in other benchmarks, not least on the official site of Fastify. It seems to be the fastest web framework in the Node.js world.
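To put the gap into numbers, the short script below computes the relative throughput from the Requests/sec figures reported by wrk above. It is only a convenience calculation; the helper name `percentFaster` is made up for this sketch.

```javascript
// Requests/sec as reported by wrk in the runs above.
const requestsPerSec = {
  express: 22207.75,
  bare: 33067.32,
  fastify: 37147.37
};

// Returns how many percent more requests per second `a` handled than `b`.
function percentFaster(a, b) {
  return (requestsPerSec[a] / requestsPerSec[b] - 1) * 100;
}

console.log(`Fastify vs Express: +${percentFaster('fastify', 'express').toFixed(1)}%`);
console.log(`Fastify vs bare Node.js: +${percentFaster('fastify', 'bare').toFixed(1)}%`);
console.log(`Bare Node.js vs Express: +${percentFaster('bare', 'express').toFixed(1)}%`);
```

On these numbers, Fastify handled roughly two thirds more requests per second than Express and roughly an eighth more than bare Node.js.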

Summing up

In terms of performance, Fastify is superior.

To be fair, benchmarks that show such performance differences often describe very unrealistic situations. In reality, hardly any web server is bombarded with so many requests at once, and the hardware of the system also plays a decisive role.

Despite the superior performance of Fastify, I will primarily stay with Express. For me and many others, what Express offers is completely sufficient.

The entire ecosystem is better equipped and there are more resources for Express. This makes it especially beginner-friendly.

Finally, for many purposes the differences between the frameworks are small. For a single API or for serving static assets in particular, migrating to another framework is easy.
