Let’s analyze this with a simple problem. Assume that you have to call two external APIs, i.e., make two network calls. One takes around 4 seconds and the other takes around 3 seconds. Let’s look at the possible approaches:

Write sequential code. That will take around 7 seconds.

Consider the following sequential sample code. I have used the time.sleep function to simulate the network calls. In sequential code, API 1 takes around 4 seconds, and the thread executing it has to wait for it to complete. The kernel puts the thread into the sleep state, i.e., it waits for the I/O to complete, so we cannot use this thread for anything else in the meantime. Once the I/O is complete, the thread scheduler makes the thread runnable again. Our code then calls API 2, and the thread again goes to the sleep state. As a result, we get the final result in approximately 7 seconds.

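    import time

    def api1():
        print("call 1st API")
        time.sleep(4)  # simulates a blocking network call of about 4 secs
        print("1st API finish")

    def api2():
        print("call 2nd API")
        time.sleep(3)  # simulates a blocking network call of about 3 secs
        print("2nd API finish")

    start = time.time()
    api1()  # the thread sleeps here until the first call completes
    api2()  # only then does the second call start
    print(time.time()-start)

    """
    Output:
    call 1st API
    1st API finish
    call 2nd API
    2nd API finish
    7.007178068161011
    """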

You can write multithreaded code for it.

One thread for the 1st API and a second thread for the 2nd API. Multithreading on a single processor gives the illusion of running in parallel, but the processor is actually switching between threads based on the scheduling algorithm, or on priorities if you specify any in your code. Multithreading allows a single processor to run multiple threads concurrently by interleaving them.

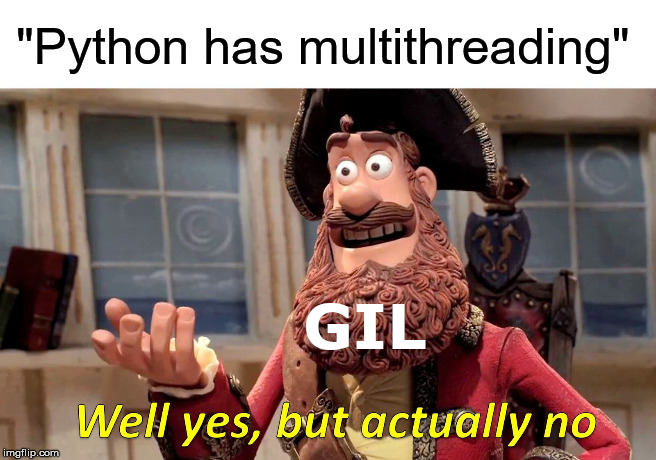

Python (CPython) has the Global Interpreter Lock (GIL), because of which only one thread can execute Python bytecode at a time within a process. So if your code is CPU bound (calculations using the CPU, etc.), you won’t get any benefit from multithreading in Python. But if your code is I/O bound (i.e., reading from disk, network calls, etc.), you can go for multithreading, because a thread releases the GIL while it waits for I/O. Refer to the following code samples and check the timings and print statements.

You can see that with the CPU-bound problem, multithreading gives no speedup: the threads take turns holding the GIL, so the total time is about what running the two loops one after the other would take. With the I/O-bound problem, both threads wait concurrently, so the total time is about that of the slower call (around 4 seconds).

I/O bound:

    import time
    import threading

    def api1():
        print("call 1st API")
        time.sleep(4)  # the GIL is released while the thread sleeps
        print("1st API finish")

    def api2():
        print("call 2nd API")
        time.sleep(3)
        print("2nd API finish")

    start = time.time()

    # create threads
    t1 = threading.Thread(target=api1, name='t1')
    t2 = threading.Thread(target=api2, name='t2')

    # start threads
    t1.start()
    t2.start()

    # wait until all threads have finished
    t1.join()
    t2.join()

    print(time.time()-start)

    """
    call 1st API
    call 2nd API
    2nd API finish
    1st API finish
    4.003810882568359
    """

CPU bound:

    import time
    import threading

    def api1():
        print("call 1st API")
        count = 0
        # pure-Python loop: the thread needs the GIL for every iteration
        while count < 100000000:
            count = count + 1
        print("1st API finish")

    def api2():
        print("call 2nd API")
        count = 0
        while count < 100000000:
            count = count + 1
        print("2nd API finish")

    start = time.time()

    # create threads
    t1 = threading.Thread(target=api1, name='t1')
    t2 = threading.Thread(target=api2, name='t2')

    # start threads
    t1.start()
    t2.start()

    # wait until all threads have finished
    t1.join()
    t2.join()

    print(time.time()-start)

    """
    call 1st API
    call 2nd API
    1st API finish
    2nd API finish
    11.712602853775024
    """

There is also the concept of asynchronous I/O, i.e., non-blocking I/O. This is the best solution for the given problem.

Here comes the concept of the event loop. The event loop does not use multiple threads; it runs on a single thread. (You can run multiple event loops on different threads.) Using a single thread avoids the overhead of creating and switching between threads, which can give better performance than multithreading for I/O-bound work, and async code in Python is also easier and cleaner to write than multithreaded code. In Python, you use the async/await syntax to write asynchronous code. For more details on how to write asynchronous code in Python, you can refer to this article.
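As a minimal sketch of the same two-API problem with async/await, the version below replaces time.sleep with asyncio.sleep, which here stands in for a non-blocking network call. The `delay` parameters, the return values, and the `main` helper are assumptions added for illustration; they are not part of the earlier samples.

```python
import asyncio
import time


async def api1(delay: float = 4) -> str:
    print("call 1st API")
    # await hands control back to the event loop while this "call" is pending
    await asyncio.sleep(delay)
    print("1st API finish")
    return "result 1"


async def api2(delay: float = 3) -> str:
    print("call 2nd API")
    await asyncio.sleep(delay)
    print("2nd API finish")
    return "result 2"


async def main(delay1: float = 4, delay2: float = 3):
    start = time.time()
    # gather runs both coroutines concurrently on one event loop (one thread)
    # and returns their results in the order the coroutines were passed in
    results = await asyncio.gather(api1(delay1), api2(delay2))
    elapsed = time.time() - start
    print(elapsed)
    return results, elapsed


if __name__ == "__main__":
    asyncio.run(main())
```

With the default delays, both calls start immediately, the 2nd API finishes before the 1st, and the total time is roughly 4 seconds rather than 7, all on a single thread.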

The event loop is like an infinite while loop that continuously checks (polls) whether data is ready to be read from the network sockets, because all the network I/O (API calls) is performed on non-blocking sockets.

Sockets: Every I/O action is done by writing to or reading from a file descriptor (fd). An fd is an integer associated with an open file, i.e., a handle that refers to an open file. Every process has a file descriptor table that maintains a mapping from fds to open files. So we can say that every I/O action is like reading or writing a file via an fd, and for the current context we can assume that sockets are like fds (on Unix-like systems sockets are implemented as fds, i.e., they also have an entry in the file descriptor table that points to an open file). There are various types of sockets, and they can be blocking or non-blocking.
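To make this concrete, here is a small sketch showing that pipes and sockets both expose integer file descriptors, and what a non-blocking socket does when no data is ready. The variable names are only illustrative, and the actual fd numbers will vary from run to run.

```python
import os
import socket

# os.pipe() returns two file descriptors, which are plain integers
read_fd, write_fd = os.pipe()
print(read_fd, write_fd)

# a connected pair of sockets; each one is backed by a file descriptor too
a, b = socket.socketpair()
sock_fd = a.fileno()
print(sock_fd)

# sockets are blocking by default; switch this one to non-blocking mode
was_blocking = a.getblocking()
a.setblocking(False)

would_block = False
try:
    # nothing has been sent yet, so a blocking recv would put the thread to sleep;
    # a non-blocking recv raises immediately instead
    a.recv(1024)
except BlockingIOError:
    would_block = True
    print("no data ready yet, recv returned immediately")

# release the descriptors
os.close(read_fd)
os.close(write_fd)
a.close()
b.close()
```

The BlockingIOError is what lets a single thread move on to other work instead of sleeping inside recv.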

You might think that an infinite loop will consume a lot of CPU, but that is not the case: the event loop checks for ready sockets using the best polling mechanism available on the given platform (epoll on Linux, kqueue on macOS, etc.), and it sleeps inside that call until a socket is ready.
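The idea can be sketched with Python's standard selectors module, whose DefaultSelector picks the most efficient mechanism available on the platform. This is a simplified illustration of one step of an event loop, not how asyncio is actually written; the `reader`/`writer` socket pair and the "api response" label are hypothetical stand-ins for a real network connection.

```python
import selectors
import socket

# DefaultSelector uses the most efficient implementation available
# on the current platform (for example epoll or kqueue)
sel = selectors.DefaultSelector()

reader, writer = socket.socketpair()
reader.setblocking(False)
# ask to be told when `reader` has data to read; `data` is an arbitrary label
sel.register(reader, selectors.EVENT_READ, data="api response")

# nothing has been sent yet: select() waits inside the OS (no busy loop)
# and returns an empty list once the timeout expires
idle_events = sel.select(timeout=0.1)
print(idle_events)

# simulate the remote side answering
writer.send(b"hello")

received = []
# this time select() returns as soon as the socket becomes readable
for key, mask in sel.select(timeout=1):
    received.append((key.data, key.fileobj.recv(1024)))
print(received)

sel.unregister(reader)
sel.close()
reader.close()
writer.close()
```

A real event loop repeats the select step forever and, for every ready socket, resumes the coroutine that was waiting on it.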

Whether multithreading or asyncio suits you best depends on your use case. I hope this article gave you a kick-start. Thanks for reading and happy exploring.