Abstract

This article examines the evolving landscape of online safety for minors, highlighting major concerns and technological solutions. Key issues include cyberbullying, online predators, privacy breaches, exposure to inappropriate content, and the rise of immersive digital experiences. The article explores how AI-powered content moderation, advanced parental controls, age verification systems, and privacy-enhancing technologies are being implemented to create a safer online environment for children. It also discusses emerging trends, regulatory pressures, and the need for a collaborative approach involving technology companies, parents, educators, and policymakers. These insights can inform future policy by underscoring the need for regulations that prioritize children's online safety while respecting privacy and freedom of speech, and they can guide technological development toward the same goal.

Introduction

The digital world continues to evolve at a rapid pace, offering children and teenagers unprecedented opportunities for learning, socializing, and entertainment. However, this digital landscape also presents significant risks to young users, and the urgency of addressing these concerns is amplified by the increasing number of minors using digital platforms for education, entertainment, and social interaction. This article aims to explore the major concerns regarding online safety for minors and discuss the technological solutions being developed and implemented to protect them in this ever-changing digital environment.

Literature Review

Recent studies and legislative efforts have highlighted growing concerns around children's online safety. The Kids Online Safety Act (KOSA), which gained significant support in 2024, emphasizes the need to address issues such as mental health disorders, addiction, sexual exploitation, and bullying that may arise from minors' use of online platforms.

The concept of "Safety by Design" has gained traction in recent years, emphasizing the importance of integrating safety considerations into the earliest stages of product development. This approach aligns with the growing regulatory pressure on companies to implement comprehensive safety measures. Real-world examples include Apple's Screen Time and Family Sharing, which allow parents to control app usage and monitor activity; YouTube Kids, which filters content specifically for children using algorithms and human review; Instagram's safety features for teens, which include default private accounts for users under 16 and restrictions on interactions with adults; and LEGO Life, a social network with safeguards such as avatars without personal identifiers and moderated content. These examples show a shift towards building child safety in from the outset, which matters for ethical responsibility, user trust, and regulatory compliance.

In the United States, states like California have implemented legislation imposing robust requirements on businesses offering online services likely to be accessed by children under 18. These laws require companies to assess and mitigate risks to children's privacy and safety in their platform designs (MultiState, 2024).

Technical Analysis

To address the major concerns regarding online safety for minors, several technological solutions are being developed and implemented:

AI-Powered Content Filtering and Moderation

Advanced artificial intelligence and machine learning algorithms are being used to detect and filter out inappropriate content, including explicit images, cyberbullying attempts, and child sexual abuse material (CSAM). These AI systems can operate at scale, scanning vast amounts of online content in real time to create safer digital environments for children.

Key statistics highlight the urgency of this issue. In 2023, 92% of webpages containing child sexual abuse imagery included "self-generated" material extorted from children, and 21% of the pages containing "self-generated" imagery (54,250 web pages) showed the most severe abuse (Category A). Across social media platforms, 'content of interest to predators' was the most commonly reported risk to children, accounting for 64.5% of reports.

Source: https://whatsthebigdata.com/cyberbullying-statistics-facts/

One proposed solution is to have AI score all messages on social media platforms. This approach would involve using artificial intelligence to analyze and rate the content of messages for potential risks or inappropriate material, providing an additional layer of protection for young users.
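The scoring idea above can be sketched in a few lines. This is a toy illustration only: the pattern names, regular expressions, weights, and the 0.5 review threshold are all assumptions made for the example, and a production system would rely on trained classifiers and human review rather than keyword rules.

```python
import re
from dataclasses import dataclass

# Hypothetical risk signals and weights, chosen only for illustration.
RISK_PATTERNS = {
    "personal_info_request": (
        re.compile(r"\b(address|phone number|what school)\b", re.I), 0.4),
    "secrecy": (
        re.compile(r"\b(don'?t tell|keep (this|it) (a )?secret)\b", re.I), 0.5),
    "image_request": (
        re.compile(r"\bsend (me )?(a )?(pic|photo|picture)s?\b", re.I), 0.5),
}


@dataclass
class ScoredMessage:
    text: str
    score: float        # 0.0 (no signal matched) to 1.0 (strong signal)
    matched: list[str]  # names of the patterns that fired


def score_message(text: str) -> ScoredMessage:
    """Add up the weights of every matching pattern, capped at 1.0."""
    matched = []
    score = 0.0
    for name, (pattern, weight) in RISK_PATTERNS.items():
        if pattern.search(text):
            matched.append(name)
            score += weight
    return ScoredMessage(text, min(score, 1.0), matched)


def needs_review(message: ScoredMessage, threshold: float = 0.5) -> bool:
    """Route a message to review when its score reaches the threshold."""
    return message.score >= threshold


if __name__ == "__main__":
    for text in ["See you at practice tomorrow",
                 "Don't tell your parents, send me a pic"]:
        result = score_message(text)
        print(result.score, result.matched, needs_review(result))
```

Returning the matched pattern names alongside the score keeps the result explainable, which helps when a flagged message is passed to a human moderator.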

Advanced Parental Control Tools

Sophisticated parental control software is being designed to allow parents to set screen time limits, block access to specific apps or websites, monitor online activity, and manage in-app purchases. These tools are becoming more intuitive and comprehensive, giving parents greater visibility and control over their children's digital experiences.

Recent data shows that 75% of parents of children aged 5--11 report checking the websites and apps their child uses. Similarly, 72% use parental controls to restrict time on devices, 49% look at call records or text messages, and 33% track their child's location through GPS apps or software.

The global parental control software market is projected to grow from $1.40 billion in 2024 to $3.54 billion by 2032, at a CAGR of 12.3% (Fortune Business Insights, 2024).
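As a quick consistency check on these figures, the sketch below recomputes the growth rate from the two market sizes. It assumes eight annual compounding periods between 2024 and 2032; the function names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` annually for `years` years."""
    return start_value * (1 + rate) ** years


years = 2032 - 2024                 # assumed number of compounding periods
implied_rate = cagr(1.40, 3.54, years)
projected_2032 = project(1.40, 0.123, years)

if __name__ == "__main__":
    print(f"Implied CAGR: {implied_rate:.1%}")
    print(f"Projected 2032 market size: ${projected_2032:.2f} billion")
```

Under that assumption, the implied rate rounds to the quoted 12.3%, and compounding $1.40 billion at 12.3% for eight years gives roughly $3.54 billion, so the three numbers agree with one another.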

Some platforms are developing features to create activity summaries for parents. These summaries would provide an overview of a child's online activities, allowing parents to stay informed without necessarily invading their child's privacy.
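One way to picture such a summary is an aggregate that reports totals per category without exposing individual messages or page contents. The sketch below is a hypothetical data model, not any platform's actual feature; the `ActivityEvent` fields and the `summarize` function are assumptions made for illustration.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class ActivityEvent:
    app: str
    category: str          # e.g. "education", "social", "games"
    minutes: int
    flagged: bool = False  # set by a separate safety check (assumed)


def summarize(events: list[ActivityEvent]) -> dict:
    """Aggregate events into totals; no message or page content is kept."""
    minutes_by_category: dict[str, int] = defaultdict(int)
    flagged_apps: set[str] = set()
    for event in events:
        minutes_by_category[event.category] += event.minutes
        if event.flagged:
            flagged_apps.add(event.app)
    return {
        "total_minutes": sum(minutes_by_category.values()),
        "minutes_by_category": dict(minutes_by_category),
        "flagged_apps": sorted(flagged_apps),
    }


if __name__ == "__main__":
    week = [
        ActivityEvent("MathQuest", "education", 40),
        ActivityEvent("ChatApp", "social", 25, flagged=True),
        ActivityEvent("ChatApp", "social", 15),
    ]
    print(summarize(week))
```

Because only counts and app names leave the aggregation step, a parent sees where time went and which apps raised a flag, while the child's conversations stay private.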

Age Verification and Age-Appropriate Design

Technological solutions for age verification and estimation are being implemented to ensure children only access age-appropriate content and services. This includes on-device age estimation tools, age gates, and verification systems for online platforms.
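The simplest of these mechanisms, an age gate, can be sketched as a date comparison. The example assumes a self-declared birth date, which is easy to falsify; that weakness is one reason verification and estimation systems exist alongside age gates. The function names and the minimum age of 13 are illustrative.

```python
from datetime import date
from typing import Optional


def age_on(birth_date: date, today: date) -> int:
    """Whole years completed between birth_date and today."""
    years = today.year - birth_date.year
    # Subtract one year if this year's birthday has not happened yet.
    if (today.month, today.day) < (birth_date.month, birth_date.day):
        years -= 1
    return years


def passes_age_gate(birth_date: date, minimum_age: int,
                    today: Optional[date] = None) -> bool:
    """Return True if the declared birth date meets the minimum age."""
    today = today or date.today()
    return age_on(birth_date, today) >= minimum_age


if __name__ == "__main__":
    declared = date(2012, 6, 15)
    print(passes_age_gate(declared, 13, today=date(2025, 6, 14)))
    print(passes_age_gate(declared, 13, today=date(2025, 6, 15)))
```

Passing `today` explicitly makes the check deterministic and easy to test around birthdays.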

Statistics from the Cyberbullying Research Center show that 53% of U.S. teens aged 13 to 17 report having had a smartphone by the age of 11, and that 87% of children in high-income countries have access to the internet at home. With access starting this early, age checks need to work for users well below the teenage years.

In the UK, the Online Safety Act requires online platforms to prioritize safety measures, provide compliance reports, and collaborate with Ofcom, the UK's communications regulator. Ofcom is introducing Children's Safety Codes of Practice, which provide detailed guidance to online platforms on their obligations to prevent harm to young users.

Privacy-Enhancing Technologies

Innovations in privacy-preserving technologies are being prioritized to protect children's privacy while enabling necessary safety measures. These include:

- Client-side scanning for CSAM detection

- Differential privacy techniques for data collection

- Secure multi-party computation for age verification
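To make the differential privacy item above concrete, the sketch below uses randomized response, a classic local differential privacy technique. It is one possible illustration, not a method the platforms discussed here are known to use. Each respondent reports the truth with probability `p_truth` and the opposite answer otherwise, so no single report reveals the true answer, yet the population rate can still be estimated. The survey question, sample size, and `p_truth = 0.75` are assumptions.

```python
import math
import random


def randomize(true_answer: bool, p_truth: float, rng: random.Random) -> bool:
    """Report the true answer with probability p_truth, else its opposite."""
    return true_answer if rng.random() < p_truth else not true_answer


def estimate_true_rate(reports: list[bool], p_truth: float) -> float:
    """Invert the noise: observed = p*rate + (1 - p)*(1 - rate)."""
    observed = sum(reports) / len(reports)
    return (observed - (1 - p_truth)) / (2 * p_truth - 1)


def epsilon(p_truth: float) -> float:
    """Privacy parameter of this scheme: ln(p / (1 - p))."""
    return math.log(p_truth / (1 - p_truth))


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical survey: 30% of 20,000 users truly answer "yes".
    truths = [i < 6000 for i in range(20000)]
    reports = [randomize(t, 0.75, rng) for t in truths]
    print(f"estimated rate: {estimate_true_rate(reports, 0.75):.3f}")
    print(f"epsilon: {epsilon(0.75):.3f}")
```

A lower `p_truth` gives a smaller epsilon and stronger privacy, at the cost of a noisier estimate, which is the trade-off any such data collection has to tune.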

Metaverse Safety Measures

As immersive 3D environments become more prevalent, new safety measures are being developed for these virtual worlds. This includes implementing parental controls that allow monitoring of interactions in these spaces and creating safe, supervised areas within metaverse platforms.

Taken together, these technological solutions aim to reduce the online risks that the following statistics illustrate:

- Nearly 90% of people aged between 18 and 34 have witnessed or received harmful content online at least once.

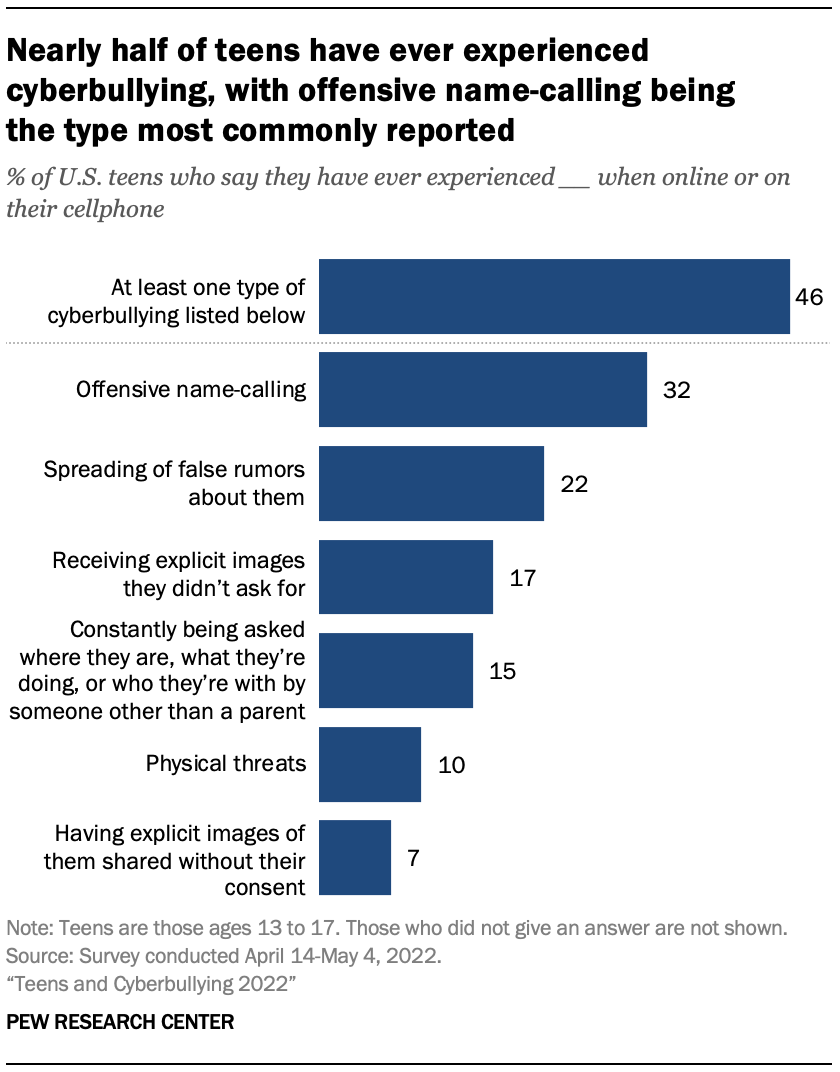

- 59% of US teens have personally experienced at least one of six types of abusive online behaviors.

- 302 million children a year are victims of the non-consensual taking and sharing of sexual images and videos, or of unwanted exposure to them.

- About 300 million children are estimated to have been subjected to online solicitation (UNICEF, 2024).

Future Trends

Looking ahead, several key trends are shaping the landscape of online child safety:

1. The rise of metaverse experiences, which require new safety measures for immersive 3D environments.

2. Increased sophistication and accessibility of deepfake and AI-generated content tools, posing new challenges for content moderation. Malicious actors could exploit these technologies to generate realistic false depictions of minors in compromising situations, leading to reputational damage, emotional distress, and even extortion attempts. Mitigating these risks requires a multi-pronged approach, including:

- Developing advanced detection technologies to identify and flag deepfakes.

- Educating children and young adults about the potential misuse of AI and deepfake technology.

- Implementing stricter regulations and penalties for the malicious creation and distribution of deepfakes involving minors.

- Providing support services for victims of deepfake abuse, including counseling and legal assistance.

3. Growing concerns about AI-facilitated sextortion and peer-to-peer misuse of AI tools. Malicious actors could exploit AI-powered chatbots or social media platforms to manipulate minors into sharing explicit content, which can then be used for blackmail or harassment. To address this, ongoing efforts are needed to:

- Develop AI-powered tools that can detect and flag potentially harmful interactions or requests for explicit content.

- Educate children about online grooming tactics and the risks associated with sharing personal information or explicit content online.

- Strengthen law enforcement capabilities to investigate and prosecute cases of online sextortion and AI-facilitated abuse.

4. The emergence of privacy-centric platforms that prioritize user confidentiality.

5. Increased regulatory pressure on companies to implement comprehensive safety measures.

6. The expansion of educational apps and platforms designed to teach children about online safety and digital citizenship.

Conclusion

As we navigate the complex digital landscape, protecting children online requires a multi-faceted approach combining technological innovation, education, parental guidance, and appropriate legislation. The implementation of advanced AI-powered content moderation, sophisticated parental controls, age-appropriate interfaces, and privacy-enhancing technologies is crucial in creating a safer online environment for children.

However, these technological solutions must be balanced with considerations of user privacy, free speech, and the need for children to develop digital literacy skills. The rise of immersive digital experiences, such as metaverse platforms, presents new challenges that require innovative safety measures and ongoing research.

The regulatory landscape is evolving rapidly, with countries and regions implementing new laws and guidelines to protect children online. Technology companies must stay ahead of these regulations, proactively implementing safety measures and collaborating with policymakers to create effective solutions.

Parents and educators play a critical role in this ecosystem, needing to stay informed about emerging technologies and safety tools. Open communication with children about online risks and responsible digital behavior remains essential.

As we look to the future, it's clear that protecting children online will require ongoing collaboration between technology companies, parents, educators, policymakers, and children themselves. By fostering this collaborative approach and continuing to innovate in the field of online safety, we can work towards creating a digital world that offers children the benefits of technology while minimizing the risks they face.

The challenges are significant, but so too are the opportunities to create a safer, more empowering online environment for the next generation. As we move forward, all stakeholders must remain committed to this goal, adapting and evolving our approaches as the digital landscape continues to change.

References

- Livingstone, S., & Smith, P. K. (2014). Annual research review: Harms experienced by child users of online and mobile technologies: The nature, prevalence and management of sexual and aggressive risks in the digital age. Journal of Child Psychology and Psychiatry, 55(6), 635--654.

- Jones, L. M., Mitchell, K. J., & Finkelhor, D. (2012). Online harassment in context: Trends from three youth internet safety surveys (2000, 2005, 2010). Psychology of Violence, 2(1), 53.

- Subrahmanyam, K., & Greenfield, P. M. (2008). Online communication and adolescent relationships. The Future of Children, 18(1), 119--146.

- O'Keeffe, G. S., & Clarke-Pearson, K. (2011). The impact of social media on children, adolescents, and families. Pediatrics, 127(4), 800--804.

- Hinduja, S., & Patchin, J. W. (2009). Bullying beyond the schoolyard: Preventing and responding to cyberbullying. Corwin Press.

- UNICEF. (2023). Child online safety: A guide for policymakers.

- European Commission. (2022). Safer Internet for Kids: A European framework for a better internet for children.

- Council of Europe. (2001). Convention on Cybercrime.

- National Center for Missing and Exploited Children (NCMEC). (2023). CyberTipline Report.

- Internet Watch Foundation (IWF). (2023). Annual Report.

- Pew Research Center. (2023). Teens, Social Media and Technology 2022.

- Common Sense Media. (2023). Social Media, Social Life: How Teens View Their Digital Lives.

About the author

Anurag Agrawal is an experienced Senior Technical Lead and Engineering Manager at Google LLC with a demonstrated history of guiding high-performing development teams to success. With over 12 years of industry experience, Anurag is an expert in cybersecurity, Fraud & Abuse prevention, AI, and Machine Learning. Anurag is committed to pushing the boundaries of technology while nurturing the next generation of tech leaders through mentorship and guidance.
