Introduction

Amazon S3 (Simple Storage Service) is a highly scalable, durable, and secure object storage service provided by Amazon Web Services (AWS). It is widely used for various purposes such as storing files, hosting static websites, and serving as a data lake for big data analytics. In this article, we will discuss how to use Python and the Boto3 library to upload multiple files to an S3 bucket.

Prerequisites

Before diving into the code, make sure you have the following:

- An AWS account and access to the AWS Management Console.

- A configured IAM user with credentials (an access key ID and secret access key) and permission to upload objects to your S3 bucket, in particular the `s3:PutObject` action.

- Python 3 and the Boto3 library installed on your system. You can install Boto3 with `pip install boto3`.
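The exact permissions depend on your setup, but for this script the essential one is `s3:PutObject` on the objects in the bucket. As a minimal sketch, the following builds such a policy as a Python dictionary and prints it as JSON so it can be attached to the IAM user. `build_upload_policy` and the bucket name are hypothetical, and your organization may require a broader or narrower policy.

```python
import json


def build_upload_policy(bucket_name):
    """Return a minimal IAM policy document that allows uploads to one bucket.

    s3:PutObject is granted on the objects in the bucket (the "/*" suffix),
    not on the bucket resource itself.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject"],
                "Resource": [f"arn:aws:s3:::{bucket_name}/*"],
            }
        ],
    }


if __name__ == "__main__":
    # "my-example-bucket" is a hypothetical bucket name; use your own.
    print(json.dumps(build_upload_policy("my-example-bucket"), indent=2))
```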

Code Explanation

Let's take a look at the following Python script that uploads multiple files to an S3 bucket:

```python
import boto3
import os

# Replace the following placeholders with your own values
aws_access_key_id = 'YOUR_AWS_ACCESS_KEY_ID'
aws_secret_access_key = 'YOUR_AWS_SECRET_ACCESS_KEY'
bucket_name = 'YOUR_BUCKET_NAME'
local_folder_path = 'LOCAL_FOLDER_PATH'


def upload_to_s3(local_file_path, bucket_name, s3_key):
    # Create an S3 client with the credentials defined above
    s3 = boto3.client(
        's3',
        aws_access_key_id=aws_access_key_id,
        aws_secret_access_key=aws_secret_access_key
    )

    try:
        # Open the file in binary mode and stream it to the bucket under s3_key
        with open(local_file_path, 'rb') as file:
            s3.upload_fileobj(file, bucket_name, s3_key)
        print(f"File {local_file_path} uploaded to {bucket_name}/{s3_key}.")
    except Exception as e:
        # Report the failure (missing file, access denied, etc.) and continue
        print(f"Error uploading file to S3: {e}")


# List of file names you want to upload
files_to_upload = ['file1.txt', 'file2.txt', 'file3.txt']

for file_name in files_to_upload:
    # Build the local path; the S3 key is simply the file name
    local_file_path = os.path.join(local_folder_path, file_name)
    s3_key = file_name
    upload_to_s3(local_file_path, bucket_name, s3_key)
```

Here's a breakdown of what the code does:

- Import the necessary libraries: `boto3` is the official AWS SDK for Python, and `os` is a built-in Python module for interacting with the operating system.

- Replace the placeholders with your own values: provide your AWS access key ID, your AWS secret access key, the name of the S3 bucket you want to upload the files to, and the path of the local folder that contains them.

- Define the `upload_to_s3` function: it takes the local path of the file to upload, the bucket name, and the S3 key (the destination path and file name within the bucket). On each call it creates an S3 client with the provided credentials and uploads the file with the `upload_fileobj` method, printing an error message instead of stopping if the upload fails.

- Create a list of file names to upload: in the `files_to_upload` list, specify the names of the files in the local folder that you want to upload.

- Loop over the list and call `upload_to_s3` for each file: for each entry in `files_to_upload`, the script builds the local file path with `os.path.join`, uses the file name as the S3 key, and calls `upload_to_s3` to upload the file to the bucket.

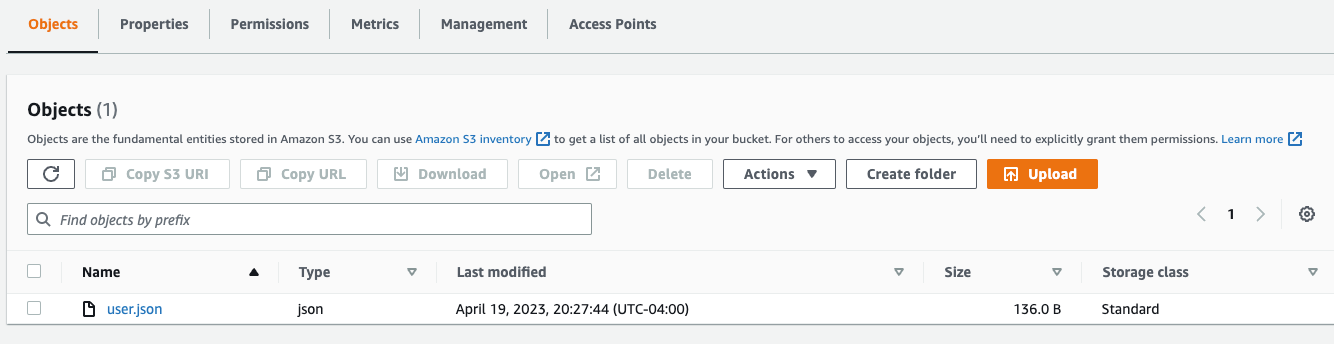

user.json uploaded to AWS S3

Conclusion

In this article, we explored a Python script that uses the Boto3 library to upload multiple files to an Amazon S3 bucket. The script provides a simple way to automate uploading files to your S3 storage. With a few modifications, you can customize it to upload files from different local folders, store the files under specific folders (key prefixes) within the S3 bucket, or apply additional options such as the object's access control or metadata during the upload.
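As a rough sketch of two of those modifications, the helpers below collect every file under a local folder into S3 keys with an optional prefix, and build the `ExtraArgs` dictionary that Boto3's `upload_file` and `upload_fileobj` accept for settings such as content type and user-defined metadata. Both function names and the `"backups/"` prefix are hypothetical choices for illustration.

```python
import mimetypes
import os


def collect_files(local_folder_path, prefix=""):
    """Return (local_path, s3_key) pairs for every file under local_folder_path.

    Keys mirror the folder structure relative to local_folder_path and always
    use "/" as the separator. The optional prefix (for example "backups/")
    places the objects under a "folder" in the bucket.
    """
    pairs = []
    for root, _dirs, files in os.walk(local_folder_path):
        for name in files:
            local_path = os.path.join(root, name)
            relative = os.path.relpath(local_path, local_folder_path)
            pairs.append((local_path, prefix + relative.replace(os.sep, "/")))
    # os.walk does not guarantee an order, so sort by key for predictable output
    return sorted(pairs, key=lambda pair: pair[1])


def build_extra_args(local_file_path, metadata=None):
    """Build an ExtraArgs dict for upload_file / upload_fileobj.

    ContentType is guessed from the file extension; Metadata is stored by S3
    as user-defined x-amz-meta-* headers on the object.
    """
    extra_args = {}
    content_type, _encoding = mimetypes.guess_type(local_file_path)
    if content_type is not None:
        extra_args["ContentType"] = content_type
    if metadata:
        extra_args["Metadata"] = dict(metadata)
    return extra_args
```

With a Boto3 client `s3`, each pair could then be uploaded with `s3.upload_file(local_path, bucket_name, s3_key, ExtraArgs=build_extra_args(local_path, {"uploaded-by": "script"}))`. `ACL` is also an accepted `ExtraArgs` key, but buckets that have ACLs disabled (the default for new buckets) reject most ACL settings, so check your bucket's Object Ownership setting first.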

Remember to always follow best practices when working with AWS credentials, such as using environment variables or AWS configuration files to securely store your credentials. Avoid hardcoding them directly into your script, especially if you plan to share it or use version control.
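One way to follow that advice is to leave the keys out of the script entirely and let Boto3's default credential chain find them (the `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables, the `~/.aws/credentials` file, or an attached IAM role). In this sketch only the non-secret settings are read from environment variables; `S3_BUCKET_NAME` and `LOCAL_FOLDER_PATH` are hypothetical variable names, and `make_s3_client` optionally selects a named profile from your AWS configuration files.

```python
import os


def load_settings(environ=os.environ):
    """Read the bucket name and local folder from environment variables.

    S3_BUCKET_NAME and LOCAL_FOLDER_PATH are hypothetical names for this
    sketch. AWS credentials are deliberately not read here; Boto3 locates
    them itself when the client is created.
    """
    required = ("S3_BUCKET_NAME", "LOCAL_FOLDER_PATH")
    missing = [name for name in required if not environ.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    return environ["S3_BUCKET_NAME"], environ["LOCAL_FOLDER_PATH"]


def make_s3_client(profile_name=None):
    """Create an S3 client without hardcoded keys.

    profile_name selects a named profile from the AWS config files;
    None uses Boto3's default credential chain.
    """
    import boto3  # imported lazily so load_settings works without Boto3

    session = boto3.Session(profile_name=profile_name)
    return session.client("s3")
```

Running `aws configure` from the AWS CLI writes your keys to `~/.aws/credentials`, after which `make_s3_client()` needs no arguments.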

By leveraging the power of Python and Boto3, you can easily manage your files in Amazon S3 and integrate the script into your data pipeline or application workflow.