You probably know that a ChatGPT Plus membership is 20 bucks a month. This is easy to understand.

But there are other ways to use ChatGPT too. If you are a developer, you can use its API and "pay for what you use".

The confusing part is that it is hard to figure out how much the API costs. The pricing is quite cryptic, and I believe that is on purpose. But don't worry, I'm going to shed light on how much this actually costs, in a way a human can understand.

Hint... It is a lot!

Before I dive into the pricing, here is an analogy that shows how weird it is:

Imagine going to a restaurant that charged you 2 cents for every 1 million molecules of food you ate. Would that be expensive or cheap? It is very hard to have any clue what a plate of food costs, and that is what you actually want to know!

Ok, now to the ChatGPT API Pricing:

The pricing depends on a few factors:

- Which model you use (GPT-3.5, GPT-4, GPT-4 Turbo, etc.)

- How many "tokens" you use

- How those tokens split between "input tokens" and "output tokens", which are usually priced differently

What is a "token"?

A token is the smallest unit of text that is processed by the model. A long common word can be one token. But even something as simple as an exclamation mark can be one token.

A good rule of thumb is that 1000 tokens equals approximately 750 words.
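If you'd rather let code do the conversion, here is a minimal Python sketch of that rule of thumb. The function names are my own, and the 750-words-per-1000-tokens ratio is only an approximation, so treat the result as an estimate. For exact counts you would need to run your text through a real tokenizer.

```python
# Rule of thumb: 1000 tokens is roughly 750 English words.
WORDS_PER_1000_TOKENS = 750


def words_to_tokens(words: int) -> int:
    """Estimate how many tokens a given number of words takes up."""
    return round(words * 1000 / WORDS_PER_1000_TOKENS)


def tokens_to_words(tokens: int) -> int:
    """Estimate how many words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_1000_TOKENS / 1000)


print(words_to_tokens(750))   # 1000
print(tokens_to_words(4000))  # 3000
```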

What is an "input token" and an "output token"?

Input tokens are counted for whatever you send to the model. In the ChatGPT app, that is what you type into the query box. With the API, it is the text you send in your request.

Output tokens are counted whenever ChatGPT responds to you or answers you.

Basically, if you ask ChatGPT a question, whatever you type in is counted as "input tokens", and whatever answer ChatGPT gives you is counted as "output tokens".

Ok. That is all you really need to know about terminology. Now I'm going to give you some concrete examples for how much this actually costs.

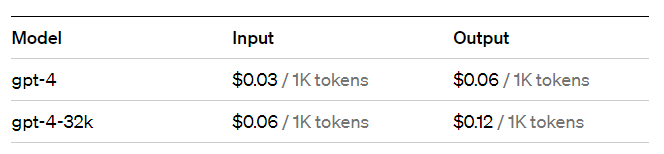

This is a screenshot from OpenAI's website. It shows their ChatGPT-4 model API pricing.

Here is a fun question:

How much would it cost to get ChatGPT to write a 300 page book using their API?

Let's suppose we want to use the gpt-4-32k model, their most expensive model.

If we use average-sized text, that would be about 400 words per page.

400 words times 300 pages = 120 000 total words in the book.

Number of tokens = 120 000 divided by 750 times 1000 = 160 000 total tokens.

Ok, so this book takes 160 000 tokens. Since ChatGPT is writing the book, these count as "output tokens".

From the pricing chart above, we can see that output tokens for this model cost 12 cents per 1000 tokens.

160 000 tokens is 160 lots of 1000 tokens, so the total cost for the book is: $0.12 × 160 = $19.20
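Here is the same arithmetic as a small Python sketch, so you can plug in your own numbers. The function name and parameters are made up for illustration. The 750-words-per-1000-tokens ratio and the $0.12 price are the ones used above.

```python
WORDS_PER_1000_TOKENS = 750   # rule of thumb, not an exact figure
OUTPUT_PRICE_PER_1K = 0.12    # USD per 1000 output tokens (gpt-4-32k)


def book_cost(pages: int, words_per_page: int, price_per_1k: float) -> float:
    """Estimate the cost in USD of generating a book in one pass."""
    words = pages * words_per_page
    tokens = words / WORDS_PER_1000_TOKENS * 1000
    return tokens / 1000 * price_per_1k


cost = book_cost(pages=300, words_per_page=400, price_per_1k=OUTPUT_PRICE_PER_1K)
print(f"${cost:.2f}")  # $19.20
```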

What??? This can't be right!

I've checked the math plenty of times. It is right. It would cost 19 dollars and 20 cents (USD) to get ChatGPT to write one 300 page book. Unbelievable. You can buy a digital book for less than that.

The crazy part is that it would realistically cost a lot more than that to make one. The way you would get ChatGPT to write a book would be with a lot of prompting back and forth. You would ask for one page, and then you would ask ChatGPT to improve the page, and make outlines, and a lot of other things. Also, this assumes no images in the book either.

The $19.20 cost is just assuming you didn't change a thing, and you used the very first words that ChatGPT wrote, with no editing whatsoever.
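To get a feel for how quickly revisions add up, here is a rough Python sketch. It rests on assumptions of mine, not on anything OpenAI publishes as a workflow: every revision round sends the whole previous draft back in as input and gets a full rewrite back as output, and the instructions you type are small enough to ignore. The input price of $0.06 per 1000 tokens is also an assumption, so check it against the pricing chart before trusting the result.

```python
BOOK_TOKENS = 160_000        # book size in tokens, from the estimate above
OUTPUT_PRICE_PER_1K = 0.12   # USD per 1000 output tokens, as used above
INPUT_PRICE_PER_1K = 0.06    # USD per 1000 input tokens (ASSUMPTION)


def cost_with_revisions(book_tokens: int, revision_rounds: int) -> float:
    """Estimate the cost in USD of a first draft plus full-rewrite revisions."""
    # One first draft, plus one complete rewrite per revision round.
    output_tokens = book_tokens * (1 + revision_rounds)
    # Each revision round feeds the previous draft back in as input.
    input_tokens = book_tokens * revision_rounds
    return (input_tokens / 1000 * INPUT_PRICE_PER_1K
            + output_tokens / 1000 * OUTPUT_PRICE_PER_1K)


for rounds in (0, 1, 3):
    print(f"{rounds} revision rounds: ${cost_with_revisions(BOOK_TOKENS, rounds):.2f}")
```

Under these assumptions, zero rounds gives the $19.20 from before, one round gives $48.00, and three rounds give $105.60.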

Of course if you use a cheaper model, it is cheaper. Also, you often wouldn't ask ChatGPT to perform that large a task at once. But still, the price for this is staggeringly high. I would have guessed the entire book would cost 10 cents or less, even with the expensive model.

Ok, what if you got ChatGPT to make this book using the ChatGPT 3.5 model?

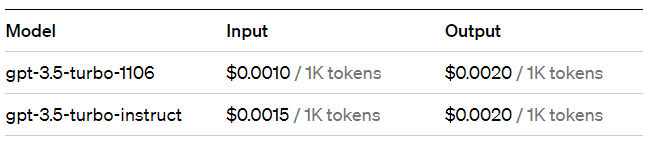

Here is the pricing for ChatGPT 3.5 API:

It is cheaper than the expensive ChatGPT 4 model by a factor of 60!

$0.12 divided by $0.002 = 60

So using this model, the book would cost $19.20 divided by 60. That equals $0.32, or 32 cents.

Ok, what about the same 300 page book with GPT-4 Turbo?

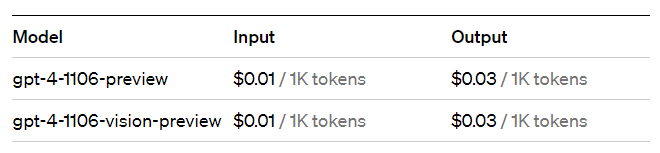

Here is the GPT-4 Turbo pricing:

So the GPT-4 Turbo price would be a quarter of the price of the most expensive model. $19.20 divided by 4 works out to $4.80.

That's quite a bit cheaper than the $19.20, but this is still expensive.
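To see the three models side by side, here is a short Python sketch that reruns the book estimate for each one. The dictionary keys are just labels I picked, not official API model identifiers. The GPT-4 Turbo price of $0.03 per 1000 output tokens is derived from the "quarter of $0.12" figure above.

```python
BOOK_TOKENS = 160_000  # the 300 page book from the earlier estimate

# USD per 1000 output tokens; keys are display labels, not API model names.
OUTPUT_PRICE_PER_1K = {
    "GPT-4 32k": 0.12,
    "GPT-3.5": 0.002,
    "GPT-4 Turbo": 0.03,  # a quarter of $0.12
}


def book_cost(price_per_1k: float, tokens: int = BOOK_TOKENS) -> float:
    """Cost in USD of generating `tokens` output tokens at the given price."""
    return tokens / 1000 * price_per_1k


for model, price in OUTPUT_PRICE_PER_1K.items():
    print(f"{model:<12} ${book_cost(price):.2f}")
```

This prints $19.20, $0.32 and $4.80, matching the numbers worked out by hand.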

Imagine if you were trying to build a business based on these models. You'd have to charge your customers a fortune if you allowed them heavy usage.

How about image use? How much does it cost to use Dall-e to generate images through the API?

It is expensive too!

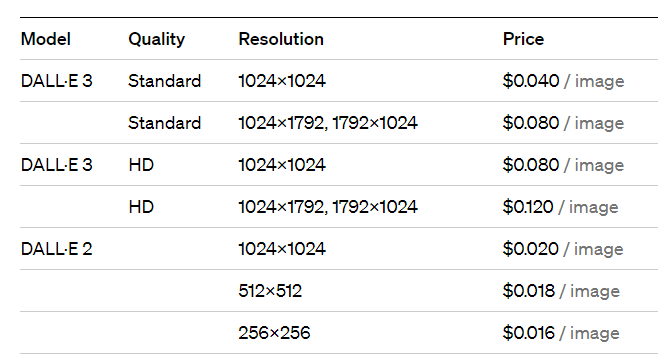

Here is the pricing chart:

For the high res Dall-e 3 model, it costs 8 cents per image.

So it is $8.00 to make 100 images. 8 bucks! That is really expensive. If you've ever tried to generate images, you will find that you really need to use a lot of prompts to get one that you like.

If you used the cheapest image generator they have, 100 images would cost $1.60.
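Here is a quick Python sketch of the image math, including the "lots of prompts per good image" problem. The `attempts_per_keeper` parameter is a hypothetical knob of mine: it stands for how many images you generate, on average, before you get one you like. The per-image prices are the two used above ($0.08, and $1.60 / 100 = $0.016).

```python
# USD per generated image; keys are labels for this example only.
PRICE_PER_IMAGE = {
    "high-res Dall-e 3": 0.08,
    "cheapest option": 0.016,
}


def image_cost(keepers: int, price_per_image: float,
               attempts_per_keeper: int = 1) -> float:
    """Cost in USD to end up with `keepers` images you actually want to use."""
    return keepers * attempts_per_keeper * price_per_image


print(f"${image_cost(100, PRICE_PER_IMAGE['high-res Dall-e 3']):.2f}")  # $8.00
print(f"${image_cost(100, PRICE_PER_IMAGE['cheapest option']):.2f}")    # $1.60
# Hypothetical: 5 attempts for every image you keep.
print(f"${image_cost(100, PRICE_PER_IMAGE['high-res Dall-e 3'], 5):.2f}")  # $40.00
```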

As we can see, it is extremely expensive to use the API, especially if you are planning to use it a lot. Developers really need to be careful as to what business models they can build with this current price structure.

Hopefully this concrete example was a real eye opener, and it gave you something tangible to think about in terms of how much the API actually costs to use.

And hopefully the prices of these models will come down a lot in the near future. Knowing the computer industry, they likely will.