Data is the new gold. Businesses and individuals use data to streamline processes and make informed decisions, and the internet is a gold mine of it. However, much of this data is buried in layers of HTML code, which can make it difficult to obtain the data you need, especially when there's no API available.

Web scraping is a method of getting data from the internet without an API. In this article, you will learn what web scraping is, what it is used for, and how proxies can help.

What is web scraping?

Web scraping is the automated process of extracting publicly available data from targeted websites. It is a form of copying in which specific data is gathered and copied by automated scripts and bots.

Web scraping involves three main steps:

- Retrieve content from the website: the web scraper makes HTTP requests to your target website. The data returned is usually in HTML format.

- Extract the data: parse the HTML content to extract the specific data you need.

- Store the parsed data: store the parsed data in a database, a spreadsheet, or a CSV or JSON file for future use.
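To make the three steps concrete, here is a minimal sketch using only Python's standard library. It assumes a hypothetical product page where each product name sits in an `<h2 class="product-name">` element; the `fetch_html`, `ProductNameParser`, `to_csv`, and `to_json` names and the `example-scraper/0.1` user agent are illustrative, not part of any particular tool. The usage at the bottom parses an inline HTML sample so it runs without network access.

```python
import csv
import io
import json
import urllib.request
from html.parser import HTMLParser
from typing import List


def fetch_html(url: str, timeout: float = 10.0) -> str:
    """Step 1: retrieve the page's HTML with an HTTP GET request."""
    request = urllib.request.Request(url, headers={"User-Agent": "example-scraper/0.1"})
    with urllib.request.urlopen(request, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset)


class ProductNameParser(HTMLParser):
    """Step 2: collect the text of each <h2 class="product-name"> element (hypothetical markup)."""

    def __init__(self) -> None:
        super().__init__()
        self.names: List[str] = []
        self._inside = False

    def handle_starttag(self, tag, attrs):
        if tag == "h2" and ("class", "product-name") in attrs:
            self._inside = True
            self.names.append("")

    def handle_endtag(self, tag):
        if tag == "h2" and self._inside:
            self._inside = False
            self.names[-1] = self.names[-1].strip()

    def handle_data(self, data):
        # Text inside nested tags such as <b> is appended to the current name.
        if self._inside:
            self.names[-1] += data


def to_csv(names: List[str]) -> str:
    """Step 3a: serialize the records as CSV text (write it to a .csv file to persist it)."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["name"])
    writer.writerows([name] for name in names)
    return buffer.getvalue()


def to_json(names: List[str]) -> str:
    """Step 3b: serialize the same records as JSON."""
    return json.dumps([{"name": name} for name in names], indent=2)


# Offline usage: parse an inline sample instead of calling fetch_html() on a live site.
sample_html = '<h2 class="product-name">Blue Mug</h2><p>$9</p><h2 class="product-name">Red <b>Kettle</b></h2>'
parser = ProductNameParser()
parser.feed(sample_html)
parser.close()
print(to_csv(parser.names))
```

For a real page you would replace `sample_html` with `fetch_html("https://…")` and adapt the parser to the site's actual markup.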


Is Web Scraping Legal?

Scraping publicly available data is generally legal. However, it's important to comply with other laws and regulations that apply to the source of the data and the data itself.

Here are some considerations when scraping data from websites:

- Web scrapers should comply with the Terms of Service (ToS) of the target website. Some websites prohibit automated data collection.

- Web scrapers should not infringe copyright on creative works, such as designs, layouts, articles, and videos.

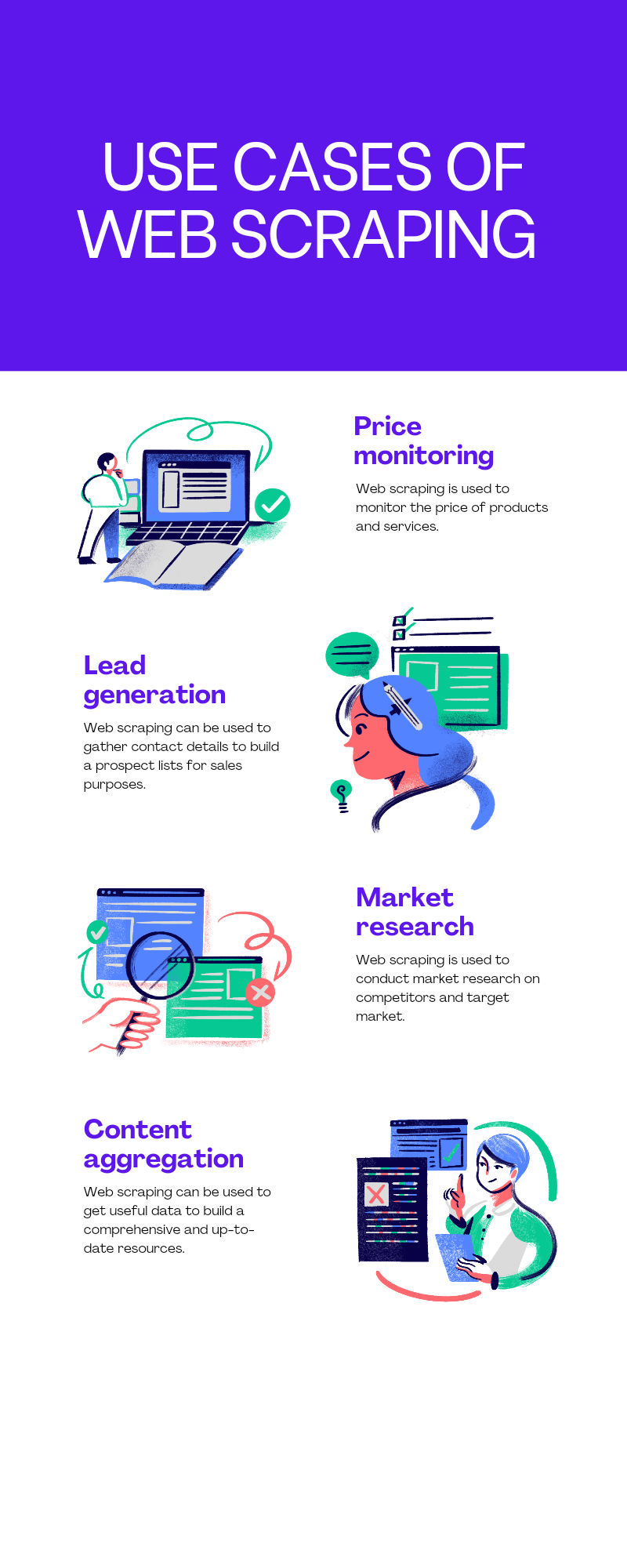

Use cases of web scraping

Companies use web scraping for various purposes including:

- Market research: Businesses use web scrapers to gather data on competitors, market trends, and consumer sentiment.

- Price monitoring: Product-based businesses use web scrapers to gather data on product prices from their competitors in order to stay competitive.

- Lead generation: Web scraping can be used to collect contact details and business-related information to build prospect lists for marketing and sales purposes.

- Content aggregation: Web scrapers can be used to collect and curate content from various sources to create comprehensive and up-to-date resources.

Challenges faced by web scrapers

Scraping the web for data is not always a smooth process, as some websites don't want to be scraped. These websites employ tactics that make web scraping challenging, including:

- IP blocking: Your IP can be banned or blocked if a website detects unusual and repetitive activity from your IP. IP blocking can slow down or halt web scraping activities, which can lead to less efficient results.

- Rate limiting: Rate limiting is the practice of limiting the number of requests a particular client can make in a given period of time. Many websites use rate limiting to prevent server overload and to ensure fair access for all visitors. Rate limiting can hurt web scraping activities, as your web scraper has to make fewer requests to stay undetected.

- Geographic restrictions: Some web content is only accessible within a particular geographic area. If your web scraper's IP address is not located in that area, your web scraper cannot access the geo-restricted content.

- Dynamic content: Traditional web scrapers are designed to extract data from static HTML. Modern websites use JavaScript and AJAX to render content dynamically, which makes it difficult for these web scrapers to extract the data they need.

- Required login: Some of the data you wish to extract is only accessible to authenticated users. This poses a problem, as your web scraper has to log in to access the content. Once logged in, the website may use cookies or tokens to track your web scraper's session. That means your web scraper has to store and send the required cookies or tokens to stay authenticated.

- CAPTCHAs: CAPTCHAs are security measures used to differentiate between humans and bots. They use challenges that are easy for humans to solve but hard for bots. The challenges may be puzzles, image recognition tasks, or analysis of user behavior.
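One common way to cope with rate limiting is to slow down when the server pushes back. The sketch below assumes the target site signals rate limiting with HTTP 429 or 503 responses; it retries a request with exponential backoff and honors a numeric `Retry-After` header when one is sent. `fetch_with_backoff`, `backoff_delay`, and the `fetch` callable are hypothetical helpers, and the base delay, cap, and attempt count are placeholder values.

```python
import time
import urllib.error
from typing import Callable

# Status codes treated as "slow down and retry"; this set is an assumption, adjust per site.
RETRYABLE_STATUS = {429, 503}


def backoff_delay(attempt: int, base: float = 1.0, cap: float = 60.0) -> float:
    """Exponential backoff: wait base * 2**attempt seconds, never more than `cap`."""
    return min(cap, base * (2 ** attempt))


def fetch_with_backoff(
    fetch: Callable[[], str],
    max_attempts: int = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call `fetch` until it succeeds, backing off on rate-limit responses."""
    for attempt in range(max_attempts):
        try:
            return fetch()
        except urllib.error.HTTPError as err:
            # Give up on non-retryable errors or when attempts are exhausted.
            if err.code not in RETRYABLE_STATUS or attempt == max_attempts - 1:
                raise
            # Honor a numeric Retry-After header if the server sent one.
            retry_after = err.headers.get("Retry-After") if err.headers is not None else None
            if retry_after and retry_after.strip().isdigit():
                delay = float(retry_after)
            else:
                delay = backoff_delay(attempt)
            sleep(delay)
    raise ValueError("max_attempts must be at least 1")
```

Passing `sleep` as a parameter keeps the helper easy to test; in a real scraper you would leave it as `time.sleep` and have `fetch` wrap an actual request, for example one made with `urllib.request.urlopen`.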

Proxies can help web scrapers overcome many of these challenges.

Proxies as a solution to these challenges

Proxies act as intermediaries between your web scraper and the website you are targeting. Instead of directly accessing the website, proxies access the web on your behalf.

Types of proxies

Proxies come in many types, but here are some of the most common:

- Residential proxies

- Data center proxies

- Mobile proxies

- ISP proxies

- Shared proxies

- Private proxies

How do proxies work?

A proxy acts as the middleman between your web scraper and the target website. When your web scraper makes a request, the request passes through the proxy server and then on to the target website. The target website sees the request as coming from the proxy server, which hides your real IP address.
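In Python's standard library, routing requests through a proxy comes down to installing a `urllib.request.ProxyHandler`. The sketch assumes a hypothetical proxy at `proxy.example.com:8080` with placeholder credentials; your provider's host, port, and authentication details will differ, and `build_proxied_opener` is an illustrative helper name.

```python
import urllib.request

# Hypothetical proxy endpoint with placeholder credentials; use your provider's details.
PROXY_URL = "http://user:password@proxy.example.com:8080"


def build_proxied_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends both HTTP and HTTPS requests through `proxy_url`."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


opener = build_proxied_opener(PROXY_URL)
# A live request would look like this; the target site sees the proxy's IP, not yours:
# html = opener.open("https://example.com", timeout=10).read()
```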

Benefits of using proxies

Proxies are crucial to effective web scraping and provide several benefits:

- Proxies provide IP rotation: Sending too many requests to a website from a single IP address increases the chances of getting your IP address banned from accessing the website. Proxies rotate your IP address so that your requests seem to be coming from different IP addresses. This reduces the chance of getting banned by a website.

- Proxies provide anonymity: Accessing the internet exposes your IP address and identity. Proxies mask your IP address, helping you maintain privacy and security and avoid detection.

- Proxies enable you to scrape data without geo-restrictions: Some web content is restricted to visitors from specific countries or regions. Proxies with IP addresses in those locations help you bypass these geo-restrictions and scrape data from geo-restricted websites.
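IP rotation can be as simple as cycling through a pool of proxy endpoints so that consecutive requests leave from different addresses. This round-robin sketch assumes a hypothetical list of three proxies; `RotatingFetcher` is an illustrative class, not a library API.

```python
import itertools
import urllib.request
from typing import Iterable, Tuple

# Hypothetical proxy endpoints; replace with the ones your provider gives you.
PROXIES = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
    "http://proxy3.example.com:8080",
]


class RotatingFetcher:
    """Round-robin IP rotation: each request goes out through the next proxy in the pool."""

    def __init__(self, proxies: Iterable[str]) -> None:
        self._cycle = itertools.cycle(list(proxies))

    def next_opener(self) -> Tuple[str, urllib.request.OpenerDirector]:
        """Pick the next proxy and build an opener that routes through it."""
        proxy = next(self._cycle)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return proxy, urllib.request.build_opener(handler)

    def fetch(self, url: str, timeout: float = 10.0) -> bytes:
        """Fetch `url` through the next proxy; the site sees that proxy's IP address."""
        _proxy, opener = self.next_opener()
        with opener.open(url, timeout=timeout) as response:
            return response.read()


fetcher = RotatingFetcher(PROXIES)
# for url in urls:
#     html = fetcher.fetch(url)  # requests alternate proxy1, proxy2, proxy3, proxy1, ...
```

Round-robin is the simplest rotation policy; picking proxies at random or per target domain are common alternatives.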

Bright Data is a one-stop proxy solution for your web scraping needs. It provides IP rotation, CAPTCHA solving, and anonymity, among other features, to increase the efficiency of your web scraping activities. With Bright Data, your web scraper can access pages behind login walls without being labeled as a bot.

Web scraping best practices

When scraping websites, there are some best practices you should follow.

- Don't overburden the website: When scraping websites, make sure you are not overburdening the web server with too many requests. You can achieve this by limiting the number of requests your web scraper makes to a website.

- Don't violate copyright: When scraping websites, be careful not to scrape any copyrighted data.

- Check the robots.txt file: Before scraping, check the robots.txt file of the website to understand the restrictions and guidelines for crawlers.

- Use robust scraping tools: Modern websites are dynamic and JavaScript-heavy. This makes it hard for web scrapers to extract data from the websites. Robust web scraping tools help to scrape dynamic and JavaScript-heavy websites.
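Python's `urllib.robotparser` module can check robots.txt rules and read a `Crawl-delay` directive, which also helps you avoid overburdening the server. The sketch parses an inline, made-up robots.txt so it runs offline; against a live site you would call `set_url()` and `read()` instead. `polite_fetch_all`, `polite_delay`, and the `example-scraper` user agent are hypothetical, and the one-second default delay is an arbitrary choice.

```python
import time
import urllib.robotparser
from typing import Callable, Dict, Iterable

# Made-up robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""

USER_AGENT = "example-scraper"  # hypothetical user agent string


def make_robot_parser(text: str) -> urllib.robotparser.RobotFileParser:
    """Build a parser from robots.txt text (use set_url() + read() for a live site)."""
    rp = urllib.robotparser.RobotFileParser()
    rp.parse(text.splitlines())
    return rp


def polite_delay(rp: urllib.robotparser.RobotFileParser, user_agent: str, default: float = 1.0) -> float:
    """Use the site's Crawl-delay if it declares one, otherwise a default pause."""
    delay = rp.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


def polite_fetch_all(
    urls: Iterable[str],
    fetch: Callable[[str], str],
    rp: urllib.robotparser.RobotFileParser,
    user_agent: str = USER_AGENT,
    sleep: Callable[[float], None] = time.sleep,
) -> Dict[str, str]:
    """Fetch only URLs that robots.txt allows, pausing between requests."""
    results = {}
    delay = polite_delay(rp, user_agent)
    for url in urls:
        if not rp.can_fetch(user_agent, url):
            continue  # skip paths that robots.txt disallows
        results[url] = fetch(url)
        sleep(delay)
    return results


rp = make_robot_parser(ROBOTS_TXT)
```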

Tips for effective management of proxies

Effective management of proxies makes for a great web scraping experience. The following are 5 tips for managing proxies when scraping the web:

- Monitor Proxy Health: Regularly check your proxies for uptime, speed, and responsiveness. Rotate out dead or slow proxies and remove those flagged for suspicious activity. Consider using proxy monitoring tools for automated checks.

- Use Residential Proxies: Opt for residential proxies to mimic real user traffic and reduce the chance of being detected.

- Rotate IP Addresses: Regularly switch between IP addresses to avoid detection and potential blocks from websites.

- Leverage Session Control: Maintain sticky sessions when needed, especially for websites that require logins or cookies. This avoids frequent re-authentication and ensures a consistent scraping experience.

- Utilize Proxy Management Tools: Invest in dedicated proxy management tools to automate tasks like pool switching, rotation, and health checks. These tools can significantly simplify your scraping workflow and save time.
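Dedicated tools handle this at scale, but the core ideas behind these tips fit in a small class. This hypothetical `ProxyPool` uses consecutive-failure counts as a simple stand-in for health checks, rotates round-robin among the proxies that are still healthy, and pins a proxy to a session ID for sticky sessions. The failure threshold and the proxy names are assumptions.

```python
from typing import Dict, List, Optional


class ProxyPool:
    """Hypothetical in-memory proxy manager: health tracking, rotation, and sticky sessions."""

    def __init__(self, proxies: List[str], max_failures: int = 3) -> None:
        self.proxies = list(proxies)
        self.max_failures = max_failures
        self.failures: Dict[str, int] = {p: 0 for p in self.proxies}
        self.sessions: Dict[str, str] = {}  # session id -> pinned proxy
        self._cursor = 0

    def healthy(self) -> List[str]:
        """Proxies whose consecutive failures are still below the threshold."""
        return [p for p in self.proxies if self.failures[p] < self.max_failures]

    def get(self, session_id: Optional[str] = None) -> str:
        """Return a proxy; reuse the pinned one for a sticky session while it stays healthy."""
        alive = self.healthy()
        if not alive:
            raise RuntimeError("no healthy proxies left in the pool")
        if session_id is not None and self.sessions.get(session_id) in alive:
            return self.sessions[session_id]
        proxy = alive[self._cursor % len(alive)]  # round-robin over healthy proxies
        self._cursor += 1
        if session_id is not None:
            self.sessions[session_id] = proxy
        return proxy

    def report_success(self, proxy: str) -> None:
        self.failures[proxy] = 0  # reset the consecutive-failure count

    def report_failure(self, proxy: str) -> None:
        """Count a timeout, ban page, or CAPTCHA; the proxy is retired at max_failures."""
        self.failures[proxy] += 1
```

Call `report_failure()` when a request through a proxy times out or returns a block page, and `report_success()` otherwise; a sticky session is reassigned automatically if its proxy is retired.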

Conclusion

In conclusion, web scraping is the automated process of collecting data from websites. Web scraping involves fetching the HTML code of a website, parsing the HTML code and storing the parsed data.

When scraping websites, your web scraper can face different challenges, ranging from IP blocking and rate limiting to dynamic web content. Proxy service providers such as Bright Data can help mitigate these challenges by providing IP rotation and headless browsers. Web scraping has many applications, including price monitoring, data analysis, and market research.

However, it is prudent to comply with the Terms of Service of the website you are scraping to avoid being flagged. If you like this article, follow me for more content like this.