Understanding why K-Nearest Neighbors is called a “lazy learner”

K-Nearest Neighbors (KNN) is one of the simplest machine learning algorithms. It is very easy to implement and equally easy to understand.

It is a supervised machine learning algorithm. This means we need a labeled reference dataset, in which every data point already has a known category, to predict the category (group) of future data points.

Once the reference dataset has been supplied to the KNN model, the model classifies each new data point based on the points (neighbors) that are most similar to it. It uses this reference data to make an “educated guess” about which category the new data point should belong to.

In short, the KNN algorithm says: “I am as good as my nearest K neighbors.”

Fundamental Working Of KNN

1. As discussed earlier, KNN is a supervised machine learning algorithm.

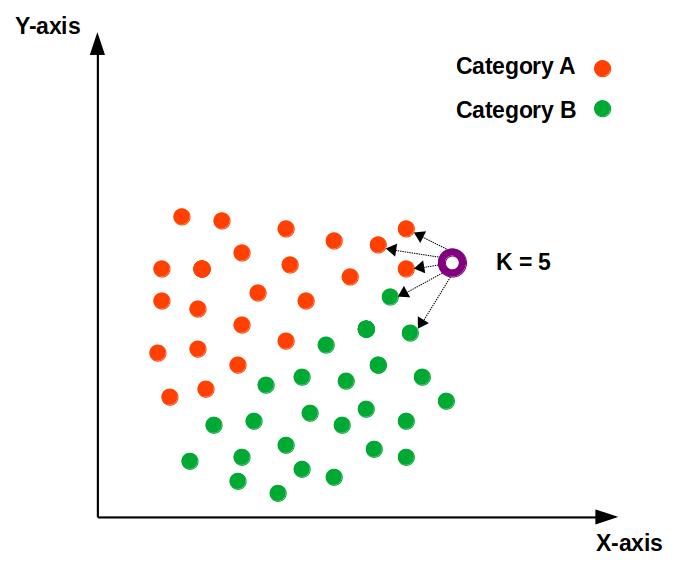

2. In this example, let’s assume that we have a dataset in which the data points are classified into 2 categories, A and B.

3. Now, when a new data point comes in, the KNN algorithm predicts which category or group the new data point belongs to.

4. To make this prediction, we first select a value for K.

Note: Here K is the number of neighbors, not the number of categories or groups.

For our example, let’s take K = 5 nearest neighbors.

5. When the new data point comes in, the algorithm identifies the 5 points in the reference dataset that are nearest to it, most commonly measured by Euclidean distance.

6. The new data point is classified as category A or category B by a majority vote among those 5 neighbors. From the graph, we can see that the majority of the neighbors belong to category A. Hence the new data point is classified as category A.
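The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a reference implementation: it assumes Euclidean distance, does not treat voting ties specially, and uses a small made-up 2-D dataset with categories "A" and "B". The function names `euclidean` and `knn_predict` are hypothetical.

```python
from collections import Counter
import math

def euclidean(p, q):
    """Straight-line distance between two points of equal dimension."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))

def knn_predict(train_points, train_labels, new_point, k=5):
    """Classify new_point by a majority vote among its k nearest neighbors."""
    # Steps 4-5: rank every reference point by its distance to the new point
    # and keep the k closest.
    distances = sorted(
        (euclidean(p, new_point), label)
        for p, label in zip(train_points, train_labels)
    )
    nearest_labels = [label for _, label in distances[:k]]
    # Step 6: the most common category among those neighbors wins.
    return Counter(nearest_labels).most_common(1)[0][0]

# Hypothetical 2-D reference dataset with two categories, "A" and "B".
train_points = [(1, 1), (1, 2), (2, 1), (2, 2), (3, 3),
                (6, 6), (6, 7), (7, 6), (7, 7)]
train_labels = ["A", "A", "A", "A", "A",
                "B", "B", "B", "B"]

print(knn_predict(train_points, train_labels, (2.5, 2.5), k=5))  # prints "A"
```

With K = 5, all five nearest neighbors of (2.5, 2.5) belong to category A, so the vote is unanimous.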

Once our model can classify new data points as category A or category B, we can say that it is ready to make predictions.

If you want to learn how KNN works in more depth, you can check out my blog: K-Nearest Neighbors (KNN) Algorithm For Machine Learning

Why Is The KNN Called A “Lazy Learner” Or A “Lazy Algorithm”?

KNN is called a lazy learner because when we supply training data to this algorithm, the algorithm does not train itself at all.

Yes, that’s true!

KNN does not learn any discriminative function from the training data. Instead, it memorizes (stores) the entire training dataset.

Its “training” step amounts to storing the data, so there is practically no training time in KNN.
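To make the “lazy” part concrete, here is a minimal sketch of a KNN classifier written as a Python class. The class name `LazyKNN` and its `fit`/`predict_one` methods are hypothetical, loosely modeled on the common fit/predict convention; they are not any particular library's API. Notice that `fit()` does nothing except store the data, so the “trained model” is simply the training set itself.

```python
from collections import Counter
import math

class LazyKNN:
    """Hypothetical minimal KNN classifier: fit() only memorizes, predict does the work."""

    def __init__(self, k=5):
        self.k = k

    def fit(self, points, labels):
        # The entire "training" step: keep copies of the data.
        # No parameters are estimated and no discriminative function is learned.
        self.points = list(points)
        self.labels = list(labels)
        return self

    def predict_one(self, new_point):
        # All of the real computation is deferred until a prediction is requested.
        nearest = sorted(
            zip(self.points, self.labels),
            key=lambda pair: math.dist(pair[0], new_point),
        )[: self.k]
        return Counter(label for _, label in nearest).most_common(1)[0][0]

model = LazyKNN(k=3).fit([(0, 0), (0, 1), (5, 5), (5, 6)], ["A", "A", "B", "B"])
print(model.points)               # the "model" is just the stored training data
print(model.predict_one((4, 5)))  # prints "B"
```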

But skipping the training step comes at a cost.

Each time a new data point comes in and we want to make a prediction, the KNN algorithm, in its basic brute-force form, searches the entire training set for the nearest neighbors.

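Hence the prediction step becomes more time-consuming and computationally expensive: every prediction requires computing the distance to each of the n stored points, so its cost grows with the size of the training set.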
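One way to see this cost is to count the distance computations a single brute-force prediction needs. The sketch below is an illustration under assumptions: `brute_force_knn` is a hypothetical helper, and the training sets are random 2-D points. The count it prints equals n for every training-set size, which is exactly the work that an eager learner would have moved into its training step. Index structures such as k-d trees or ball trees can reduce this cost in low dimensions, but they are outside the scope of this sketch.

```python
import math
import random

def brute_force_knn(train_points, new_point, k):
    """Return the k nearest stored points and the number of distances computed."""
    calls = 0

    def distance_to_new_point(p):
        nonlocal calls
        calls += 1  # count every distance computation
        return math.dist(p, new_point)

    # sorted() evaluates the key once per element, so every stored point is visited.
    nearest = sorted(train_points, key=distance_to_new_point)[:k]
    return nearest, calls

random.seed(0)
for n in (100, 1_000, 10_000):
    # Hypothetical random 2-D training set of size n.
    train_points = [(random.random(), random.random()) for _ in range(n)]
    _, calls = brute_force_knn(train_points, (0.5, 0.5), k=5)
    print(f"n = {n:>6}: {calls} distance computations for one prediction")
```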

Conclusion

An eager learner like logistic regression has a model fitting, or training, step. But the KNN algorithm does not have a training phase at all. Hence it is called a lazy learner.
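For contrast, here is a minimal sketch of an eager learner: a one-feature logistic regression fitted by plain gradient descent. It is a bare-bones illustration, not how production libraries fit the model; the function names, the already-centered made-up data, and the hyperparameters (`lr`, `epochs`) are all assumptions. The effort is spent up front in the training loop, and afterwards the model is just two numbers, so predictions no longer need the training data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def fit_logistic_regression(xs, ys, lr=0.5, epochs=1000):
    """Eager learning: estimate a weight and a bias before any prediction is made."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradient of the average log-loss with respect to w and b.
        grad_w = sum((sigmoid(w * x + b) - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(sigmoid(w * x + b) - y for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b  # the training data is no longer needed after this point

def predict_logistic(model, x):
    w, b = model
    return 1 if sigmoid(w * x + b) >= 0.5 else 0

# Hypothetical single-feature dataset, already centered around 0.
xs = [-3.0, -2.5, -2.0, -1.5, 1.5, 2.0, 2.5, 3.0]
ys = [0, 0, 0, 0, 1, 1, 1, 1]

model = fit_logistic_regression(xs, ys)
print(model)  # the whole trained model: (weight, bias)
print(predict_logistic(model, -2.0), predict_logistic(model, 2.0))
```

Here each prediction costs a single multiply-add and a sigmoid, regardless of how many training points were used, which is the opposite trade-off to KNN.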