For BERT (Bidirectional Encoder Representations from Transformers) to function effectively, datasets need to be prepared with specific considerations due to the model’s architecture and training objectives. Here are the key aspects to consider when preparing a dataset for BERT:

- Tokenization:

BERT takes token embeddings as input, so the text data needs to be tokenized into wordpieces/subwords using BERT’s tokenizer. BERT uses WordPiece tokenization. The tokens are converted into IDs that correspond to BERT’s vocabulary. - Training Objectives:

For pre-training, BERT uses two unsupervised tasks: Next Sentence Prediction (NSP) and Masked Language Model (MLM).

NSP: the model receives pairs of sentences and predicts if the second sentence in a pair is the subsequent sentence in the original text.

MLM: Some percentage of the input tokens are masked at random, and the model predicts the masked word based on the context. - Special Tokens:

BERT is trained on sentence pairs, so the data should be formatted as sentence pairs separated by a special token (e.g. [SEP]).

[CLS]: At the beginning of each sequence, this token is added. It serves as a unique segment that the model will use to represent sentence-level classifications.

[SEP]: This token is used to separate segments, such as two sentences in tasks that require understanding the relationship between them (e.g., question-answering).

[PAD]: Padding tokens are added to sequences to make them all the same length for batch processing. - Maximum Sequence Length:

BERT has a maximum sequence length limit (e.g., 512 tokens for BERT-base). Any sequence longer than this needs to be truncated. - Segment Embeddings:

For tasks involving pairs of sentences, BERT needs to distinguish between two sequences. This is done using segment embeddings, where one embedding is added to the tokens of the first sequence, and another to the tokens of the second. - Attention Masks:

These are necessary to allow the model to differentiate between content and padding, ensuring attention calculations are only performed on the content tokens.

By ensuring the dataset is properly formatted and pre-processed to meet these requirements, BERT can be effectively trained or fine-tuned for a variety of natural language processing tasks. So, let’s start with getting and formatting the data.

1. Get Data

For our tutorial, we will be utilizing the Cornell Movie-Dialogs Corpus. It is a large metadata-rich collection of fictional conversations extracted from raw movie scripts. For details, have a look here. Let’s download the data

!wget -P data/external http://www.cs.cornell.edu/~cristian/data/cornell_movie_dialogs_corpus.zip

!unzip -qq data/external/cornell_movie_dialogs_corpus.zip -d data/external/

!rm data/external/cornell_movie_dialogs_corpus.zip

There are Two text files, movie_conversations.txt and movie_lines.txt. A consecutive conversation between two users in a movie are given in movie_conversations.txt.

Actual lines can be get from movie_lines.txt.

2. Create conversation pairs for NSP

In order to train BERT, we need to generate pairs of conversation. Each pair consists of a line and its follow-up response, with both trimmed to a maximum length defined by SEQ_LEN to ensure consistency for the NLP model. The final output is a list of these dialogue pairs, structured to provide a contextual sequence for the model to learn from

SEQ_LEN = 64

# Define the file paths for the conversations and the lines in the movie dialog corpus.

corpus_movie_conv = 'data/external/movie-dialogs/movie_conversations.txt'

corpus_movie_lines = 'data/external/movie-dialogs/movie_lines.txt'

# Read the entire files into memory.

with open(corpus_movie_conv, 'r', encoding='iso-8859-1') as c:

conv = c.readlines()

with open(corpus_movie_lines, 'r', encoding='iso-8859-1') as l:

lines = l.readlines()

# Initialize an empty dictionary to store each line of dialogue

# {line_id(L893): dialogue_text(Joey never told you we went out, did he?)}

lines_dic = {}

for line in lines:

# Split the line using a predefined delimiter to extract the relevant parts.

objects = line.split(" +++$+++ ")

lines_dic[objects[0]] = objects[-1]

# Initialize a list to store pairs of dialogues.

conv_pairs = []

for line in conv:

# Extract line IDs for the current conversation and evaluate it as a list.

line_ids = eval(line.split(" +++$+++ ")[-1]) # Example: ['L194', 'L195', 'L196', 'L197']

for i in range(len(line_ids)-1):

# Retrieve the dialogue text corresponding to the current line ID

first = lines_dic[line_ids[i]].strip().split()[:SEQ_LEN]

second = lines_dic[line_ids[i+1]].strip().split()[:SEQ_LEN]

conv_pairs.append([' '.join(first), ' '.join(second)])

print(conv_pairs[20])

# output: ["I really, really, really wanna go, but I can't. Not unless my sister goes.",

# "I'm workin' on it. But she doesn't seem to be goin' for him."]

3. Tokenize the data

Tokenization is the process of breaking down a text into smaller units called “tokens,” which are then converted into a numerical representation. An example of this would be splitting the sentence “I like surfboarding!” → [‘[CLS]’, ‘i’, ‘like’, ‘surf’, ‘##board’, ‘##ing’, ‘!’, ‘[SEP]’] → [1, 48, 250, 4033, 3588, 154, 5, 2]. A tokenized BERT input always starts with a special [CLS] token and ends with a special [SEP] token.

To train the tokenizer, the BertWordPieceTokenizer from the HuggingFace transformer library is used. The train API needs files as text. So, we’ll first break the text into multiple files.

import os

from pathlib import Path

# Define the directory where the text files will be saved.

directory = '../../data/external/movie-dialogs/data'

if not os.path.exists(directory): os.makedirs(directory)

text_data, file_count = [], 0

# Iterate over the first item of each pair in the conversation pairs.

for sample in [x[0] for x in conv_pairs]:

# Append the current sample to the text data list.

text_data.append(sample)

# When the list reaches 10,000 samples, save it to a file.

if len(text_data) == 10000:

# Open a new text file for writing the samples.

with open(f'{directory}/text_{file_count}.txt', 'w', encoding='utf-8') as fp:

# Write all samples in the text data list to the file, separated by newlines.

fp.write('\n'.join(text_data))

# Clear the list for the next batch of samples.

text_data = []

file_count += 1

# After the loop, check if there are any remaining samples that were not written to a file.

if text_data:

with open(f'{directory}/text_{file_count}.txt', 'w', encoding='utf-8') as fp:

fp.write('\n'.join(text_data))

# List all text file paths in the directory for later use.

paths = [str(x) for x in Path(directory).glob('**/*.txt')]

Train the tokenizer given the file paths

from tokenizers import BertWordPieceTokenizer

from transformers import BertTokenizer

### training own tokenizer

tokenizer = BertWordPieceTokenizer(

clean_text=True,

handle_chinese_chars=False,

strip_accents=False,

lowercase=True

)

tokenizer.train(

files=paths,

vocab_size=30_000,

min_frequency=5,

limit_alphabet=1000,

wordpieces_prefix='##',

# CLS: serves as Start Of Sentence token

# SEP: serves as End Of Sentence token

# PAD: to be added into sentences so that all of them would be in equal length. Note that the [PAD] token with id of 0 will not contribute to the gradient .

# MASK: for word replacement during masked language prediction

special_tokens=['[PAD]', '[CLS]', '[SEP]', '[MASK]', '[UNK]']

)

directory = '../../data/external/movie-dialogs/bert-it-1'

if not os.path.exists(directory): os.mkdir(directory)

tokenizer.save_model(directory, 'bert-it')

To test, our tokenizer, we can tokenize a simple text using the trained tokenizer.

tokenizer = BertTokenizer.from_pretrained(f'{directory}/bert-it-vocab.txt', local_files_only=True)

token_ids = tokenizer('surfboarding!')['input_ids']

print(token_ids)

print(tokenizer.convert_ids_to_tokens(token_ids))

# Output: [1, 3958, 3554, 154, 5, 2]

# ['[CLS]', 'surf', '##board', '##ing', '!', '[SEP]']

4. Pre-Process

BERT’s distinctive approach to pre-training is among the key factors that enable it to capture the context of a sentence. BERT does not try to predict the next word in the sentence. Instead, it employes two unsupervised tasks for pre-training.

- NSP, the model receives pairs of sentences and predicts if the second sentence in a pair is the subsequent sentence in the original text. During training the model is fed with two input sentences at a time such that:

— 50% of the time the second sentence comes after the first one.

— 50% of the time it is a a random sentence from the full corpus. - MLM, some percentage of the input tokens are masked at random, and the model predicts the masked word based on the context. For training, mask out 15% of the words in the input. Out of the 15% of the tokens selected for masking

— 80% of the tokens are actually replaced with the token [MASK].

— 10% of the time tokens are replaced with a random token.

— 10% of the time tokens are left unchanged.

During preprocessing, we’ll define a custom PyTorch Dataset class named BERTDataset, which is intended to be used for training a (BERT) model. We’ll implement below functions

- get_pair(): This function will return a pair of sentences. Fifty percent of the time, the second sentence will be the actual subsequent sentence from the conversation; the other fifty percent, 2nd sentence will not be a subsequent sentence. The

is_nextlabel will indicate whether the second sentence is indeed the following sentence in the sequence. - mask_sentence(): Randomly mask out 15% of the words in the input — replacing them with a [MASK] token as per guidelines described above.

- getitem():

a) get a random sentence pair, either negative or positive (saved as is_next_label)

b) replace random words in sentence with mask / random words.

c) _Adding CLS and SEP tokens to the start and end of sentences

_d) combine sentence 1 and 2 as one input

from torch.utils.data import Dataset, DataLoader

import random

import torch

class BERTDataset(Dataset):

def __init__(self, conv_pairs, tokenizer, seq_len):

self.tokenizer = tokenizer

self.seq_len = seq_len

self.num_pairs = len(conv_pairs)

self.conv_pairs = conv_pairs # List of lists. Each item holds pair of conversation.

def __len__(self):

return self.num_pairs

def __getitem__(self, idx):

# Step 1: get a random sentence pair, either negative or positive (saved as is_next_label)

# is_next=1 means the second sentence comes after the first one in the conversation.

s1, s2, is_next = self.get_pair(idx)

# Step 2: replace random words in sentence with mask / random words

masked_numericalized_s1, s1_mask = self.mask_sentence(s1)

masked_numericalized_s2, s2_mask = self.mask_sentence(s2)

# Step 3: Adding CLS and SEP tokens to the start and end of sentences

# Adding PAD token for labels

t1 = [self.tokenizer.vocab['[CLS]']] + masked_numericalized_s1 + [self.tokenizer.vocab['[SEP]']]

t2 = masked_numericalized_s2 + [self.tokenizer.vocab['[SEP]']]

t1_mask = [self.tokenizer.vocab['[PAD]']] + s1_mask + [self.tokenizer.vocab['[PAD]']]

t2_mask = s2_mask + [self.tokenizer.vocab['[PAD]']]

# Step 4: combine sentence 1 and 2 as one input

# adding PAD tokens to make the sentence same length as seq_len

segment_ids = ([1 for _ in range(len(t1))] + [2 for _ in range(len(t2))])[:self.seq_len]

bert_input = (t1 + t2)[:self.seq_len]

bert_label = (t1_mask + t2_mask)[:self.seq_len]

padding = [self.tokenizer.vocab['[PAD]'] for _ in range(self.seq_len - len(bert_input))]

bert_input.extend(padding), bert_label.extend(padding), segment_ids.extend(padding)

output = {"bert_input": bert_input,

"bert_label": bert_label,

"segment_ids": segment_ids,

"is_next": is_next}

return {key: torch.tensor(value) for key, value in output.items()}

'''

BERT Training makes use of the following two strategies:

1. Next Sentence Prediction (NSP)

During training the model gets as input pairs of sentences and it learns to predict if the second sentence is the next sentence in the original text as well.

During training the model is fed with two input sentences at a time such that:

- 50% of the time the second sentence comes after the first one.

- 50% of the time it is a a random sentence from the full corpus.

BERT is then required to predict whether the second sentence is random or not, with the assumption that the random sentence will be disconnected from the first sentence:

'''

def get_pair(self, index):

# conv_pairs=[["I really, really, really wanna go, but I can't. Not unless my sister goes.", "I'm workin' on it. But she doesn't seem to be goin' for him."]]

s1, s2 = self.conv_pairs[index]

is_next = 1

if random.random() > 0.5:

random_index = random.randrange(len(self.conv_pairs))

s2 = self.conv_pairs[random_index][1]

is_next = 0

return s1, s2, is_next

'''

2. Masked LM (MLM):

Randomly mask out 15% of the words in the input — replacing them with a [MASK] token.

Run the entire sequence through the BERT attention based encoder and then predict only the masked words, based on the context provided by the other non-masked words in the sequence. However, there is a problem with this naive masking approach — the model only tries to predict when the [MASK] token is present in the input, while we want the model to try to predict the correct tokens regardless of what token is present in the input. To deal with this issue, out of the 15% of the tokens selected for masking:

80% of the tokens are actually replaced with the token [MASK].

10% of the time tokens are replaced with a random token.

10% of the time tokens are left unchanged.

'''

# Replace random words in sentence with mask / random words

# s = "I really, really, really wanna go, but I can't. Not unless my sister goes."

def mask_sentence(self, s):

words = s.split()

masked_numericalized_s = []

mask = []

for word in words:

prob = random.random()

token_ids = self.tokenizer(word)['input_ids'][1:-1] # remove cls and sep token

if prob < 0.15: # Mask out 15% of the words in the input

prob /= 0.15

for token_id in token_ids: # Iterate through token ids regardless of masking decision

if prob < 0.8: # Among 15%, 80% will be replaced with the token 'Mask'

masked_numericalized_s.append(self.tokenizer.vocab['[MASK]'])

elif prob < 0.9: # Among 15%, 10% will be replaced with a random token

masked_numericalized_s.append(random.randrange(len(self.tokenizer.vocab)))

else: # Among 15%, 10% will be left unchanged

masked_numericalized_s.append(token_id) # Adding unchanged tokens

mask.append(token_id) # Mask label added for each token

else:

masked_numericalized_s.extend(token_ids) # Adding tokens directly if not masked

mask.extend([0] * len(token_ids)) # Corresponding unmasked labels

assert len(masked_numericalized_s) == len(mask)

return masked_numericalized_s, mask

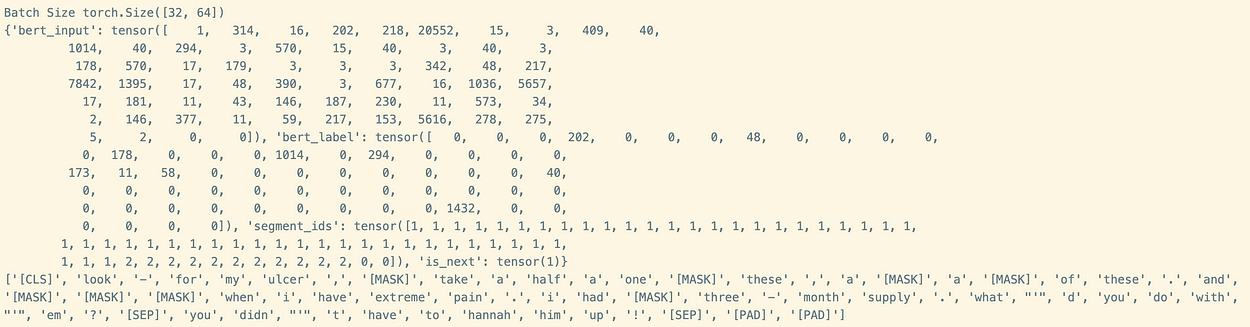

In order to see result of this pre-processing, you can pick one pair and see its tokens, token places which were randomly masked and segment_ids

# test

train_data = BERTDataset(conv_pairs, seq_len=64, tokenizer=tokenizer)

train_loader = DataLoader(train_data, batch_size=32, shuffle=True, pin_memory=True)

sample_data = next(iter(train_loader))

print('Batch Size', sample_data['bert_input'].size())

# 3 is MASK

result = train_data[random.randrange(len(train_data))]

print(result)

print(tokenizer.convert_ids_to_tokens(result['bert_input']))

Each record of the training data contains below keys

bert_inputhas tokenized sentences. Note that token ‘2’ is used to seperate two sentences.bert_labelstores zeros for the unmasked tokens. It stores original tokens of selected masking tokens. e.g token ‘48’ was masked.segment_labelas the identifier for sentence A or B, this allows the model to distinguish between sentencesis_nextas truth label for whether the two sentences are related

With that, our data is ready for the pre-training of our model. In next blog post, we’ll take a look at how to build a BERT model using pytorch.

References

Comments

Loading comments…