Photo by Everyday basics on Unsplash

Introduction

According to its creator, LogSpace, a software company that provides customized Machine Learning services, Langflow is a web-based UI for LangChain designed with react-flow. Langflow provides an effortless way to experiment and prototype flows. LangChain, created by Harrison Chase, is a wildly popular framework for developing applications powered by large language models (LLMs).

Langflow allows users to quickly develop simple to complex LangChain prototypes without requiring coding skills, truly democratizing LLM access. According to the project’s documentation, “Creating flows with Langflow is easy. Simply drag sidebar components onto the canvas and connect them to create your pipeline. Langflow provides a range of LangChain components, including LLMs, prompt serializers, agents, and chains.” I recently published the blog, Turn Your Langflow Prototype into a Streamlit Chatbot Application, which explains how to use Langflow and turn prototypes into Streamlit applications.

In this post, I will walk you through how to build a conversational chatbot that answers wine-related questions, a “Virtual Sommelier” if you will, using Retrieval Augmented Generation (RAG), in 20 minutes or less with little to no coding required.

Steps

1. git clone the post’s GitHub project repository
2. Install Langflow yourself, or use the Docker Image created specifically for this post
3. Build two flows using Langflow’s visual interface, or import the Collection file created specifically for this post
4. Run the two Langflow flows and test the chatbot’s functionality using Langflow’s chat UI
5. Install Streamlit using pip and update the Streamlit application code, supplied in the post’s GitHub project repository, with your FLOW_ID and Tweaks configuration
6. Run the conversational chatbot in your web browser using Streamlit

Prerequisites

- Current version of git to git clone this project’s GitHub repository

- Current version of Docker or Docker Desktop installed, only if you choose Docker to install Langflow in Step 2, above

- Current version of Python 3 installed for Steps 5–6, above

- Your personal API key for your large language model (LLM) provider of choice (e.g., Anthropic, Cohere, OpenAI)

1. Clone Project’s GitHub Repository

To clone the post’s GitHub project repository, run the following command from your terminal or use your favorite git client.

```shell
git clone https://github.com/garystafford/build-chatbot-with-langflow.git
```

2. Deploy Langflow

Langflow’s documentation includes instructions on how to deploy Langflow locally, or to Google Cloud Platform, Jina AI Cloud, Railway, or Render. Follow the directions included in the documentation to deploy Langflow. Alternatively, if you have Docker installed locally or you are using Kubernetes, running a containerized version of Langflow is quick and easy. I have pre-built a Langflow Docker Image and pushed it to Docker Hub. This image runs on Linux-based operating systems and non-ARM (Intel-based) Macs.

```shell
# optional: pre-pull the image
docker pull garystafford/langflow:0.3.3

docker run -d -p 7860:7860 --name langflow garystafford/langflow:0.3.3

docker container ls

CONTAINER ID   IMAGE                         COMMAND                  CREATED         STATUS         PORTS                    NAMES
1da79e669809   garystafford/langflow:0.3.3   "python -m langflow …"   8 minutes ago   Up 8 minutes   0.0.0.0:7860->7860/tcp   langflow
```

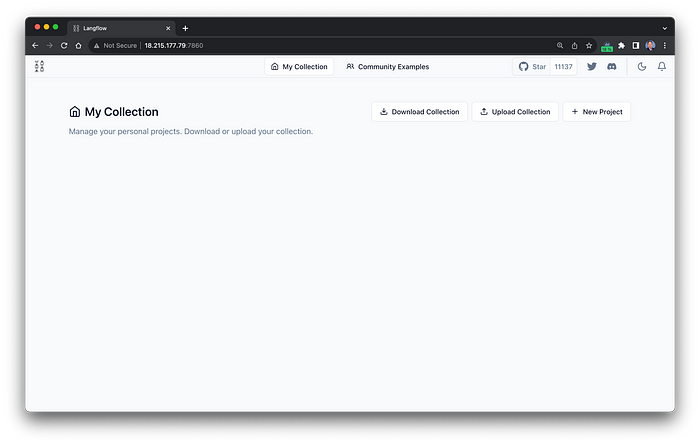

Langflow should now be available locally on port 7860 (http://localhost:7860). If Langflow is running properly, you should see its visual UI home page in your web browser.
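If you prefer to check from a script before opening the browser, a standard-library probe like the one below is enough. It is a minimal sketch that assumes Langflow serves its UI at the root path on port 7860, as mapped by the docker run command above; the function name langflow_is_up is hypothetical.

```python
import urllib.request

# Assumption: Langflow's UI answers at the root path on the port mapped by `docker run`.
LANGFLOW_URL = "http://localhost:7860"


def langflow_is_up(url: str = LANGFLOW_URL, timeout: float = 5.0) -> bool:
    """Return True if an HTTP GET to `url` succeeds with a 2xx or 3xx status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except OSError:
        # URLError, HTTPError, refused connections, and timeouts are all OSError subclasses
        return False


if __name__ == "__main__":
    print("Langflow is up" if langflow_is_up() else "Langflow is not reachable")
```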

Langflow’s visual UI home page:

3. Building the Flows

The chatbot will be composed of two Langflow flows. You can either build the flows yourself or upload the pre-built Langflow Collection, included in the post’s GitHub project repository, which contains both flows.

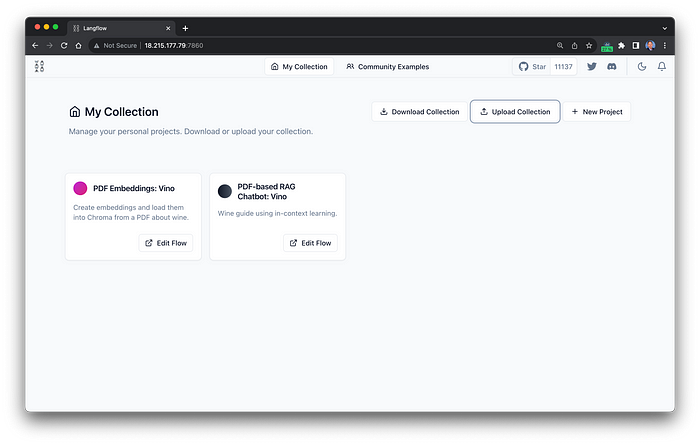

Option 1: Importing Pre-built Collection

To import the pre-built collection from the post’s GitHub project repository, click the Upload Collection button in the upper left corner of Langflow’s visual UI home page, then choose the flows.json file from the project’s directory.

Langflow’s visual UI home page with the Collection uploaded:

Option 2: Build the Flows

Langflow uses LangChain components. Our chatbot starts with the ConversationalRetrievalQA chain, ConversationalRetrievalChain, which builds on RetrievalQAChain to provide a chat history component. It first combines the chat history (either explicitly passed in or retrieved from the provided memory) and the question, then looks up relevant documents from the retriever and finally passes those documents and the question (aka prompt) to a question answering chain to return a response. This method is referred to as Retrieval Augmented Generation (RAG), a form of in-context learning (aka few-shot prompting).
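To make that control flow concrete, here is a deliberately simplified, plain-Python sketch of the three steps. It is not LangChain’s implementation: the real chain uses an LLM to condense the history and question into a standalone question and queries a vector store retriever, whereas here condensing is simple concatenation, retrieval is word overlap, and llm is any hypothetical callable that maps a prompt to text.

```python
import re
from typing import Callable, List, Set, Tuple

# (question, answer) pairs kept between turns, standing in for the memory component
ChatHistory = List[Tuple[str, str]]


def tokens(text: str) -> Set[str]:
    """Lowercase word set used for the toy retrieval score."""
    return set(re.findall(r"[a-z]+", text.lower()))


def condense_question(history: ChatHistory, question: str) -> str:
    """Step 1: combine earlier questions with the new one (LangChain uses an LLM here)."""
    earlier = " ".join(q for q, _ in history)
    return f"{earlier} {question}".strip()


def retrieve(query: str, documents: List[str], k: int = 2) -> List[str]:
    """Step 2: return the k documents that share the most words with the query."""
    query_words = tokens(query)
    ranked = sorted(documents, key=lambda d: len(query_words & tokens(d)), reverse=True)
    return ranked[:k]


def answer(question: str, history: ChatHistory, documents: List[str],
           llm: Callable[[str], str]) -> str:
    """Step 3: pass the retrieved documents and the question to the LLM."""
    context = retrieve(condense_question(history, question), documents)
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}\nAnswer:"
    reply = llm(prompt)
    history.append((question, reply))  # update the conversation memory
    return reply
```

Passing an echo function such as lambda p: p as llm shows exactly which retrieved text would reach the model.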

The chatbot uses LangChain’s PDF document loader (PyPDFLoader), its recursive text splitter (RecursiveCharacterTextSplitter), Hugging Face text embeddings (HuggingFaceEmbeddings), and the Chroma vector store, accessed through LangChain’s vectorstores module. The RecursiveCharacterTextSplitter splits the PDF file into smaller chunks, or documents. HuggingFaceEmbeddings then converts each document into a vector embedding, a vector representation (list of numbers) of a piece of text. The embeddings are stored in Chroma, an AI-native open-source embedding database. Using a semantic similarity search, relevant documents are retrieved based on the end user’s question and passed, along with the question, to a question-answering chain, which returns a response.
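The similarity search at the heart of this pipeline can be illustrated without any model at all. In the sketch below, a bag-of-words counter stands in for HuggingFaceEmbeddings (real embeddings are dense vectors produced by a neural model), and a hypothetical in-memory ToyVectorStore stands in for Chroma. Its add_texts and similarity_search method names echo LangChain’s vector store interface, but the class itself is not part of any library.

```python
import math
import re
from collections import Counter
from typing import Dict, List, Tuple

Vector = Dict[str, float]  # sparse vector: word -> weight


def embed(text: str) -> Vector:
    """Toy stand-in for an embedding model: word counts of the lowercased text."""
    return {word: float(n) for word, n in Counter(re.findall(r"[a-z]+", text.lower())).items()}


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two sparse vectors (0.0 if either is empty)."""
    dot = sum(v * b.get(k, 0.0) for k, v in a.items())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Minimal in-memory store playing the role Chroma plays in the flow."""

    def __init__(self) -> None:
        self._rows: List[Tuple[str, Vector]] = []

    def add_texts(self, texts: List[str]) -> None:
        # Embed once at insert time, as Flow 1 does
        self._rows.extend((t, embed(t)) for t in texts)

    def similarity_search(self, query: str, k: int = 1) -> List[str]:
        # Embed the question and rank stored chunks by cosine similarity
        q = embed(query)
        ranked = sorted(self._rows, key=lambda row: cosine(q, row[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```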

Langflow Flow 1: Creating Embeddings

The conversational chatbot has two flows. The first flow handles text splitting, embedding, and uploading to the vector store. This is a one-time process; there is no need to recreate the embeddings, or incur the additional charges of doing so, every time we run the chatbot.
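Why a one-time step saves money is easy to see with a cache keyed on each chunk’s content hash: unchanged text is never sent to the embedding model twice. The EmbeddingCache class below is a hypothetical illustration of that idea, not something Langflow or Chroma exposes; in the flows themselves, reuse comes from the second flow reading the embeddings the first flow already stored in Chroma.

```python
import hashlib
from typing import Callable, Dict, List

Vector = List[float]


class EmbeddingCache:
    """Hypothetical helper: embed each unique chunk once, then reuse the stored vector."""

    def __init__(self, embed_fn: Callable[[str], Vector]) -> None:
        self._embed_fn = embed_fn
        self._cache: Dict[str, Vector] = {}
        self.calls = 0  # number of (potentially billable) embedding calls made

    def embed(self, text: str) -> Vector:
        # Identical text always hashes to the same key, so it is embedded only once
        key = hashlib.sha256(text.encode("utf-8")).hexdigest()
        if key not in self._cache:
            self._cache[key] = self._embed_fn(text)
            self.calls += 1
        return self._cache[key]
```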

First, drag and drop the four LangChain components shown below from the components menu on the left onto the canvas and connect them as shown: PyPDFLoader, RecursiveCharacterTextSplitter, Chroma, and HuggingFaceEmbeddings. You can substitute CohereEmbeddings or OpenAIEmbeddings for the HuggingFaceEmbeddings Embeddings component; the latter two require your personal API key and incur a cost. You can also replace the Chroma Vector Stores component with one of several alternatives, including FAISS, Pinecone, Qdrant, or MongoDB Atlas.

Once the flow is built, select your PDF file in the PyPDFLoader Loaders component. The project includes the PDF file basic_wine_guide.pdf; this free guide by Vincarta works well as a source of knowledge for our RAG-based conversational chatbot. Lastly, run the flow using the round yellow lightning button in the lower right corner.
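If you later want to move from the visual flow to code, Flow 1 corresponds roughly to the LangChain sketch below. It assumes a mid-2023 langchain 0.0.x release, where these import paths were current (newer releases moved several of them into langchain_community), plus the pypdf, sentence-transformers, and chromadb packages. The chunk sizes and the ./chroma_db directory are illustrative choices, not values taken from the post’s flow.

```python
from typing import Any


def build_vector_store(pdf_path: str, persist_directory: str = "./chroma_db") -> Any:
    """Sketch of Flow 1: load a PDF, split it into chunks, embed them, store them in Chroma."""
    # Imported inside the function so this file loads without the optional dependencies.
    # Assumes langchain 0.0.x plus pypdf, sentence-transformers, and chromadb.
    from langchain.document_loaders import PyPDFLoader
    from langchain.embeddings import HuggingFaceEmbeddings
    from langchain.text_splitter import RecursiveCharacterTextSplitter
    from langchain.vectorstores import Chroma

    pages = PyPDFLoader(pdf_path).load()  # one Document per PDF page
    # Illustrative sizes; tune them for your own documents
    splitter = RecursiveCharacterTextSplitter(chunk_size=1000, chunk_overlap=200)
    chunks = splitter.split_documents(pages)
    embeddings = HuggingFaceEmbeddings()  # the library's default sentence-transformers model
    return Chroma.from_documents(chunks, embeddings, persist_directory=persist_directory)
```

Calling build_vector_store("basic_wine_guide.pdf") from the directory that contains the PDF would build the store once; depending on your Chroma version, you may also need to call persist() on the returned store to flush it to disk.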

Langflow Flow 1: Embeddings:

Langflow Flow 2: Conversational Retrieval Chain

The second flow retrieves the relevant documents from the vector store placed there by the first flow, manages the conversational buffer memory, accepts the prompt from the end user, and handles calls to the LLM.

First, drag and drop the five LangChain components shown below from the components menu on the left onto the canvas and connect them as shown: HuggingFaceEmbeddings, Chroma, ChatOpenAI, ConversationBufferMemory, and ConversationalRetrievalChain. You can replace the ChatOpenAI LLMs component with components from several other LLM providers, including Anthropic, OpenAI, Cohere, HuggingFaceHub, and VertexAI. The choice of LLM will have a major impact on the quality of the responses. Each LLM provider may offer multiple models to choose from, such as gpt-4 for ChatOpenAI, which is used in this demonstration.

Next, for most LLM providers, you will need to supply your personal API key. Lastly, run the flow using the round yellow lightning button in the lower right corner.
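Flow 2 maps roughly onto the LangChain sketch below. It assumes langchain 0.0.x (mid-2023 import paths) with the openai, sentence-transformers, and chromadb packages, and it reopens a Chroma store assumed to have been persisted to a hypothetical ./chroma_db directory. ChatOpenAI reads your key from the OPENAI_API_KEY environment variable, which avoids hard-coding it; temperature=0 is an illustrative choice.

```python
from typing import Any


def build_chat_chain(persist_directory: str = "./chroma_db") -> Any:
    """Sketch of Flow 2: retriever + conversation buffer memory + chat model in one chain."""
    # Assumes langchain 0.0.x plus openai, sentence-transformers, and chromadb.
    from langchain.chains import ConversationalRetrievalChain
    from langchain.chat_models import ChatOpenAI
    from langchain.embeddings import HuggingFaceEmbeddings
    from langchain.memory import ConversationBufferMemory
    from langchain.vectorstores import Chroma

    # Reopen the persisted store with the same embedding model used to build it
    vectordb = Chroma(persist_directory=persist_directory, embedding_function=HuggingFaceEmbeddings())
    # Holds the running chat history that the chain combines with each new question
    memory = ConversationBufferMemory(memory_key="chat_history", return_messages=True)
    # Reads the API key from the OPENAI_API_KEY environment variable
    llm = ChatOpenAI(model_name="gpt-4", temperature=0)
    return ConversationalRetrievalChain.from_llm(llm, retriever=vectordb.as_retriever(), memory=memory)
```

With the store in place, chain = build_chat_chain() followed by chain({"question": "What is Merlot?"})["answer"] would return the model’s reply, and the memory carries the conversation into the next call.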

Langflow Flow 2: Conversational Chatbot:

4. Test the Chatbot’s RAG Functionality

We can confirm that our RAG-based conversational chatbot works by using Langflow’s built-in chat interface (the blue chat button in the lower right corner). To verify that the chatbot’s knowledge of wine comes from the Vincarta PDF, we can ask questions that are specific to the guide, such as “What are the world’s most important varieties of wine? Return in a numbered list.”

Langflow’s chatbot interface:

The answer exactly matches the list of six varieties found in the guide:

Excerpt from Vincarta wine guide:

5. Configure the Streamlit App

Next, we will turn the Langflow flows into a standalone conversational chatbot. First, install the streamlit and streamlit-chat packages using pip from your terminal.

```shell
pip install streamlit streamlit-chat
```
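If the Streamlit app later fails on an import, a quick standard-library check such as the hypothetical missing_modules helper below shows which packages are available in the active environment. Note that import names differ from pip names: streamlit-chat is imported as streamlit_chat. The requests package is used by streamlit_app.py and is normally installed as a Streamlit dependency.

```python
import importlib.util
from typing import Iterable, List

# Import names (not pip package names) that streamlit_app.py relies on
REQUIRED_MODULES = ["streamlit", "streamlit_chat", "requests"]


def missing_modules(names: Iterable[str] = REQUIRED_MODULES) -> List[str]:
    """Return the top-level modules from `names` that cannot be found."""
    return [name for name in names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_modules()
    print("All set" if not missing else f"Missing: {', '.join(missing)}")
```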

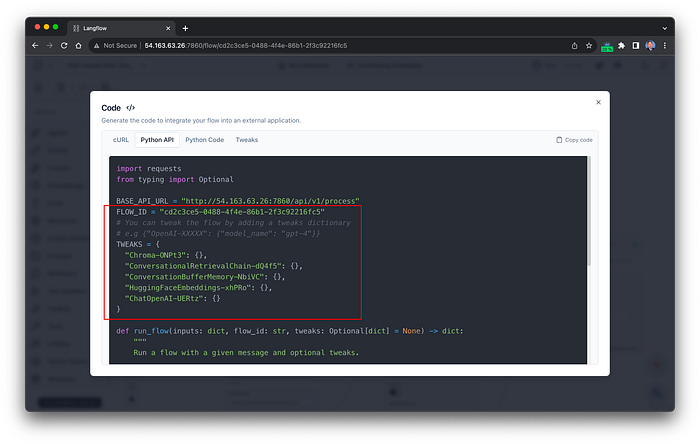

Next, update the Streamlit application code, streamlit_app.py, supplied in the post’s GitHub project repository, with your FLOW_ID and TWEAKS.

Your flow’s unique FLOW_ID and TWEAKS:

Copy the eight lines of code that define FLOW_ID and TWEAKS from Langflow’s Python API code tab for Flow 2, and paste them into the streamlit_app.py file, shown below, in place of the existing lines. Note that your FLOW_ID and TWEAKS will be unique to your flow.
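Each TWEAKS key is a component ID from your flow, and, as I understand Langflow’s process API, its dictionary holds parameter values that override that component’s settings for a single request. Rather than editing those dictionaries by hand, you could merge overrides in with a small helper such as the hypothetical with_overrides below. The component IDs here are the example ones from the code that follows (yours will differ), and whether a component accepts a given parameter name, such as temperature on ChatOpenAI, is an assumption to check against your flow.

```python
import copy
from typing import Any, Dict

Tweaks = Dict[str, Dict[str, Any]]


def with_overrides(tweaks: Tweaks, component_id: str, **params: Any) -> Tweaks:
    """Return a copy of `tweaks` with extra parameter overrides for one component."""
    updated = copy.deepcopy(tweaks)  # leave the caller's TWEAKS untouched
    updated.setdefault(component_id, {}).update(params)
    return updated


if __name__ == "__main__":
    base = {"ChatOpenAI-UERtz": {}, "Chroma-ONPt3": {}}  # example IDs; yours will differ
    print(with_overrides(base, "ChatOpenAI-UERtz", temperature=0.2))
    # {'ChatOpenAI-UERtz': {'temperature': 0.2}, 'Chroma-ONPt3': {}}
```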

```python
# Conversational Retrieval QA Chatbot, built using Langflow and Streamlit
# Author: Gary A. Stafford
# Date: 2023-07-31
# Requirements: pip install streamlit streamlit_chat -Uq
# Usage: streamlit run streamlit_app.py

import logging
import sys
from typing import Optional

import requests
import streamlit as st
from streamlit_chat import message

log_format = "%(asctime)s - %(name)s - %(levelname)s - %(message)s"
logging.basicConfig(format=log_format, stream=sys.stdout, level=logging.INFO)

BASE_API_URL = "http://localhost:7860/api/v1/process"
BASE_AVATAR_URL = (
    "https://raw.githubusercontent.com/garystafford/build-chatbot-with-langflow/main/static"
)

# ***** REPLACE THE FOLLOWING LINES OF CODE *****
FLOW_ID = "cd2c3ce5-0488-4f4e-86b1-2f3c92216fc5"
TWEAKS = {
    "Chroma-ONPt3": {},
    "ConversationalRetrievalChain-dQ4f5": {},
    "ConversationBufferMemory-NbiVC": {},
    "HuggingFaceEmbeddings-xhPRo": {},
    "ChatOpenAI-UERtz": {},
}
# ************************************************


def main():
    st.set_page_config(page_title="Virtual Sommelier")
    st.markdown("##### Welcome to the Virtual Sommelier")

    # Chat history survives Streamlit reruns in session state
    if "messages" not in st.session_state:
        st.session_state.messages = []

    # Replay the conversation so far
    for msg in st.session_state.messages:
        with st.chat_message(msg["role"], avatar=msg["avatar"]):
            st.write(msg["content"])

    if prompt := st.chat_input("I'm your virtual Sommelier, how may I help you?"):
        # Add user message to chat history
        st.session_state.messages.append(
            {
                "role": "user",
                "content": prompt,
                "avatar": f"{BASE_AVATAR_URL}/people-64px.png",
            }
        )

        # Display user message in chat message container
        with st.chat_message("user", avatar=f"{BASE_AVATAR_URL}/people-64px.png"):
            st.write(prompt)

        # Display assistant response in chat message container
        with st.chat_message("assistant", avatar=f"{BASE_AVATAR_URL}/sommelier-64px.png"):
            message_placeholder = st.empty()
            with st.spinner(text="Thinking..."):
                assistant_response = generate_response(prompt)
            message_placeholder.write(assistant_response)

        # Add assistant response to chat history
        st.session_state.messages.append(
            {
                "role": "assistant",
                "content": assistant_response,
                "avatar": f"{BASE_AVATAR_URL}/sommelier-64px.png",
            }
        )


def run_flow(inputs: dict, flow_id: str, tweaks: Optional[dict] = None) -> dict:
    """POST the inputs (and optional tweaks) to Langflow's process API for one flow."""
    api_url = f"{BASE_API_URL}/{flow_id}"
    payload = {"inputs": inputs}
    if tweaks:
        payload["tweaks"] = tweaks
    response = requests.post(api_url, json=payload, timeout=120)
    response.raise_for_status()  # surface HTTP errors instead of parsing an error page
    return response.json()


def generate_response(prompt: str) -> str:
    logging.info(f"question: {prompt}")
    inputs = {"question": prompt}
    try:
        response = run_flow(inputs, flow_id=FLOW_ID, tweaks=TWEAKS)
        answer = response["result"]["answer"]
        logging.info(f"answer: {answer}")
        return answer
    except (requests.RequestException, ValueError, KeyError, TypeError) as exc:
        # Network/HTTP failures, a non-JSON body, or an unexpected response shape
        logging.error(f"error: {exc}")
        return "Sorry, there was a problem finding an answer for you."


if __name__ == "__main__":
    main()
```
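When debugging, it can help to call the same Langflow endpoint without Streamlit in the loop. The standard-library sketch below reuses the BASE_API_URL, the request payload shape ({"inputs": ..., "tweaks": ...}), and the response["result"]["answer"] lookup from streamlit_app.py; build_payload, extract_answer, and ask are hypothetical helper names, and the 120-second timeout is an arbitrary choice.

```python
import json
import urllib.request
from typing import Any, Dict, Optional

BASE_API_URL = "http://localhost:7860/api/v1/process"  # same endpoint as streamlit_app.py


def build_payload(question: str, tweaks: Optional[Dict[str, Any]] = None) -> Dict[str, Any]:
    """Build the JSON body the Streamlit app sends for one question."""
    payload: Dict[str, Any] = {"inputs": {"question": question}}
    if tweaks:
        payload["tweaks"] = tweaks
    return payload


def extract_answer(response: Dict[str, Any]) -> Optional[str]:
    """Return response["result"]["answer"], or None if the shape is unexpected."""
    result = response.get("result")
    if isinstance(result, dict) and isinstance(result.get("answer"), str):
        return result["answer"]
    return None


def ask(question: str, flow_id: str, tweaks: Optional[Dict[str, Any]] = None,
        timeout: float = 120.0) -> Optional[str]:
    """POST one question to the flow and return its answer (raises on network errors)."""
    request = urllib.request.Request(
        f"{BASE_API_URL}/{flow_id}",
        data=json.dumps(build_payload(question, tweaks)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_answer(json.load(response))
```

For example, ask("What are the world's most important varieties of wine?", FLOW_ID, TWEAKS) should return the same kind of answer the chat UI shows, or None if Langflow replies with an unexpected shape.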

6. Run Streamlit-based Conversational Chatbot

To run the conversational chatbot in your web browser, use the following Streamlit command:

```shell
streamlit run streamlit_app.py
```

The final Streamlit-based conversational chatbot should resemble the screen grabs below. Simply ask it any question based on the information in the Vincarta PDF.

Streamlit-based conversational chatbot:

Conclusion

In this brief post, we learned how Langflow’s no-code capabilities can be used to prototype LangChain applications. We also learned how to use the sample code provided by Langflow, together with Streamlit, to quickly create a fully functional conversational chatbot with minimal coding.
