Tired of hitting the token limit while working with Llama 2? Enter CodeLlama, a family of large language models that accepts far longer inputs and is tuned for programming work. Built on the foundation of Llama 2, CodeLlama comes in several flavors tailored to code-related tasks, so you can work with larger pieces of code in a single request.

Breaking Free from the Token Shackles

Llama 2, while impressive, is limited to a context window of 4,096 tokens, which is often insufficient for complex code generation or analysis. CodeLlama widens this considerably: its models are trained on sequences of 16,000 tokens and, according to Meta, can handle inputs of up to 100,000 tokens. This translates to tackling larger scripts, full functions, and even entire modules without the frustration of chopping your queries into bite-sized pieces.
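To get a feel for what these limits mean in practice, here is a minimal sketch that estimates whether a piece of source code fits in a given context window. The four-characters-per-token ratio, the output reserve, and the function names are assumptions made for illustration; a real tokenizer will give different counts, especially for code.

```python
# Rough token budgeting. CHARS_PER_TOKEN is a crude assumption, not a
# property of any particular tokenizer; use the model's own tokenizer
# when you need exact counts.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate the token count of text, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)


def fits_in_window(text: str, window_tokens: int, reserve_for_output: int = 512) -> bool:
    """Return True if text plus room for the reply fits in the window."""
    return estimate_tokens(text) + reserve_for_output <= window_tokens


# A hypothetical 300,000-character module is about 75,000 estimated tokens.
module_source = "x" * 300_000
print(fits_in_window(module_source, window_tokens=16_000))
print(fits_in_window(module_source, window_tokens=100_000))
```

Substitute the window size of the model you actually use; the two values in the usage lines are only the figures quoted in this article.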

A Model Built for Code

Unlike general-purpose language models, which often struggle with the intricacies of programming, CodeLlama is tuned specifically for code. It is Llama 2 trained further on code-heavy data, so it picks up the syntax, semantics, and broader context of programming languages, enabling it to:

- Generate syntactically valid code: Forget nonsensical snippets riddled with errors. CodeLlama has learned the grammar and rules of common programming languages, which makes functional, accurate output far more likely. As with any generated code, review and test it before relying on it.

- Complete code fragments: Struggling to wrap up a function? CodeLlama can intelligently suggest missing lines, auto-fill variables, and even propose alternative implementations based on your intent.

- Analyze and debug code: Unsure why your code isn’t behaving as expected? CodeLlama can help identify potential errors, suggest fixes, and even explain what specific section might be causing problems.
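The code-completion case can be sketched as a fill-in-the-middle step: mark the gap in a fragment, hand the text before and after the gap to the model, and splice the suggestion back in. In the sketch below the `<FILL_ME>` placeholder, the `suggest` callable, and the stub that stands in for a real model call are all assumptions for illustration, not CodeLlama's own API.

```python
from typing import Callable, Tuple

PLACEHOLDER = "<FILL_ME>"  # gap marker; the name is an assumption of this sketch


def split_at_gap(source: str) -> Tuple[str, str]:
    """Split source into the text before and after the first placeholder."""
    prefix, marker, suffix = source.partition(PLACEHOLDER)
    if not marker:
        raise ValueError("source contains no placeholder")
    return prefix, suffix


def complete_fragment(source: str, suggest: Callable[[str, str], str]) -> str:
    """Ask suggest(prefix, suffix) for the missing code and splice it in."""
    prefix, suffix = split_at_gap(source)
    return prefix + suggest(prefix, suffix) + suffix


def stub_suggest(prefix: str, suffix: str) -> str:
    """Hypothetical stand-in for a model call; returns a fixed suggestion."""
    return "total = sum(values)"


fragment = "def mean(values):\n    <FILL_ME>\n    return total / len(values)\n"
print(complete_fragment(fragment, stub_suggest))
```

Keeping the model behind a plain callable means the splice logic can be tested without loading any weights, and the stub can later be swapped for a real completion call.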

Examples of Llama 2 and CodeLlama

To demonstrate the practical capabilities and differences between Llama 2 and CodeLlama, let’s consider a real-world example. The prompt used for this test was sourced from ChatGPT and is designed to push the limits of what language models can generate, especially in terms of code.

Llama 2’s Attempt:

Llama 2 was put to the test with this prompt. The expectation was that it would generate a complete Python program in line with the specifications. However, it ran into a significant limitation: Llama 2 reached its token limit before it could produce the complete response. This outcome highlights one of the primary constraints of Llama 2 when dealing with complex, code-heavy tasks.

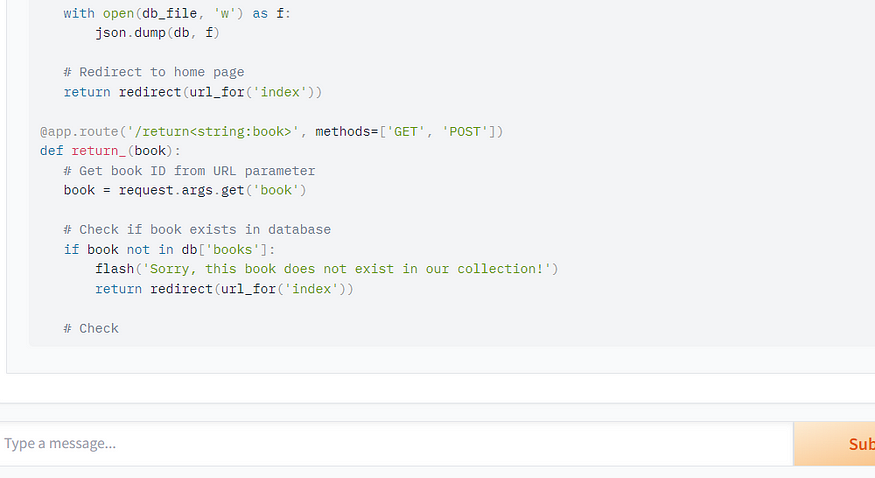

CodeLlama’s Performance:

In contrast, when the same prompt was given to CodeLlama, the results were markedly different. Thanks to its higher token limit and specialized focus on coding tasks, CodeLlama successfully generated a complete response, providing the full Python program as requested. The code was syntactically correct, well-commented, and covered all the aspects of the library management system as outlined in the prompt. This example showcases CodeLlama’s enhanced capacity for handling extensive coding tasks without hitting token limitations.

Putting CodeLlama to Work

Whether you’re bumping up against token limits or simply seeking a more advanced coding tool, here’s how to effectively integrate CodeLlama into your workflow:

- Choose your flavor: Different models cater to specific needs. Opt for the foundation model for general purposes, the Python specialization for Python-centric tasks, or the instruction-following version when you want to describe the task in natural language.

- Leverage integration platforms: The Hugging Face Transformers library lets you load and run CodeLlama from within your existing Python environment, making it a natural extension of your toolset.

- Explore the resources: Meta AI provides documentation, tutorials, and the pre-trained models on its website, offering a guide to get you started with CodeLlama.
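The steps above can be sketched with the Transformers library. This is a minimal sketch, not an official recipe: the repository names follow the naming pattern of the `codellama` organization on the Hugging Face Hub and should be checked there before use, and the helper names `checkpoint_name` and `generate` are made up for this example. Running `generate` downloads several gigabytes of weights and needs `transformers` and `torch` installed.

```python
# Suffixes that distinguish the three flavors in the Hub repository names.
# Verify the exact repository id on the Hub before relying on it.
FLAVOR_SUFFIX = {
    "foundation": "",
    "python": "-Python",
    "instruct": "-Instruct",
}


def checkpoint_name(flavor: str = "foundation", size: str = "7b") -> str:
    """Build the Hub repository id for a flavor and model size."""
    if flavor not in FLAVOR_SUFFIX:
        raise ValueError(f"unknown flavor: {flavor!r}")
    return f"codellama/CodeLlama-{size}{FLAVOR_SUFFIX[flavor]}-hf"


def generate(prompt: str, flavor: str = "foundation", size: str = "7b",
             max_new_tokens: int = 128) -> str:
    """Load the chosen checkpoint and return the prompt plus its completion."""
    # Imported lazily so checkpoint_name works without transformers installed.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    name = checkpoint_name(flavor, size)
    tokenizer = AutoTokenizer.from_pretrained(name)
    model = AutoModelForCausalLM.from_pretrained(name)
    inputs = tokenizer(prompt, return_tensors="pt")
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)


print(checkpoint_name("python"))
```

A call such as `generate("def fibonacci(n):", flavor="python")` would then return the prompt followed by the model's continuation.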

Exploring CodeLlama Further

For those interested in delving deeper into CodeLlama’s capabilities, customizing its integration for specific workflows, or discussing its potential for larger projects, Woyera is here to assist. Our team of AI experts specializes in guiding businesses and individuals through the effective utilization of large language models like CodeLlama.

Feel free to schedule a consultation with us at www.woyera.com to discuss how we can help you unlock the possibilities of CodeLlama and achieve your coding objectives.

Conclusion

Forget workarounds and token limitations. CodeLlama is a leap forward, empowering developers and enthusiasts to code without boundaries. So, break free from the shackles of small inputs, dive into the world of large-scale code generation and analysis, and experience the future of coding with CodeLlama.
