Ever since the arrival of ChatGPT, I’ve been enthralled by the potential of LLMs (Large Language Models). I think of them as very smart agents that have cracked the code of natural language. Without a doubt, conversing with these agents to get work done is a skill everyone will have to master sooner or later. That means we need to learn how to engineer prompts for the AI agent.

So, ladies and gentlemen, prepare to enter the enchanting realm of prompt engineering, where we transform robot confusion into robot “Aha!” moments!

Prompt 1: Tell me about prompt engineering.

Prompt engineering is the art of communicating with AI to get what you want. It involves crafting specific instructions, or prompts, to guide the language model’s response. These prompts elicit the desired behavior from the model and help shape its output. By carefully constructing prompts, researchers and developers can steer the model toward more accurate or useful responses for specific tasks or domains.

Prompt engineering can be particularly useful when working with large language models like GPT-3.5, as they can generate coherent and contextually relevant responses. By providing clear and explicit instructions in the prompt, users can guide the model to perform various tasks, such as answering questions, summarizing text, translating languages, or even creating fictional stories.

Successful prompt engineering involves understanding the capabilities and limitations of the language model and tailoring the prompts accordingly. It requires experimentation, careful tuning of the prompt wording, and iterative refinement to achieve the desired results.

Prompt 2: How is it done?

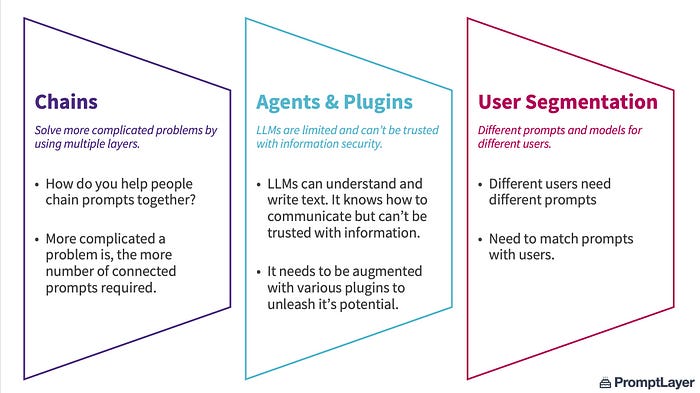

Prompt Engineering Basics — Prompt Layer:

Chains

Chaining prompts refers to the technique of using multiple prompts sequentially to guide the generation or response of a language model. Instead of providing a single prompt, a series of prompts is used to build a more complex and context-rich input for the model.

Chaining prompts can be useful in scenarios where a single prompt might not provide sufficient information or context for the desired output. By breaking down the task into smaller steps or by adding additional instructions in subsequent prompts, the model can build upon previous information and produce more accurate or detailed responses.

Here’s a simplified example to illustrate the concept:

- Initial prompt: “Write a summary of the given article on climate change.”

- Intermediate prompt: “The article discusses the causes and effects of climate change, including global warming, rising sea levels, and extreme weather events. Summarize the main points in a concise paragraph.”

- Final prompt: “Based on the information provided, write a summary of the article in 3–4 sentences.”

In this example, the initial prompt sets the task and provides a general context. The intermediate prompt adds specific instructions, highlighting key aspects to be included in the summary. The final prompt refines the instructions further, specifying the desired length and format of the summary.

By chaining prompts, you can progressively guide the model and help it generate more accurate and contextually relevant responses. It allows for a more nuanced and controlled interaction with the language model, ensuring that the output aligns with your intended goals or requirements.
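The chaining idea above can be sketched in a few lines of Python. This is only a minimal illustration under my own assumptions: `run_chain` and `stub_llm` are hypothetical names, and `stub_llm` is a stand-in for whatever real model call you would use. The point is simply that each step receives the previous step's output as context.

```python
from typing import Callable, List


def run_chain(steps: List[str], llm: Callable[[str], str], context: str = "") -> List[str]:
    """Run prompts in order, feeding each step the previous step's output."""
    outputs = []
    previous = context
    for step in steps:
        # Attach the prior output (if any) so the model can build on it.
        prompt = f"{step}\n\nContext:\n{previous}" if previous else step
        previous = llm(prompt)
        outputs.append(previous)
    return outputs


def stub_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call; it only reports the prompt size.
    return f"[response to a {len(prompt)}-character prompt]"


if __name__ == "__main__":
    steps = [
        "Write a summary of the given article on climate change.",
        "Summarize the main points in a concise paragraph.",
        "Based on the information provided, write a summary in 3-4 sentences.",
    ]
    for output in run_chain(steps, stub_llm, context="<article text goes here>"):
        print(output)
```

Swapping `stub_llm` for a real client should be the only change needed, since `run_chain` only assumes a function that takes a prompt string and returns a response string.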

Agents & Plugins

It’s true that LLMs can understand language, but they can’t be completely trusted when it comes to maths, for example. They are also not 100% accurate when it comes to factual information.

The only information they can learn from is their training data. This information can be out of date and is one-size-fits-all across applications. Furthermore, the only thing language models can do out of the box is emit text. This text can contain useful instructions, but to actually follow these instructions you need another process.

Though not a perfect analogy, plugins can be “eyes and ears” for language models, giving them access to information that is too recent, too personal, or too specific to be included in the training data. In response to a user’s explicit request, plugins can also enable language models to perform safe, constrained actions on their behalf, increasing the usefulness of the system overall.
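To make the "another process" idea concrete, here is a rough sketch of a dispatcher that reads the model's text and runs a tool when asked. Everything in it is an assumption for illustration: the `CALL name: argument` convention, the `TOOLS` registry, and the `calculator` tool are made up here and are not how ChatGPT plugins are actually wired. The calculator is deliberately limited to basic arithmetic, in the spirit of safe, constrained actions.

```python
import ast
import operator

# Only these four operators are allowed; anything else is rejected.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def calculator(expression: str) -> float:
    """Evaluate +, -, *, / arithmetic on numbers without calling eval()."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError("unsupported expression")
    return walk(ast.parse(expression, mode="eval").body)


# Hypothetical tool registry: tool name -> function taking one string argument.
TOOLS = {"calculator": calculator}


def dispatch(model_output: str) -> str:
    """Run a tool if the model's text requests one; otherwise pass the text through."""
    if model_output.startswith("CALL "):
        name, _, argument = model_output[len("CALL "):].partition(":")
        tool = TOOLS.get(name.strip())
        if tool is None:
            return f"unknown tool: {name.strip()}"
        return str(tool(argument.strip()))
    return model_output
```

With this in place, the model never does the arithmetic itself. It only emits something like `CALL calculator: 2 + 3 * 4`, and the surrounding program computes the answer.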

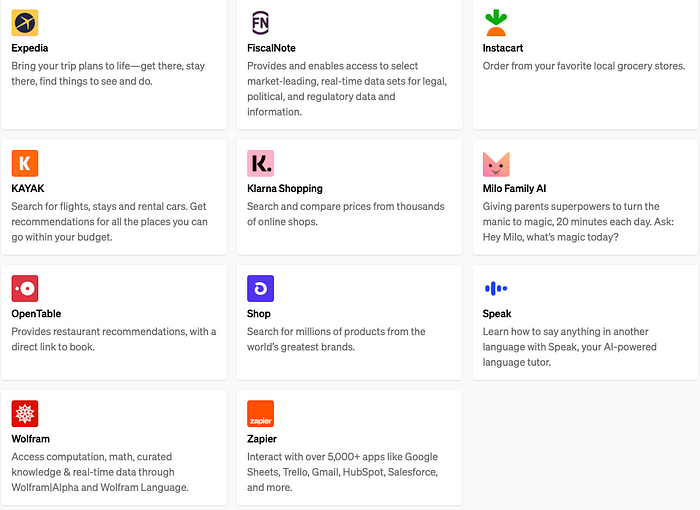

Below are some of the plugins that OpenAI offers with its ChatGPT Plus subscription.

ChatGPT Plugins — OpenAI:

Agents and plugins like these help harness the real power of LLMs.

User Segmentation

User segmentation in prompt engineering means tailoring prompts to specific user groups based on their characteristics, preferences, or goals. By customizing prompts for different user segments, you can provide more targeted and relevant experiences. Here are some steps to consider when segmenting users for prompt engineering:

- Identify User Segments: Start by identifying the different user segments or groups that interact with your system or application. Consider factors such as demographics, user preferences, skill levels, or specific use cases. For example, you might have segments like beginners, experts, students, professionals, or users with different language backgrounds.

- Understand User Needs: Analyze the needs, goals, and expectations of each user segment. Consider what tasks they want to accomplish, the type of information they seek, and any specific challenges they may have. This understanding will help you create prompts that align with their requirements.

- Define Segment-Specific Objectives: Determine the objectives or outcomes you want to achieve for each user segment. For instance, you may aim to provide concise explanations for beginners, in-depth analysis for experts, or practical examples for professionals. Clear objectives will guide the prompt engineering process.

- Craft Segment-Specific Prompts: Develop prompts that resonate with each user segment’s characteristics and objectives. Consider using language and terminology that is familiar and appropriate for each group. Tailor the prompts to address their specific needs, challenges, or interests. You can also leverage segment-specific context or examples in the prompts.

- Iterate and Refine: Continuously test, iterate, and refine the prompts based on user feedback and performance evaluation. Solicit input from users in each segment to gather insights and make adjustments as needed. Regularly analyzing user responses can help identify areas for improvement and allow you to fine-tune the prompts for better results.

- Monitor and Adapt: Monitor how users in different segments engage with the prompts and adapt accordingly. Keep track of user satisfaction, completion rates, or other relevant metrics to gauge the effectiveness of prompt segmentation. Adjust the prompts over time to ensure they continue to meet the evolving needs of each user segment.

Segmenting users while prompt engineering allows you to personalize the experience, improve user engagement, and deliver more targeted results. By understanding the characteristics and objectives of each user segment, you can create prompts that better align with their specific requirements and enhance their interaction with the system or application.
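As a small illustration of segment-specific prompts, the sketch below keeps one instruction per segment and prepends it to the user's question. The segment names and instruction wording are my own assumptions, loosely based on the beginner, expert, and professional examples above, and `build_prompt` is a hypothetical helper rather than part of any library.

```python
# Hypothetical per-segment instructions; adjust the wording to your own users.
SEGMENT_INSTRUCTIONS = {
    "beginner": "Explain concisely in plain language and avoid jargon.",
    "expert": "Give an in-depth analysis and assume familiarity with the terminology.",
    "professional": "Focus on practical examples that can be applied at work.",
}

# Used when a user's segment is unknown or not listed above.
DEFAULT_SEGMENT = "beginner"


def build_prompt(question: str, segment: str) -> str:
    """Prefix the question with the instruction for the user's segment."""
    instruction = SEGMENT_INSTRUCTIONS.get(segment, SEGMENT_INSTRUCTIONS[DEFAULT_SEGMENT])
    return f"{instruction}\n\nQuestion: {question}"


if __name__ == "__main__":
    print(build_prompt("What is prompt chaining?", "expert"))
```

Keeping the instructions in one table makes the iterate-and-refine step easier, because changing the wording for one segment does not touch the others.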

Prompt 3: What can possibly go wrong?

Well, LLMs come with their own problems, but that’s a topic for another discussion. For now, let’s look at some common challenges in prompt engineering:

- Generalization: Prompt engineering aims to achieve generalized behavior from language models. However, ensuring that prompts work consistently across various inputs and scenarios can be difficult. Prompts may need repeated adjustment and iteration before they perform robustly and reliably.

- Data and Bias: As with training LLMs, prompt engineering is affected by biases present in the training data, and a biased prompt can itself lead to biased or skewed responses. It’s crucial to review and validate prompts for potential biases and to ensure fairness and inclusivity.

- Evaluation and Iteration: Evaluating the effectiveness of prompts can be complex. Determining the metrics, establishing appropriate test sets, and collecting user feedback require careful planning and execution. How do you determine whether prompt A is better than prompt B? Is the new prompt safe to ship? Additionally, iterating on prompts based on evaluation results and user feedback can be time-consuming and resource-intensive.

- Interpretability and Explainability: Prompt engineering can introduce complexity to the interpretability of the system. It may be challenging to explain the specific influence of prompts on the model’s behavior, especially in black-box models. Striving for transparency and interpretability is important to ensure user trust.

- System Complexity: Incorporating prompt engineering into existing systems or workflows may introduce additional complexity. Integration with external agents, plugins, or chatbot frameworks requires careful coordination and development effort.

- Breakages: What does a failure even mean? How do you detect failures? It is very difficult to claim that a prompt has generated a correct response. Moreover, what metrics do we use to identify a correct response?
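One very simple way to start answering the "is prompt A better than prompt B?" question is to score both prompts against the same small test set. The sketch below is an assumption-heavy starting point, not a recommended metric: it treats a response as correct if it contains an expected phrase, which is crude, and `pass_rate`, `better_prompt`, and the `{input}` placeholder convention are all hypothetical names I made up for this example.

```python
from typing import Callable, List, Tuple

# Each test case is (input text, phrase the response is expected to contain).
TestCase = Tuple[str, str]


def pass_rate(template: str, cases: List[TestCase], llm: Callable[[str], str]) -> float:
    """Return the fraction of cases whose response contains the expected phrase."""
    if not cases:
        raise ValueError("need at least one test case")
    passed = 0
    for text, expected in cases:
        # The template must contain an {input} placeholder and no other braces.
        response = llm(template.format(input=text))
        if expected.lower() in response.lower():
            passed += 1
    return passed / len(cases)


def better_prompt(a: str, b: str, cases: List[TestCase], llm: Callable[[str], str]) -> str:
    """Return whichever template has the higher pass rate (ties go to a)."""
    return a if pass_rate(a, cases, llm) >= pass_rate(b, cases, llm) else b
```

A keyword check like this will miss responses that are correct but worded differently, so in practice it would be one signal among several, alongside user feedback and manual review.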

Overcoming these challenges often involves a combination of experimentation, iteration, continuous evaluation, and collaboration between prompt engineers, data scientists, and domain experts. By addressing these challenges, prompt engineering can unlock the full potential of language models and enhance their performance in specific applications and contexts.

Well, that’s all for now. I will be posting more on prompt engineering, LLMOps, and MLOps in my blog series. So, stay tuned and feel free to follow me and subscribe to my blog for regular updates.

Find me on LinkedIn.