Short introduction to AWS CDK

AWS CDK is a powerful tool for automating almost every aspect of workload deployments in AWS environments.

AWS CDK can be considered a proxy to CloudFormation in the sense that all your CDK code gets translated into CloudFormation templates and submitted to the CloudFormation service for execution.

CDK provides constructs to model the use of AWS services, organised in a hierarchical structure based on the level of abstraction:

- L1 constructs (whose names start with Cfn) map 1:1 to CloudFormation resources. They are the basic building blocks used by higher-level constructs.

- L2 constructs provide a higher level of abstraction over L1 constructs, simplifying the modelling of complex infrastructure with a more expressive set of entities.

- L3 constructs use L2 and L1 constructs to build skeleton solutions based on recognised patterns.

It is worth considering certain aspects:

- L1 constructs are based on a specific version of the CloudFormation specification, so a new feature only becomes visible once it is included in a CloudFormation specification that CDK has also adopted.

- L2 constructs are typically opinionated: certain assumptions are made to reduce the complexity of the construct and simplify its use, which can leave some L1 properties either inaccessible or hard-wired.

- When using an L2 construct, it is always possible to “go down” to the corresponding L1 construct and modify the low-level configuration. This is typically done through “escape hatches” which, although very useful at times, must be used with caution since they can break the internal behaviour of the L2 construct.

For a broader discussion on these topics, I would recommend reading the section “Abstractions and escape hatches” of the CDK guide.

S3 replication

Replicating S3 bucket objects is an important part of many business continuity and disaster recovery use cases for AWS deployments, especially in cross-region configurations.

Unfortunately, at the time of writing (version 2.57.0), AWS CDK does not provide an L2 or higher-level construct that supports S3 bucket replication, cross-region or otherwise.

However, since most configuration properties are available in the corresponding L1 construct, it is not too difficult to build your own L2 construct that supports replication by using an escape hatch.

A possible implementation of this strategy is the following:

    import { Bucket, BucketProps, CfnBucket, CfnBucketProps } from "aws-cdk-lib/aws-s3";
    import { Construct } from "constructs";

    export class ReplicatedBucket extends Bucket {
      constructor(
        scope: Construct,
        id: string,
        // Standard Bucket props plus a mandatory L1 replication configuration
        props: BucketProps & Required<Pick<CfnBucketProps, 'replicationConfiguration'>>
      ) {
        // Separate the L1-only property from the props that Bucket understands
        const { replicationConfiguration, ...bucketProps } = props;
        super(scope, id, bucketProps);
        // Escape hatch: set the property directly on the underlying L1 construct
        (this.node.defaultChild as CfnBucket).replicationConfiguration = replicationConfiguration;
      }
    }

In the snippet, I created an L2 construct, ReplicatedBucket, that extends the existing L2 construct Bucket with a couple of additions:

- the configuration properties bundle “props” is extended to include the replication configuration from the L1 CfnBucket properties bundle

- the constructor is implemented to extract the required “replicationConfiguration” property and use it to set the attribute value for the L1 CfnBucket object

In particular, the following line in the constructor

(this.node.defaultChild as CfnBucket).replicationConfiguration = replicationConfiguration;

uses the escape hatch “(this.node.defaultChild as CfnBucket)” to reach the underlying L1 construct and set the low-level replication configuration property to the value passed as an argument.
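The way the props bundle is composed can be tried out without CDK. The following is a minimal sketch of the same type pattern, where HighLevelProps and LowLevelProps are simplified, hypothetical stand-ins for BucketProps and CfnBucketProps (they are not the real CDK types):

```typescript
// Simplified, hypothetical stand-ins for BucketProps and CfnBucketProps.
interface HighLevelProps {
  bucketName?: string;
  versioned?: boolean;
}

interface LowLevelProps {
  bucketName?: string;
  replicationConfiguration?: { role: string; rules: unknown[] };
}

// Same composition as in ReplicatedBucket: every high-level prop, plus one
// low-level property that is optional in the L1 bundle but mandatory here.
type ReplicatedProps = HighLevelProps &
  Required<Pick<LowLevelProps, "replicationConfiguration">>;

// Separate the low-level property from the props the parent constructor accepts.
function splitProps(props: ReplicatedProps) {
  const { replicationConfiguration, ...highLevelProps } = props;
  return { replicationConfiguration, highLevelProps };
}

const parts = splitProps({
  bucketName: "docs-example",
  versioned: true,
  replicationConfiguration: {
    role: "arn:aws:iam::111111111111:role/example", // placeholder ARN
    rules: [],
  },
});

// The replication settings are no longer part of the high-level props.
console.log(parts.highLevelProps);
```

Omitting replicationConfiguration from the argument of splitProps is a compile-time error, which is the effect that Required<Pick<...>> has on the ReplicatedBucket constructor as well.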

Cross-region setup for replication

This article focuses on cross-region replication for an S3 bucket, which is typically deployed through a CDK pipeline configured for cross-region deployment.

The order in which intertwined resources are created is very important in cross-region deployments, and getting it wrong can easily lead to circular references that cannot be resolved.

For example, in this context we will need to create a bucket that contains a replication rule that refers to a role that refers to the bucket, leading to a circular reference.

A viable solution is to use naming conventions wherever possible and applicable: referring to a resource by its conventional name defers resolution to run time and allows the deployment to proceed without a build-time circular reference.
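To make the problem concrete, the dependencies between resources can be modelled as a small graph. This is only an illustrative sketch and not how CDK or CloudFormation work internally; the resource names and the hasCycle helper are hypothetical:

```typescript
// Each resource maps to the resources it references through object references.
type DependencyGraph = Record<string, string[]>;

// Depth-first search that returns true when the graph contains a cycle.
function hasCycle(graph: DependencyGraph): boolean {
  const visiting = new Set<string>();
  const done = new Set<string>();

  const visit = (node: string): boolean => {
    if (done.has(node)) return false;
    if (visiting.has(node)) return true; // back edge: a cycle was found
    visiting.add(node);
    for (const next of graph[node] ?? []) {
      if (visit(next)) return true;
    }
    visiting.delete(node);
    done.add(node);
    return false;
  };

  return Object.keys(graph).some((node) => visit(node));
}

// The bucket references the role, and the role policy references the bucket.
const withObjectReferences: DependencyGraph = {
  sourceBucket: ["replicationRole"],
  replicationRole: ["sourceBucket"],
};

// The role policy uses ARN strings built from a naming convention,
// so the role no longer depends on the bucket resource.
const withNamingConvention: DependencyGraph = {
  sourceBucket: ["replicationRole"],
  replicationRole: [],
};

console.log(hasCycle(withObjectReferences)); // true
console.log(hasCycle(withNamingConvention)); // false
```

Replacing one object reference with a plain string removes one edge from the graph, which is enough to break the cycle.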

As stated before, the replication configuration needs a replication role with permissions on the source and destination buckets and their objects, like the following:

    import { Stack } from "aws-cdk-lib";
    import { PolicyStatement, Role, ServicePrincipal } from "aws-cdk-lib/aws-iam";

    // Bucket ARNs derived from the naming convention docs-<account>-<region>
    const sourceBucket = `arn:aws:s3:::docs-${Stack.of(this).account}-${config.primaryRegion}`;
    const sourceObjects = `${sourceBucket}/*`;
    const destinationBucket = `arn:aws:s3:::docs-${Stack.of(this).account}-${config.secondaryRegion}`;
    const destinationObjects = `${destinationBucket}/*`;

    // Role assumed by the S3 service to perform the replication
    const replicaRole = new Role(this, "docsReplicationRole", {
      roleName: "s3-replication-role",
      assumedBy: new ServicePrincipal("s3.amazonaws.com"),
    });

    // Read access to buckets, object versions and their metadata
    replicaRole.addToPolicy(
      new PolicyStatement({
        actions: [
          "s3:ListBucket",
          "s3:GetReplicationConfiguration",
          "s3:GetObjectVersionForReplication",
          "s3:GetObjectVersionAcl",
          "s3:GetObjectVersionTagging",
          "s3:GetObjectRetention",
          "s3:GetObjectLegalHold",
        ],
        resources: [
          sourceBucket,
          sourceObjects,
          destinationBucket,
          destinationObjects,
        ],
      })
    );

    // Replication actions and ownership override on the objects
    replicaRole.addToPolicy(
      new PolicyStatement({
        actions: ["s3:Replicate*", "s3:ObjectOwnerOverrideToBucketOwner"],
        resources: [sourceObjects, destinationObjects],
      })
    );

    // Encrypt replicas with keys in the secondary region
    replicaRole.addToPolicy(
      new PolicyStatement({
        actions: ["kms:Encrypt"],
        resources: [
          `arn:aws:kms:${config.secondaryRegion}:${Stack.of(this).account}:key/*`,
        ],
      })
    );

    // Decrypt source objects with keys in the primary region
    replicaRole.addToPolicy(
      new PolicyStatement({
        actions: ["kms:Decrypt"],
        resources: [
          `arn:aws:kms:${config.primaryRegion}:${Stack.of(this).account}:key/*`,
        ],
      })
    );

In this role definition, I heavily used back-ticks for string templates and naming conventions to calculate in advance the names of the source and destination buckets, even before they are created.

This strategy is possible because the policy statement is evaluated when the resource is accessed and not when the role is created, deferring the bucket lookup to a later stage.

This avoids the circular reference problem so I can create the role before the buckets.
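The convention itself is just string manipulation, so it can be isolated in a few helpers. The sketch below uses hypothetical helper names and placeholder account and region values; only the “docs-account-region” pattern comes from the role definition above:

```typescript
// Hypothetical helpers built around the "docs-<account>-<region>" convention.
function docsBucketName(account: string, region: string): string {
  return `docs-${account}-${region}`;
}

function bucketArn(bucketName: string): string {
  // S3 bucket ARNs have empty region and account segments.
  return `arn:aws:s3:::${bucketName}`;
}

function objectsArn(bucketName: string): string {
  return `${bucketArn(bucketName)}/*`;
}

const exampleAccount = "111111111111"; // placeholder account id
const sourceName = docsBucketName(exampleAccount, "eu-west-1");
const destinationName = docsBucketName(exampleAccount, "eu-central-1");

console.log(bucketArn(sourceName)); // arn:aws:s3:::docs-111111111111-eu-west-1
console.log(objectsArn(destinationName)); // arn:aws:s3:::docs-111111111111-eu-central-1/*
```

Because the names are computed rather than read from a bucket object, the same helpers can be called from the role definition and from both regional stacks without creating a dependency between them.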

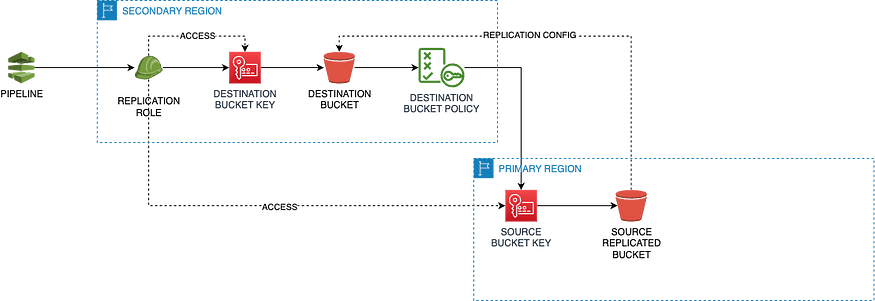

The high-level deployment pipeline structure is as following:

From an implementation perspective, the same code is executed in two stacks, one per region, and branches on the nature of the stack (primary or secondary):

    // Imports required by this method, which lives inside a Stack or Construct subclass
    import { Duration, RemovalPolicy, Stack } from "aws-cdk-lib";
    import { AccountPrincipal, ArnPrincipal, IPrincipal, PolicyDocument, PolicyStatement, Role } from "aws-cdk-lib/aws-iam";
    import { Key } from "aws-cdk-lib/aws-kms";
    import { BlockPublicAccess, Bucket, BucketAccessControl, BucketEncryption, ObjectOwnership, StorageClass } from "aws-cdk-lib/aws-s3";

    protected setupReplicatedEncryptedBucket(config: Config) {
      let bucket: Bucket;
      // Destination ARNs derived from the naming convention docs-<account>-<region>
      const destinationBucket = `arn:aws:s3:::docs-${Stack.of(this).account}-${config.secondaryRegion}`;
      const destinationObjects = `${destinationBucket}/*`;
      // Look up the replication role by name to avoid a build-time reference
      const replicationRole = Role.fromRoleName(this, 'replicationRole', 's3-replication-role');

      if (!config.primary) {
        // Secondary stack: encryption key and destination bucket
        const destinationKmsKey = new Key(this, `s3-cross-account-replication-dest-key`, {
          alias: "s3-cross-account-replication-destination-key",
          description: "Key used for KMS Encryption for the destination s3 bucket for cross account replication",
          removalPolicy: RemovalPolicy.DESTROY,
          pendingWindow: Duration.days(7),
          policy: new PolicyDocument({
            statements: [
              new PolicyStatement({
                sid: "Enable IAM User Permissions",
                principals: [new ArnPrincipal(`arn:aws:iam::${Stack.of(this).account}:root`)],
                actions: ["kms:*"],
                resources: ["*"],
              }),
              new PolicyStatement({
                sid: "Enable Replication Permissions",
                principals: [replicationRole],
                actions: [
                  "kms:Encrypt",
                  "kms:Decrypt",
                  "kms:ReEncrypt*",
                  "kms:GenerateDataKey*",
                  "kms:DescribeKey",
                ],
                resources: ["*"],
              }),
            ],
          }),
          enableKeyRotation: true,
        });

        bucket = new Bucket(this, 'docs', {
          versioned: true,
          removalPolicy: RemovalPolicy.DESTROY,
          // The destination bucket is named after the secondary region,
          // matching the ARN used in the replication rule of the primary stack
          bucketName: `docs-${Stack.of(this).account}-${config.secondaryRegion}`,
          bucketKeyEnabled: true,
          encryption: BucketEncryption.KMS,
          objectOwnership: ObjectOwnership.BUCKET_OWNER_PREFERRED,
          blockPublicAccess: new BlockPublicAccess(BlockPublicAccess.BLOCK_ALL),
          encryptionKey: destinationKmsKey,
        });

        interface PolicyEntry { sid: string; actions: string[]; resources: string[]; principals?: IPrincipal[] }

        // A bucket policy can only reference the bucket it is attached to,
        // so the resources are the destination bucket and its objects
        const perms: PolicyEntry[] = [
          { sid: "Set Admin Access", actions: ["s3:*"], resources: [destinationBucket, destinationObjects], principals: [new AccountPrincipal(Stack.of(this).account)] },
          { sid: "Set permissions for Objects", actions: ["s3:ReplicateObject", "s3:ReplicateDelete"], resources: [destinationObjects] },
          { sid: "Set permissions on bucket", actions: ["s3:List*", "s3:GetBucketVersioning", "s3:PutBucketVersioning"], resources: [destinationBucket] },
          { sid: "Allow ownership change", actions: ["s3:Replicate*", "s3:ObjectOwnerOverrideToBucketOwner", "s3:GetObjectVersionTagging"], resources: [destinationObjects] }
        ];

        perms.forEach(perm => {
          // Statements without an explicit principal apply to the replication role
          if (!perm.principals) {
            perm.principals = [replicationRole];
          }
          bucket.addToResourcePolicy(new PolicyStatement(perm));
        });
      } else {
        // Primary stack: encryption key and source bucket with replication
        const sourceKmsKey = new Key(this, "s3-cross-account-replication-source-key", {
          alias: 's3-cross-account-replication-source-key',
          description: "Key used for KMS Encryption for the source s3 bucket for cross account replication",
          enableKeyRotation: true,
          removalPolicy: RemovalPolicy.DESTROY,
          pendingWindow: Duration.days(7),
          policy: new PolicyDocument({
            statements: [
              new PolicyStatement({
                sid: "Enable IAM User Permissions",
                principals: [new ArnPrincipal(`arn:aws:iam::${Stack.of(this).account}:root`)],
                actions: ["kms:*"],
                resources: ["*"],
              }),
              new PolicyStatement({
                sid: "Enable Replication Permissions",
                principals: [replicationRole],
                actions: ["kms:Decrypt", "kms:DescribeKey"],
                resources: ["*"],
              }),
            ],
          }),
        });

        bucket = new ReplicatedBucket(this, 'docs', {
          bucketName: `docs-${Stack.of(this).account}-${config.primaryRegion}`,
          accessControl: BucketAccessControl.PRIVATE,
          publicReadAccess: false,
          blockPublicAccess: new BlockPublicAccess(BlockPublicAccess.BLOCK_ALL),
          bucketKeyEnabled: true,
          encryption: BucketEncryption.KMS,
          encryptionKey: sourceKmsKey,
          objectOwnership: ObjectOwnership.BUCKET_OWNER_PREFERRED,
          removalPolicy: RemovalPolicy.DESTROY,
          versioned: true,
          replicationConfiguration: {
            role: replicationRole.roleArn,
            rules: [{
              status: 'Enabled',
              // Note: this matches only keys that start with '/';
              // an empty prefix ('') would select every object
              filter: { prefix: '/' },
              priority: 1,
              deleteMarkerReplication: { status: 'Enabled' },
              destination: {
                bucket: destinationBucket,
                accessControlTranslation: { owner: "Destination" },
                account: Stack.of(this).account,
                encryptionConfiguration: { replicaKmsKeyId: `arn:aws:kms:${config.secondaryRegion}:${Stack.of(this).account}:alias/s3-cross-account-replication-destination-key` },
                // RTC configuration
                metrics: { status: 'Enabled', eventThreshold: { minutes: 15 } },
                replicationTime: { status: 'Enabled', time: { minutes: 15 } }
              },
              sourceSelectionCriteria: { sseKmsEncryptedObjects: { status: "Enabled" } }
            }]
          },
          lifecycleRules: [{ enabled: true, transitions: [{ storageClass: StorageClass.INTELLIGENT_TIERING, transitionAfter: Duration.days(35) }] }]
        });
      }

      return bucket;
    }

In the first conditional branch (taken when config.primary is false, that is, in the secondary stack), the code creates the encryption key and the destination bucket, which is based on the standard L2 construct Bucket.

The second branch (the primary stack), on the other hand, uses the custom L2 construct ReplicatedBucket that I created, so that the source bucket carries the replication configuration.

In this particular example, the replication configuration block also includes the Replication Time Control (RTC) configuration (the metrics and replicationTime properties), which targets replication within a 15-minute window, as required by the overall Recovery Point Objective (RPO) of the solution this snippet is extracted from.

The bucket configuration is completed by a lifecycle rule (the lifecycleRules property) that moves objects to S3 Intelligent-Tiering after 35 days, for better cost optimisation.

Implementation details

Some elements of the code shown above are worth a closer look.

- Both source and destination buckets must have versioning enabled for the replication configuration to even be accepted by CloudFormation.

- Both source and destination encryption keys must be accessible through the replication role so that the S3 control plane can implement replication by decrypting objects from the source and re-encrypting them at the destination.

- Objects are copied with their metadata and permissions so, in case the deployment is also cross-account, it’s important to reassign ownership at the destination with

    accessControlTranslation: { owner: "Destination" }

- The RTC configuration is implemented with both required configuration elements:

    metrics: { status: 'Enabled', eventThreshold: { minutes: 15 }},
    replicationTime: { status: 'Enabled', time: { minutes: 15}}
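Some of these constraints can be checked before deploying. The following is a minimal sketch of such a check, not part of the original solution: the interfaces are simplified, hypothetical stand-ins for the L1 property shapes, and the 15-minute check assumes that 15 is the only value S3 accepts for RTC, as the S3 documentation states at the time of writing.

```typescript
// Simplified, hypothetical stand-ins for the L1 replication properties.
interface MinutesValue {
  minutes: number;
}

interface DestinationSketch {
  bucket: string;
  metrics?: { status: "Enabled" | "Disabled"; eventThreshold?: MinutesValue };
  replicationTime?: { status: "Enabled" | "Disabled"; time: MinutesValue };
}

interface SourceBucketSketch {
  versioned: boolean;
  destinations: DestinationSketch[];
}

// Returns a list of problems; an empty list means every check passed.
function checkReplicationSetup(
  source: SourceBucketSketch,
  destinationVersioned: boolean
): string[] {
  const problems: string[] = [];
  if (!source.versioned) problems.push("source bucket must be versioned");
  if (!destinationVersioned) problems.push("destination bucket must be versioned");

  for (const dest of source.destinations) {
    if (dest.replicationTime?.status !== "Enabled") continue; // RTC not in use
    // RTC needs replication metrics enabled alongside replicationTime.
    if (dest.metrics?.status !== "Enabled") {
      problems.push(`${dest.bucket}: RTC requires metrics to be enabled`);
    }
    // Assumption: 15 minutes is the only accepted RTC threshold.
    if (dest.replicationTime.time.minutes !== 15) {
      problems.push(`${dest.bucket}: RTC time must be 15 minutes`);
    }
  }
  return problems;
}

const exampleDestination = "arn:aws:s3:::docs-111111111111-eu-central-1"; // placeholder

const validSetup = checkReplicationSetup(
  {
    versioned: true,
    destinations: [{
      bucket: exampleDestination,
      metrics: { status: "Enabled", eventThreshold: { minutes: 15 } },
      replicationTime: { status: "Enabled", time: { minutes: 15 } },
    }],
  },
  true
);

const brokenSetup = checkReplicationSetup(
  {
    versioned: false,
    destinations: [{
      bucket: exampleDestination,
      replicationTime: { status: "Enabled", time: { minutes: 15 } },
    }],
  },
  true
);

console.log(validSetup.length, brokenSetup.length); // 0 2
```

A check like this only catches configuration mistakes early; CloudFormation and S3 remain the final authority on what is accepted.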

Notes on the cross-region pipeline

To keep this article short and focused, I did not describe in depth the implementation of the cross-region pipeline, which will be the subject of an upcoming article fully dedicated to it.

Conclusions

AWS CDK is continuously improved and extended thanks to its huge community and dedicated AWS team, but the configuration property you need may still not be available.

Thanks to L1 constructs, escape hatches and the great extensibility of the CDK tool, it is always possible to overcome temporary limitations, at least until the next CDK release!

What’s next

I’m preparing a series of articles as byproducts of some technical work I have been doing lately, still in the area of cross-region, cross-account deployments for highly resilient workloads.

In particular, I plan to publish on the following topics:

- Strategy for cross-region cross-account CDK deployment pipeline

- ElastiCache cross-region deployment with CDK

- Cross-region Aurora Serverless V2 with CDK

- Cross-region cross-account shared transit gateway

So stay tuned!
