Amazon Elastic Kubernetes Service (Amazon EKS) is a fully managed Kubernetes service from AWS. It provides a highly available and scalable control plane that runs across multiple Availability Zones, thus eliminating a single point of failure.

Note: New Kubernetes versions have introduced significant changes. It is therefore recommended that you test the behavior of your applications against the new Kubernetes version in a non-production environment before you update your production clusters. The upgrade process steps are as follows:

- Upgrade EKS cluster version (1.18 to 1.19)

- Update system component versions

- Select a new AMI for worker nodes and launch node group

- Drain pods from the old worker nodes

- Cleanup

Step 1. Upgrade EKS cluster version

Before starting the EKS upgrade, make sure your version of kubectl is at least as high as the Kubernetes version you want to upgrade to. The next step is to update your EKS control plane using the AWS CLI, Terraform, or whichever tool you use for cluster management.

aws eks update-cluster-version \
--region <region-code> \
--name <my-cluster> \
--kubernetes-version <1.19>

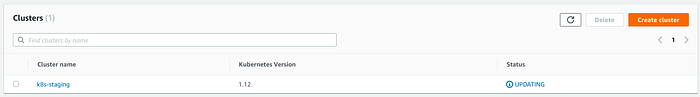

The EKS cluster version update can take up to 20 minutes, and you can track its progress in the AWS console.
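If you would rather follow the update from a terminal than from the console, something like the sketch below could work. It assumes the AWS CLI is installed and configured; the function name `wait_for_cluster_update` is made up for this example, and the cluster name and region are values you supply.

```shell
# Sketch: block until the control plane update has finished, then print
# the Kubernetes version the cluster reports.
# Arguments: <cluster-name> <region-code> (both supplied by you).
wait_for_cluster_update() {
  local cluster="$1" region="$2"

  # The cluster status is UPDATING while the upgrade runs; this waiter
  # polls until the status is ACTIVE again.
  aws eks wait cluster-active --region "$region" --name "$cluster" || return 1

  # Print the control plane version now reported by EKS.
  aws eks describe-cluster --region "$region" --name "$cluster" \
    --query 'cluster.version' --output text
}
```

You would call it as `wait_for_cluster_update <my-cluster> <region-code>` right after starting the update.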

Step 2. Update system component versions

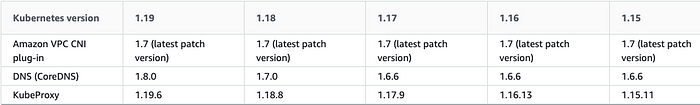

After updating your cluster, its recommend that you update your add-ons to the versions listed in the following table for the new Kubernetes version that you’re updating to.

To update these components, you can use either kubectl or your existing CI/CD pipelines.

Apart from these components, if you run the cluster autoscaler, VPC controller, metrics-server, and so on, it is recommended that you upgrade them to match your cluster version.
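As a rough illustration of the kubectl route, the sketch below reads the image a kube-system workload currently runs and points it at a new one. The helper names `addon_image` and `set_addon_image` are invented for this example, and the workload names used in the usage note (the `kube-proxy` and `aws-node` DaemonSets and the `coredns` Deployment) are assumptions about a default EKS cluster, so check what your cluster actually runs.

```shell
# Sketch: inspect and update the image of a kube-system workload.

# Print the image of the first container of a workload.
# Arguments: <kind> <name>, for example: daemonset kube-proxy
addon_image() {
  local kind="$1" name="$2"
  kubectl get "$kind" "$name" -n kube-system \
    -o jsonpath='{.spec.template.spec.containers[0].image}'
}

# Point one container of a workload at a new image.
# Arguments: <kind> <name> <container> <image>
# The image (registry, repository and tag) should be the one that matches
# the version listed for your target Kubernetes version.
set_addon_image() {
  local kind="$1" name="$2" container="$3" image="$4"
  kubectl set image "$kind/$name" -n kube-system "$container=$image"
}
```

For example, `addon_image daemonset kube-proxy` shows what is running today, and `set_addon_image daemonset kube-proxy kube-proxy <new-image>` rolls out the new version, where `<new-image>` is a placeholder you fill in yourself.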

Step 3. Select a new AMI for worker nodes and launch node group

The obvious approach is to launch a new worker node group and then terminate the old one. But hold on: done carelessly, this approach can cause the issues below and dig a hole for you. When you shut down the old nodes, you take down the pods running on them. What if those pods need to clean up for a graceful shutdown? And if you shut down all the nodes at the same time, you could have a brief outage while the pods are relaunched on the new nodes.
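For the AMI selection part of this step, one possible sketch is shown below. It assumes your worker nodes use the Amazon EKS optimized Amazon Linux 2 AMI, whose recommended image ID AWS publishes as a public SSM parameter; if you use a different AMI family or managed node groups that pick the AMI for you, this lookup does not apply. The function names are made up for this example.

```shell
# Sketch: find the recommended EKS optimized AMI and check node versions.

# Print the recommended Amazon Linux 2 AMI ID for a Kubernetes version.
# Arguments: <kubernetes-version> <region-code>, for example: 1.19 <region-code>
recommended_ami() {
  local k8s_version="$1" region="$2"
  aws ssm get-parameter --region "$region" \
    --name "/aws/service/eks/optimized-ami/${k8s_version}/amazon-linux-2/recommended/image_id" \
    --query 'Parameter.Value' --output text
}

# List every node with the kubelet version it reports, so you can confirm
# the new node group has joined before touching the old one.
list_node_versions() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,VERSION:.status.nodeInfo.kubeletVersion'
}
```

Once the new node group is launched with that AMI, `list_node_versions` should show old and new nodes side by side, each with its own kubelet version.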

Step 4. Drain pods from the old worker nodes

What we want is to gracefully migrate the running pods to the new worker nodes. The first step is to take the node names returned by kubectl get nodes and run kubectl taint nodes on each old node, which prevents new pods from being scheduled on it:

kubectl taint nodes <node-name> key=value:NoSchedule
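With more than a couple of old nodes, you will probably want to loop over them. A minimal sketch, assuming you pass the old node names yourself (the function name `taint_old_nodes` is made up for this example):

```shell
# Sketch: apply the NoSchedule taint to every node name given as an argument.
# The key=value pair is arbitrary, exactly as in the single-node command.
taint_old_nodes() {
  local node
  for node in "$@"; do
    kubectl taint nodes "$node" key=value:NoSchedule
  done
}
```

You would call it as `taint_old_nodes <old-node-1> <old-node-2>`, using the names printed by kubectl get nodes.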

Now use kubectl drain, which handles pod eviction gracefully. Kubernetes provides features that help minimize downtime during eviction.
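A drain loop over the old nodes might look like the sketch below. kubectl drain evicts pods through the eviction API, so it honors each pod's termination grace period and any PodDisruptionBudgets you have defined. The function name `drain_old_nodes` is made up for this example, and the choice to stop at the first failure is an assumption about how cautious you want to be.

```shell
# Sketch: drain the old nodes one at a time, stopping at the first failure.
drain_old_nodes() {
  local node
  for node in "$@"; do
    # --ignore-daemonsets: DaemonSet pods cannot move to another node, so
    # drain refuses to proceed unless told to skip them.
    # Pods that use emptyDir volumes also block the drain unless you pass
    # an extra flag; its name depends on your kubectl version
    # (--delete-local-data on older releases, --delete-emptydir-data on
    # newer ones), so it is left out here.
    kubectl drain "$node" --ignore-daemonsets || return 1
  done
}
```

Draining one node at a time gives the evicted pods a chance to start on the new nodes before the next node is emptied.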

Step 5. Cleanup

Since all of your application pods are now rescheduled on the new worker nodes and running properly, we can plan to terminate the old worker node group via the AWS CLI or Terraform.

Reference links:

- https://docs.aws.amazon.com/eks/latest/userguide/update-cluster.html

- https://docs.aws.amazon.com/eks/latest/userguide/update-managed-node-group.html
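Here is a sketch of the cleanup step with the AWS CLI. It assumes the old workers are an EKS managed node group; a self-managed group would be removed through its own tooling instead (for example the stack or Terraform resource that created it). The function names are made up for this example.

```shell
# Sketch: confirm an old node is empty, then remove the old node group.

# List the pods still scheduled on a node. After a drain, only DaemonSet
# pods are expected to remain.
# Argument: <node-name>
pods_on_node() {
  kubectl get pods --all-namespaces -o wide \
    --field-selector "spec.nodeName=$1"
}

# Delete the old managed node group.
# Arguments: <cluster-name> <nodegroup-name> <region-code>
delete_old_nodegroup() {
  local cluster="$1" nodegroup="$2" region="$3"
  aws eks delete-nodegroup --region "$region" \
    --cluster-name "$cluster" --nodegroup-name "$nodegroup"
}
```

Running `pods_on_node <old-node>` for each old node before calling `delete_old_nodegroup` is a cheap last check that nothing important is still running there.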

Conclusion

We've now successfully upgraded the Kubernetes cluster on EKS to a new version of Kubernetes, updated the kube-system components to newer versions, and rotated out the worker nodes so that they also run the new version of Kubernetes.
