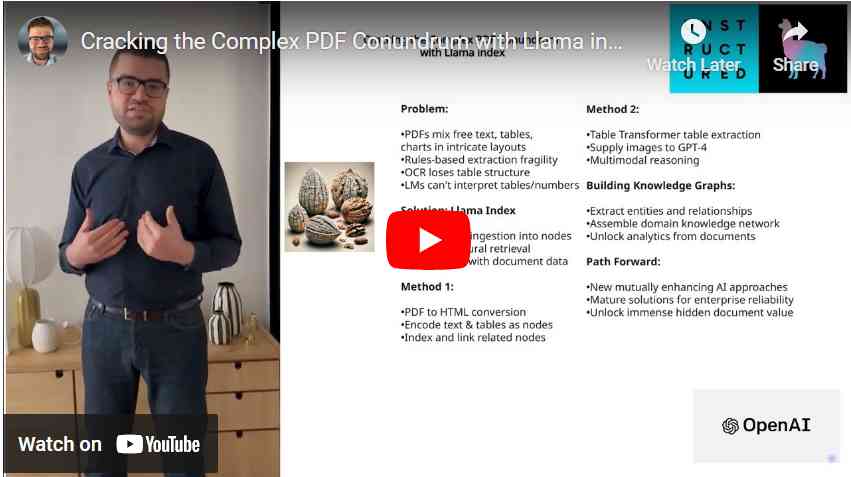

Handling complex PDF documents has long been a thorny data analytics challenge. PDFs seamlessly blend free-form text with embedded tabular data, charts, images, and more. This fluid mix of modalities makes automatic extraction of actionable insights an elusive goal.

Yet extracting analytics from multifaceted PDF documents could unlock tremendous latent value across business, research, and educational use cases. Within seemingly unstructured PDF pages lie troves of structured statistics waiting to be put to use.

Traditional rules-based extraction pipelines falter on even mildly complex layouts. Optical character recognition stumbles on interpreting tables as machine-readable data. Even powerful large language models struggle to contextualize numbers and statistics embedded in dense paragraphs.

Now a new class of data-centric AI techniques is emerging to finally crack this nut by strategically combining strengths across knowledge representation, computer vision, and language understanding. These modern symbiotic solutions show early promise in analyzing documents that have long resisted automated interrogation.

In this article, we dive deep into two PDF analytics methods powered by the Llama Index library for large language model applications. Both showcase the power of linking language with data to surface insights from complex documents. We analyze how each one ingests, represents, retrieves and reasons about text and tables within PDFs.

Finally, we explore the intriguing possibility these techniques open up for automating the construction of knowledge graphs from extracted PDF entities and relationships. The data fabric they weave could rapidly accelerate analytics and knowledge management for organizations mired in vital but inaccessible PDF troves.

Image by the author

# The Perplexing Nature of Multimodal PDFs

PDF documents present a vexing quagmire for analytics. Within a single PDF, quantitative tabular data effortlessly intermingles with prose-heavy passages, charts, images, diagrams, forms, and more. This fluid composite of modalities poses complex data extraction and interpretation challenges.

Yet for many indispensable business functions, reliably querying across free-form descriptions, statistics, visualizations, and other constituents locked within PDFs can spell the difference between decision-making paralysis and competitive advantage.

Unfortunately, the most widely adopted analytical approaches falter on precisely this kind of intricate document layout:

- Rule-based extraction pipelines crafted for consistency crumble when confronted with the wild variability of PDF formatting. Fragile screen-scraping scripts break from even trivial deviations.

- Optical character recognition (OCR) capably extracts text but discards critical tabular structure and context in the process. Tables become dense character strings that are hard to process computationally, devoid of rows, columns and datatypes.

- Large language models comprehend prose but lack the innate numeracy and graphic reasoning skills to contextualize tables, charts, diagrams and other visual encodings.
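
To make the OCR point in the list above concrete, here is a small sketch on made-up data. The table contents and the whitespace-splitting "parser" are illustrative assumptions, not output from any real OCR engine. It shows how rows that are unambiguous as structured cells become ambiguous once flattened into plain character strings.

```python
# Illustrative only: a tiny table as structured rows (made-up values).
rows = [
    ["Product line", "Units Q1", "Units Q2"],
    ["Desktop GPUs", "120", "95"],
    ["Laptops", "80", "110"],
]

# What plain text extraction effectively hands back: cells joined by spaces,
# with no record of where one cell ends and the next begins.
flattened = [" ".join(row) for row in rows]

# A naive attempt to recover the cells by splitting on whitespace.
recovered = [line.split() for line in flattened]

for original, guess in zip(rows, recovered):
    status = "ok" if original == guess else "structure lost"
    print(f"{len(original)} cells -> {len(guess)} tokens ({status})")
```

Only the row whose cells contain no spaces survives the round trip; any multi-word header or label breaks the column alignment.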

Bridging this unstructured text versus structured data divide requires a symbiotic fusion of capabilities — which until recently remained lacking.

Let’s analyze two emerging techniques built on new data-centric AI paradigms that are finally cracking the complex PDF conundrum by strategically combining complementary strengths.

# A Data-Centric Bridge for Language Models

Llama Index offers a powerful data layer purpose-built to amplify language model applications. Unlike general data stores, Llama Index structurally organizes enterprise data specifically to empower contextual language AI queries.

Two instrumental pillars of Llama Index’s design exhibit particular promise for extracting signals from multifaceted PDF documents:

Flexible Data Ingestion

Llama Index flexibly ingests text, tables, images, and more into versatile “node” container representations. Custom node parsers can encode enterprise domain knowledge to parse PDF elements into machine-readable, indexable units.

This facilitates rich indexing of the diverse textual, visual and layout components within PDF documents.
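
As a rough mental model of such a node container, consider the sketch below. It is not Llama Index's actual node class: `DocNode`, its fields and `table_to_node` are hypothetical names chosen for illustration. The idea is simply that each parsed element carries its text, its kind and some metadata.

```python
from dataclasses import dataclass, field
from typing import Dict, List
import uuid


@dataclass
class DocNode:
    """Hypothetical stand-in for an indexable unit parsed from a PDF."""
    text: str                       # text that will be embedded and searched
    kind: str                       # "text", "table", or "image"
    metadata: Dict[str, str] = field(default_factory=dict)
    node_id: str = field(default_factory=lambda: uuid.uuid4().hex)


def table_to_node(rows: List[List[str]], page: int) -> DocNode:
    """Serialise a parsed table as pipe-delimited rows so cell boundaries survive."""
    body = "\n".join(" | ".join(cell.strip() for cell in row) for row in rows)
    return DocNode(text=body, kind="table", metadata={"page": str(page)})


paragraph = DocNode(
    text="Revenue grew quarter over quarter.", kind="text", metadata={"page": "3"}
)
table = table_to_node([["Quarter", "Revenue"], ["Q1", "100"], ["Q2", "130"]], page=3)

print(table.kind, table.metadata)
print(table.text)
```

Keeping the kind and page in metadata is one possible design choice; it lets a later retrieval step treat table nodes differently from prose nodes.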

Recursive Neural Retrieval

Sophisticated neural indexing then allows Llama Index to rapidly retrieve the most relevant nodes for a given query across modalities. This enables linking related nodes whether they encapsulate free-form text, parsed tables or even embedded images.
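
The retrieval idea can be sketched in a few lines. This is a toy under stated assumptions: bag-of-words counts stand in for neural embeddings, the `index` and `store` structures are hypothetical, and only a single level of reference is followed. It is not Llama Index's implementation.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding'; a real system would use a neural encoder."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Searchable nodes: short texts or summaries; `child` optionally points to full content.
index: List[dict] = [
    {"id": "t1", "text": "The company describes its data center strategy.", "child": None},
    {"id": "s1", "text": "Table summary: quarterly revenue by segment.", "child": "tbl1"},
]
# Full objects that are too structured to embed directly (made-up values).
store: Dict[str, str] = {
    "tbl1": "Segment | Q1 | Q2\nGaming | 80 | 110\nData center | 120 | 95",
}


def retrieve(query: str, top_k: int = 1) -> List[str]:
    """Rank nodes by similarity, then follow child references to the full content."""
    q = embed(query)
    ranked = sorted(index, key=lambda n: cosine(q, embed(n["text"])), reverse=True)
    results = []
    for node in ranked[:top_k]:
        child = node["child"]
        results.append(store[child] if child is not None else node["text"])
    return results


print(retrieve("What was revenue by segment each quarter?")[0])
```

The query matches the table's summary, but what comes back is the table itself, which is the point of indexing a compact description while returning the richer object.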

Retrieved nodes become the contextual springboard for an external language model to generate an informed response.
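
How retrieved nodes turn into model context can be illustrated with a small prompt-assembly helper. The function name `build_prompt`, the prompt wording and the character budget are assumptions for this sketch; Llama Index uses its own prompt templates.

```python
from typing import List


def build_prompt(question: str, contexts: List[str], max_chars: int = 2000) -> str:
    """Join retrieved node texts into a context block, stopping at a character budget."""
    parts = []
    used = 0
    for i, ctx in enumerate(contexts, start=1):
        block = f"[{i}] {ctx.strip()}"
        if used + len(block) > max_chars:
            break  # stop before exceeding the budget
        parts.append(block)
        used += len(block)
    context = "\n\n".join(parts)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )


prompt = build_prompt(
    "Which segment grew from Q1 to Q2?",
    ["Segment | Q1 | Q2\nGaming | 80 | 110", "Revenue commentary paragraph."],
)
print(prompt)
```

Numbering the context blocks is a convenience that makes it easier to ask the model to cite which node supported its answer.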

Together, these capabilities allow Llama Index to effectively “bridge” language models with diverse data scattered across PDF documents. Language models reason better over document data that is structured for their consumption.

# Method 1: Text and Table Node Parsing

## Bridging Text and Tables with Structured Nodes

The first method leverages Llama Index’s flexible data ingestion to algorithmically link text and tables within converted PDF documents.

PDF to HTML Conversion

First, the PDF is converted into HTML format using pdf2htmlEX for simpler parsing:

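    import subprocess


    def convert_pdf_to_html(pdf_path, html_dir):
        """Convert a PDF to HTML with the pdf2htmlEX command-line tool."""
        # Pass arguments as a list so paths with spaces are handled safely.
        subprocess.run(["pdf2htmlEX", pdf_path, "--dest-dir", html_dir], check=True)


    input_pdf = "quarterly-nvidia.pdf"
    output_dir = "quarterly-nvidia"  # directory that will receive the HTML file
    convert_pdf_to_html(input_pdf, output_dir)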
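
Two small checks can make this step less fragile. The helpers below are a sketch, not part of the original pipeline: `expected_html_path` and `converter_available` are hypothetical names, and the output naming rule (input file stem plus `.html` inside the destination directory) is an assumption inferred from the HTML path this article passes to the parser later.

```python
from pathlib import Path
import shutil


def expected_html_path(pdf_path: str, dest_dir: str) -> Path:
    """Path where the converted file should land, assuming the default output name."""
    return Path(dest_dir) / (Path(pdf_path).stem + ".html")


def converter_available(tool: str = "pdf2htmlEX") -> bool:
    """True if the converter executable can be found on PATH."""
    return shutil.which(tool) is not None


html_path = expected_html_path("quarterly-nvidia.pdf", "quarterly-nvidia")
print(html_path)              # path to hand to the HTML parsing step
print(converter_available())  # False means the conversion step cannot run here
```

Checking for the executable up front gives a clearer failure than a subprocess error halfway through a batch of documents.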

HTML structurally separates content from presentation, while better preserving table layouts.

Encoding Text and Tables as Nodes

Next, a specialized Llama Index pack parses the HTML, ingesting text spans and tables into structured “node” data representations:

    # Assumes the pack class was obtained beforehand, e.g. via llama_index's download_llama_pack.
    embedded_tables_unstructured_pack = EmbeddedTablesUnstructuredRetrieverPack(
        "quarterly-nvidia/quarterly-nvidia.html",
        nodes_save_path="nvidia-quarterly.pkl",
    )
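
What separating text from tables means at the HTML level can be sketched with the standard library alone. This is an illustration, not the pack's actual parser, which is more involved. `TableTextSplitter` is a hypothetical name, the sample HTML is made up, and the sketch assumes explicit `<table>` markup without nesting, which may not hold for every converter's output.

```python
from html.parser import HTMLParser
from typing import List


class TableTextSplitter(HTMLParser):
    """Collects <table> contents as rows of cells and everything else as text chunks."""

    def __init__(self) -> None:
        super().__init__()
        self.tables: List[List[List[str]]] = []
        self.texts: List[str] = []
        self._in_table = False
        self._in_cell = False

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self._in_table = True
            self.tables.append([])          # start a new table
        elif tag == "tr" and self._in_table:
            self.tables[-1].append([])      # start a new row
        elif tag in ("td", "th") and self._in_table and self.tables[-1]:
            self._in_cell = True
            self.tables[-1][-1].append("")  # start a new cell

    def handle_endtag(self, tag):
        if tag == "table":
            self._in_table = False
        elif tag in ("td", "th"):
            self._in_cell = False

    def handle_data(self, data):
        if self._in_cell:
            self.tables[-1][-1][-1] += data.strip()
        elif not self._in_table and data.strip():
            self.texts.append(data.strip())


html = """
<p>Revenue rose in the second quarter.</p>
<table>
  <tr><th>Quarter</th><th>Revenue</th></tr>
  <tr><td>Q1</td><td>100</td></tr>
  <tr><td>Q2</td><td>130</td></tr>
</table>
"""

splitter = TableTextSplitter()
splitter.feed(html)
print(splitter.texts)
print(splitter.tables[0])
```

Each collected table keeps its row and column boundaries, so it can be stored as its own unit alongside the surrounding prose.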

The pack’s nodes encapsulate text passages and table summaries as separate indexed data entities.

Indexing Enables Linking

Indexing the text, table and metadata nodes creates a searchable data substrate. Behind the scenes, vector embeddings allow relating nodes by semantic similarity.

Augmenting Answers with Retrieved Nodes

At query time, the most relevant text and table nodes are recursively retrieved to provide contextual augmentation for answer generation.

Advantages of Algorithmic Linking

This method provides an end-to-end pipeline for ingesting, relating and querying PDF text and tables using Llama Index’s programmatic data fabric. Structured nodes help preserve table data accurately.

Limitations

The approach relies on the quality of the initial PDF to HTML conversion. Fully deciphering complex table semantics also remains challenging.

# Method 2: Table Transformer + GPT-4

Reference notebook: llama_index/docs/examples/multi_modal/multi_modal_pdf_tables.ipynb (main branch of the run-llama/llama_index GitHub repository).

## Tapping Computer Vision and Multimodal Reasoning

A second approach combines computer vision and multimodal reasoning to interpret PDF tables.

Visually Extracting Tables

Microsoft’s Table Transformer, an object detection model based on the DETR architecture, visually detects and extracts tables embedded within PDF pages:

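    import os

    import matplotlib.pyplot as plt
    import torch
    from PIL import Image
    from torchvision import transforms
    from transformers import AutoModelForObjectDetection

    # Newer llama_index releases; older ones use `from llama_index import SimpleDirectoryReader`.
    from llama_index.core import SimpleDirectoryReader

    device = "cuda" if torch.cuda.is_available() else "cpu"


    class MaxResize(object):
        """Resize an image so its longer side equals max_size, keeping the aspect ratio."""

        def __init__(self, max_size=800):
            self.max_size = max_size

        def __call__(self, image):
            width, height = image.size
            current_max_size = max(width, height)
            scale = self.max_size / current_max_size
            resized_image = image.resize(
                (int(round(scale * width)), int(round(scale * height)))
            )
            return resized_image


    # preprocessing for the table detection model (ImageNet mean/std normalization)
    detection_transform = transforms.Compose(
        [
            MaxResize(800),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
        ]
    )

    # preprocessing for the table structure recognition model
    structure_transform = transforms.Compose(
        [
            MaxResize(1000),
            transforms.ToTensor(),
            transforms.Normalize([0.485, 0.456, 0.406], [0.229, 0.224, 0.225]),
        ]
    )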

    # load table detection model
    # processor = TableTransformerImageProcessor(max_size=800)
    model = AutoModelForObjectDetection.from_pretrained(
        "microsoft/table-transformer-detection", revision="no_timm"
    ).to(device)

    # load table structure recognition model
    # structure_processor = TableTransformerImageProcessor(max_size=1000)
    structure_model = AutoModelForObjectDetection.from_pretrained(
        "microsoft/table-transformer-structure-recognition-v1.1-all"
    ).to(device)

    # for output bounding box post-processing
    def box_cxcywh_to_xyxy(x):
        x_c, y_c, w, h = x.unbind(-1)
        b = [(x_c - 0.5 * w), (y_c - 0.5 * h), (x_c + 0.5 * w), (y_c + 0.5 * h)]
        return torch.stack(b, dim=1)


    def rescale_bboxes(out_bbox, size):
        width, height = size
        boxes = box_cxcywh_to_xyxy(out_bbox)
        boxes = boxes * torch.tensor(
            [width, height, width, height], dtype=torch.float32
        )
        return boxes


    def outputs_to_objects(outputs, img_size, id2label):
        m = outputs.logits.softmax(-1).max(-1)
        pred_labels = list(m.indices.detach().cpu().numpy())[0]
        pred_scores = list(m.values.detach().cpu().numpy())[0]
        pred_bboxes = outputs["pred_boxes"].detach().cpu()[0]
        pred_bboxes = [
            elem.tolist() for elem in rescale_bboxes(pred_bboxes, img_size)
        ]

        objects = []
        for label, score, bbox in zip(pred_labels, pred_scores, pred_bboxes):
            class_label = id2label[int(label)]
            if not class_label == "no object":
                objects.append(
                    {
                        "label": class_label,
                        "score": float(score),
                        "bbox": [float(elem) for elem in bbox],
                    }
                )

        return objects

    def detect_and_crop_save_table(
        file_path, cropped_table_directory="./table_images/"
    ):
        # convert to RGB so the three-channel normalization always applies
        image = Image.open(file_path).convert("RGB")

        filename, _ = os.path.splitext(os.path.basename(file_path))

        os.makedirs(cropped_table_directory, exist_ok=True)

        # prepare image for the model
        # pixel_values = processor(image, return_tensors="pt").pixel_values
        pixel_values = detection_transform(image).unsqueeze(0).to(device)

        # forward pass
        with torch.no_grad():
            outputs = model(pixel_values)

        # postprocess to get detected tables; copy the label map so the
        # model config is not mutated on every call
        id2label = dict(model.config.id2label)
        id2label[len(id2label)] = "no object"
        detected_tables = outputs_to_objects(outputs, image.size, id2label)

        print(f"number of tables detected {len(detected_tables)}")

        for idx, table in enumerate(detected_tables):
            # crop detected table out of image
            cropped_table = image.crop(table["bbox"])
            cropped_table.save(
                os.path.join(cropped_table_directory, f"{filename}_{idx}.png")
            )

    def plot_images(image_paths):
        images_shown = 0
        plt.figure(figsize=(16, 9))
        for img_path in image_paths:
            if os.path.isfile(img_path):
                image = Image.open(img_path)

                # 3 x 3 grid so that up to nine images fit
                plt.subplot(3, 3, images_shown + 1)
                plt.imshow(image)
                plt.xticks([])
                plt.yticks([])

                images_shown += 1
                if images_shown >= 9:
                    break

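    # `retrieved_images` (paths of the retrieved page images) and `openai_mm_llm`
    # (a multimodal LLM client) are assumed to come from earlier steps not shown here.
    for file_path in retrieved_images:
        detect_and_crop_save_table(file_path)

    # Read the cropped tables
    image_documents = SimpleDirectoryReader("./table_images/").load_data()

    response = openai_mm_llm.complete(
        prompt="Compare llama2 with llama1?",
        image_documents=image_documents,
    )

    print(response)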
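
The box arithmetic in the post-processing helpers is easier to follow on plain numbers. The sketch below mirrors the center-format to corner-format conversion without PyTorch, then adds two refinements that are not in the code above and are purely assumptions: a confidence threshold (`keep_confident`, default 0.9) and a padding step (`pad_box`) so that crops do not clip table borders.

```python
from typing import Dict, List, Tuple


def cxcywh_to_xyxy(
    box: Tuple[float, float, float, float], size: Tuple[int, int]
) -> List[float]:
    """Convert a normalized (center_x, center_y, width, height) box to pixel corners."""
    cx, cy, w, h = box
    img_w, img_h = size
    return [
        (cx - 0.5 * w) * img_w,
        (cy - 0.5 * h) * img_h,
        (cx + 0.5 * w) * img_w,
        (cy + 0.5 * h) * img_h,
    ]


def keep_confident(objects: List[Dict], min_score: float = 0.9) -> List[Dict]:
    """Drop detections below a confidence threshold (the default is an assumption)."""
    return [obj for obj in objects if obj["score"] >= min_score]


def pad_box(bbox: List[float], size: Tuple[int, int], pad: float = 10.0) -> List[float]:
    """Grow a pixel box by `pad` on each side, clamped to the image bounds."""
    x0, y0, x1, y1 = bbox
    img_w, img_h = size
    return [
        max(0.0, x0 - pad),
        max(0.0, y0 - pad),
        min(float(img_w), x1 + pad),
        min(float(img_h), y1 + pad),
    ]


size = (1000, 800)  # (width, height) of a hypothetical page image
box = cxcywh_to_xyxy((0.5, 0.5, 0.5, 0.25), size)
print(box)

detections = [
    {"label": "table", "score": 0.98, "bbox": box},
    {"label": "table", "score": 0.42, "bbox": [0.0, 0.0, 50.0, 40.0]},
]
for det in keep_confident(detections):
    print(det["label"], pad_box(det["bbox"], size))
```

A suitable threshold and padding depend on the documents at hand, so both are worth tuning on a few sample pages.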

By deciphering pixel patterns rather than relying on brittle text formatting rules, Table Transformer achieves strong table extraction accuracy.

Indexing and Supplying Table Images

The extracted table images are then indexed as nodes within Llama Index’s vector store. At query time the most relevant table nodes are retrieved.

Reasoning Over Table Representations

Finally, the contextualized table images are directly supplied to a multimodal foundation model such as GPT-4 with vision, as in the `openai_mm_llm.complete(...)` call above.

By reasoning over the table images themselves rather than proxy text representations, GPT-4 sidesteps OCR limitations and can better interpret the data.

Balancing Vision AI and Language AI Strengths

This technique balances Table Transformer’s perception with GPT-4’s reasoning. The combination compensates for the table analysis shortcomings each has on its own.

End-to-end stability and scalability remain in active development as research progresses.

# Constructing Knowledge Graphs from PDF Data

Related reading: Knowledge Graph Prompting: A New Approach for Multi-Document Question Answering

Both multimodal PDF analysis techniques demonstrate promising capabilities for automating the construction of knowledge graphs.

Extracting a Spectrum of Data Elements

Both approaches facilitate extracting a diverse range of structured data elements buried within PDF documents:

- Entities: Key people, organizations, locations, products and other conceptual elements are detected within sections like prose passages, embedded tables, charts or diagrams.

- Relationships: Critical relationships spanning entities (e.g. works-for, based-in, part-of) are identified from long-form cell text as well as chart axes, image captions, section positioning and other contextual layout signals.

- Attributes: Additional descriptive characteristics associated with entities such as dates, costs, durations, percentages, statistics and superlative assessments are pulled from related columns, visual cues, comparison framing etc.

- Taxonomies: Category hierarchies, groupings, ordinations and list nestings encode conceptual domains. Cluster analysis over sections deduces shared subjects. Inherited headings supply declarative tags.

- Events: Real-world or internal business occurrences with semantic timestamps are extracted from textual event phrasings and associated temporal table columns.

- Flows: Sequential events, gradients over time, processing steps and other transition narratives are assembled from cohesive descriptive passages, ordered records and arranged visualizations.

Together these mutually disambiguating elements compose knowledge graph entries to populate domain graphs. Language understanding helps surface cues that human subject-matter experts (SMEs) might miss.

Incrementally Assembling a Knowledge Network

These structured extractions can populate the nodes and edges within a knowledge graph. Graphs from individual documents are then merged into an aggregated domain-specific knowledge web.

**Enabling an “Enterprise Brain”**

Over time, even largely unstructured document troves can seed structured knowledge repositories through such extraction techniques.

Knowledge graphs leverage graph algorithms, predictive analytics and neural queries to uncover hidden insights within the structured collective.

Augmenting Documents with their Distillations

In turn, embedding such living “enterprise brains” creates a portal for documents to contribute their wisdom to an ever-growing intelligence. Free-form queries tap this automated hive knowledge.

With further research, robust systems could rapidly decode vast document stores into invaluable networked knowledge assets, unlocking their hidden analytic potential.

# Illuminating a Once-Dark Data Domain

For decades, complex PDF documents, with their fluid mixing of formats, styles and chart types, remained impervious to automation technologies. This resulted in entire enterprises’ worth of dark data concealed behind PDF veils, lost to analytics yet critical for sound decisions.

But the status quo is changing. Modern mutually enhancing AI approaches finally breach barriers long deemed unbreachable. Table data, prose passages, images and more see the light rather than languishing in digital darkness.

The capabilities showcased via Llama Index’s multimodal techniques occupy the frontier rather than the finish line. Much work remains to mature these budding symbioses into mainstay enterprise solutions.

Yet already, the outlook has pivoted from pessimistic to promising. PDF enigmas become less enigmatic with each experiment illuminating hidden insights and interconnections. Tools like Llama Index accelerate this trajectory by removing infrastructure impediments to AI innovation.

In coming years, expect partnered language-vision-data solutions to grow robust enough for even risk-averse industries. The day unstructured text and structured tables coexist cooperatively rather than antagonistically may arrive sooner than once thought possible.

That eventuality will owe thanks to the pioneering data architects incrementally cracking open the complex PDF conundrum and liberating the bounties still trapped within.
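
As a closing illustration of the knowledge graph section above, the merging of per-document graphs can be sketched as a union of (subject, relation, object) triples. Everything here is hypothetical: the document names, the entities and the crude `normalize` rule are made up for the example, and a real system would need proper entity resolution.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple

Triple = Tuple[str, str, str]  # (subject, relation, object)


def normalize(name: str) -> str:
    """Crude entity normalization: lowercase and collapse whitespace (an assumption)."""
    return " ".join(name.lower().split())


def merge_graphs(per_document: Dict[str, Iterable[Triple]]) -> Dict[Triple, Set[str]]:
    """Union triples across documents, remembering which documents support each edge."""
    merged: Dict[Triple, Set[str]] = defaultdict(set)
    for doc_id, triples in per_document.items():
        for subj, rel, obj in triples:
            merged[(normalize(subj), rel, normalize(obj))].add(doc_id)
    return dict(merged)


def neighbors(graph: Dict[Triple, Set[str]], entity: str) -> List[Tuple[str, str]]:
    """Outgoing (relation, object) pairs for an entity, sorted for stable output."""
    key = normalize(entity)
    return sorted((rel, obj) for (subj, rel, obj) in graph if subj == key)


extracted = {
    "report_a.pdf": [
        ("Jane Doe", "works-for", "ACME Corp"),
        ("ACME Corp", "based-in", "Berlin"),
    ],
    "report_b.pdf": [
        ("jane doe", "works-for", "Acme  Corp"),
        ("ACME Corp", "part-of", "Globex"),
    ],
}
graph = merge_graphs(extracted)
print(neighbors(graph, "ACME Corp"))
print(sorted(graph[("jane doe", "works-for", "acme corp")]))
```

Recording the supporting documents on each edge keeps provenance available, which matters when two sources disagree.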