The future is isometric. Practice turning 90 degrees.

Is your company ready to develop new AI-based capabilities? I think the answer is probably "yes".

You don't need to form a team of AI specialists. You don't need to hire top talent away from the FAANGs and billion-dollar unicorns. You likely have people within your own company perfectly able to move you forward.

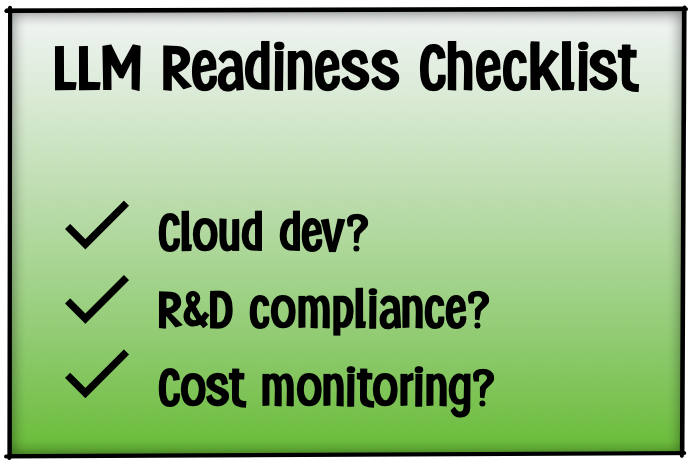

Does your company already do these things?

- Develop cloud-based software for your business use cases

- Evaluate R&D projects for legal, security, and regulatory compliance

- Monitor costs around cloud hosting such that your expenses are known and justified

If you answered "yes" to the above, then you should be ready to bring LLM-based capabilities into your company.

What Kind of Capabilities?

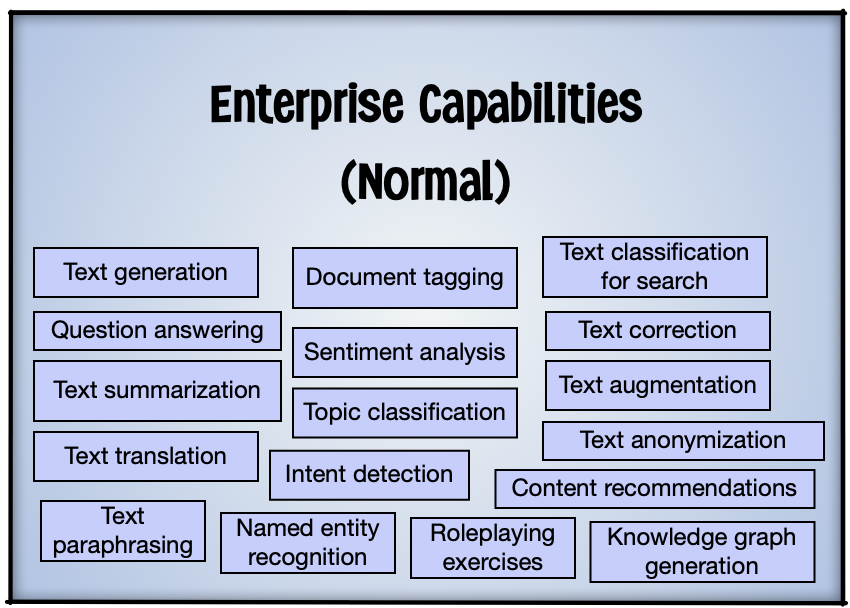

There are many different tasks that LLMs can automate or simplify today. Here are some examples.

When you have a basic question about your company like "who are the people working on this project" or "is Columbus Day a paid holiday?" wouldn't it be great to just get the answer from software a second later rather than hunting for 15 minutes? LLMs combined with retrieval-augmented generation (RAG) and vector databases can handle this.
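To make the RAG idea concrete, here is a minimal sketch of the retrieval step. It assumes a toy word-count "embedding" in place of a real embedding model and an in-memory list in place of a vector database. The documents and the `embed`, `retrieve`, and `build_prompt` names are hypothetical. The resulting prompt would then go to whichever LLM you use.

```python
import math
import re
from collections import Counter

# Hypothetical internal documents standing in for a company knowledge base.
DOCUMENTS = [
    "Paid holidays: New Year's Day, Memorial Day, Independence Day, Labor Day, Thanksgiving, Christmas.",
    "Project Falcon team: Priya (lead), Marcus (backend), Dana (QA).",
    "Expense reports are due by the fifth business day of each month.",
]

def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lowercase word counts."""
    return Counter(re.findall(r"[a-z']+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

# The "vector database": each document stored alongside its vector.
INDEX = [(doc, embed(doc)) for doc in DOCUMENTS]

def retrieve(question: str, k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    q = embed(question)
    ranked = sorted(INDEX, key=lambda item: cosine(q, item[1]), reverse=True)
    return [doc for doc, _ in ranked[:k]]

def build_prompt(question: str) -> str:
    """Ground the LLM's answer in retrieved context (the "augmented" part of RAG)."""
    context = "\n".join(retrieve(question))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"
```

For "Is Columbus Day a paid holiday?", the holiday policy is the closest match, so the LLM could answer from the company's actual list instead of guessing. A real system would swap `embed` for an embedding model and `INDEX` for a vector database, but the shape of the flow would stay the same.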

Suppose you receive ten thousand customer support emails a year, and you'd like to correlate them with product features to see which parts of your software are causing pain and suffering. That can be automated.
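A sketch of how that support-email analysis might work, assuming you give the LLM a fixed list of product features and ask it to pick one per email. The feature names, the prompt wording, and `fake_llm` (an offline stand-in so the example runs without a model) are all hypothetical.

```python
from collections import Counter
from typing import Callable

# Hypothetical product features; a real list would come from your product team.
FEATURES = ["billing", "login", "export", "search"]

PROMPT = (
    "Which one of these product features is this support email about? "
    "Options: {options}. Reply with the option only.\n\nEmail:\n{email}"
)

def classify(email: str, llm: Callable[[str], str]) -> str:
    """Ask the LLM to label one email; fall back to 'other' on unexpected replies."""
    reply = llm(PROMPT.format(options=", ".join(FEATURES), email=email)).strip().lower()
    return reply if reply in FEATURES else "other"

def pain_points(emails: list[str], llm: Callable[[str], str]) -> Counter:
    """Tally which features generate the most support emails."""
    return Counter(classify(e, llm) for e in emails)

def fake_llm(prompt: str) -> str:
    """Offline stand-in for a real model: picks the first feature named in the email."""
    email = prompt.split("Email:\n", 1)[1].lower()
    return next((f for f in FEATURES if f in email), "unknown")

emails = [
    "I was charged twice, billing is broken",
    "Can't login after a password reset",
    "The login page times out",
    "Love the product!",
]
counts = pain_points(emails, fake_llm)
```

Rejecting any reply outside the allowed list is a cheap guard against the model inventing new categories, which keeps the tallies comparable across thousands of emails.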

Would you like knowledge base articles to be automatically written around common support issues?

How about asking questions, not to a person, but to a document or source code repository?

In general, any task that involves creating or analyzing text is a fine candidate for LLM-based automation. Complex tasks like "write an executive summary of how my department did last quarter" can be decomposed into smaller subtasks to achieve dramatic results. It's still work to develop these solutions. But it's work at a scale achievable by companies that already develop software in-house.
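One way to decompose the quarterly-summary task, sketched under the assumption that `llm` is any function from prompt to text: summarize each report separately, then ask for a summary of the summaries. The report names, prompts, and the recording `fake_llm` are hypothetical, and the report contents are made up.

```python
from typing import Callable

# Any function that takes a prompt and returns the model's text.
LLM = Callable[[str], str]

def summarize_each(reports: dict[str, str], llm: LLM) -> dict[str, str]:
    """Subtask: summarize each departmental report separately, one small prompt per report."""
    return {
        name: llm(f"Summarize the key results in this {name} report in two sentences:\n{text}")
        for name, text in reports.items()
    }

def executive_summary(reports: dict[str, str], llm: LLM) -> str:
    """Final task: combine the per-report summaries into one executive summary."""
    partials = summarize_each(reports, llm)
    notes = "\n".join(f"- {name}: {summary}" for name, summary in partials.items())
    return llm(f"Write a one-paragraph executive summary of last quarter from these notes:\n{notes}")

# Usage with an offline stand-in model that records every prompt it receives.
calls: list[str] = []

def fake_llm(prompt: str) -> str:
    calls.append(prompt)
    return f"summary #{len(calls)}"

result = executive_summary({"sales": "Revenue up 4%.", "support": "Ticket volume flat."}, fake_llm)
```

Each small prompt is easier to check than one giant one, and a bad intermediate summary can be spotted and fixed before it contaminates the final result.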

Who Knows Your Company's Use Cases?

Here's what a real use case for an LLM looks like. It's a little ugly:

- Automate the post-GCX data quality pass so that we can skip sending our tie-off reports to DCM every third week. This reduces our SLA need with DCM, so we can renew at a lower subscription tier next year.

Gibberish, right?

But if you tried to explain some internal process of your company to an outsider, it would probably sound about as confusing. My point is that you and the people inside your company know your use cases and are best positioned to understand where work can usefully be automated.

What they probably don't know: how to apply LLM capabilities to solve various problems that will bring business value.

You will not hear Sam Altman talking about how the next GPT is going to "automate the post-GCX data quality pass" (or whatever wonky company-centric use case you've got). You have to be close to the company-centric use cases to realize the benefits of applying AI to them.

The people at your company should learn what is possible with LLMs so they can come up with the right ideas. And for those ideas to be implementable, you'll need to set up some basic infrastructure and governance to support LLM-based capabilities.

If that last sentence has you picturing a massive investment, consider there are different levels of LLM capabilities a company can have. The difficulty of achieving them varies widely.

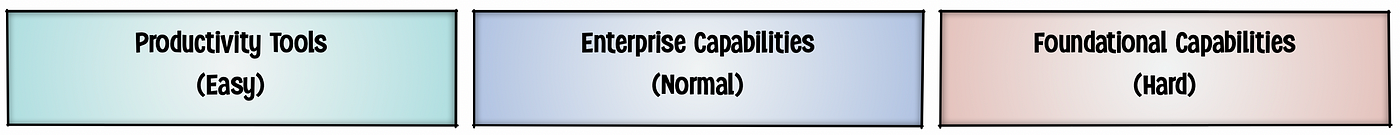

The Three Kinds of LLM Capabilities

- Productivity Tools --- every company can use these by simply licensing software.

- Enterprise Capabilities --- any company with mature processes around software development can build these.

- Foundational Capabilities --- with rare exceptions, only companies that are selling AI as a product will have the expertise and ROI justifications to build these.

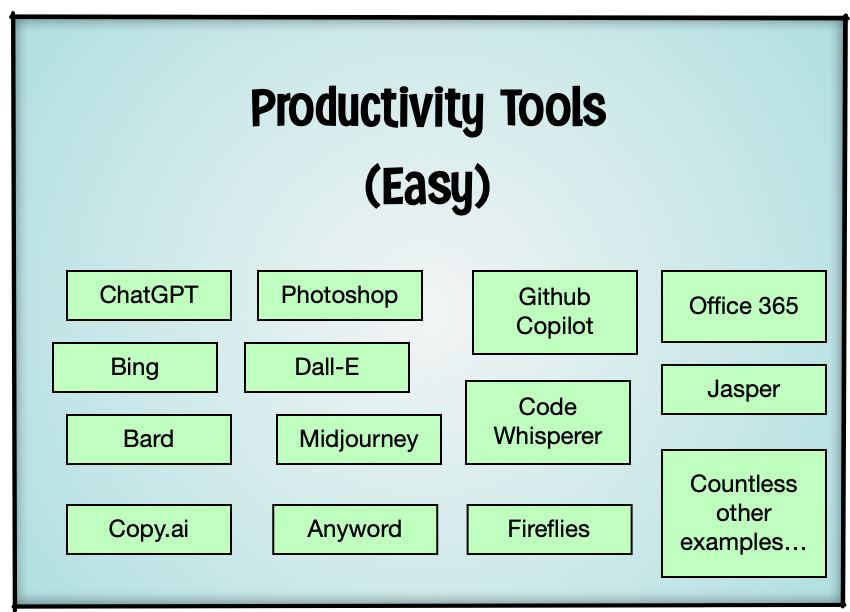

Productivity Tools

The "Productivity Tools" category is the simplest to explain. Here you are just licensing software for your company's use rather than building anything. You already have a process for evaluating and choosing tools like Slack, Figma, GitHub, etc. You'd use the same process for evaluating AI-based tools, considering any legal or security implications.

The trend of AI being built into existing software products (e.g., Office 365, Photoshop) will continue. You'll have to stop thinking of AI software as a separate kind of software. The AI is just going to be baked in.

AI will come knocking on your door in the form of tool vendors. You will start getting pitched new types of AI-assisted software specific to whatever niche market/vertical your company is involved in. If you run a submarine screen door company, it's just a matter of time before some salesperson promises AI solutions for streamlining the distribution of submarine screen doors. Before you buy an AI solution tailored to your business, you should understand what Enterprise Capabilities are already within your reach to build in-house at lower cost.

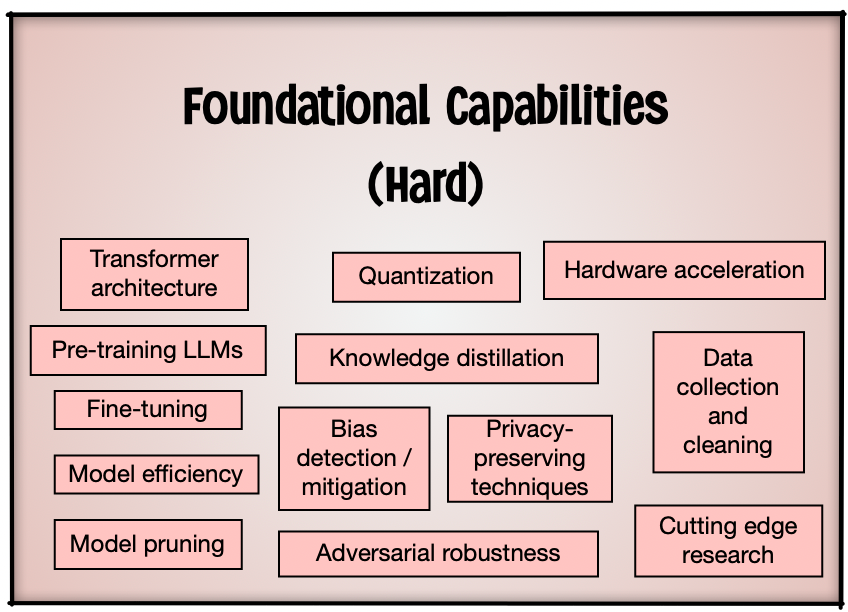

Foundational Capabilities

Some foundational capabilities (like fine-tuning) are arguably in the realm of Enterprise Capabilities.

This is the stuff that your company is not going to work on unless AI itself is what you are selling. Who works on foundational capabilities? AWS, Nvidia, Microsoft, OpenAI, Stability AI, Anthropic, Meta... the usual suspects, companies that we hear about constantly in the press.

You probably don't have a hundred million dollars to train a new LLM from scratch. And any work that amounts to a fresh implementation of a research paper is probably impractical. Certain AI specialties can't be recruited by conventional methods: you would need to hire distinguished figures with rare industry knowledge just to know whom to hire next.

So you're not going to build foundational AI capabilities at your company unless you are an AI company.

Less obvious: you should not conflate Foundational and Enterprise Capabilities. The media is saturated with heady talk about foundational work from the major players, so it's easy to think that all AI-related development is rocket science. And that notion can keep you in a "wait and see" stance where you ignore opportunities available today.

Enterprise Capabilities

Enterprise capabilities go beyond using tools, but not so far as building new core technologies in AI. You build software around specific use cases that are important to your company. Your company would build its own API layer in front of one or more LLMs.

An example of how an API layer automates multiple requests to an LLM to generate feature requests from customer complaints.

The API layer coordinates actions for each use-case-specific request. The individual actions can include data transformation, database updates, and calls to other services as well as asking questions of the LLM using prompt templates.

When you combine LLM prompts with coordinating logic, you can decompose complex problems into a series of tasks. The smaller tasks give you structure and reliability that allow you to depend on the LLM to get specific kinds of work done.
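A minimal sketch of one use-case endpoint in such an API layer, modeled on the feature-requests-from-complaints example. It assumes a generic `llm` callable and a SQLite table; the endpoint name, prompt templates, table schema, and `fake_llm` stand-in are hypothetical, not any specific product's API.

```python
import sqlite3
from typing import Callable

# Prompt templates owned by the API layer, one per LLM step of this use case.
EXTRACT_PROBLEM = "In one sentence, what problem is this customer describing?\n\n{complaint}"
WRITE_REQUEST = "Rewrite this customer problem as a product feature request:\n\n{problem}"

def feature_request_endpoint(complaint: str, llm: Callable[[str], str],
                             db: sqlite3.Connection) -> str:
    """Hypothetical use-case endpoint: one customer complaint in, one stored feature request out."""
    # Action 1: data transformation (collapse stray whitespace before prompting).
    cleaned = " ".join(complaint.split())
    # Action 2: a narrow LLM task, identifying the underlying problem.
    problem = llm(EXTRACT_PROBLEM.format(complaint=cleaned))
    # Action 3: a second LLM task that builds on the first result.
    request = llm(WRITE_REQUEST.format(problem=problem))
    # Action 4: database update so product managers can review requests later.
    db.execute("INSERT INTO feature_requests (complaint, request) VALUES (?, ?)",
               (cleaned, request))
    db.commit()
    return request

# Usage with an in-memory database and an offline stand-in model
# that simply echoes the last line of each prompt in upper case.
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE feature_requests (complaint TEXT, request TEXT)")

def fake_llm(prompt: str) -> str:
    return prompt.splitlines()[-1].upper()

result = feature_request_endpoint("I  can't export\n my data", fake_llm, db)
```

Because the endpoint owns the sequence of steps, each LLM call gets a small, well-defined job, and swapping in a different model only means passing a different `llm` function.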

In addition, by aligning endpoints to specific use cases in an API layer, you can make sure that the significant compute cost of LLM processing is justified by the business value of each use case. It might cost $3 to handle a request to look up all company documents that describe R&D work related to a user-submitted idea. But some analysis could show that the time saved is worth $200, which justifies the cost. Access to endpoints for specific use cases can be configured to avoid spending on work that is not cost-justified.
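The cost argument can be expressed as endpoint configuration. This sketch uses the $3 and $200 figures from the R&D lookup example; the `UseCaseEndpoint` fields, role names, and `authorize` check are hypothetical, and real estimates would come from your own usage data and business analysis.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class UseCaseEndpoint:
    """Hypothetical per-endpoint config; dollar figures come from your own cost and value analysis."""
    name: str
    est_cost_per_request: float   # e.g. LLM compute for one request, in USD
    est_value_per_request: float  # e.g. staff time saved by one request, in USD
    allowed_roles: frozenset[str]

    def is_cost_justified(self) -> bool:
        """A use case earns an endpoint only if each request is worth more than it costs."""
        return self.est_value_per_request > self.est_cost_per_request

def authorize(endpoint: UseCaseEndpoint, role: str) -> bool:
    """Serve a request only for cost-justified use cases and permitted callers."""
    return endpoint.is_cost_justified() and role in endpoint.allowed_roles

# The R&D lookup example: about $3 per request against about $200 of time saved.
RD_LOOKUP = UseCaseEndpoint(
    name="rd-idea-lookup",
    est_cost_per_request=3.00,
    est_value_per_request=200.00,
    allowed_roles=frozenset({"engineer", "product_manager"}),
)
```

Putting the check at the endpoint level means the cost-justification decision is made once per use case, rather than leaving every caller free to send arbitrary, expensive prompts.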

Who Do You Need for Enterprise Capabilities?

If you're not going to hire Yann LeCun or Ilya Sutskever to work on AI at your company, who will do the work?

- Your product manager can design LLM capabilities. They just need a sense of what is possible.

- Your business analyst can make quick prototypes of LLM prompts to test feasibility.

- Your cloud infrastructure engineer can set up hosting to run inference endpoints.

- Your IT security engineer can analyze AI-based SaaS for attack vectors.

- Your product security engineer can check for data governance issues.

- Your legal counsel can review terms of service agreements with AI-based software vendors.

- Your application developer can write code that calls inference endpoints to add amazing LLM-based features.

- Your quality assurance engineer can write the test cases that verify a model's suitability for different tasks.
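For the QA role, a test suite for an LLM task can look much like any other: fixed inputs, expected outputs, and a pass threshold. This is a minimal sketch assuming an exact-match check on a classification task; the test cases, the 0.9 threshold, and the function names are hypothetical.

```python
from typing import Callable

# Hypothetical test cases for one task (routing support emails to a feature);
# each pairs a prompt with the answer the model must give.
CASES = [
    ("Which feature is this about? 'I was charged twice this month.'", "billing"),
    ("Which feature is this about? 'The password reset email never arrived.'", "login"),
    ("Which feature is this about? 'Searching by exact title returns nothing.'", "search"),
]

def pass_rate(llm: Callable[[str], str], cases=CASES) -> float:
    """Fraction of cases where the model's normalized answer matches the expected one."""
    passed = sum(1 for prompt, expected in cases if llm(prompt).strip().lower() == expected)
    return passed / len(cases)

def suitable_for_task(llm: Callable[[str], str], threshold: float = 0.9, cases=CASES) -> bool:
    """Gate a model (or a new model version) on meeting the task's quality bar."""
    return pass_rate(llm, cases) >= threshold
```

Rerunning the same suite whenever you switch models or edit a prompt turns "is this model good enough for this task?" into a number you can track.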

There should probably not be a single AI team at your company. You don't have a "database team" or an "internet team", right? You can keep the same sensibility with LLM-based software development. Many people can figure it out and apply the skills they already have.

Any person in your company can have a valuable idea that transforms their work for the better. They will know the use cases that matter. You don't have to wait for the tool vendors to guess correctly what you need.