Web scraping is the automated process of harvesting publicly available data from websites. Developers and businesses often use it to gather information for purposes such as price analysis, sentiment analysis, feeding machine learning models, and keyword research.

However, hand-coding a web scraper requires programming expertise and can be time-consuming and error-prone, which leads some to turn to low-code and no-code solutions.

Even if you have little to no coding experience, these tools offer a streamlined, intuitive scraping experience, with user-friendly interfaces that can be easily configured to collect data in the desired format and with a high degree of accuracy.

In this article, we'll take a look at six no-code and low-code web scraping tools, plus an honorable mention, along with their features, advantages, and limitations.

We'll also discuss the ideal user for each tool and identify the areas where each tool performs best.

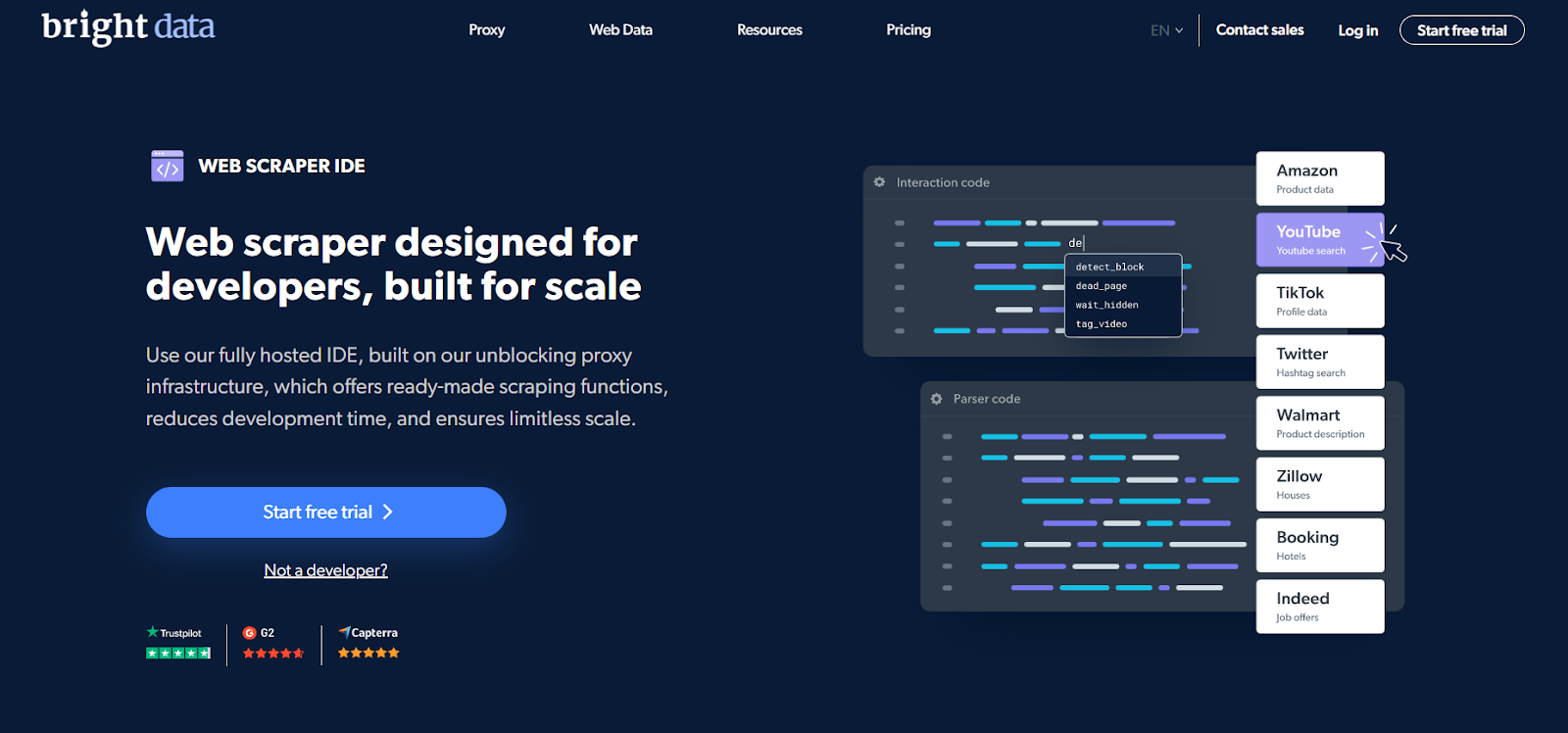

1. Bright Data

Bright Data's Web Scraper IDE is a powerful enterprise-grade scraper that comes with ready-made code templates to get you started with scraping data-rich websites.

These templates are managed and updated automatically by Bright Data's team, so even if your target website's layout changes, your scraping operation can keep running smoothly.

Bright Data: How It Works

Unlike other solutions on this list, this is a full-fledged, browser-integrated IDE purpose-built for web scraping.

It offers ready-made templates with modifiable code, so you no longer have to spend hours building (and maintaining) a scraper from scratch. All you have to do is run the scraper and save the output in your format of choice (JSON, CSV, or XLSX), or retrieve it through an API call that you can integrate into your app. You can also save the data to Google Cloud Storage or Amazon S3, removing the need for any in-house infrastructure.

But what really sets Bright Data's IDE apart from other web scraping solutions is its ability to scrape almost any website, with a reported success rate of 99.99%. This comes down to two things:

- Out of the box, it comes with a powerful unblocker infrastructure that gets around IP/device fingerprint blocks by seamlessly emulating header information as well as other browser details.

- It rotates four kinds of proxies (residential, data center, ISP, and mobile) and retries failed requests automatically, to get around geo-blocks, reCAPTCHAs, rate limiting, and more.
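Bright Data handles rotation and retries for you, but the underlying idea is simple enough to sketch. The Python below is a minimal illustration of round-robin proxy rotation with retries using only the standard library; it is not Bright Data's implementation. The proxy URLs in the trailing comment are hypothetical placeholders, and the `fetch` parameter is injectable so the retry logic can be exercised without a network.

```python
import itertools
import urllib.request
from typing import Callable, Iterable


def urllib_fetch(url: str, proxy: str, timeout: float = 10.0) -> bytes:
    """Fetch `url` through a single HTTP(S) proxy using only the standard library."""
    handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.read()


def fetch_with_rotation(
    url: str,
    proxies: Iterable[str],
    max_attempts: int = 4,
    fetch: Callable[[str, str], bytes] = urllib_fetch,
) -> bytes:
    """Request `url`, moving to the next proxy (round-robin) after each failure."""
    proxy_list = list(proxies)
    if not proxy_list:
        raise ValueError("at least one proxy is required")
    pool = itertools.cycle(proxy_list)
    errors = []
    for _ in range(max_attempts):
        proxy = next(pool)
        try:
            return fetch(url, proxy)
        except OSError as exc:  # URLError, HTTPError and timeouts all subclass OSError
            errors.append(f"{proxy}: {exc}")
    raise RuntimeError(f"all {max_attempts} attempts failed: {errors}")


# Example (hypothetical endpoints; performs real network I/O):
# proxies = ["http://user:pass@proxy-a.example:8000", "http://user:pass@proxy-b.example:8000"]
# page = fetch_with_rotation("https://example.com/", proxies)
```

Switching proxies on every failure, rather than retrying the same one, avoids hammering an IP that has just been blocked or rate-limited. A managed service layers header and fingerprint emulation on top of this, which the sketch does not attempt.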

If you do run into problems, Bright Data offers 24/7 live support to keep your operational workflow running without hiccups. And if a template doesn't exist for your use case, you can ask Bright Data's team to build one for you.

Bright Data: Who Is It For?

Bright Data's Web Scraper IDE is a one-stop solution for scraping data at scale with no infrastructure costs. It's useful for both business managers and developers looking to scale and speed up their scraping operations. With a little knowledge of JavaScript, developers can get even more out of the templates by modifying them to their specific needs or using the various API commands.

Whatever your use case, Bright Data's Web Scraper IDE offers a comprehensive solution for collecting highly accurate data at scale. Bright Data also states that it complies with all major data protection laws, which gives you added confidence in the legality of your operations.

You can try the Web Scraper IDE with a free trial, then choose a pay-as-you-go or enterprise-grade plan to suit your use case.

2. Smartproxy

Along with its Social Media, SERP, eCommerce, and Web Scraping APIs, Smartproxy offers No-Code Scraper, a solution that lets users collect publicly available data without advanced coding knowledge. Users can download the extracted data in JSON or CSV format. Smartproxy also offers a free Chrome extension for No-Code Scraper, eliminating the need to hop between tabs while collecting information from various targets.

Smartproxy No-Code Scraper: How It Works

No-Code Scraper is a web app and browser extension that can target most websites on the internet, including Google, the most popular search engine. Using pre-made templates, users can plan their collection tasks, and advanced algorithms take care of the data extraction, with no need to monitor the process. Smartproxy No-Code Scraper can gather data not only from static websites but also from JavaScript-heavy, AJAX-driven, and other dynamic websites.

All it takes is five easy steps:

- Navigate to the 'Collection management' tab and click the 'Create new' button.

- Select which collection you want to create.

- Use a pre-made template (universal, Google, or Amazon) or the Chrome extension to create a custom template.

- Choose the delivery frequency and end date.

- Select the preferred data delivery method: email or webhook.
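If you choose webhook delivery, you need an HTTP endpoint that accepts the results. Below is a minimal standard-library sketch of such a receiver. It assumes, hypothetically, that results arrive as JSON (an array of rows or a single object) in a POST body; Smartproxy's actual payload format may differ, so check their documentation before relying on it. `parse_delivery`, `WebhookHandler`, `RECEIVED`, and `serve` are illustrative names, not part of any Smartproxy API.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

RECEIVED: list = []  # in-memory store, for demonstration only


def parse_delivery(body: bytes) -> list:
    """Decode a webhook body assumed (hypothetically) to be JSON.

    A single JSON object is wrapped in a list so callers always get a list of rows.
    """
    data = json.loads(body.decode("utf-8"))
    return data if isinstance(data, list) else [data]


class WebhookHandler(BaseHTTPRequestHandler):
    """Accept POSTed results and append them to RECEIVED."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        try:
            rows = parse_delivery(self.rfile.read(length))
        except ValueError:  # covers JSONDecodeError and UnicodeDecodeError
            self.send_response(400)  # reject malformed payloads
            self.end_headers()
            return
        RECEIVED.extend(rows)
        self.send_response(204)  # success, no response body
        self.end_headers()


def serve(port: int = 8080) -> None:
    """Listen for webhook deliveries until interrupted."""
    HTTPServer(("0.0.0.0", port), WebhookHandler).serve_forever()
```

A real deployment would sit behind HTTPS, verify that requests come from the provider, and write rows to durable storage instead of a list.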

Smartproxy is known for its customer-centric approach, with 24/7 technical support, an extensive documentation library, and a user-friendly dashboard. It also provides personalized collection templates, which you can request by simply sending an email.

Smartproxy No-Code Scraper: Who Is It For?

This zero-code solution suits both scraping-savvy users and those without technical knowledge, as it offers a straightforward collection mechanism with advanced features. No-Code Scraper assists with a range of use cases, including SEO research, gathering statistical data, generating leads, managing business reputation, and analyzing the competitive landscape.

Users can take No-Code Scraper for a test drive by activating a free one-month trial with 3K requests, or purchase a monthly subscription with a 14-day money-back option.

3. Octoparse

Octoparse is a cutting-edge web scraping tool that uses machine learning to build relationships between DOM elements, rather than relying on CSS and XPath selectors. This cloud-based platform is perfect for businesses and individuals who need to gather information from websites but don't have the coding skills or resources to do so.

Octoparse's intuitive point-and-click interface and ready-made templates eliminate the need for programming knowledge, making it easy to extract, parse, structure, and export data in a matter of minutes.

Octoparse: How It Works

Octoparse's auto-detection feature analyzes a web page and automatically selects the data you most likely want (for an eBay listing, this could be the product name, condition, price, and shipping details), saving you the time of finding and selecting it manually.

You can select data manually or start from one of 70+ templates, and Octoparse's ML algorithms then transform web pages into structured data in JSON, CSV, and spreadsheet formats.

Finally, in Advanced mode, you can create workflows that define exactly what Octoparse should do on a web page before and after scraping: pagination, fine-grained scrolling at set intervals (to load dynamic data on infinite-scroll pages), exports, and so on. This helps ensure that even complex pages are scraped properly.
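Octoparse builds these workflows visually. To show roughly what a pagination step does under the hood, here is a hand-coded equivalent in standard-library Python: it follows `rel="next"` links page by page until none remain, a URL repeats, or a page limit is hit. This is a generic sketch, not Octoparse's implementation, and it assumes the target site marks its next-page link with `rel="next"`; `NextLinkParser` and `paginate` are illustrative names.

```python
from html.parser import HTMLParser
from typing import Callable, Iterator, Optional, Tuple
from urllib.parse import urljoin


class NextLinkParser(HTMLParser):
    """Remember the href of the first <a> or <link> element marked rel="next"."""

    def __init__(self) -> None:
        super().__init__()
        self.next_href: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        if self.next_href is not None or tag not in ("a", "link"):
            return
        attr_map = dict(attrs)
        rel_values = (attr_map.get("rel") or "").lower().split()
        if "next" in rel_values and attr_map.get("href"):
            self.next_href = attr_map["href"]


def paginate(
    start_url: str, fetch: Callable[[str], str], max_pages: int = 50
) -> Iterator[Tuple[str, str]]:
    """Yield (url, html) for each page, following rel="next" links.

    Stops when no next link exists, a URL repeats, or max_pages is reached.
    """
    url: Optional[str] = start_url
    seen = set()
    while url is not None and url not in seen and len(seen) < max_pages:
        seen.add(url)
        page_html = fetch(url)
        yield url, page_html
        parser = NextLinkParser()
        parser.feed(page_html)
        parser.close()
        # Resolve relative links like "/p2" against the current page URL.
        url = urljoin(url, parser.next_href) if parser.next_href else None
```

Passing `fetch` in keeps the sketch testable; in practice it could wrap `urllib.request.urlopen`. Infinite-scroll pages that load data with JavaScript would need a headless browser instead, which is the kind of work Octoparse's scrolling step hides from you.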

Octoparse: Who Is It For?

Octoparse is ideal for both technical and non-technical users who require data from websites that change frequently. You can handle complex web scraping tasks with features like auto-selection, workflow definition, scheduled extraction, anonymous scraping with automatic IP rotation, and a user-friendly API.

However, note that unlocking all of Octoparse's features requires a paid plan.

4. Dexi.io

Dexi is a browser-based web scraping tool that provides powerful data extraction capabilities without requiring users to write any code. You can use three types of robots (Extractor, Crawler, and Pipes) to create scraping tasks. The platform lets users capture structured data from various sources, including websites, APIs, and databases.

Dexi also includes a full-featured visual ETL engine that lets users clean and restructure data to fit their specific needs before using it. Users can even combine data from multiple sources and enrich it through machine learning services.

Dexi.io: How It Works

Dexi.io is a browser-based application that doesn't require any downloads. Users can set up crawlers and fetch data in real time, and the platform supports scraping anonymously through proxy servers. Crawled data is hosted on Dexi's servers for up to two weeks before it's archived.

The platform also has features that allow users to save the scraped data directly to cloud storage like Google Drive or export it as JSON or CSV files. With data flows and pipelines, users can transform the data using external APIs.

They also offer a Managed Services tier, with 24/7 support, consultancy, and help with bot building, maintenance, and integration. With an ever-expanding list of apps in its app store, the platform also lets users integrate data into their existing systems to build a seamless, fully automated, end-to-end data extraction process.

Dexi.io: Who Is It For?

Although Dexi is a low-code/no-code tool that is accessible to users with a wide range of technical skills, it is more expensive than the other tools listed here and requires more programming experience than most of them.

Dexi is ideal for businesses and organizations that already have Business Intelligence workflows in place (Power BI, Tableau, Looker, etc.) and are seeking a web-based scraping solution with a wide range of features and integrations. It may not be the right choice for individuals or smaller organizations with limited resources.

5. IGLeads.io

IGLeads.io is a no-code lead gen tool that collects contact data from multiple platforms, including Instagram, LinkedIn, TikTok, YouTube, Google Maps, and Twitter/X. It is designed to support both B2B and B2C outreach by consolidating different data sources into one platform.

IGLeads.io: How It Works

Users select a source and apply filters such as hashtags, job titles, or business categories. IGLeads.io then extracts publicly available contact details, including emails and phone numbers, which can be exported in CSV or XLSX format. The platform is fully cloud-based and requires no coding or proxy setup. An AI assistant, IGLeads Copilot, helps refine searches and organize results for campaigns.

IGLeads.io: Who Is It For?

IGLeads.io is suitable for sales teams, marketers, recruiters, and small businesses that need a practical lead gen tool for gathering contacts across multiple channels. It is particularly useful for those who want a straightforward way to source leads at scale without building or maintaining scrapers themselves.

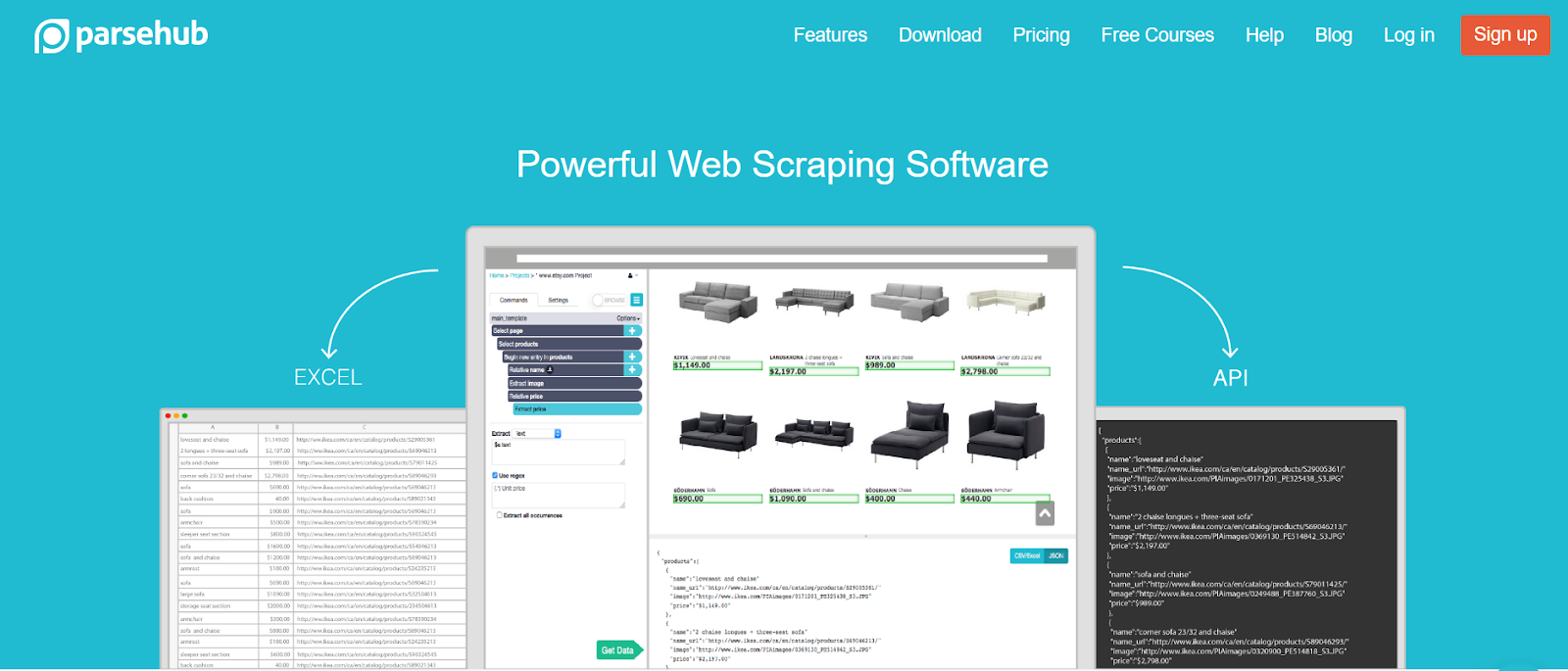

6. ParseHub

ParseHub is a free cloud-based web scraping tool that makes it easy for users to extract online data from websites, whether static or dynamic. With its simple point-and-click interface, you can start selecting the data you want to extract without any coding required.

ParseHub: How It Works

ParseHub runs as a desktop app: you load your target website inside it and use its visual interface to select on-screen elements, making it easy to extract the data you want.

Along with that, ParseHub offers several advanced features such as infinite scroll, pagination, custom JavaScript execution, and the ability to scrape behind a login. ParseHub also offers an API that allows users to automate the data extraction process and receive fresh and accurate data.

The tool supports regular expressions for cleaning text and HTML, helping keep the data well-organized and accurate.
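ParseHub applies regular expressions inside its own interface. As an illustration of the kind of cleanup involved, the sketch below uses Python's `re` and `html` modules to strip leftover tags, decode entities, collapse whitespace, and pull a numeric price out of a scraped string. The patterns and function names are illustrative assumptions, not ParseHub's built-in rules, and regex is only suitable for small fragments; full documents call for a real HTML parser.

```python
import html
import re
from typing import Optional

TAG_RE = re.compile(r"<[^>]+>")  # any HTML tag, e.g. <span class="price">
SPACE_RE = re.compile(r"\s+")  # runs of spaces, tabs, newlines (incl. non-breaking)
PRICE_RE = re.compile(r"\d+(?:,\d{3})*(?:\.\d+)?")  # e.g. 1,299.99, 1299.99 or 15


def clean_text(fragment: str) -> str:
    """Remove tags, decode entities like &amp;, and normalize whitespace."""
    text = TAG_RE.sub(" ", fragment)
    text = html.unescape(text)
    return SPACE_RE.sub(" ", text).strip()


def extract_price(text: str) -> Optional[float]:
    """Return the first number in `text` as a float, ignoring thousands separators."""
    match = PRICE_RE.search(text)
    return float(match.group(0).replace(",", "")) if match else None
```

Tags are stripped before entities are decoded, so escaped markup such as `&lt;b&gt;` survives as literal text. The price pattern assumes a comma for thousands and a dot for decimals; formats like `1.299,99` would need a locale-aware rule.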

ParseHub: Who Is It For?

ParseHub is an ideal (and trusted) solution for users who want to access data from websites without interacting with any code, but it may not be the best option for heavily protected websites, as it can fall short when it comes to overcoming complex website blocks.

Also, while ParseHub offers a free version, it comes with limited features - and the paid version is among the more expensive desktop scraping apps.

Still, ParseHub has been around for a while and remains a trusted choice for extracting data from interactive websites with minimal coding.

Honorable mention

7. Webscraper.io

Webscraper.io is a modern and powerful web scraping tool that enables businesses and individuals to extract data from websites in a simple and efficient way.

Essentially, this tool is a free (for local use only) no-code Chrome/Firefox extension with a point-and-click interface, but it also offers a paid Cloud service that lets you run and schedule scrape jobs, and manage them via an API.

Webscraper.io: How It Works

The browser extension uses a point-and-click interface to select elements and build sitemaps, so no coding skills are needed and each extraction setup is easy to reuse. The Cloud platform, meanwhile, offers a large pool of IP addresses to rotate through and is built on trusted, well-supported cloud technologies that can scale as needed.

As a whole, Webscraper.io supports a wide range of websites, including dynamic ones, and stores data in a structured format such as CSV (free), XLSX or JSON (requires paid plans), which can be accessed via API, webhooks, or exported to popular cloud storage solutions like Dropbox or S3.

Webscraper.io: Who Is It For?

This is a versatile tool that applies to many use cases and provides a cost-effective solution for extracting data for market research, lead generation, price comparison, academic projects, and so on.

Overall, while the free browser extension is great for one-off jobs, many of its useful options (schedulers, proxying, support for JSON, and S3 exports) need to be paid for. Check the pricing section and pick a plan that suits your requirements. Documentation and tutorial videos are available, too.

Conclusion

Web scraping plays a crucial role in the age of Big Data, making scrapers invaluable to most business and content strategies today.

However, while traditional scraping solutions built with Scrapy, Selenium, Puppeteer, etc. can be powerful and extensible, they are accessible only to those with coding knowledge.

The no-code and low-code tools highlighted here, by contrast, can help you get the data you want, at the scale you want, without needing programming expertise.

Bright Data is the best all-around low-code tool, while Octoparse could also be a good pick thanks to its AI/ML-based extraction that auto-selects fields for you; in many cases, you won't even need to point and click to select the data you want. Ultimately, though, the tool you choose will depend on your use case.
