We live in an age of extraordinary technological progress that is blurring the boundary between science fiction and reality. This is especially true of vehicles, which can now drive themselves, talk to their occupants, and make intuitive, human-like interactions possible. One emerging area combines autonomous technology with external smart displays and speakers that let vehicles interact with people outside the vehicle as well, which could lead to safer streets and commutes. This capability is fueled by the convergence of two powerful forces: artificial intelligence (AI) and dynamic displays. This article explores two pieces of this technology: a patented system for targeted vehicular communication (US9959792B2) and a proposed framework for interactive communication using large language models (LLMs). By weaving these threads together, we can create a future where vehicles integrate seamlessly into our human-centric world, enhancing safety, accessibility, and communication on our roads.

A vehicle built on this approach could be equipped with a camera positioned to capture a panoramic view of its surroundings. The captured images are fed into an image recognition module; together, the camera and module act as the vehicle's visual cortex, helping it discern details about nearby people such as age, gender, and even activity (e.g., walking, running, cycling). For example, being able to tell a child chasing a ball near the road apart from an adult waiting patiently to cross is crucial for safety, because the child is far more likely to step into the vehicle's path without warning. This nuanced understanding of the people around the vehicle is essential for safe and meaningful communication.
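To make the child-versus-adult example concrete, here is a minimal Python sketch of how the recognition module's output might be used to rank people by how much caution they warrant. The `PersonDetection` fields, label strings, weights, and the 2 m threshold are all hypothetical assumptions for illustration, not details of the patented system.

```python
from dataclasses import dataclass

@dataclass
class PersonDetection:
    """Hypothetical per-person output of the image recognition module."""
    age_group: str             # assumed labels: "child" or "adult"
    activity: str              # assumed labels: "standing", "walking", "running", "cycling"
    distance_to_road_m: float  # estimated distance from the edge of the roadway, in metres

# Hypothetical weights; a production system would tune or learn these.
ACTIVITY_WEIGHT = {"standing": 0, "walking": 1, "running": 2, "cycling": 2}

def caution_score(person: PersonDetection) -> int:
    """Higher scores mean the person is more likely to enter the road unexpectedly."""
    score = ACTIVITY_WEIGHT.get(person.activity, 1)  # unknown activities get a middle weight
    if person.age_group == "child":
        score += 2  # children tend to be less predictable near traffic
    if person.distance_to_road_m < 2.0:
        score += 1  # already close to the roadway edge
    return score

child_chasing_ball = PersonDetection("child", "running", 1.0)
adult_waiting = PersonDetection("adult", "standing", 0.5)
ranked = sorted([adult_waiting, child_chasing_ball], key=caution_score, reverse=True)
print([p.age_group for p in ranked])  # ['child', 'adult']
```

Ranking detections this way would let the vehicle address the highest-risk person first when several people are nearby.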

However, visual perception alone is not enough. The system fuses this visual data with real-time information from an array of vehicle sensors, including GPS, the speedometer, and even suspension compression sensors. The result is a dynamic, multi-dimensional map of the surrounding environment that captures the location, speed, and trajectory of both the vehicle and nearby pedestrians.
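One way to turn fused positions and velocities into a trajectory check is a closest-point-of-approach calculation. The Python sketch below assumes that GPS and speedometer data have already been converted into a vehicle position and velocity in a shared local frame (in metres), that camera tracking supplies the same for a pedestrian, and that both keep a constant velocity over a short horizon. It leaves out suspension data and any real filtering (such as a Kalman filter); the `Track` type and `closest_approach` function are hypothetical.

```python
import math
from dataclasses import dataclass

@dataclass
class Track:
    """Position (m) and velocity (m/s) in a shared local x/y frame."""
    x: float
    y: float
    vx: float
    vy: float

def closest_approach(vehicle: Track, ped: Track, horizon_s: float = 5.0) -> tuple[float, float]:
    """Return (time_s, distance_m) of closest approach, assuming constant velocities."""
    rx, ry = ped.x - vehicle.x, ped.y - vehicle.y      # pedestrian position relative to vehicle
    vx, vy = ped.vx - vehicle.vx, ped.vy - vehicle.vy  # pedestrian velocity relative to vehicle
    v2 = vx * vx + vy * vy
    # The time that minimises |r + v*t| is -(r . v) / |v|^2; with no relative motion, use now.
    t = 0.0 if v2 == 0 else -(rx * vx + ry * vy) / v2
    t = min(max(t, 0.0), horizon_s)  # only look forward, and only within the horizon
    return t, math.hypot(rx + vx * t, ry + vy * t)

# Vehicle heading along +x at 10 m/s; pedestrian 20 m ahead and 3 m to the side, stepping into the lane.
car = Track(0.0, 0.0, 10.0, 0.0)
walker = Track(20.0, 3.0, 0.0, -1.5)
print(closest_approach(car, walker))  # (2.0, 0.0)
```

A closest-approach distance below some safety margin within the horizon would be a natural trigger for a warning message.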

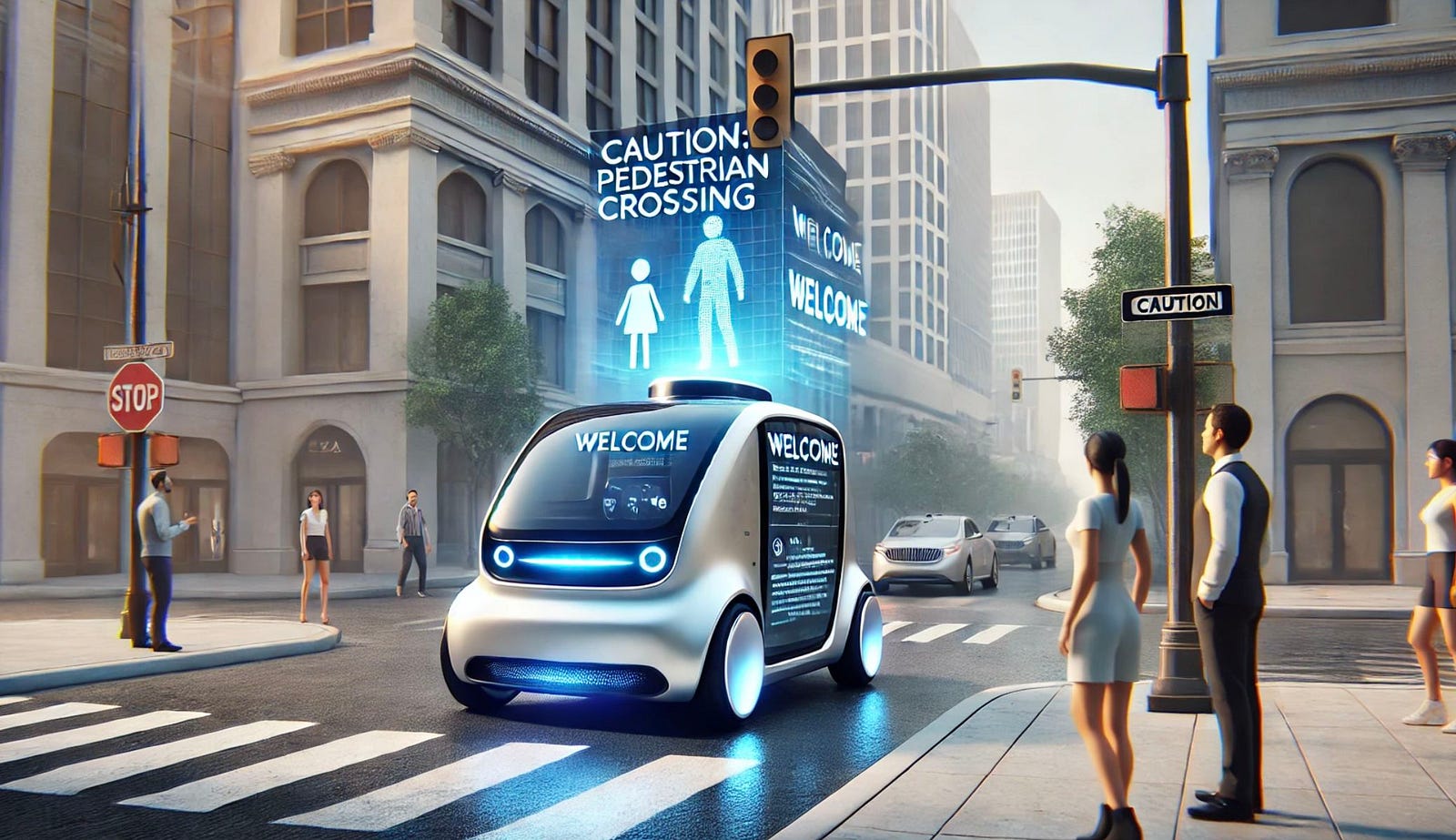

Based on this real-time analysis, the system acts as an intelligent communicator, generating contextually relevant messages that range from safety warnings ("Caution: Pedestrian crossing") to personalized greetings ("Good morning, Anvesh!"). These messages are then shown on external displays integrated into the vehicle's design, such as the rear window or roof, or even projected onto the road surface.
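A minimal way to choose between these two kinds of messages is a priority rule in which safety warnings always win. This Python sketch assumes a hypothetical `SceneContext` summarizing the perception and sensor-fusion output; the field names and time-of-day cutoffs are illustrative assumptions, and a real system would likely weigh many more signals.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class SceneContext:
    """Hypothetical summary produced by the perception and sensor-fusion stages."""
    pedestrian_in_path: bool
    recognized_name: Optional[str]  # None when nobody nearby is recognized
    hour: int                       # local time, 0-23

def choose_message(ctx: SceneContext) -> Optional[str]:
    """Pick one external message; safety warnings take precedence over greetings."""
    if ctx.pedestrian_in_path:
        return "Caution: Pedestrian crossing"
    if ctx.recognized_name:
        if ctx.hour < 12:
            part = "morning"
        elif ctx.hour < 18:
            part = "afternoon"
        else:
            part = "evening"
        return f"Good {part}, {ctx.recognized_name}!"
    return None  # nothing worth displaying

print(choose_message(SceneContext(False, "Anvesh", 8)))  # Good morning, Anvesh!
print(choose_message(SceneContext(True, "Anvesh", 8)))   # Caution: Pedestrian crossing
```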

Complementing this perceptive and responsive system is the integration of LLMs, which give vehicles the ability to use natural language. Trained on a vast corpus of text and code, LLMs can interpret and respond to a wide range of prompts and queries, generating human-like text or speech. This opens up dynamic, context-aware dialogues between vehicles and pedestrians. Imagine a pedestrian at a crosswalk casually asking a vehicle for directions to the nearest coffee shop, or receiving real-time traffic updates while waiting to cross.

The Synergy of Integration

The true marvel of this technology lies in the synergy achieved through integration. By intertwining the real-time perception and targeted communication capabilities of the patented system with the natural language processing prowess of LLMs, we can create vehicles that transcend their traditional role as mere modes of transportation and become intelligent companions on our journeys.

The image recognition module acts as the vehicle's eyes, telling the LLM who is nearby and what they are doing; the LLM in turn personalizes its communication for the individual or group being addressed. For instance, the system could greet a recognized pedestrian by name or tailor its message to their apparent age or activity.
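How the perception output reaches the LLM is not specified here, but a common pattern is to describe the scene inside the prompt. The Python sketch below assumes hypothetical `Person` attributes and a free-text description of what the vehicle is doing; it only assembles the prompt string, since the actual model call depends on whichever LLM provider is used.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Person:
    """Hypothetical attributes reported by the image recognition module."""
    name: Optional[str]  # None unless the person is recognized
    age_group: str       # e.g. "child" or "adult"
    activity: str        # e.g. "walking", "cycling"

def build_prompt(person: Person, vehicle_action: str, question: Optional[str] = None) -> str:
    """Assemble an LLM prompt grounded in what the vehicle currently perceives."""
    who = person.name or f"an unrecognized {person.age_group}"
    lines = [
        "You are the external voice of a vehicle talking to a nearby pedestrian.",
        "Reply in one or two short, friendly sentences and put safety first.",
        f"Pedestrian: {who}, currently {person.activity}.",
        f"Vehicle is currently: {vehicle_action}.",
    ]
    if person.age_group == "child":
        lines.append("Use simple words suitable for a child.")  # tailor tone to apparent age
    if question:
        lines.append(f'The pedestrian asked: "{question}"')
    return "\n".join(lines)

print(build_prompt(Person(None, "child", "walking"), "waiting at a red light"))
```

Keeping the scene description in plain text makes it easy to log exactly what the model was told, which helps when auditing its replies.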

Pairing the patent's external displays with external speakers enables multimodal communication. Vehicles can then go beyond text-based messages and also provide clear spoken instructions, explanations, or answers to pedestrians' questions.
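The multimodal idea can be sketched as one message fanned out to several output channels. In this Python sketch, `ExternalDisplay` and `ExternalSpeaker` are hypothetical stand-ins that record what they would show or say instead of driving real hardware or a text-to-speech engine, and the default 32-character display limit is an assumption.

```python
from typing import Protocol

class OutputChannel(Protocol):
    def present(self, text: str) -> None: ...

class ExternalDisplay:
    """Stand-in for an external display; real hardware would render the text."""
    def __init__(self, max_chars: int = 32) -> None:
        self.max_chars = max_chars
        self.shown: list[str] = []

    def present(self, text: str) -> None:
        # Displays have limited room, so long messages are shortened with an ellipsis.
        if len(text) > self.max_chars:
            text = text[: self.max_chars - 3] + "..."
        self.shown.append(text)

class ExternalSpeaker:
    """Stand-in for an external speaker; real hardware would run text-to-speech."""
    def __init__(self) -> None:
        self.spoken: list[str] = []

    def present(self, text: str) -> None:
        self.spoken.append(text)  # speech can carry the full message

def broadcast(text: str, channels: list[OutputChannel]) -> None:
    """Send the same message to every output channel."""
    for channel in channels:
        channel.present(text)

display = ExternalDisplay(max_chars=20)
speaker = ExternalSpeaker()
broadcast("The nearest coffee shop is two blocks north.", [display, speaker])
print(display.shown, speaker.spoken)
```

Using a shared `present` method keeps the messaging logic independent of how many displays or speakers a particular vehicle has.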

Combining real-time vehicle dynamics data with LLM capabilities keeps the communication relevant as the situation changes. For example, if a pedestrian is detected near the vehicle while it is turning, the vehicle could issue a timely spoken warning through its external speakers while simultaneously showing a visual alert on its external displays.
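The turning example can be expressed as a simple trigger rule. This Python sketch assumes a hypothetical `VehicleState` with a steering angle and turn-signal status, plus a list of pedestrian distances from the fusion step; the 15-degree and 8-meter thresholds are illustrative guesses, not values from the patent. It returns the spoken and displayed alerts together so that both channels fire at once.

```python
from dataclasses import dataclass

@dataclass
class VehicleState:
    """Hypothetical snapshot of vehicle-dynamics signals."""
    steering_angle_deg: float  # assumed convention: positive means steering left
    turn_signal: str           # "left", "right" or "off"

TURN_ANGLE_DEG = 15.0  # assumed steering angle beyond which the vehicle counts as turning
ALERT_RADIUS_M = 8.0   # assumed distance within which a pedestrian counts as "near"

def turning_direction(state: VehicleState) -> str:
    """Prefer the turn signal; fall back to the steering angle."""
    if state.turn_signal in ("left", "right"):
        return state.turn_signal
    if state.steering_angle_deg >= TURN_ANGLE_DEG:
        return "left"
    if state.steering_angle_deg <= -TURN_ANGLE_DEG:
        return "right"
    return "straight"

def turn_alerts(state: VehicleState, pedestrian_distances_m: list[float]) -> list[tuple[str, str]]:
    """Return (channel, message) pairs to emit together, or [] if no alert is needed."""
    direction = turning_direction(state)
    pedestrian_near = any(d <= ALERT_RADIUS_M for d in pedestrian_distances_m)
    if direction == "straight" or not pedestrian_near:
        return []
    return [
        ("speaker", f"Caution, this vehicle is turning {direction}."),
        ("display", f"TURNING {direction.upper()}"),
    ]

print(turn_alerts(VehicleState(20.0, "off"), [5.0, 30.0]))
```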

Real-World Applications: A Glimpse into the Future

- Enhanced Pedestrian Safety: Vehicles equipped with this technology could act as guardians, proactively warning about hidden dangers in blind spots, impending turns, or sudden braking maneuvers. This proactive communication could significantly reduce pedestrian accidents and other crashes.

- Improved Accessibility: This technology has the power to break down barriers and foster inclusivity. When operating autonomously for ride hailing, these vehicles could provide customized audio or visual cues that help riders with accessibility needs navigate complex sidewalks, locate the vehicle, or have the pickup ramp adjusted to their needs, all without having to communicate directly with another person.

- Intuitive Interactions: The fusion of AI and dynamic displays enables a new era of intuitive human-vehicle interaction. Pedestrians could effortlessly engage in natural language conversations with parked vehicles, asking for directions, inquiring about local amenities, or even seeking assistance in emergencies. This seamless communication could transform our commutes into opportunities for exploration and connection.

- Targeted Information Delivery: Vehicles could evolve into dynamic information hubs, sharing hyperlocal information or public service announcements tailored to the interests and needs of nearby pedestrians. Targeted ads are also possible, but the technology is unlikely to gain traction if it is used solely for advertising. Ad revenue could help offset the cost of running such an open, widely accessible system, but it should not be the primary purpose.

Conclusion

The integration of AI with dynamic displays and autonomous vehicle technology represents a fundamental shift in the way vehicles communicate with humans. This shift extends beyond autonomous vehicles to personal vehicles in general. By equipping vehicles with the ability to perceive, understand, and respond to the people around them, we are ushering in a future where technology is seamlessly intertwined with our lives. This integration will enhance road safety, make interactions more intuitive, and make commutes more engaging, ultimately creating a more harmonious relationship between humans and vehicles.

About the Author

Anvesh has over 12 years of experience in verticals such as automotive software and payment products, and is currently focused on introducing smart voice technology in vehicles to improve user experience and accessibility.
