Case Study: Hong Kong Protest Movement 2019

In this example, we will extract tweets related to the 2019 Hong Kong protest movement, on which I have written an analysis. The code can be configured to suit your own needs.

The first order of business was to obtain the tweets. I considered and tried out tools such as Octoparse, but they either only supported Windows (I use a MacBook), were unreliable, or capped the number of tweets you could download unless you subscribed to a plan. In the end, I threw these ideas in the bin and decided to do it myself.

Source: https://tenor.com/view/thanos-fine-ill-do-it-myself-gif-11168108

I tried out a few Python libraries and decided to go ahead with Tweepy. Tweepy was the only library that did not throw any errors in my environment, and it was quite easy to get things going. One downside is that I couldn’t find any documentation listing the attribute names for pulling particular metadata out of a tweet. I only managed to get most of the ones I needed after a few rounds of trial and error.

Prerequisites: Setting up a Twitter Developer Account

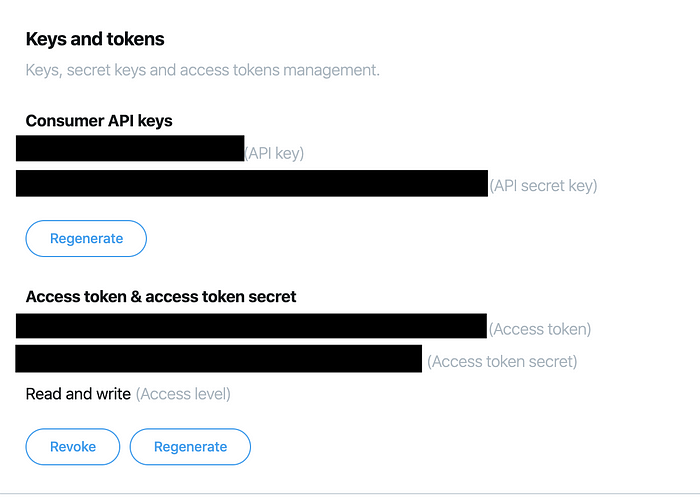

Before you start using Tweepy, you need a Twitter Developer Account in order to call Twitter’s APIs. Just follow the instructions, and after some time (only a few hours for me) you will be granted access.

You can view this page after you have been granted access and created an app.

You will need four pieces of information ready: the API key, API secret key, access token, and access token secret.

Import Libraries

Switch over to Jupyter Notebook and import the following libraries:

from tweepy import OAuthHandler
from tweepy.streaming import StreamListener  # Tweepy 3.x only; removed in Tweepy 4
import tweepy
import json
import pandas as pd
import csv
import re
from textblob import TextBlob
import string
import preprocessor as p  # provided by the tweet-preprocessor package
import os
import time

Authenticating Twitter API

If you run into any authentication errors, regenerate your keys and try again.

# Twitter credentials
# Obtain them from your Twitter developer account and paste them in as strings
consumer_key = "<your_consumer_key>"
consumer_secret = "<your_consumer_secret_key>"
access_key = "<your_access_key>"
access_secret = "<your_access_secret_key>"

# Pass your Twitter credentials to Tweepy via its OAuthHandler
auth = OAuthHandler(consumer_key, consumer_secret)
auth.set_access_token(access_key, access_secret)
api = tweepy.API(auth)
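If you would rather not paste the keys into a notebook that might be shared, one option is to read them from environment variables. The following is a minimal sketch of that idea, not part of the original workflow; the variable names and the `load_twitter_credentials` helper are hypothetical.

```python
import os

# Hypothetical variable names; use whatever names you export in your shell.
REQUIRED_VARS = (
    "TWITTER_CONSUMER_KEY",
    "TWITTER_CONSUMER_SECRET",
    "TWITTER_ACCESS_KEY",
    "TWITTER_ACCESS_SECRET",
)


def load_twitter_credentials(env=None):
    """Return the four credentials as a dict, or raise if any is missing."""
    env = os.environ if env is None else env
    # Treat unset and empty variables the same way: both are unusable.
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise KeyError("missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED_VARS}
```

The returned values can then be passed to `OAuthHandler` and `set_access_token` in place of the hard-coded strings.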

Batch Scraping

Because a basic, free developer account allows only a limited number of API calls (~900 calls every 15 minutes before your access is denied), I created a function that extracts 2,500 tweets per run, once every 15 minutes. (I tried extracting 3,000 and above, but that got me denied after the second batch.) In this function you specify the:

- search terms, such as keywords and hashtags

- starting date, after which all tweets will be extracted (you can only extract tweets from the last 7 days)

- number of tweets to pull per run

- number of runs, which happen once every 15 minutes
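The batch size can be sanity-checked with a little arithmetic: each run pages through the results, so the number of requests is the tweet count divided by the page size, rounded up. The sketch below assumes 15 results per page and a budget of 180 requests per 15-minute window. Both numbers are assumptions based on Twitter's published limits for the standard search endpoint. They differ from the ~900 figure quoted above, so treat them as illustrative and check them against your own account.

```python
import math


def requests_needed(num_tweets, page_size):
    """Number of API requests needed to page through num_tweets results."""
    return math.ceil(num_tweets / page_size)


def fits_in_window(num_tweets, page_size, budget):
    """True if one run stays within the per-window request budget."""
    return requests_needed(num_tweets, page_size) <= budget


# Assumed values, not taken from the text above.
PAGE_SIZE = 15   # results returned per request
BUDGET = 180     # requests allowed per 15-minute window

for n in (2500, 3000):
    print(n, requests_needed(n, PAGE_SIZE), fits_in_window(n, PAGE_SIZE, BUDGET))
```

Under these assumed numbers, a 2,500-tweet run needs 167 requests and fits, while a 3,000-tweet run needs 200 and does not. That would be consistent with the denial I ran into.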

I only extracted the metadata that I deemed relevant to my case. You may explore in detail the attributes of the tweet objects that tweepy.Cursor returns (this is the really messy part).

def scraptweets(search_words, date_since, numTweets, numRuns):
    # We cannot make one large API call in one go, so we scrape in
    # numRuns batches at regular intervals.

    # Define a pandas dataframe to store the data:
    db_tweets = pd.DataFrame(columns=['username', 'acctdesc', 'location', 'following',
                                      'followers', 'totaltweets', 'usercreatedts', 'tweetcreatedts',
                                      'retweetcount', 'text', 'hashtags'])
    program_start = time.time()

    for i in range(0, numRuns):
        # We will time how long it takes to scrape tweets for each run:
        start_run = time.time()

        # Collect tweets using the Cursor object.
        # .Cursor() returns an object that you can iterate over to access the data collected.
        # Each item in the iterator has various attributes that describe the tweet.
        # (Written against Tweepy 3.x; Tweepy 4 renamed api.search to api.search_tweets.)
        tweets = tweepy.Cursor(api.search, q=search_words, lang="en", since=date_since,
                               tweet_mode='extended').items(numTweets)

        # Store these tweets in a Python list
        tweet_list = [tweet for tweet in tweets]

        # Obtain the following info (attributes to read):
        # user.screen_name - Twitter handle
        # user.description - description of account
        # user.location - location given on the user's profile
        # user.friends_count - no. of other users that user is following (following)
        # user.followers_count - no. of other users who are following this user (followers)
        # user.statuses_count - total tweets by user
        # user.created_at - when the user account was created
        # created_at - when the tweet was created
        # retweet_count - no. of retweets
        # (deprecated) user.favourites_count - probably total no. of tweets favourited by user
        # retweeted_status.full_text - full text of the original tweet (retweets only)
        # tweet.entities['hashtags'] - hashtags in the tweet

        # Begin scraping the tweets individually:
        noTweets = 0
        for tweet in tweet_list:
            # Pull the values
            username = tweet.user.screen_name
            acctdesc = tweet.user.description
            location = tweet.user.location
            following = tweet.user.friends_count
            followers = tweet.user.followers_count
            totaltweets = tweet.user.statuses_count
            usercreatedts = tweet.user.created_at
            tweetcreatedts = tweet.created_at
            retweetcount = tweet.retweet_count
            hashtags = tweet.entities['hashtags']
            try:
                text = tweet.retweeted_status.full_text
            except AttributeError:  # Not a retweet
                text = tweet.full_text

            # Add the 11 variables to the list ith_tweet:
            ith_tweet = [username, acctdesc, location, following, followers, totaltweets,
                         usercreatedts, tweetcreatedts, retweetcount, text, hashtags]
            # Append to dataframe db_tweets
            db_tweets.loc[len(db_tweets)] = ith_tweet
            # Increase counter noTweets
            noTweets += 1

        # Run ended:
        end_run = time.time()
        duration_run = round((end_run - start_run) / 60, 2)
        print('no. of tweets scraped for run {} is {}'.format(i + 1, noTweets))
        print('time taken for run {} to complete is {} mins'.format(i + 1, duration_run))
        time.sleep(920)  # sleep slightly longer than the 15-minute window

    # Once all runs have completed, save them to a single csv file:
    from datetime import datetime
    # Obtain timestamp in a readable format
    to_csv_timestamp = datetime.today().strftime('%Y%m%d_%H%M%S')
    # Define working path and filename; create the data folder if it does not exist
    path = os.getcwd()
    os.makedirs(os.path.join(path, 'data'), exist_ok=True)
    filename = path + '/data/' + to_csv_timestamp + '_sahkprotests_tweets.csv'
    # Store dataframe in csv with creation date timestamp
    db_tweets.to_csv(filename, index=False)

    program_end = time.time()
    print('Scraping has completed!')
    print('Total time taken to scrape is {} minutes.'.format(round((program_end - program_start) / 60, 2)))
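The try/except inside the loop deserves a closer look. In extended mode, a retweet's own full_text can still be cut short, while the complete text sits on the nested retweeted_status object. The sketch below isolates that fallback in a small helper. `full_text_of` is a hypothetical name, and the sample objects are stand-ins that mimic only the two attributes involved, not real Tweepy objects.

```python
from types import SimpleNamespace


def full_text_of(tweet):
    """Return the untruncated text, preferring the original tweet for retweets."""
    try:
        return tweet.retweeted_status.full_text
    except AttributeError:  # no retweeted_status attribute: not a retweet
        return tweet.full_text


# Stand-in objects for illustration only.
original = SimpleNamespace(full_text="Full text of an original tweet")
retweet = SimpleNamespace(
    full_text="RT @someone: Full text of an orig...",
    retweeted_status=SimpleNamespace(full_text="Full text of an original tweet"),
)

print(full_text_of(original))
print(full_text_of(retweet))
```

Both calls print the same complete sentence, which is the behaviour the loop relies on when it fills the text column.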

With this function, I usually perform 6 runs in total, with each run extracting 2,500 tweets. It usually takes approximately 2.5 hours to finish one round of extraction, which yields 15,000 tweets. Not bad.

For the protests specifically, I surveyed Twitter to find the hashtags that users most commonly included in their tweets. I then used a number of these related hashtags as my search criteria.

Hashtags that are not defined in your ‘search_words’ parameter can also appear, because users might include them in their tweets alongside the ones you searched for.
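Since the search criteria amount to a list of hashtags joined with the OR operator, the query string can be assembled from a plain Python list, which makes it easier to add or drop hashtags later. This is a small sketch of that idea. `build_query` is a hypothetical helper, and the duplicate handling is my own assumption about what is convenient.

```python
def build_query(hashtags):
    """Join hashtags into a single search string using the OR operator."""
    cleaned = []
    for tag in hashtags:
        tag = tag.strip()
        if not tag:
            continue  # skip empty entries
        if not tag.startswith("#"):
            tag = "#" + tag  # allow tags to be listed without the leading '#'
        # Skip case-insensitive duplicates, keeping the first spelling seen.
        if tag.lower() not in (c.lower() for c in cleaned):
            cleaned.append(tag)
    return " OR ".join(cleaned)


print(build_query(["#hongkong", "hkprotests", "#standwithhk"]))
```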

# Initialise these variables:
search_words = "#hongkong OR #hkprotests OR #freehongkong OR #hongkongprotests OR #hkpolicebrutality OR #antichinazi OR #standwithhongkong OR #hkpolicestate OR #HKpoliceterrorist OR #standwithhk OR #hkpoliceterrorism"
date_since = "2019-11-03"
numTweets = 2500
numRuns = 6

# Call the function scraptweets
scraptweets(search_words, date_since, numTweets, numRuns)
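The hashtags column stores the raw entity list for each tweet, where every entity is a dict with a text key and an indices key. To see which hashtags actually turned up, including ones outside search_words, you can tally them. The following is a minimal sketch. The helper names are hypothetical, and the sample lists are illustrative shapes, not data from my scrape.

```python
from collections import Counter


def hashtag_texts(hashtag_entities):
    """Extract lowercase hashtag strings from a list of entity dicts."""
    return [entity["text"].lower() for entity in hashtag_entities]


def count_hashtags(rows):
    """Tally hashtags over an iterable of per-tweet entity lists."""
    counts = Counter()
    for entities in rows:
        counts.update(hashtag_texts(entities))
    return counts


# Illustrative entity lists only; not real scraped data.
sample = [
    [{"text": "HongKong", "indices": [0, 9]}, {"text": "StandWithHK", "indices": [10, 22]}],
    [{"text": "hongkong", "indices": [5, 14]}],
    [],
]
print(count_hashtags(sample).most_common(2))
```

If you reload the saved CSV instead of using the dataframe in memory, the column comes back as text and would need parsing first, for instance with ast.literal_eval.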

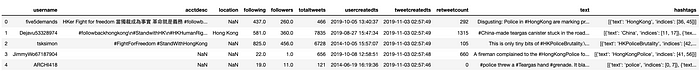

I have been running the above script once daily since 3 Nov 2019 and have since amassed more than 200k tweets. The following are the first 5 rows of the dataset:
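A preview like this can be generated from any of the saved CSV files. The sketch below is a minimal version that uses only the standard library, so it runs without the notebook's dependencies. `preview_csv` is a hypothetical helper name.

```python
import csv
from itertools import islice


def preview_csv(path, n=5):
    """Return (header, first n data rows) from a CSV file."""
    with open(path, newline="", encoding="utf-8") as handle:
        reader = csv.reader(handle)
        header = next(reader, [])       # first line holds the column names
        rows = list(islice(reader, n))  # read at most n rows after the header
    return header, rows
```

Inside the notebook, pd.read_csv(filename).head() gives the equivalent result as a dataframe.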
