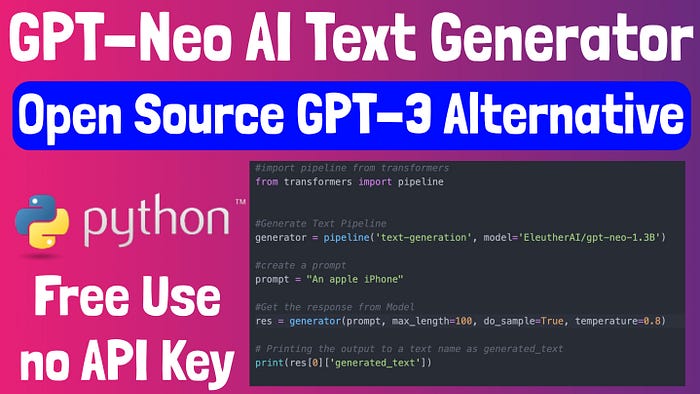

In this tutorial, we will discuss GPT-Neo, an open-source AI content generator and alternative to GPT-3. We will access it through the HuggingFace Transformers library and Python.

GPT stands for Generative Pre-trained Transformer: a neural network machine learning model trained on internet data to generate text from prompts. GPT was developed by OpenAI and is accessed through their API with an API key. The service is not free, and once you do get an API key there are strict guidelines on the allowed use cases.

GPT-Neo is an alternative AI engine to GPT-2/3, developed by EleutherAI and available through the Transformers library. In this tutorial, we will show how you can get started with GPT-Neo for text generation.

There is a written step-by-step guide that you can find here:

The YouTube video is here:

How does GPT-Neo compare to GPT-2 and GPT-3

In short, GPT-Neo is an open-source replication of OpenAI's GPT architecture, and in scale and capability it compares most closely with OpenAI's second-generation GPT-2 engines; see the table below.

EleutherAI has two main versions of GPT-Neo: (1) 1.3 billion parameters and (2) 2.7 billion parameters. The parameter count indicates the size of the model: the bigger model is more capable but takes up more disk space and memory and generates text more slowly, while the smaller model is faster but less capable.

GPT-2 (1.5 billion parameters in its largest version) is in a similar range to the 1.3 billion GPT-Neo, but GPT-3, with 175 billion parameters, is roughly two orders of magnitude larger.
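The parameter counts translate directly into memory. As a rough sketch, assuming the weights are held as 32-bit floats (4 bytes per parameter, the default when loading on a CPU) and ignoring runtime overhead, the arithmetic looks like this:

```python
# Rough size of model weights: parameters x bytes per parameter.
# Assumption: 32-bit float weights (4 bytes each); activations and
# loading overhead are ignored, so real peak memory use is higher.

BYTES_PER_FLOAT32 = 4


def weight_size_gb(num_params: float, bytes_per_param: int = BYTES_PER_FLOAT32) -> float:
    """Approximate size of the weights in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


models = {
    "GPT-Neo 1.3B": 1.3e9,
    "GPT-Neo 2.7B": 2.7e9,
    "GPT-3 175B": 175e9,
}

for name, params in models.items():
    print(f"{name}: ~{weight_size_gb(params):.1f} GB of float32 weights")

# How many times larger GPT-3 is than the 1.3B GPT-Neo model.
print(f"GPT-3 / GPT-Neo 1.3B: ~{175e9 / 1.3e9:.0f}x")
```

By this estimate the 2.7 billion model needs over 10 GB of RAM for its weights alone, which is why the size of the machine you run it on matters.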

In addition, OpenAI has now released, and made standard, its "instruct" series, which takes text generation to the next level. Instead of prompting the generator with a statement that it must complete, you can now simply provide an instruction, and the model will understand and comply with it.

In summary, GPT-3 is more advanced and offers the instruct series, a better way of interacting with AI models. However, there are still use cases for GPT-Neo. The benefits of using open-source pre-trained models are: (1) no waiting times, (2) no API keys needed, and (3) no approval process or app reviews.

Steps to implement GPT-Neo Text Generating Models with Python

There are two main ways to access the GPT-Neo models: (1) download the models and run them on your own server, or (2) use the HuggingFace API (which costs about $9 a month). The benefit of the API is the speed at which the model generates text, so it is probably the way to go if you are running a live application.

We are going to cover the self-hosted method in this tutorial, with the following steps:

- Set up a Virtual Server with Digital Ocean

- Install PyTorch

- Install Transformers

- Run the code to generate text
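Once the install steps are done, a quick check that both libraries are visible to your Python interpreter can save a confusing error later. A minimal sketch using only the standard library (so it runs even before anything is installed); the package names are the import names of PyTorch and Transformers:

```python
# Check that the packages installed in the steps above can be imported.
# Only the standard library is used, so this runs before anything is installed.
import importlib.util

REQUIRED_PACKAGES = ("torch", "transformers")


def missing_packages(packages=REQUIRED_PACKAGES):
    """Return the names of packages that cannot be found in this environment."""
    return [name for name in packages if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("torch and transformers are installed.")
```

Run it with the same interpreter (and the same activated virtual environment) that you will use for text generation.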

Set up a Virtual Server with Digital Ocean and a Python Virtual Environment

The steps to follow are covered in great detail in the video tutorial on YouTube. What I would like to mention here is that the size of the Droplet you purchase is very important.

The EleutherAI models are very large and take up a lot of disk space and memory. In our testing, we were able to run the 1.3 billion parameter model on a Memory Optimised Droplet with 16GB RAM, 2 CPUs and a 50GB SSD. Technically this should also be able to run the 2.7 billion parameter model, but we did not test this.
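Before downloading a model, it is worth confirming what the Droplet actually provides. A minimal sketch using only the standard library, assuming a Linux server such as an Ubuntu Droplet (the os.sysconf names used here are not available on Windows):

```python
# Report total RAM and free disk space on a Linux machine.
import os
import shutil


def total_ram_gb() -> float:
    """Total physical memory in GB (1 GB = 1e9 bytes); Linux/Unix only."""
    return os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES") / 1e9


def free_disk_gb(path: str = "/") -> float:
    """Free space in GB on the filesystem that contains `path`."""
    return shutil.disk_usage(path).free / 1e9


if __name__ == "__main__":
    print(f"RAM:  {total_ram_gb():.1f} GB total")
    print(f"Disk: {free_disk_gb():.1f} GB free")
```

Compare the output with the tested configuration above (16GB RAM, 50GB SSD). The model files are cached on disk as well as loaded into memory, so both numbers matter.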

Choose the right Droplet for the AI model:

Remember that you are downloading the models and running them on your own machine, so its memory and disk space must be able to handle them. That is the trade-off for avoiding the API. Financially, it does not make sense to pay $80 a month just to run your own model when OpenAI, with a much superior engine, will cost much less than that.

Of course, you might still have your own reasons for running your own model, such as avoiding the approval process, or a use case that OpenAI might not approve.

The rest of the steps are available on the 👉🏽 Video Tutorial.

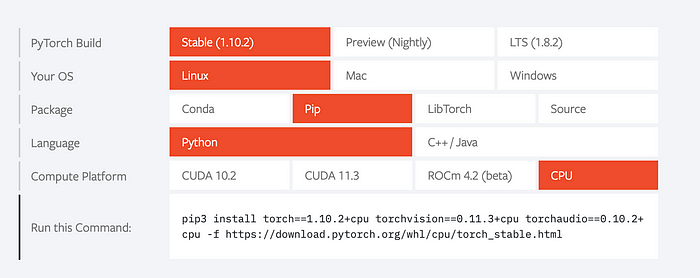

Install PyTorch

Visit their website here: https://pytorch.org/

If you are using a Digital Ocean Droplet, select Linux, Pip, Python and CPU in the installation selector on that page (standard Droplets have no GPU, so you need the CPU build).

Then copy and paste the install command:

```bash
pip install torch==1.10.2+cpu torchvision==0.11.3+cpu torchaudio==0.10.2+cpu -f https://download.pytorch.org/whl/cpu/torch_stable.html
```

If you are using a virtual environment, as we do in the video tutorial, use pip instead of the pip3 that the PyTorch instructions show (the command above already does this).
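Installing into the wrong interpreter is a common source of "module not found" errors. A minimal sketch for checking, from Python itself, that a virtual environment is active; it relies on the standard behaviour of venv (and virtualenv 20+), where sys.prefix points at the environment while sys.base_prefix points at the base installation:

```python
# Detect whether this interpreter is running inside a virtual environment,
# so that pip installs packages into the environment, not the system Python.
import sys


def in_virtualenv() -> bool:
    """True when sys.prefix differs from the base installation's prefix."""
    return sys.prefix != sys.base_prefix


if __name__ == "__main__":
    if in_virtualenv():
        print(f"Virtual environment active: {sys.prefix}")
    else:
        print("No virtual environment active; activate it before running pip.")
```

Running pip as python -m pip from inside the activated environment is another way to make sure packages land in the interpreter you will actually use.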

Install transformers and run the code

```bash
pip install transformers
```

The rest of the code is as follows:
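A minimal sketch of such a script, assuming the Transformers pipeline API and the EleutherAI/gpt-neo-1.3B model from the HuggingFace Hub; the function names, prompt handling, seed and sampling settings are illustrative choices, not taken from the video:

```python
# Text generation with GPT-Neo through the transformers pipeline.
# Assumes torch and transformers are installed and the machine has enough RAM.
from functools import lru_cache

# Swap for "EleutherAI/gpt-neo-2.7B" if your machine has the memory for it.
MODEL_NAME = "EleutherAI/gpt-neo-1.3B"


@lru_cache(maxsize=1)
def load_generator(model_name: str = MODEL_NAME):
    """Build the text-generation pipeline once and reuse it (loading is slow)."""
    # Imported here: the import is heavy and only needed when generating.
    from transformers import pipeline

    # The first call downloads the model weights (several GB) to a local cache.
    generator = pipeline('text-generation', model=model_name)
    return generator


def generate(prompt: str, max_new_tokens: int = 50, model_name: str = MODEL_NAME) -> str:
    """Return the prompt followed by the model's sampled continuation."""
    from transformers import set_seed

    set_seed(42)  # make sampling repeatable between runs
    generator = load_generator(model_name)
    outputs = generator(
        prompt,
        do_sample=True,   # sample rather than always taking the most likely token
        temperature=0.9,  # values below 1.0 make sampling a little less random
        max_new_tokens=max_new_tokens,
    )
    # One dict per generated sequence; "generated_text" includes the prompt.
    return outputs[0]["generated_text"]
```

For example, print(generate("Open-source language models are")) prints the prompt followed by up to 50 newly generated tokens. Caching the pipeline matters because building it reloads the whole model from disk.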

The code above may look deceptively simple, but a lot is happening behind the scenes: it will not run if the machine does not have enough memory or if you have skipped any of the prerequisite steps above.

You might get this response:

```
Killed
```

This response means the operating system ran out of memory and killed the process, so your machine does not have enough memory to run the model. You can either upgrade your machine or run a smaller model. An alternative model that needs much less memory is "distilgpt2"; it is much smaller and, of course, less capable.
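"Killed" comes from the operating system terminating the process, so it cannot be caught from inside Python; the practical fix is to choose a model that fits before loading it. A minimal sketch of that choice, assuming float32 weights (4 bytes per parameter), approximate parameter counts, and a hypothetical 2x headroom factor for loading overhead that is a conservative guess rather than a measured figure:

```python
# Pick the largest model whose estimated memory footprint fits in the given RAM.
from typing import Optional

BYTES_PER_PARAM = 4  # float32 weights, the default when loading on a CPU

# (HuggingFace model id, approximate parameter count), largest first.
CANDIDATES = [
    ("EleutherAI/gpt-neo-2.7B", 2.7e9),
    ("EleutherAI/gpt-neo-1.3B", 1.3e9),
    ("distilgpt2", 82e6),
]


def pick_model(available_ram_gb: float, headroom: float = 2.0) -> Optional[str]:
    """Return the largest candidate that fits, or None if none does.

    `headroom` multiplies the raw weight size to allow for loading overhead;
    the default of 2.0 is an assumption, not a measured value.
    """
    for model_id, num_params in CANDIDATES:
        needed_gb = num_params * BYTES_PER_PARAM / 1e9 * headroom
        if needed_gb <= available_ram_gb:
            return model_id
    return None


if __name__ == "__main__":
    for ram in (4, 16, 32):
        print(f"{ram} GB RAM -> {pick_model(ram)}")
```

With these defaults a 16GB machine gets the 1.3 billion model; lowering headroom lets you try the 2.7 billion model, which the testing notes above suggest may fit but was not tested. The returned id can be passed directly as the model argument to pipeline('text-generation', ...).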

To use a different model, replace the generator line in the code with this:

```python
generator = pipeline('text-generation', model='distilgpt2')
```

You are now ready to generate text with Python for free.