In this article, I will show you a simple way to split a big JSON file into smaller JSON files. It is based on my own experience.

For the final-year project of my undergraduate studies, my team of four worked on Twitter data. Our research needed a considerable number of tweets, so we used snscrape to collect them. This is a very good article providing instructions on how to use snscrape, and it's also the one I followed.

After collecting the tweets, we started preprocessing the data. That is where we hit a problem: loading a 4-5 GB file at once kept freezing my computer. This is not a problem on a high-performance machine, but if you don't have state-of-the-art hardware, it can become one. I tried a few different things, but none of them solved it.

Then I came across this simple Python code, which solved my problem. It is a very simple piece of code, but it can come in handy for anyone who has the same problem I did.

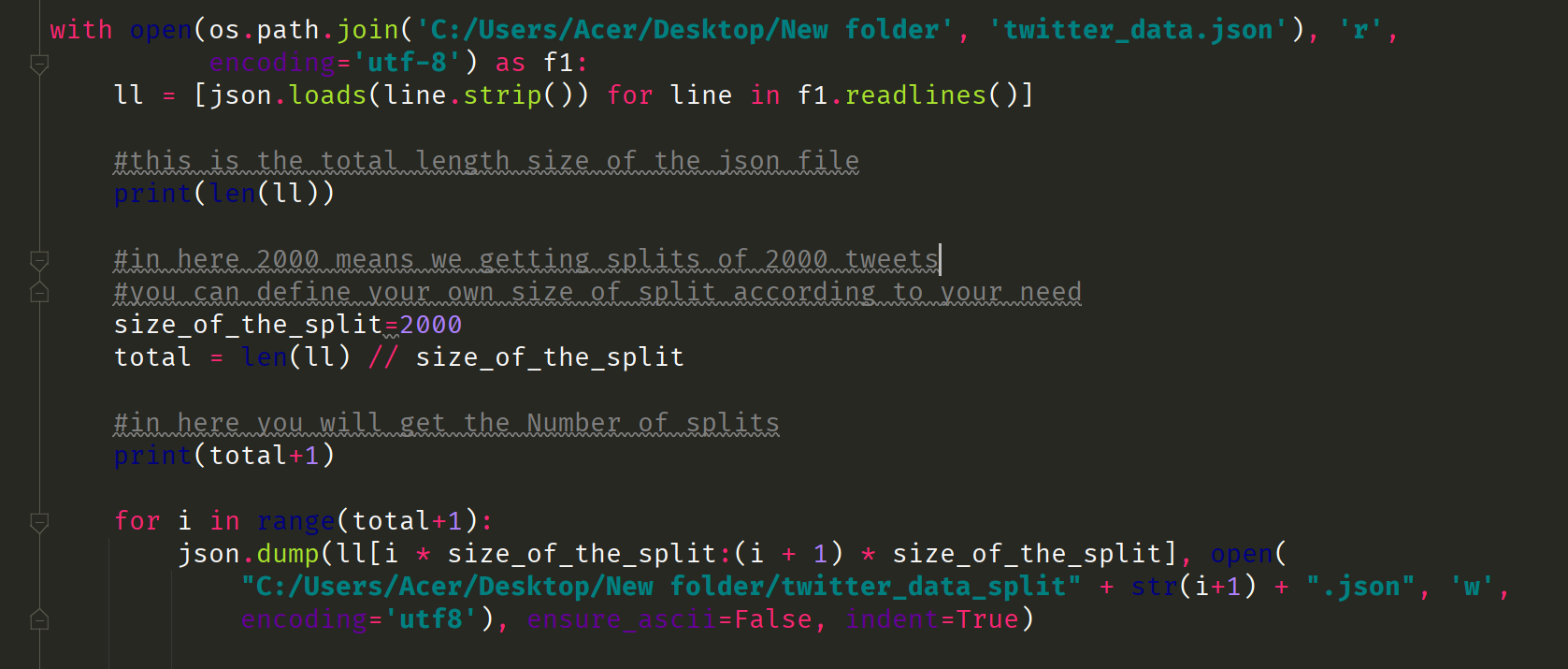

In this case, I used PyCharm as my IDE, but you can use any IDE you prefer. To use this code for your own problem, you only need to change two things: the file path of your big JSON file and the split size you want. In my case, I needed 2,000 tweets per split, so I set the split size to 2,000.

    import json
    import os

    # You need to add your own path here.
    folder = 'C:/Users/Acer/Desktop/New folder'

    # The input file must hold one JSON object (one tweet) per line.
    with open(os.path.join(folder, 'twitter_data.json'), 'r', encoding='utf-8') as f1:
        ll = [json.loads(line) for line in f1 if line.strip()]

    # This is the total number of tweets in the JSON file.
    print(len(ll))

    # Here, 2000 means each split holds 2,000 tweets.
    # You can define your own split size according to your need.
    size_of_the_split = 2000

    # Round up so the last, smaller split is counted and no empty
    # file is written when the total divides evenly.
    total = (len(ll) + size_of_the_split - 1) // size_of_the_split

    # Here you will get the number of splits.
    print(total)

    for i in range(total):
        out_path = os.path.join(folder, 'twitter_data_split' + str(i + 1) + '.json')
        with open(out_path, 'w', encoding='utf-8') as f2:
            json.dump(ll[i * size_of_the_split:(i + 1) * size_of_the_split], f2,
                      ensure_ascii=False, indent=1)

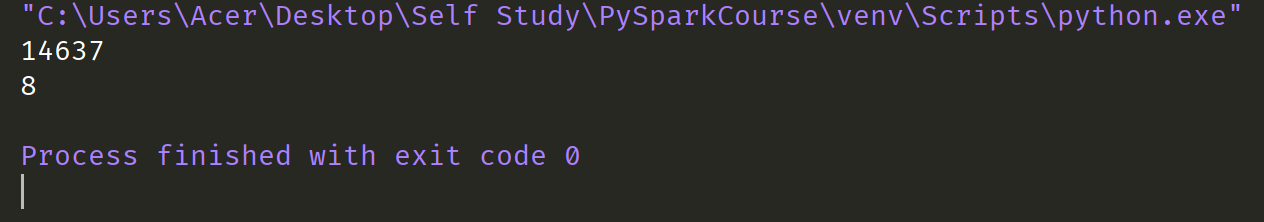

Then you just need to run the code, and the big JSON file is split into small JSON files. The total number of tweets in the big file and the number of splits are printed as output in your command prompt on Windows (I'm using Windows 10 Education).

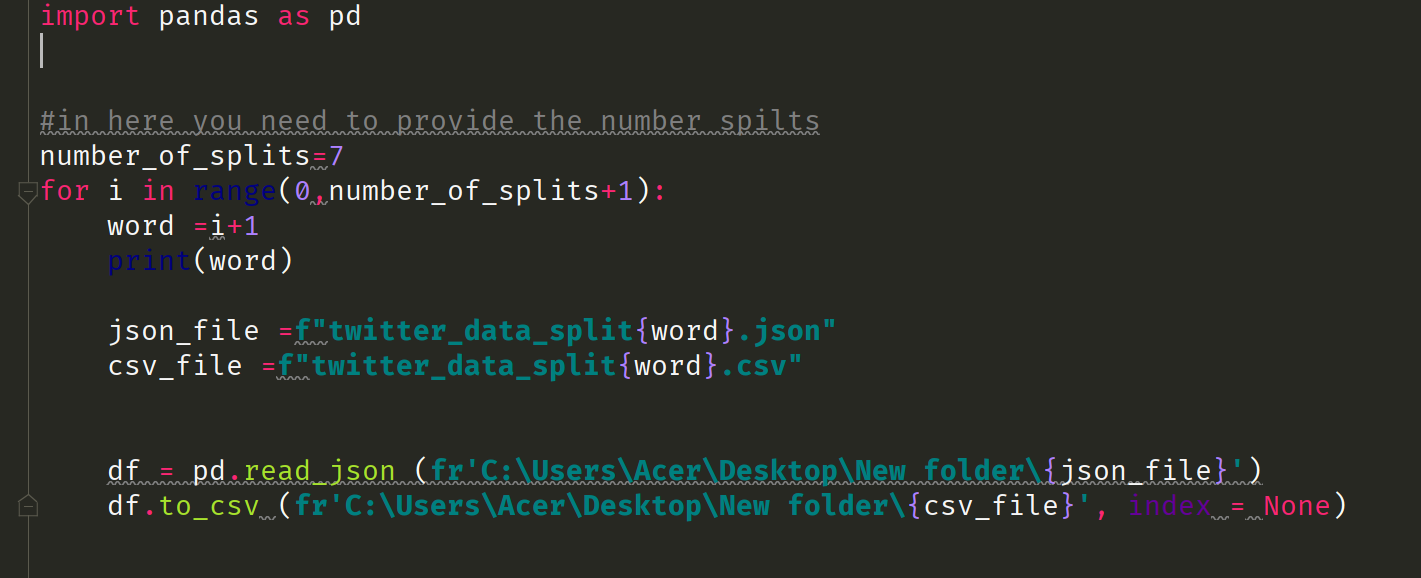
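
One thing worth noting: the code above still reads every tweet into a list before it starts writing splits, so a very large file can still strain memory. Below is a minimal sketch of a streaming variant, assuming the input holds one JSON object per line. It uses `itertools.islice` to read only one split's worth of records at a time. The function name `split_json_lines` and its parameters are hypothetical names chosen for illustration; this is not the code I originally used.

```python
import json
import os
from itertools import islice


def split_json_lines(input_path, output_dir, prefix, size_of_the_split=2000):
    """Split a one-object-per-line JSON file into numbered JSON array files.

    Returns the list of file paths that were written.
    """
    written = []
    with open(input_path, "r", encoding="utf-8") as source:
        # Lazy generator: a line is parsed only when islice asks for it,
        # and blank lines are skipped.
        records = (json.loads(line) for line in source if line.strip())
        part = 1
        while True:
            # Pull at most one split's worth of records into memory.
            chunk = list(islice(records, size_of_the_split))
            if not chunk:
                break
            out_path = os.path.join(output_dir, f"{prefix}{part}.json")
            with open(out_path, "w", encoding="utf-8") as target:
                json.dump(chunk, target, ensure_ascii=False, indent=1)
            written.append(out_path)
            part += 1
    return written
```

For example, calling `split_json_lines('C:/Users/Acer/Desktop/New folder/twitter_data.json', 'C:/Users/Acer/Desktop/New folder', 'twitter_data_split')` would write `twitter_data_split1.json`, `twitter_data_split2.json`, and so on. The trade-off is that the number of splits is only known once the loop finishes, since the file is never counted up front.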

After these steps, I needed to convert the files to CSV because we were going to build a machine learning model from the data. So, I created a simple JSON-to-CSV converter that converts all the JSON files in one run, instead of converting them one by one.

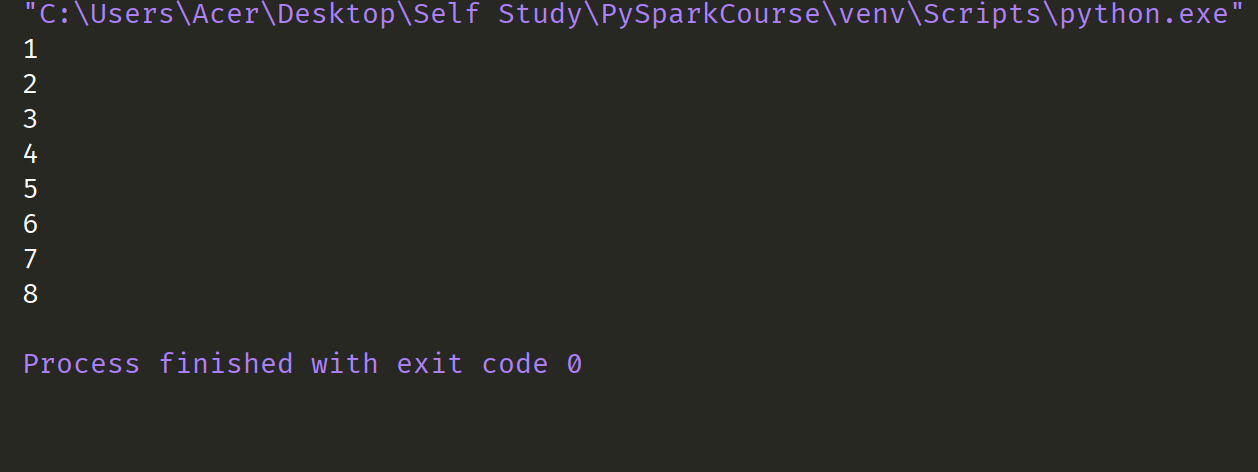

This is the JSON-to-CSV converter code. You need to provide the number of splits according to your requirement. In my work, the big JSON file was split into 8 files, so I provided 8 as the value. Then you simply run the code and you will get the CSV files from the JSON files.

    import os

    import pandas as pd

    # You need to add your own path here.
    folder = r'C:\Users\Acer\Desktop\New folder'

    # Here you need to provide the number of splits.
    number_of_splits = 8

    for word in range(1, number_of_splits + 1):
        print(word)
        json_file = f"twitter_data_split{word}.json"
        csv_file = f"twitter_data_split{word}.csv"

        df = pd.read_json(os.path.join(folder, json_file))
        df.to_csv(os.path.join(folder, csv_file), index=False)

Files after splitting and converting to CSV
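
If you would rather not count the split files by hand, the converter can look them up by file name instead. The following is only a sketch under a few assumptions: the split files follow the `twitter_data_split<number>.json` naming used above, each file holds a JSON array of tweet objects, and the standard library `csv` module is swapped in for pandas so the example runs without extra installs. The helper names `find_split_files` and `json_to_csv` are hypothetical.

```python
import csv
import glob
import json
import os
import re


def find_split_files(folder, prefix="twitter_data_split"):
    """Return the split JSON files in `folder`, ordered by their number."""
    pattern = os.path.join(glob.escape(folder), f"{prefix}*.json")
    number = re.compile(re.escape(prefix) + r"(\d+)\.json$")
    matches = []
    for path in glob.glob(pattern):
        found = number.search(os.path.basename(path))
        if found:
            # Sort on the integer so split10 comes after split2.
            matches.append((int(found.group(1)), path))
    return [path for _, path in sorted(matches)]


def json_to_csv(json_path):
    """Write a CSV file next to `json_path` and return the CSV path."""
    with open(json_path, "r", encoding="utf-8") as source:
        records = json.load(source)  # a list of dicts, one per tweet
    # Collect every key in first-seen order so no column is dropped.
    columns = list(dict.fromkeys(key for record in records for key in record))
    csv_path = os.path.splitext(json_path)[0] + ".csv"
    with open(csv_path, "w", encoding="utf-8", newline="") as target:
        writer = csv.DictWriter(target, fieldnames=columns)
        writer.writeheader()
        for record in records:
            # Nested values (dicts, lists) are stored as JSON text.
            writer.writerow({
                key: (json.dumps(value, ensure_ascii=False)
                      if isinstance(value, (dict, list)) else value)
                for key, value in record.items()
            })
    return csv_path
```

With these two helpers, a loop such as `for path in find_split_files(r'C:\Users\Acer\Desktop\New folder'): json_to_csv(path)` would convert every split it finds, however many there are. Note that, unlike pandas, this version leaves nested fields as JSON strings inside a single column.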


Conclusion

This is not a deeply technical article, but as I mentioned earlier, I think it will come in handy for anyone who has the same problem I did. I searched the internet and read answers on Stack Overflow to put this code together, and I would like to thank the people who wrote them for their help.

This article has explored an easy way to split JSON files, and I hope it will help you complete your work more easily.

Thank you for reading my article. I hope to write more articles on new and trending topics in the future, so keep an eye on my account if you liked what you read today!

References:

https://github.com/JustAnotherArchivist/snscrape

Split JSON file in equal/smaller parts with Python, stackoverflow.com
