In the realm of cloud computing, Amazon Web Services (AWS) stands as a giant, offering a plethora of services to cater to diverse business needs. Among the fundamental services that AWS provides are load balancers, a critical component for ensuring high availability, scalability, and reliability of applications. In this blog post, we'll embark on a journey to demystify AWS load balancers, exploring their essential concepts and features.

THE ROLE OF LOAD BALANCERS IN AWS

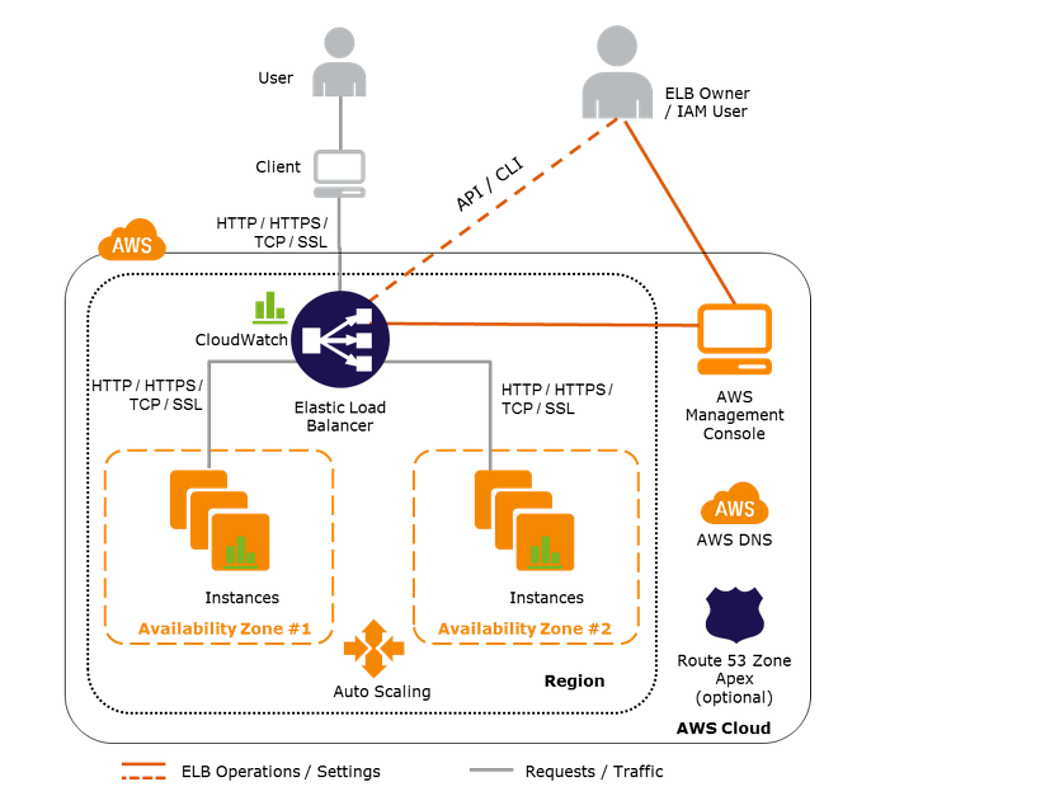

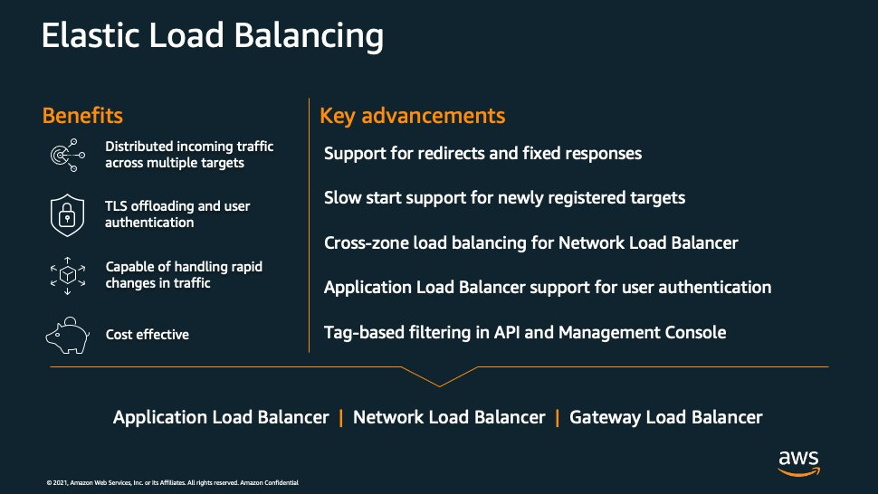

Load balancers distribute incoming network traffic across multiple targets, such as EC2 instances, containers, or IP addresses, so that no single target is overwhelmed. This is particularly important for applications hosted in the AWS cloud, where scalability and high availability are paramount. On AWS, load balancers are provided by the Elastic Load Balancing (ELB) service, which falls under the Networking & Content Delivery category of services.

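From a networking standpoint, an AWS load balancer is either internet-facing (public), with a DNS name that resolves to public IP addresses reachable from the internet, or internal (private), with a DNS name that resolves to private IP addresses reachable only from within your VPC and networks connected to it. Let's break down the key concepts and features of AWS load balancers.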

TYPES OF AWS LOAD BALANCERS

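AWS offers several types of load balancers, each catering to specific use cases. The three current-generation types are covered below; a fourth, the Classic Load Balancer, is a previous-generation option kept mainly for legacy applications.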

APPLICATION LOAD BALANCER

Layer 7 Load Balancer: The Application Load Balancer (ALB) operates at the application layer (Layer 7) of the OSI model. This means it can inspect the content of HTTP and HTTPS requests and make routing decisions based on it, making it highly versatile for handling a wide range of applications.

Content-Based Routing: ALB's most powerful routing feature is content-based routing: you can direct incoming requests to different targets based on what the request contains. For example, you could route requests to different microservices or backend servers based on the URL path, host name, HTTP headers, or query parameters. This is particularly valuable in microservices architectures, where different services are responsible for different functionality.
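To make content-based routing concrete, here is a minimal Python sketch of how a listener could evaluate its rules: rules are checked in priority order (lowest number first), the first rule whose conditions all match picks the target group, and if none match the listener's default action applies. It models only path and host conditions with `*` and `?` wildcards; the `Rule` class, the `route` function, and the target group names are hypothetical, not the ELB API.

```python
import re
from dataclasses import dataclass
from typing import Optional


def wildcard_to_regex(pattern: str, flags: int = 0) -> "re.Pattern[str]":
    """Turn a pattern using '*' (any run of characters) and '?' (one character) into an anchored regex."""
    escaped = re.escape(pattern)
    body = escaped.replace(r"\*", ".*").replace(r"\?", ".")
    return re.compile(f"^{body}$", flags)


@dataclass
class Rule:
    priority: int                        # lower numbers are evaluated first
    target_group: str                    # hypothetical target group name
    path_pattern: Optional[str] = None   # e.g. "/orders/*"
    host_pattern: Optional[str] = None   # e.g. "api.example.com"

    def matches(self, host: str, path: str) -> bool:
        # Every condition that is set must match (conditions are ANDed).
        if self.path_pattern and not wildcard_to_regex(self.path_pattern).match(path):
            return False
        # This sketch compares host names case-insensitively.
        if self.host_pattern and not wildcard_to_regex(self.host_pattern, re.IGNORECASE).match(host):
            return False
        return True


def route(rules: list, default_target_group: str, host: str, path: str) -> str:
    """Return the target group of the first matching rule, else the default action's."""
    for rule in sorted(rules, key=lambda r: r.priority):
        if rule.matches(host, path):
            return rule.target_group
    return default_target_group
```

For example, with a priority-10 rule sending `/orders/*` to `orders-tg` and a priority-20 rule sending host `api.example.com` to `api-tg`, a request for `api.example.com/orders/1` goes to `orders-tg`, because the lower-numbered rule is evaluated first.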

Integrated with WebSocket: ALB seamlessly supports WebSocket-based applications. WebSockets provide full-duplex communication channels over a single TCP connection. With ALB, you can route WebSocket traffic to the appropriate backend servers or services, allowing real-time and interactive communication in your applications.

ALB's capability to operate at the application layer and perform content-based routing makes it an excellent choice for modern, complex web applications and microservices, where flexibility and intelligent routing are key. It's an essential tool for ensuring your applications are responsive, scalable, and able to handle different types of traffic effectively.

USE CASES

Web Application Load Balancing: ALB is a popular choice for load-balancing web applications. It excels at routing HTTP and HTTPS traffic to different target groups based on content within the request, such as URL paths or host headers. If you have a web application with multiple components, microservices, or different backend services, ALB ensures that incoming web traffic is distributed to the appropriate targets, providing high availability and efficient resource utilization.

Microservices Architectures: ALB is well-suited for microservices-based applications where you have numerous small, independently deployable services. In a microservices architecture, ALB's content-based routing allows you to route requests to specific microservices based on URL patterns or host headers. This enhances the scalability, flexibility, and maintainability of microservices-based applications.

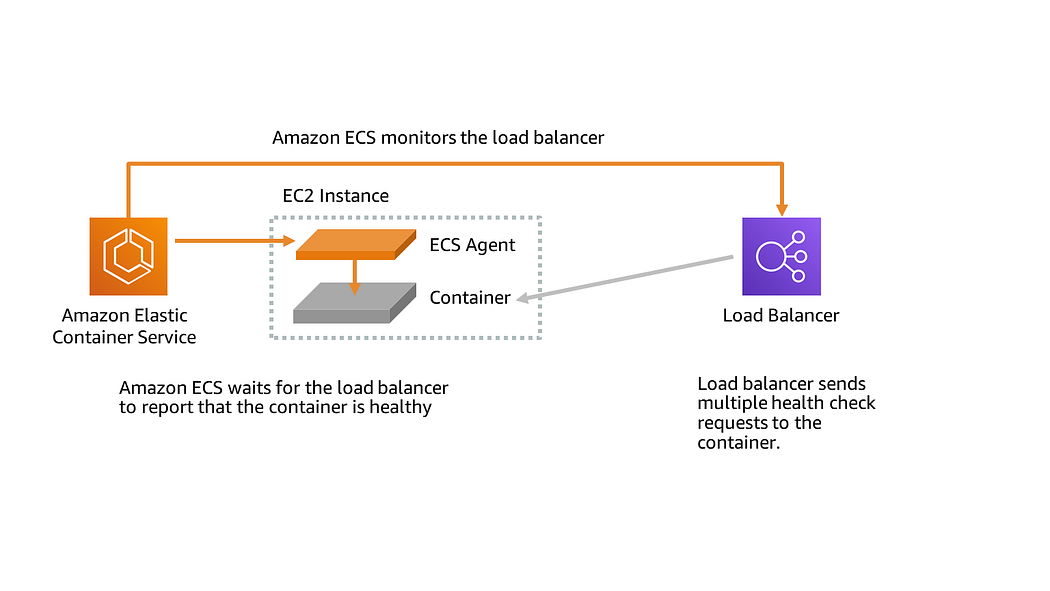

Containerized Applications with Amazon ECS or EKS: ALB integrates with Amazon Elastic Container Service (ECS) and Amazon Elastic Kubernetes Service (EKS). When an ECS service is attached to an ALB target group, ECS registers new tasks with the target group and deregisters stopped ones automatically; on EKS, the AWS Load Balancer Controller does the same for pods. Incoming traffic is therefore spread across the containers that are currently running, even as they scale in and out.

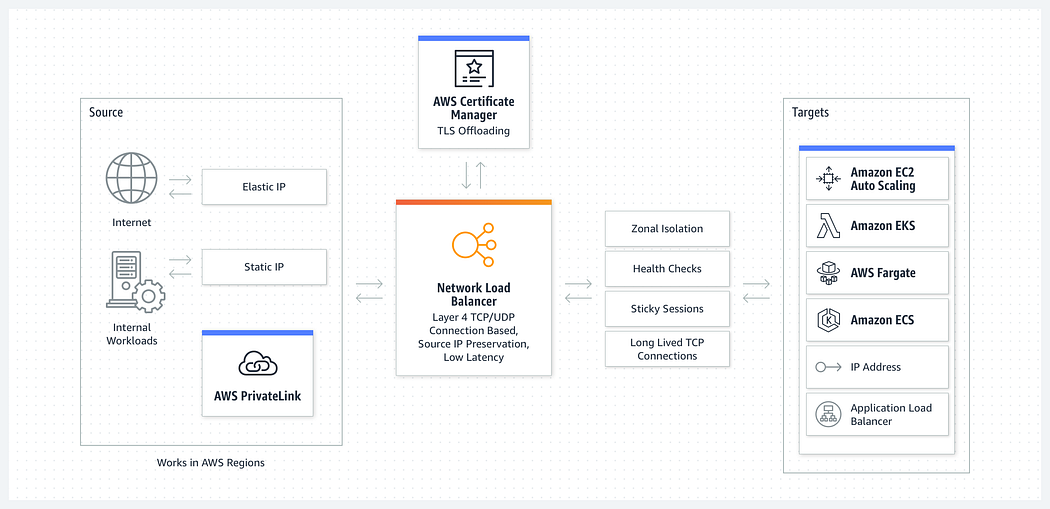

NETWORK LOAD BALANCER

Layer 4 Load Balancer: The Network Load Balancer (NLB) operates at the transport layer (Layer 4) of the OSI model. Unlike Layer 7 load balancers such as ALB, NLB doesn't inspect the content of requests. Instead, it routes each connection based on network and transport information: for TCP traffic, AWS documents that it selects a target using a flow hash of the protocol, the source and destination IP addresses and ports, and the TCP sequence number. This makes NLB an excellent choice for applications that require high throughput and low latency.
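Distributing traffic by connection rather than by request content can be sketched as a flow hash: hash the fields that identify a connection and map the result to a target, so every packet of that connection reaches the same target. This is a simplified illustration, not NLB's implementation; SHA-256 stands in for whatever hash AWS actually uses, and the function and parameter names are hypothetical.

```python
import hashlib
from typing import Optional, Sequence


def flow_hash_target(
    targets: Sequence[str],
    protocol: str,
    src_ip: str,
    src_port: int,
    dst_ip: str,
    dst_port: int,
    tcp_seq: Optional[int] = None,
) -> str:
    """Pick a target by hashing the fields that identify a flow.

    SHA-256 is a stand-in; AWS does not publish NLB's actual hash function.
    For TCP, pass the connection's initial sequence number so that every
    packet of that connection hashes to the same target.
    """
    key = f"{protocol}|{src_ip}|{src_port}|{dst_ip}|{dst_port}"
    if tcp_seq is not None:
        key += f"|{tcp_seq}"
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return targets[int.from_bytes(digest[:8], "big") % len(targets)]
```

Including the sequence number in the key means repeated connections from the same client can land on different targets, while all packets within one connection stay together.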

Static IP Addresses: NLB provides a static IP address in each Availability Zone it is enabled in, and for an internet-facing NLB you can assign your own Elastic IP addresses instead. Because these addresses don't change over time, you can add them to firewall rules or access control lists, which makes NLB suitable for applications that rely on IP whitelisting for security or access control.

NLB's ability to operate at the transport layer makes it ideal for applications with high network traffic requirements, such as gaming applications, real-time video streaming, or any application where maintaining low latency and high throughput is essential. Additionally, the static IP feature adds a layer of stability and security for applications that depend on access control lists.

USE CASES

High-Performance Workloads: NLB is an excellent choice for workloads that demand high performance and low latency, such as real-time applications, gaming, and video streaming services. If your application requires rapid data transfer and minimal delay, NLB can effectively distribute traffic to backend instances while minimizing the impact on response times.

TCP/UDP Load Balancing: NLB operates at Layer 4 (transport layer) of the OSI model, making it ideal for distributing TCP and UDP traffic. Applications that rely on non-HTTP protocols, such as DNS servers, VoIP services, and online gaming, benefit from NLB's ability to evenly distribute TCP and UDP traffic to backend instances or targets.

Static IP Addresses and IP Whitelisting: NLB provides static IP addresses for the front end, which makes it suitable for applications that rely on IP whitelisting for security or access control. If your application requires consistent and predictable IP addresses, such as for integration with external services or security compliance, NLB's static IP addresses offer stability and control.

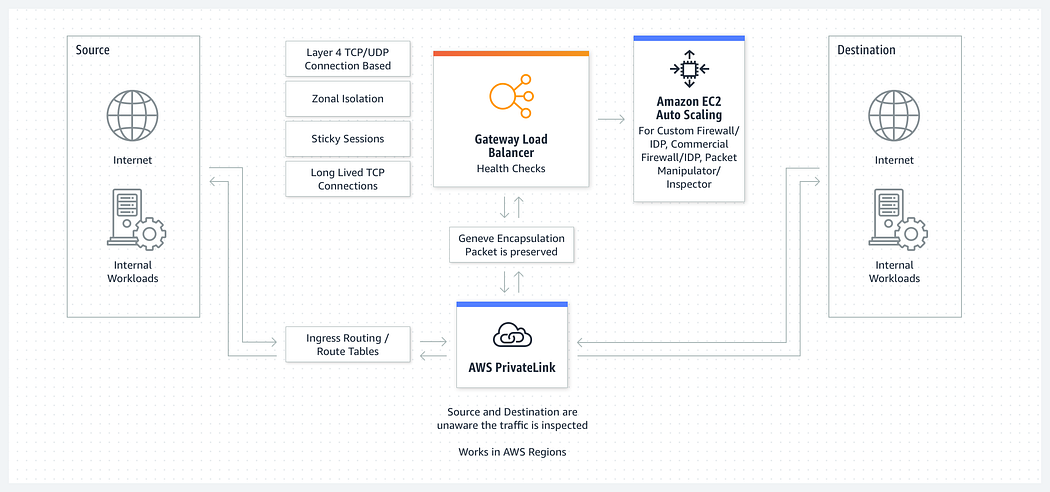

GATEWAY LOAD BALANCER

Transparent Traffic Inspection: Unlike ALB and NLB, which distribute traffic to your application targets, the Gateway Load Balancer (GWLB) distributes traffic to a fleet of virtual network appliances, such as firewalls, intrusion detection and prevention systems, and deep packet inspection tools. It operates at Layer 3 (the network layer) and acts as a transparent "bump in the wire": you insert it into a traffic path by pointing VPC route table entries at a Gateway Load Balancer endpoint, and it can inspect inbound, outbound, and VPC-to-VPC traffic.

High Availability: The Gateway Load Balancer is a fully managed service that scales automatically and runs health checks against the appliances, sending traffic only to healthy ones. AWS takes care of the operational aspects, so you can focus on your appliance policies.

Security and Compliance: Because traffic is steered through your security appliances before it reaches its destination, the Gateway Load Balancer helps you enforce consistent inspection for security and compliance purposes.

Simplified Management: A single fleet of appliances behind one Gateway Load Balancer, typically in a dedicated security VPC, can serve many application VPCs through Gateway Load Balancer endpoints, which avoids deploying and managing separate appliances in every VPC.

Flow Stickiness and Cross-Zone Load Balancing: The Gateway Load Balancer keeps all packets of a flow, in both directions, on the same appliance, so stateful firewalls see the whole connection. It is a regional service: it can optionally spread traffic across appliances in all enabled Availability Zones (cross-zone load balancing), but it does not balance traffic across AWS Regions.
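Appliances behind a Gateway Load Balancer receive each original packet wrapped in a GENEVE header (RFC 8926), sent over UDP port 6081, so the appliance has to unwrap it. The Python sketch below parses the 8-byte GENEVE base header from a UDP payload and returns the variable-length options as raw bytes; GWLB places its own metadata in those options, which this sketch does not decode. It is an illustration of the encapsulation format, not AWS or vendor appliance code, and the names are hypothetical.

```python
import struct
from dataclasses import dataclass

GENEVE_UDP_PORT = 6081  # UDP port GWLB uses to exchange traffic with appliances


@dataclass
class GeneveHeader:
    version: int
    options_length: int  # in bytes
    oam: bool            # O bit: control (OAM) packet
    critical: bool       # C bit: critical options present
    protocol_type: int   # EtherType of the inner payload
    vni: int             # 24-bit Virtual Network Identifier


def parse_geneve(payload: bytes):
    """Split a GENEVE UDP payload into (header, raw options, inner packet)."""
    if len(payload) < 8:
        raise ValueError("GENEVE base header is 8 bytes long")
    ver_optlen, flags, protocol_type, vni_and_reserved = struct.unpack("!BBHI", payload[:8])
    options_length = (ver_optlen & 0x3F) * 4  # Opt Len counts 4-byte words
    if len(payload) < 8 + options_length:
        raise ValueError("GENEVE options are truncated")
    header = GeneveHeader(
        version=ver_optlen >> 6,
        options_length=options_length,
        oam=bool(flags & 0x80),
        critical=bool(flags & 0x40),
        protocol_type=protocol_type,
        vni=vni_and_reserved >> 8,  # low 8 bits are reserved
    )
    options = payload[8:8 + options_length]  # metadata TLVs; not decoded here
    inner_packet = payload[8 + options_length:]
    return header, options, inner_packet
```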

USE CASES

Centralized Firewalling: Organizations with many VPCs can route inbound and internet-bound traffic through one auto-scaled fleet of firewall appliances in a shared security VPC, instead of running separate firewalls in each VPC.

Intrusion Detection and Prevention: Traffic can be steered through IDS/IPS appliances before it reaches your workloads, with the Gateway Load Balancer health-checking the appliances and moving flows away from any that fail.

Scaling Third-Party Virtual Appliances: Virtual appliances from security vendors (many are available in AWS Marketplace) can be scaled horizontally behind a Gateway Load Balancer, so inspection capacity grows with traffic.

Multi-region failover and geography-based routing, sometimes described as "global load balancing", are not Gateway Load Balancer features; on AWS those scenarios are typically handled by Amazon Route 53 routing policies or AWS Global Accelerator.

LOAD BALANCING ALGORITHMS

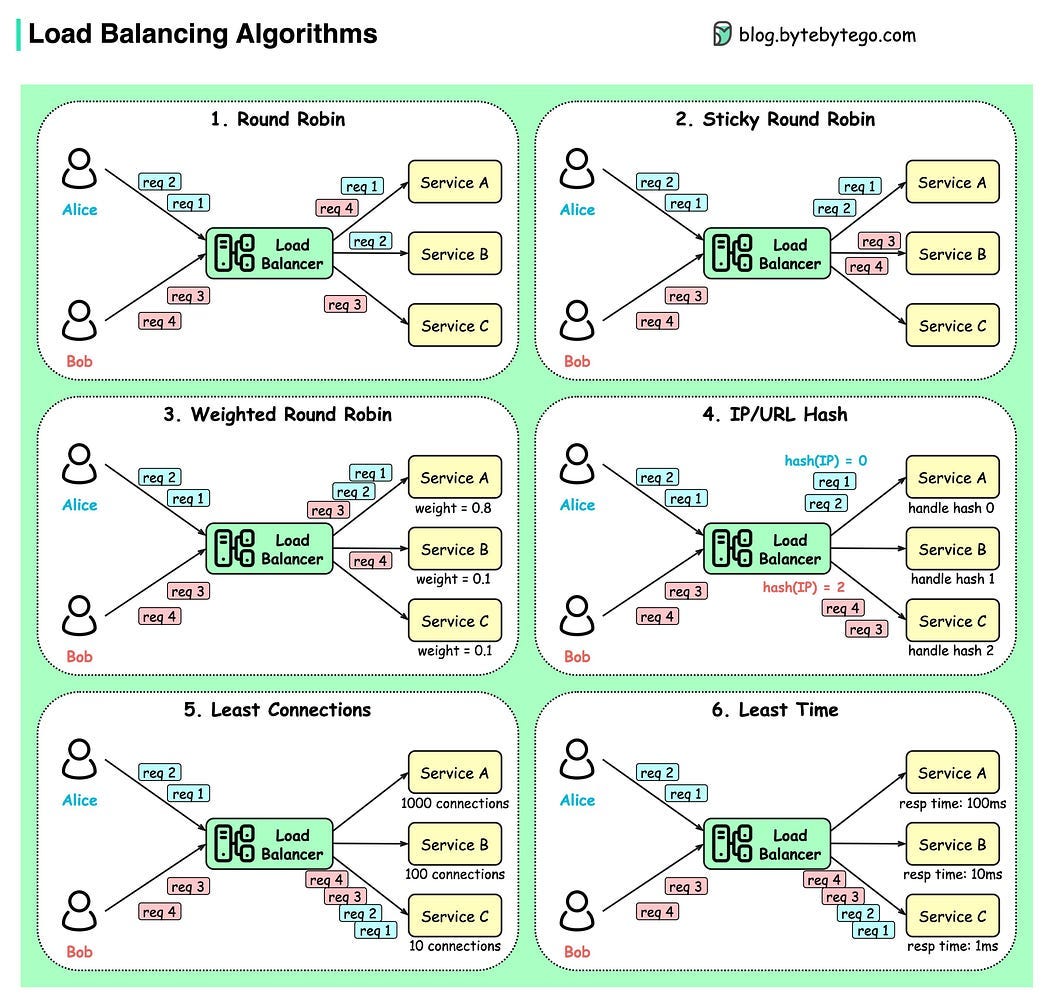

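A load balancing algorithm is the set of rules a load balancer follows to choose a server for each client request. Load-balancing algorithms fall into two main categories: static and dynamic. The methods below are general techniques; on AWS specifically, an ALB target group uses round robin by default and can be switched to least outstanding requests, while an NLB selects targets with a flow hash.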

STATIC LOAD BALANCING

Static load balancing algorithms follow fixed rules and are independent of the current server state. The following are examples of static load balancing.

ROUND-ROBIN METHOD

Servers have IP addresses that tell clients where to send requests. Because an IP address is a long number that is hard to remember, the Domain Name System (DNS) maps human-readable domain names to IP addresses. When you enter aws.amazon.com into your browser, the browser first asks a DNS name server for that name, and the name server returns an IP address to connect to.

In DNS round robin, an authoritative name server does the load balancing instead of specialized hardware or software: it returns the IP addresses of the servers in the server farm in rotating order, so successive clients are sent to different servers.
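A toy version of DNS round robin: the name server below rotates its list of addresses after every query, so a client that simply takes the first address lands on a different server each time. The class, the queried name, and the addresses (from the 192.0.2.0/24 documentation range) are illustrative; in practice resolvers cache answers for the record's TTL, so real-world rotation is coarser than this.

```python
from collections import deque


class RoundRobinNameServer:
    """Toy authoritative name server that rotates the addresses it returns.

    It serves a single hypothetical name, so the queried name is ignored.
    """

    def __init__(self, addresses):
        self._records = deque(addresses)

    def resolve(self, name: str) -> list:
        answer = list(self._records)
        self._records.rotate(-1)  # the next query's answer starts one server later
        return answer


ns = RoundRobinNameServer(["192.0.2.10", "192.0.2.11", "192.0.2.12"])
# A simple client connects to the first address in each answer.
first_choices = [ns.resolve("app.example.com")[0] for _ in range(4)]
print(first_choices)  # ['192.0.2.10', '192.0.2.11', '192.0.2.12', '192.0.2.10']
```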

WEIGHTED ROUND-ROBIN METHOD

In weighted round-robin load balancing, you can assign different weights to each server based on their priority or capacity. Servers with higher weights will receive more incoming application traffic from the name server.
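The simplest weighted round robin repeats each server as many times as its weight, which sends bursts of consecutive requests to heavy servers. The Python sketch below uses the smooth weighted round-robin variant instead: each server still gets a share proportional to its weight in every cycle, but the picks are interleaved. The server names and weights are hypothetical.

```python
class SmoothWeightedRoundRobin:
    """Weighted round robin that interleaves picks instead of sending bursts."""

    def __init__(self, weights: dict):
        self.weights = dict(weights)
        self.current = {server: 0 for server in self.weights}
        self.total = sum(self.weights.values())

    def next_server(self) -> str:
        # Every server earns its weight; the highest scorer is chosen and pays back the total.
        for server, weight in self.weights.items():
            self.current[server] += weight
        chosen = max(self.current, key=self.current.get)  # ties go to the earlier server
        self.current[chosen] -= self.total
        return chosen


wrr = SmoothWeightedRoundRobin({"a": 5, "b": 1, "c": 1})
picks = [wrr.next_server() for _ in range(7)]
print(picks)  # ['a', 'a', 'b', 'a', 'c', 'a', 'a']
```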

IP HASH METHOD

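In the IP hash method, the load balancer applies a mathematical computation, called hashing, to the client's IP address. The hash converts the IP address to a number, which is then mapped to one of the servers. Because the same IP address always produces the same number, requests from a given client keep landing on the same server as long as the server pool doesn't change.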
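A minimal IP hash sketch in Python, assuming a fixed list of hypothetical servers. It hashes the packed address bytes with SHA-256 and takes the result modulo the number of servers; real load balancers may use different hash functions.

```python
import hashlib
import ipaddress


def pick_server_by_ip(client_ip: str, servers: list) -> str:
    """Map a client IP to a server via a stable hash of the address bytes."""
    packed = ipaddress.ip_address(client_ip).packed  # 4 bytes (IPv4) or 16 bytes (IPv6)
    # hashlib gives the same result in every process; Python's built-in hash()
    # of str and bytes is randomized per process, so it would not be stable.
    digest = hashlib.sha256(packed).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]
```

Because the index is taken modulo the number of servers, adding or removing a server remaps most clients; consistent hashing is the usual remedy when that matters.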

DYNAMIC LOAD BALANCING

Dynamic load balancing algorithms examine the current state of the servers before distributing traffic. The following are some examples of dynamic load-balancing algorithms.

LEAST CONNECTION METHOD

A connection is an open communication channel between a client and a server. When the client sends its first request, the client and server perform a handshake and establish an active connection. In the least connection method, the load balancer checks which servers have the fewest active connections and sends new traffic to those servers. This method assumes that every connection requires roughly the same processing effort and that all servers have similar capacity.
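The least connection method requires the load balancer to track open connections per server. A minimal in-memory sketch in Python, with hypothetical class and method names:

```python
class LeastConnectionBalancer:
    """Tracks open connections per server and hands new ones to the least busy."""

    def __init__(self, servers):
        self.active = {server: 0 for server in servers}

    def acquire(self) -> str:
        # Fewest active connections wins; ties go to the earlier server.
        server = min(self.active, key=self.active.get)
        self.active[server] += 1
        return server

    def release(self, server: str) -> None:
        # Call when the connection closes.
        self.active[server] -= 1
```

Calling `acquire()` three times on servers `a` and `b` yields `a`, `b`, `a`; after `release("b")`, the next `acquire()` returns `b`.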

WEIGHTED LEAST CONNECTION METHOD

Weighted least connection algorithms assume that some servers can handle more active connections than others. You assign each server a weight that reflects its capacity, and the load balancer sends each new client request to the server with the lowest ratio of active connections to weight.
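Weighted least connection changes only the comparison: divide each server's active connections by its weight and pick the smallest ratio. A Python sketch with hypothetical inputs; treating a weight of 0 as "takes no traffic" is a convention of this sketch.

```python
from typing import Dict, Tuple


def pick_weighted_least_connection(servers: Dict[str, Tuple[int, int]]) -> str:
    """servers maps a name to (active_connections, weight); higher weight = more capacity."""
    eligible = {name: cw for name, cw in servers.items() if cw[1] > 0}  # skip weight 0
    # Lowest connections per unit of capacity wins.
    return min(eligible, key=lambda name: eligible[name][0] / eligible[name][1])
```

With `small` at 2 connections and weight 1 (ratio 2.0) and `large` at 3 connections and weight 3 (ratio 1.0), the next request goes to `large` even though it already has more connections.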

LEAST RESPONSE TIME METHOD

The response time is the total time a server takes to process an incoming request and send a response. The least response time method combines each server's response time with its number of active connections to pick the best server, aiming to give every user faster service.
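Implementations differ in how they combine response time and connection count. The Python sketch below assumes one simple, hypothetical rule: multiply a server's average response time by its active connections plus one, and pick the lowest score.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass
class ServerStats:
    avg_response_ms: float   # e.g. a moving average of recent response times
    active_connections: int


def response_score(stats: ServerStats) -> float:
    # Hypothetical scoring rule: expected wait grows with response time and with
    # queued work; the +1 keeps an idle server's response time in the score.
    return stats.avg_response_ms * (stats.active_connections + 1)


def pick_least_response_time(servers: Dict[str, ServerStats]) -> str:
    return min(servers, key=lambda name: response_score(servers[name]))
```

A server averaging 120 ms with 1 open connection scores 240, while one averaging 50 ms with 4 open connections scores 250, so the slower but less busy server wins this round.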

RESOURCE-BASED METHOD

In the resource-based method, load balancers distribute traffic by analyzing the current server load. Specialized software called an agent runs on each server and calculates usage of server resources, such as its computing capacity and memory. Then, the load balancer checks the agent for sufficient free resources before distributing traffic to that server.
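A sketch of the load balancer's side of the resource-based method, assuming each agent reports free CPU (as a fraction) and free memory. The report format and the 20% CPU / 512 MB thresholds are hypothetical; real agents and thresholds vary by product.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class AgentReport:
    cpu_free: float    # fraction of CPU currently idle, 0.0 to 1.0
    mem_free_mb: int   # free memory reported by the agent


def pick_by_resources(
    reports: Dict[str, AgentReport],
    min_cpu_free: float = 0.2,
    min_mem_free_mb: int = 512,
) -> Optional[str]:
    """Choose among servers whose agents report enough headroom; None if none qualify."""
    eligible = {
        name: r for name, r in reports.items()
        if r.cpu_free >= min_cpu_free and r.mem_free_mb >= min_mem_free_mb
    }
    if not eligible:
        return None
    # Among qualifying servers, prefer the one with the most idle CPU.
    return max(eligible, key=lambda name: eligible[name].cpu_free)
```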

KEY FEATURES OF AWS LOAD BALANCERS

Now that we've covered the types of load balancers, let's explore their essential features:

AUTO-SCALING INTEGRATION

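When you attach an EC2 Auto Scaling group to a load balancer's target group, instances the group launches are registered with the load balancer automatically and terminated instances are deregistered, so capacity can grow and shrink with traffic while the load balancer keeps distributing requests across whatever instances are running.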

HEALTH CHECKS

Load balancers monitor the health of registered instances and automatically route traffic away from unhealthy instances.

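ZONAL AND CROSS-ZONE LOAD BALANCING

A load balancer has a node in each Availability Zone you enable. With cross-zone load balancing turned on, each node distributes traffic across the registered targets in all enabled Availability Zones; with it turned off, each node sends traffic only to targets in its own Availability Zone. Cross-zone load balancing is always on at the load balancer level for an ALB (it can be turned off per target group) and is off by default for Network and Gateway Load Balancers.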
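A quick calculation shows why the setting matters when Availability Zones hold different numbers of targets. The Python function below estimates the traffic share of each individual target, assuming clients spread their requests evenly across the load balancer's zonal nodes (for example via DNS) and every zone has at least one healthy target; the zone names are placeholders.

```python
def per_target_share(targets_per_zone: dict, cross_zone: bool) -> dict:
    """Fraction of all traffic that each individual target in a zone receives.

    Assumes clients spread requests evenly across the load balancer's zonal
    nodes and that every zone has at least one healthy target.
    """
    if cross_zone:
        # Every node spreads its traffic over all targets in all zones.
        share = 1 / sum(targets_per_zone.values())
        return {zone: share for zone in targets_per_zone}
    # Each node keeps its traffic inside its own zone.
    zone_share = 1 / len(targets_per_zone)
    return {zone: zone_share / count for zone, count in targets_per_zone.items()}


print(per_target_share({"zone-a": 2, "zone-b": 8}, cross_zone=True))   # {'zone-a': 0.1, 'zone-b': 0.1}
print(per_target_share({"zone-a": 2, "zone-b": 8}, cross_zone=False))  # {'zone-a': 0.25, 'zone-b': 0.0625}
```

With 2 targets in one zone and 8 in the other, cross-zone load balancing gives every target 10% of the traffic; without it, each of the 2 targets in the smaller zone carries 25%, four times the load of a target in the larger zone.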

SECURITY AND SSL/TLS TERMINATION

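Load balancers can terminate SSL/TLS connections, decrypting traffic at the load balancer so your targets don't have to, while still securing communication with clients. An ALB does this on HTTPS listeners and an NLB on TLS listeners, typically using certificates from AWS Certificate Manager (ACM); traffic to the targets can be re-encrypted if you need end-to-end encryption.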

STICKY SESSIONS

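For applications that keep session state on the server, load balancers support sticky sessions (session affinity), which send a given user's requests to the same target. An ALB implements this with cookies, either one it generates itself or one your application sets, while an NLB can use the client's source IP address.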
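A minimal Python sketch of duration-based, cookie-style stickiness, assuming a hypothetical cookie name and a plain `target|expiry` cookie value. A real ALB sets its own cookie, named AWSALB, whose value is opaque; the one-day default duration and round-robin fallback here are choices of this sketch.

```python
import itertools
import time
from typing import Dict, List, Optional, Tuple

COOKIE_NAME = "lb-sticky"  # hypothetical; a real ALB uses its own opaque AWSALB cookie


class StickyBalancer:
    def __init__(self, targets: List[str], duration_s: float = 86400.0):
        self.targets = list(targets)
        self.duration_s = duration_s
        self._round_robin = itertools.cycle(self.targets)

    def route(self, cookies: Dict[str, str], now: Optional[float] = None) -> Tuple[str, Dict[str, str]]:
        """Return (target, cookies to set on the response)."""
        now = time.time() if now is None else now
        target, _, expires = cookies.get(COOKIE_NAME, "").partition("|")
        try:
            still_valid = float(expires) > now
        except ValueError:  # missing or malformed cookie
            still_valid = False
        if still_valid and target in self.targets:
            return target, {}  # sticky hit: same target, no new cookie needed
        target = next(self._round_robin)  # otherwise pick normally and pin the client
        return target, {COOKIE_NAME: f"{target}|{now + self.duration_s}"}
```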

ACCESS LOGS AND MONITORING

Load balancers can write access logs (disabled by default) to an Amazon S3 bucket and publish metrics to Amazon CloudWatch for detailed monitoring and troubleshooting.
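ALB access log entries are single lines of space-separated fields, some of them wrapped in double quotes. The Python sketch below maps the first fourteen fields by name, assuming the field order given in the Elastic Load Balancing documentation for ALB access logs (check it for the full, current list); the field and helper names are hypothetical and later fields are ignored.

```python
import re
from typing import Dict

# First fourteen fields of an ALB access log entry, in documented order;
# later fields are ignored here.
ALB_LOG_FIELDS = [
    "type", "time", "elb", "client_port", "target_port",
    "request_processing_time", "target_processing_time", "response_processing_time",
    "elb_status_code", "target_status_code", "received_bytes", "sent_bytes",
    "request", "user_agent",
]
_TOKEN = re.compile(r'"[^"]*"|\S+')  # a quoted string, or a run of non-spaces


def parse_alb_log_line(line: str) -> Dict[str, str]:
    tokens = [
        t[1:-1] if len(t) >= 2 and t[0] == t[-1] == '"' else t  # strip surrounding quotes
        for t in _TOKEN.findall(line)
    ]
    return dict(zip(ALB_LOG_FIELDS, tokens))


def is_server_error(entry: Dict[str, str]) -> bool:
    """True when the load balancer returned a 5xx status to the client."""
    return entry.get("elb_status_code", "").startswith("5")
```

Filtering parsed entries with `is_server_error` is a quick way to find the requests behind a spike in 5xx errors.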

HEALTH CHECK ON LOAD BALANCERS

Health checks are a crucial aspect of load balancing in AWS. They help ensure that traffic is only directed to healthy instances or targets, thereby enhancing the reliability and performance of your applications. AWS load balancers support health checks to monitor the status of your targets.

TARGET GROUPS

Health checks in AWS load balancers are primarily associated with target groups. A target group is a logical grouping of instances or targets that receive traffic from a load balancer.

HEALTH CHECK CONFIGURATION

When you configure a target group, you can define health checks to monitor the health of its registered targets. This includes specifying the protocol, the port, and, for HTTP/HTTPS checks, the path to request.

PROTOCOL AND PORT

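The health check protocol is typically the same as the protocol used to route traffic to the targets (e.g., HTTP, HTTPS, TCP). The health check port is the port on which the target is expected to respond; by default it is the same port that receives traffic, but you can point health checks at a separate port.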

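HEALTH CHECK PATH

For HTTP/HTTPS health checks, you specify a path, which is the URL path the load balancer requests on each target (for example, a lightweight status endpoint). TCP health checks have no path: the load balancer only tries to open a TCP connection on the health check port, and the check passes if the connection succeeds.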

SUCCESS AND FAILURE CRITERIA

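You define the criteria for a successful health check: the expected HTTP response codes (200 by default for an ALB target group), the timeout within which the target must respond, and the healthy and unhealthy threshold counts, which are the number of consecutive successful or failed checks required before a target's state changes. A target that fails the unhealthy threshold count of consecutive checks is marked as unhealthy.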
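These success criteria and threshold counts can be modeled as a small state machine. In the Python sketch below, `check_passed` decides a single check and `TargetHealth` applies the consecutive-result thresholds. The default values mirror an ALB target group's documented defaults (success code 200, 5-second timeout, healthy threshold 5, unhealthy threshold 2), but the names are hypothetical and, as a simplification, new targets start out unhealthy.

```python
from dataclasses import dataclass, field


def check_passed(status_code: int, elapsed_s: float,
                 success_codes=frozenset({200}), timeout_s: float = 5.0) -> bool:
    """One health check passes if the target answered in time with an expected code."""
    return elapsed_s <= timeout_s and status_code in success_codes


@dataclass
class TargetHealth:
    healthy_threshold: int = 5    # consecutive passes needed to become healthy
    unhealthy_threshold: int = 2  # consecutive failures needed to become unhealthy
    healthy: bool = False         # simplification: new targets start unhealthy here
    _streak: int = field(default=0, init=False, repr=False)

    def record(self, passed: bool) -> bool:
        """Feed one check result and return the (possibly updated) state."""
        if passed == self.healthy:
            self._streak = 0  # result agrees with the current state; reset the counter
        else:
            self._streak += 1
            needed = self.healthy_threshold if passed else self.unhealthy_threshold
            if self._streak >= needed:
                self.healthy = passed
                self._streak = 0
        return self.healthy
```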

HEALTH CHECK FREQUENCY

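You can set the interval between health checks of each target (30 seconds by default for an ALB, configurable from 5 to 300 seconds). Together with the unhealthy threshold, the interval determines how quickly a failure is detected: with a 30-second interval and an unhealthy threshold of 2, a failing target is taken out of rotation after roughly one minute.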

AUTOMATIC TARGET REGISTRATION

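Health checks also work together with Auto Scaling. When an Auto Scaling group launches a new instance, it registers the instance with the target group automatically, and the load balancer starts sending it traffic once it passes the health checks. You can also configure the Auto Scaling group to use the load balancer's health checks, so that instances the load balancer reports as unhealthy are replaced.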

MONITORING AND ALERTS

AWS publishes health check results as Amazon CloudWatch metrics, such as HealthyHostCount and UnHealthyHostCount, so you can set up CloudWatch alarms and notifications when the number of healthy targets changes.

Health checks are a fundamental part of maintaining the high availability and reliability of applications in a load-balanced environment. They ensure that traffic is routed to healthy instances and automatically handle instances that may become unhealthy due to failures or issues. Properly configured health checks contribute to the overall performance and resiliency of your AWS applications.

LOAD BALANCERS PRICING

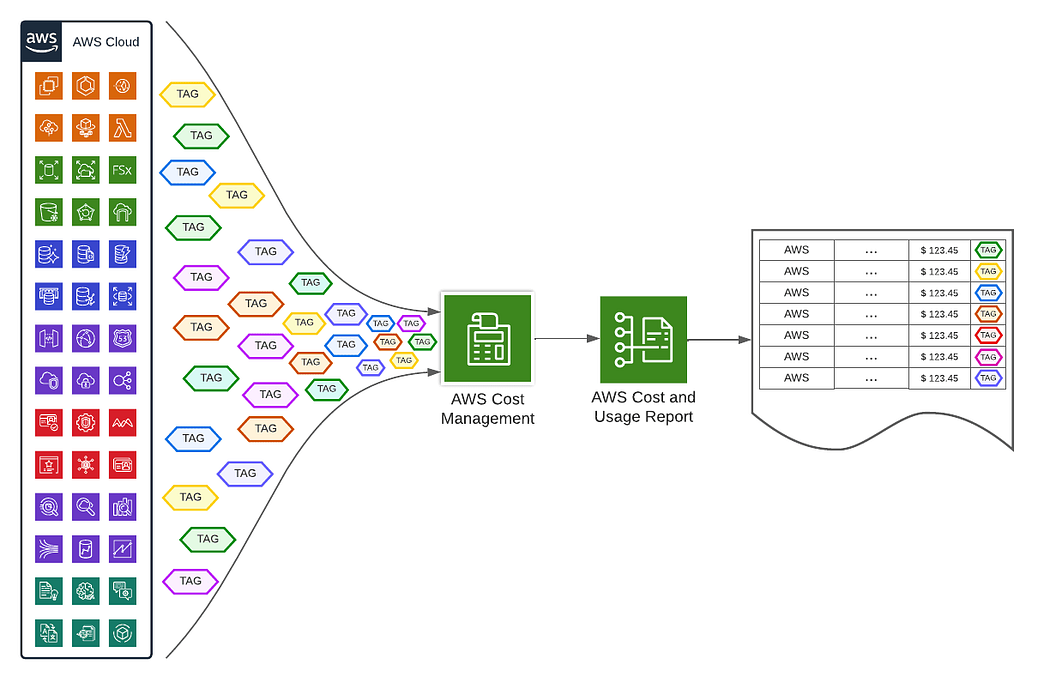
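ALB pricing combines an hourly charge with a charge per Load Balancer Capacity Unit (LCU), and each hour is billed on whichever of four usage dimensions is highest (NLB and GWLB use analogous NLCU and GLCU units). The Python sketch below computes LCUs from assumed traffic figures. The per-LCU capacities are the ones published on the ELB pricing page at the time of writing and may change; per-LCU prices, which vary by Region, are left out, and the function name is hypothetical.

```python
# Capacity of one ALB LCU per dimension, as listed on the ELB pricing page when
# this was written; treat these numbers as assumptions and check current pricing.
NEW_CONNECTIONS_PER_S = 25
ACTIVE_CONNECTIONS_PER_MIN = 3000
PROCESSED_GB_PER_HOUR = 1.0   # for EC2 instance, container, and IP targets
RULE_EVALUATIONS_PER_S = 1000
FREE_RULES = 10               # rule evaluations count only beyond the first 10 rules


def alb_lcus(new_conns_per_s, active_conns_per_min, processed_gb_per_hour,
             requests_per_s, rules_per_request):
    """Return (LCUs billed for the hour, dimension that set the number)."""
    rule_evals_per_s = requests_per_s * max(rules_per_request - FREE_RULES, 0)
    usage = {
        "new_connections": new_conns_per_s / NEW_CONNECTIONS_PER_S,
        "active_connections": active_conns_per_min / ACTIVE_CONNECTIONS_PER_MIN,
        "processed_bytes": processed_gb_per_hour / PROCESSED_GB_PER_HOUR,
        "rule_evaluations": rule_evals_per_s / RULE_EVALUATIONS_PER_S,
    }
    dominant = max(usage, key=usage.get)
    return usage[dominant], dominant


print(alb_lcus(50, 3000, 1.5, 100, 5))  # (2.0, 'new_connections')
```

In this example, 50 new connections per second (2 LCUs) outweigh 1.5 GB per hour of processed data (1.5 LCUs), so new connections determine the bill.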

COST OPTIMIZATION

To manage your ELB costs, start by choosing the right load balancer type for your infrastructure, which means understanding the key differences among the load balancers. ELB cost optimization also depends on knowing your actual usage: monitoring tools like AWS Cost Explorer and Amazon CloudWatch show what you spend and how much traffic each load balancer handles, so you can estimate costs against your resources and build an ELB budget plan. Because each load balancer is billed for every hour it runs, deleting idle load balancers is an easy saving.

CONCLUSION

AWS load balancers are the unsung heroes of cloud architecture, ensuring that your applications remain highly available, scalable, and reliable. Understanding their types, features, and use cases is fundamental for designing a robust cloud infrastructure on AWS. As you continue your journey in the cloud, AWS load balancers will be a vital tool in your toolbox, empowering your applications to thrive in the ever-changing digital landscape.