In the AI era, you may encounter a use case where you need to integrate machine learning into your backend. Integrating a machine learning model takes some configuration, so it is not surprising when people struggle to get one running inside a backend. In this article, I will share how to integrate a pre-trained machine learning model into a Node.js environment.

Prepare the project

The first step is to set up the project. In this article, I will use JavaScript and the Hapi framework.

Create a new project folder and open it in your text editor or IDE.

Initialize your project with `npm init -y`.

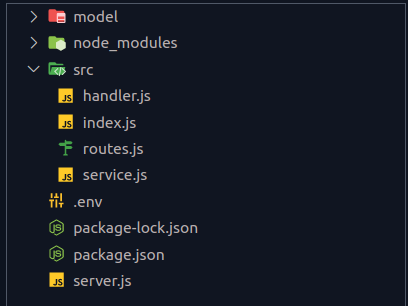

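Next, run `npm install @hapi/hapi` to install the latest version of Hapi. The server code below also reads the port from environment variables with dotenv, so install it as well with `npm install dotenv` and define a `PORT` value in a `.env` file at the project root. Once the installation succeeds, create the project structure: a `server.js` file at the root, and `index.js`, `routes.js`, `handler.js`, and `service.js` inside a `src` folder.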


Open your server.js file and write the code as shown below:

```javascript
const Hapi = require('@hapi/hapi');
require('dotenv').config();

const predict = require('./src');
const PredictService = require('./src/service');

const init = async () => {
  const predictService = new PredictService();

  const server = Hapi.server({
    port: process.env.PORT,
    host: 'localhost',
  });

  // Register the predict plugin and hand it the service instance
  await server.register([
    {
      plugin: predict,
      options: {
        service: predictService,
      },
    },
  ]);

  await server.start();
  console.log('Server running on', server.info.uri);
};

init();
```

`server.js` registers the Hapi plugin from the `src` folder (imported as `predict`) and passes it a `PredictService` instance, the service that runs the machine learning model.

Create a Hapi Plugin for the HTTP Server

Open your `src/index.js` file and write the code as shown below:

```javascript
const PredictHandler = require('./handler');
const routes = require('./routes');

module.exports = {
  name: 'predicts',
  version: '1.0.0',
  // Hapi passes the registration options ({ service }) as the second argument
  register: async (server, { service }) => {
    const handler = new PredictHandler(service);
    server.route(routes(handler));
  },
};
```

After that, open your `src/routes.js` file and write the code as shown below:

```javascript
const routes = (handler) => [
  {
    method: 'POST',
    path: '/predict',
    handler: handler.getPredictResult,
    options: {
      payload: {
        allow: 'multipart/form-data',
        multipart: true,
        // Expose uploaded files as readable streams
        output: 'stream',
      },
    },
  },
];

module.exports = routes;
```

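This defines a `POST /predict` route. Its payload options accept `multipart/form-data` and expose uploaded files as streams (`output: 'stream'`), so the handler can pass the image file straight to the service.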
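Hapi limits request payloads through the route's `payload.maxBytes` option, which defaults to 1,048,576 bytes (1 MB); larger uploads are rejected with a 413 error, and photos often exceed that size. A minimal sketch of the same route with a higher limit, assuming 5 MB is enough for your images (`MAX_IMAGE_BYTES` is a hypothetical name):

```javascript
// Hypothetical upload limit; adjust it to the size of the images you expect
const MAX_IMAGE_BYTES = 5 * 1024 * 1024;

const routes = (handler) => [
  {
    method: 'POST',
    path: '/predict',
    handler: handler.getPredictResult,
    options: {
      payload: {
        allow: 'multipart/form-data',
        multipart: true,
        output: 'stream',
        // Raise hapi's default 1 MB payload limit
        maxBytes: MAX_IMAGE_BYTES,
      },
    },
  },
];

module.exports = routes;
```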

Then open your `src/handler.js` file and write the code as shown below:

```javascript
class PredictHandler {
  constructor(service) {
    this._service = service;
    // Bind so `this` still points to the handler when hapi calls the method
    this.getPredictResult = this.getPredictResult.bind(this);
  }

  async getPredictResult(request, h) {
    const photo = request.payload;
    // The image is read from the multipart field named "file"
    const predict = await this._service.predictImage(photo.file);
    const { diseaseLabel, confidenceScore } = predict;

    return h.response({
      status: 'success',
      message: 'Predict success',
      data: {
        disease: diseaseLabel,
        confidenceScore,
      },
    });
  }
}

module.exports = PredictHandler;
```

The handler receives the service through its constructor, reads the uploaded image from the `file` field of the multipart payload, and returns a response containing the predicted disease label and its confidence score.
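If a client sends the image under a different field name, `photo.file` is `undefined`, the `for await` loop in the service throws a TypeError, and hapi answers with a generic 500 error. A minimal sketch of the handler that checks for the field first and returns a 400 response instead; the `fail` status and the error message are assumptions that simply mirror the shape of the success response:

```javascript
class PredictHandler {
  constructor(service) {
    this._service = service;
    this.getPredictResult = this.getPredictResult.bind(this);
  }

  async getPredictResult(request, h) {
    // The image is expected in a multipart field named "file"
    const file = request.payload ? request.payload.file : undefined;
    if (!file) {
      // Reject early instead of letting the service throw (which hapi turns into a 500)
      return h
        .response({ status: 'fail', message: 'Send the image in a form field named "file"' })
        .code(400);
    }

    const { diseaseLabel, confidenceScore } = await this._service.predictImage(file);
    return h.response({
      status: 'success',
      message: 'Predict success',
      data: { disease: diseaseLabel, confidenceScore },
    });
  }
}

module.exports = PredictHandler;
```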

The next step is to write the service, which is where we integrate the machine learning model.

Load The Machine Learning Model

I won't cover how to create a machine learning model here, so I assume you already have one. I use a pre-trained model that predicts skin diseases.

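I created the model with TensorFlow, so I will use TensorFlow.js to load it in Node.js. `tf.loadLayersModel` expects the TensorFlow.js Layers format (a `model.json` file plus binary weight files), which you can produce from a Keras model with the `tensorflowjs_converter` tool. To integrate the model into the REST API, you need to install one more dependency.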

To add the `@tensorflow/tfjs-node` dependency, run `npm install @tensorflow/tfjs-node`.

After installing the dependencies, we can continue to the next step.

Open your `src/service.js` file.

First, import the TensorFlow.js dependency we installed before:

```javascript
const tf = require('@tensorflow/tfjs-node');
```

After that, write a `PredictService` class with a `predictImage` method:

```javascript
class PredictService {
  async predictImage(photo) {}
}
```

Inside the `predictImage` method, write the code to load the machine learning model:

```javascript
const modelPath = 'file://model/model.json';
const model = await tf.loadLayersModel(modelPath);
```

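Save `model.json` (together with the weight files it references) in a folder named `model` at the project root; the `file://` path is resolved relative to the directory you start the server from. Next, process the image file by collecting its data into an array named `buffers`: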

```javascript
const buffers = [];
for await (const data of photo) {
  buffers.push(data);
}
const image = Buffer.concat(buffers);
```

The uploaded file arrives as a stream, so we read it chunk by chunk and then concatenate the chunks into a single Buffer named `image`.
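Because any Node.js Readable stream is async-iterable, the same collection step can be pulled into a small helper and checked without starting the server. A minimal sketch; `streamToBuffer` is a hypothetical helper name, and `Readable.from` only stands in for the uploaded file stream:

```javascript
const { Readable } = require('stream');

// Hypothetical helper: read every chunk of a readable stream into one Buffer
async function streamToBuffer(stream) {
  const chunks = [];
  for await (const chunk of stream) {
    // Chunks are normally Buffers; convert strings just in case
    chunks.push(Buffer.isBuffer(chunk) ? chunk : Buffer.from(chunk));
  }
  return Buffer.concat(chunks);
}

// Example: a stream of two chunks standing in for an uploaded file
streamToBuffer(Readable.from([Buffer.from('ab'), Buffer.from('cd')]))
  .then((buf) => console.log(buf.toString())); // prints abcd
```

In the service, `const image = await streamToBuffer(photo);` would replace the loop.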

Next, we can predict the image with the following code:

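```javascript
const tensor = tf.node
  // 3 = RGB; assumes the model was trained on 3-channel images (drops a PNG alpha channel)
  .decodeImage(image, 3)
  .resizeNearestNeighbor([224, 224])
  .expandDims()
  .toFloat();

// model.predict() is synchronous and returns a tensor
const predict = model.predict(tensor);
const score = await predict.data();
const confidenceScore = Math.max(...score);
const label = tf.argMax(predict, 1).dataSync()[0];
```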

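`tf.node.decodeImage` decodes the image bytes into a tensor, `resizeNearestNeighbor` resizes it to the 224x224 input size the model expects, `expandDims` adds a batch dimension, and `toFloat` casts the pixel values to floats. `model.predict` then runs the pre-trained model on this input tensor.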

`score` holds the predicted values, read from the output tensor with the asynchronous `data()` method. Each value represents the model's confidence in one class.

`confidenceScore` is the largest value in that array of scores.

`label` uses `tf.argMax` along axis 1 (the class axis) to find the index of the highest score, which is the predicted class.
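To see what these lines compute, here is the same post-processing in plain JavaScript. `predict.data()` returns a flat typed array, so for a batch of one image it holds one score per class. `pickTopClass` is a hypothetical helper name, and the scores below are made-up values, not output from the real model:

```javascript
// Hypothetical helper: return the index and value of the highest score,
// mirroring tf.argMax(predict, 1) and Math.max(...score) for a single image
function pickTopClass(scores) {
  let index = 0;
  for (let i = 1; i < scores.length; i += 1) {
    if (scores[i] > scores[index]) {
      index = i;
    }
  }
  return { label: index, confidenceScore: scores[index] };
}

// Made-up scores for three classes
const scores = Float32Array.from([0.1, 0.7, 0.2]);
console.log(pickTopClass(scores).label); // prints 1
```

The scores read as confidences only if the model's last layer outputs probabilities (for example, a softmax layer); with raw logits, the maximum still identifies the top class, but its value is not a probability.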

In this case, the model has three classes, so write an array of class names in the same order as the model's outputs:

```javascript
const diseaseLabels = ['Melanocytic nevus', 'Squamous cell carcinoma', 'Vascular lesion'];
const diseaseLabel = diseaseLabels[label];

return { confidenceScore, diseaseLabel };
```

`diseaseLabel` holds the name of the predicted class. For example, if `label` is 1, the disease label is ‘Squamous cell carcinoma’.

Finally, return the confidence score and the disease label.
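Because the label array is indexed by the model's output position, its length and order have to match the classes the model was trained on. A minimal sketch of the lookup with a guard against a mismatch; `toDiseaseLabel` is a hypothetical helper name:

```javascript
const diseaseLabels = ['Melanocytic nevus', 'Squamous cell carcinoma', 'Vascular lesion'];

// Hypothetical helper: map a class index from argMax to its disease name
function toDiseaseLabel(index, labels = diseaseLabels) {
  if (!Number.isInteger(index) || index < 0 || index >= labels.length) {
    // An out-of-range index usually means the label list and the model disagree
    throw new RangeError(`No label for class index ${index}`);
  }
  return labels[index];
}

console.log(toDiseaseLabel(1)); // prints Squamous cell carcinoma
```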

Here is the full code of service.js.

```javascript
const tf = require('@tensorflow/tfjs-node');

class PredictService {
  async predictImage(photo) {
    // Resolved relative to the directory the server is started from
    const modelPath = 'file://model/model.json';
    const model = await tf.loadLayersModel(modelPath);

    // Collect the uploaded file stream into a single Buffer
    const buffers = [];
    for await (const data of photo) {
      buffers.push(data);
    }
    const image = Buffer.concat(buffers);

    // Decode to an RGB tensor (assumes a 3-channel model), resize to 224x224, add a batch dimension
    const tensor = tf.node
      .decodeImage(image, 3)
      .resizeNearestNeighbor([224, 224])
      .expandDims()
      .toFloat();

    const predict = model.predict(tensor);
    const score = await predict.data();
    const confidenceScore = Math.max(...score);
    const label = tf.argMax(predict, 1).dataSync()[0];

    // Class names in the same order as the model's outputs
    const diseaseLabels = ['Melanocytic nevus', 'Squamous cell carcinoma', 'Vascular lesion'];
    const diseaseLabel = diseaseLabels[label];

    return { confidenceScore, diseaseLabel };
  }
}

module.exports = PredictService;
```
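As written, `predictImage` calls `tf.loadLayersModel` on every request, which re-reads the model files and creates a new copy of the weights each time. A common alternative is to start loading the model once and reuse the same promise for every request. A minimal sketch of that pattern, kept independent of TensorFlow.js so it runs on its own; `createModelLoader` is a hypothetical helper, and the commented lines show how it might be wired into the service:

```javascript
// Hypothetical helper: run an async loader once and share its promise
function createModelLoader(load) {
  let modelPromise = null;
  return function getModel() {
    if (!modelPromise) {
      modelPromise = load().catch((err) => {
        // Forget a failed load so the next call can retry
        modelPromise = null;
        throw err;
      });
    }
    return modelPromise;
  };
}

// Sketch of use in service.js:
// const getModel = createModelLoader(() => tf.loadLayersModel('file://model/model.json'));
// ...inside predictImage:
// const model = await getModel();

module.exports = { createModelLoader };
```

Sharing the promise (rather than the loaded model) means concurrent requests that arrive while the model is still loading all wait on the same load instead of starting their own.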

Testing

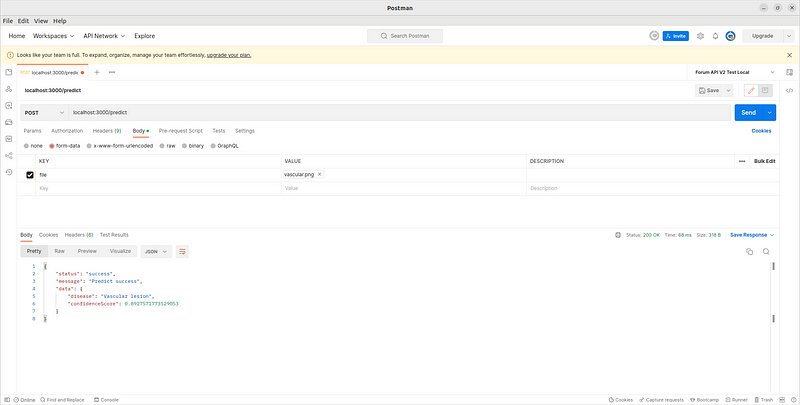

The last step is to test the REST API. You can use Postman, Katalon, or a similar tool.

Send a `POST` request to the `/predict` endpoint with the image in a `multipart/form-data` field named `file`, and check that the response contains the confidence score and the disease name.
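Instead of a GUI client, you can also send the request from a short Node.js script. A minimal sketch assuming Node.js 18 or later (for the built-in `fetch`, `FormData`, and `Blob`), a server listening on `http://localhost:3000` (adjust it to the `PORT` in your `.env`), and a local image file; the URL and the `buildPredictForm` and `requestPrediction` helpers are hypothetical:

```javascript
const fs = require('fs/promises');
const path = require('path');

// Hypothetical helper: wrap raw image bytes in a multipart form under the "file" field
function buildPredictForm(imageBuffer, fileName) {
  const form = new FormData();
  form.append('file', new Blob([imageBuffer]), fileName);
  return form;
}

// Hypothetical helper: POST the image to /predict and return the parsed JSON body
async function requestPrediction(imagePath, url = 'http://localhost:3000/predict') {
  const imageBuffer = await fs.readFile(imagePath);
  // fetch derives the multipart Content-Type header (with boundary) from the FormData body
  const response = await fetch(url, {
    method: 'POST',
    body: buildPredictForm(imageBuffer, path.basename(imagePath)),
  });
  return response.json();
}

// Example usage (requires the server to be running):
// requestPrediction('photo.jpg').then((result) => console.log(result.data));
```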

Summary

We integrated a pre-trained machine learning model into a REST API built with Hapi, using TensorFlow.js to load the model and run predictions on uploaded images.