AWS Simple Storage Service (S3)

S3 is a highly available and durable storage service offered by AWS. It is fully managed and supports various use cases. It uses a flat structure rather than a hierarchy of nested folders like a file system.

Before learning the S3 commands, these are some crucial terms you need to know:

- Bucket: A top-level S3 container that stores objects

- Object: Any individual item, such as a file or an image, that is stored in an S3 bucket

- Prefix: An S3 folder nested within a bucket, separated using delimiters

- Delimiter: A character that causes a list operation to roll up all the keys that share a common prefix into a single summary list result. It is usually a forward slash ‘/’.

- Folder: Used to group objects for organizational simplicity

- Path Argument Type: At least one path argument must be specified per command. There are two types of path arguments: LocalPath and S3Uri.

- **LocalPath**: It represents the path of a local file or directory. It can be written as an absolute path or a relative path.

- S3Uri: It represents the location of an S3 object, prefix, or bucket. It must be written in the form s3://BucketName/KeyName, where BucketName is the S3 bucket and KeyName is the S3 key. For an object with a prefix, the S3 key would be PrefixName/ObjectName, so its S3Uri would be s3://BucketName/PrefixName/ObjectName. S3Uri also supports S3 access points: to specify one, use the form s3://AccessPointArn/KeyName, where AccessPointArn is the ARN of the access point.

- Order of Path Arguments: Each command takes one or two positional path arguments. The first path argument represents the source, which is the local file/directory or S3 object/prefix/bucket being referenced. If there is a second path argument, it represents the destination, which is the local file/directory or S3 object/prefix/bucket being operated on. Commands with only one path argument do not have a destination because the operation is performed only on the source.

- **Single Local File and S3 Object Operations**: Some commands operate only on single files and S3 objects. For these commands, the first path argument must be a local file or S3 object, while the second path argument can be the name of a local file, local directory, S3 object, S3 prefix, or S3 bucket. The commands cp, mv, and rm are single-file operations when the --recursive flag is not provided. The destination is treated as a local directory, S3 prefix, or S3 bucket if it ends with a forward slash or backslash. The type of slash depends on the path argument type: for a LocalPath, it is the separator used by the operating system; for an S3Uri, a forward slash must always be used. If the destination ends with a slash, the destination file or object adopts the name of the source file or object.

- Directory and S3 Prefix Operations: Some commands operate only on the contents of a local directory or S3 prefix/bucket. Adding or omitting a forward slash or backslash at the end of any path argument does not affect the results of the operation. The following commands always result in a directory or S3 prefix/bucket operation: sync, mb, rb, ls.

-

Use of Wildcards and Filters — S3 Commands can support — exclude

and — include parameters. The following wildcards are supported -

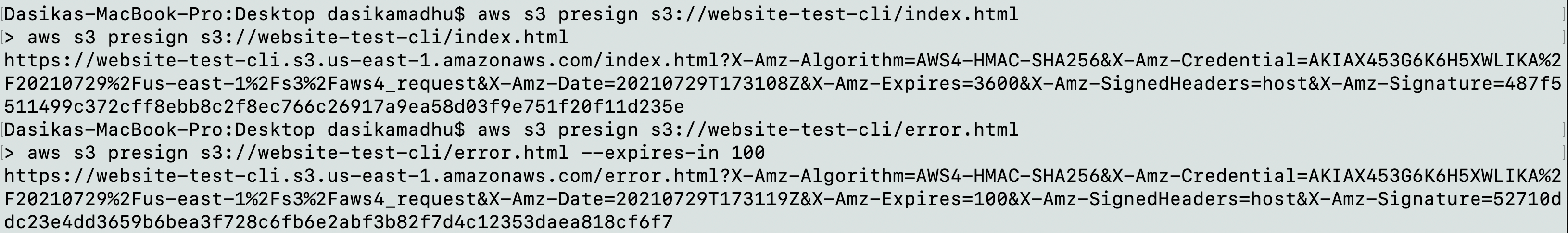

**Presigned URL **— By using an S3 presigned URL for an S3 file, anyone with this URL can retrieve the S3 file with an HTTP GET request. The output of the command is the URL which will be valid by default for 3600 seconds (1 hour).

*: Matches everything ?: Matches any single character [sequence]: Matches any character in a sequence [!sequence]: Matches any character not in sequenceThe difference between a prefix and folder — A prefix is a complete path in front of the object name including the bucket name. If an object is stored as BucketName/FolderName/ObjectName, the prefix is ‘BucketName/FolderName/’. If the object is saved in a bucket without a specified path, the prefix value is “BucketName/”. A folder is a value between the two ‘/’ characters. If a file is stored as BucketName/FolderName/SubfolderName/ObjectName, both “FolderName” and “SubfolderName” are considered to be folders. If the object is saved in a bucket without a specified path, then no folders are used to store the file.
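To make the S3Uri form and the prefix/folder vocabulary concrete, here is a minimal shell sketch that splits an S3Uri into its parts. The function name parse_s3_uri is a hypothetical helper, not an AWS CLI command, and key_prefix here is only the part of the key in front of the object name; the definition above additionally puts the bucket name in front of it.

```shell
# Split an S3Uri of the form s3://BucketName/KeyName into its parts.
# parse_s3_uri is a hypothetical helper, not part of the AWS CLI.
parse_s3_uri() {
  local uri="$1"
  case "$uri" in
    s3://?*) ;;                                   # looks like an S3Uri
    *) echo "not an S3Uri: $uri" >&2; return 1 ;;
  esac
  local rest="${uri#s3://}"      # drop the scheme
  local bucket="${rest%%/*}"     # text before the first "/"
  local key=""
  if [ "$rest" != "$bucket" ]; then
    key="${rest#*/}"             # text after the first "/"
  fi
  local key_prefix=""
  case "$key" in
    */*) key_prefix="${key%/*}/" ;;   # up to and including the last "/"
  esac
  local object="${key##*/}"      # text after the last "/"
  printf 'bucket=%s\nkey=%s\nkey_prefix=%s\nobject=%s\n' \
    "$bucket" "$key" "$key_prefix" "$object"
}

parse_s3_uri "s3://BucketName/FolderName/SubfolderName/ObjectName"
# bucket=BucketName
# key=FolderName/SubfolderName/ObjectName
# key_prefix=FolderName/SubfolderName/
# object=ObjectName
```

In this example FolderName and SubfolderName are the folders, since each sits between two ‘/’ characters.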

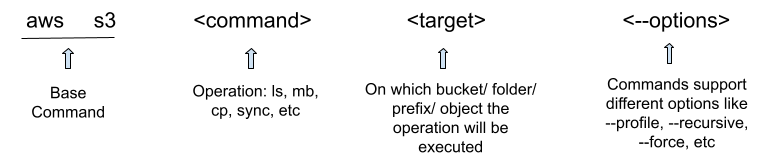
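Coming back to the --exclude and --include filters: the AWS CLI documentation states that when several filters are given, the ones that appear later in the command take precedence over earlier ones, and that everything is included by default. The sketch below models that rule in plain shell. The function should_transfer is a hypothetical helper, and shell case patterns only approximate the CLI's own matcher.

```shell
# Decide whether a key survives a chain of --exclude/--include filters.
# should_transfer is a hypothetical helper; shell "case" patterns stand in
# for the CLI's own pattern matcher, which this sketch only approximates.
should_transfer() {
  local key="$1"; shift
  local verdict="include"        # with no filters, everything is included
  while [ "$#" -ge 2 ]; do
    local flag="$1" pattern="$2"; shift 2
    # $pattern is unquoted on purpose so *, ?, [seq] and [!seq] act as wildcards
    case "$key" in
      $pattern)
        case "$flag" in
          --exclude) verdict="exclude" ;;
          --include) verdict="include" ;;
        esac ;;
    esac
  done
  echo "$verdict"
}

should_transfer "notes.txt" --exclude "*" --include "*.txt"   # prints: include
should_transfer "photo.png" --exclude "*" --include "*.txt"   # prints: exclude
should_transfer "logA.txt"  --exclude "log[!0-9].txt"         # prints: exclude
```

The same filter chain on a real command would look like aws s3 cp . s3://madhu-cli-test-bucket/ --recursive --exclude "*" --include "*.txt", which uploads only the .txt files.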

Command Syntax

S3 CLI Command Syntax


Prerequisites

Ensure that you have downloaded and configured the AWS CLI before attempting to execute any of the following commands. To learn how, see my generic AWS CLI Commands blog.

High-level Commands

High-level commands are used to simplify performing common tasks, such as creating, updating, and deleting objects and buckets. They include cp, mb, mv, ls, rb, rm and sync.

-

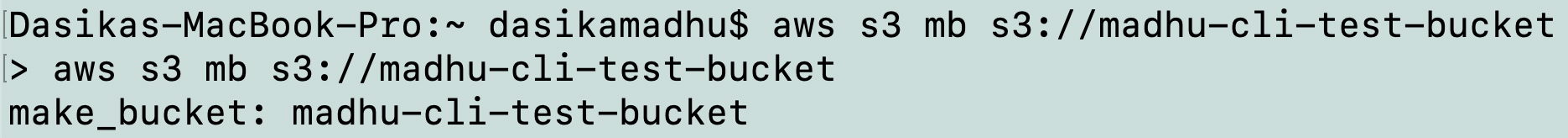

Create a bucket in the default region. It returns the bucket name as the query output.

$ aws s3 mb s3://madhu-cli-test-bucket

make_bucket: madhu-cli-test-bucket

create a bucket in the default region

create a bucket in the default region

-

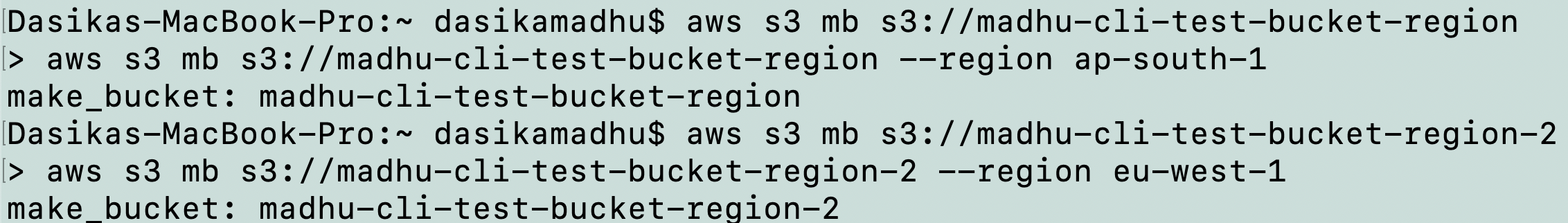

Create a bucket in a specific region. It returns the bucket name as the query output.

$ aws s3 mb s3://madhu-cli-test-bucket-region

aws s3 mb s3://madhu-cli-test-bucket-region --region ap-south-1

make_bucket: madhu-cli-test-bucket-region

$ aws s3 mb s3://madhu-cli-test-bucket-region-2

aws s3 mb s3://madhu-cli-test-bucket-region-2 --region eu-west-1

make_bucket: madhu-cli-test-bucket-region-2

create a bucket in a specific region

create a bucket in a specific region

-

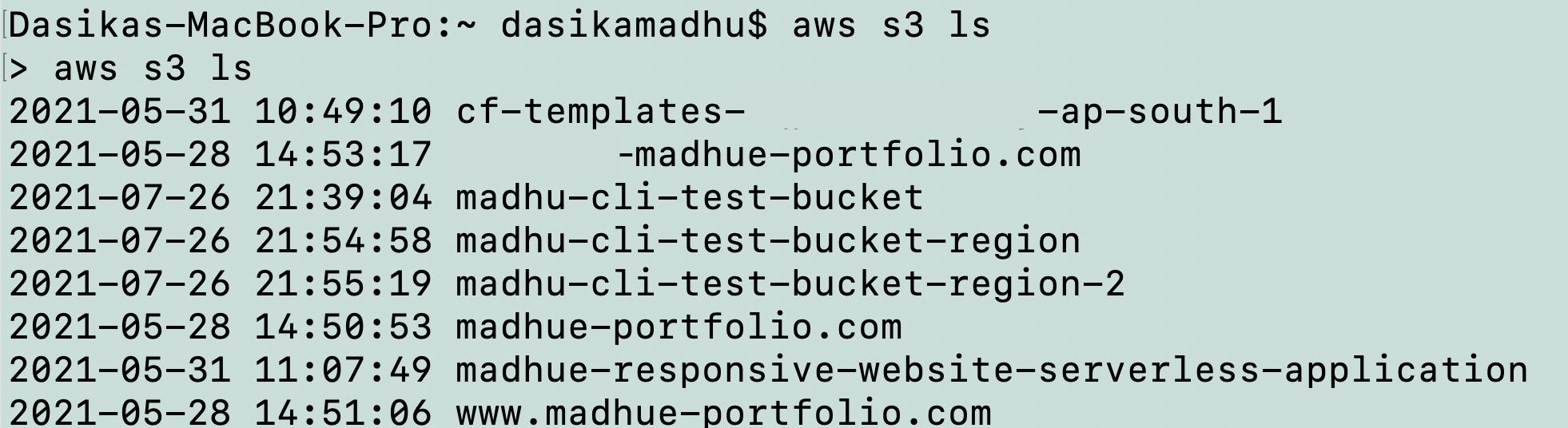

List all your buckets. It returns all the buckets in your AWS account.

$ aws s3 ls

list buckets

list buckets

-

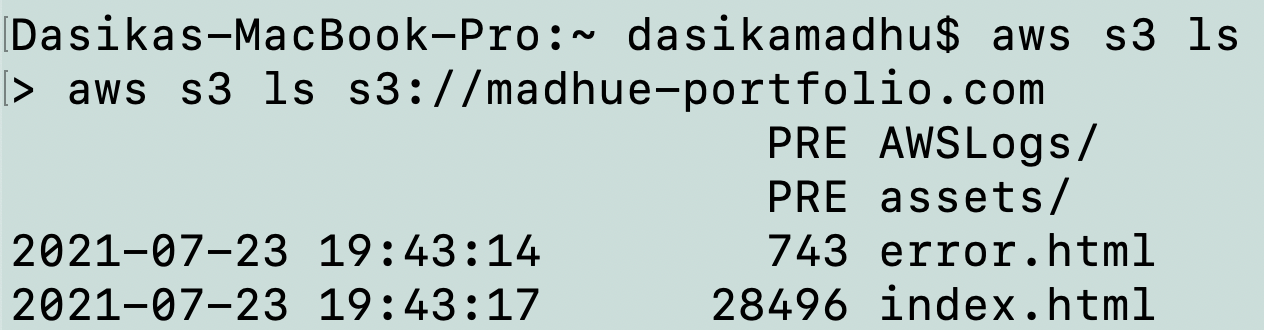

List the objects in a specific bucket and folder. It returns all the objects along with their date and time of creation, size and name. Prefixes (folders) are represented by “PRE” and do not return the date or time.

$ aws s3 ls

aws s3 ls s3://madhue-portfolio.com

$ aws s3 ls

aws s3 ls s3://madhue-portfolio.com/assets/

list objects in a specific bucket

list objects in a specific bucket

list objects in a specific folder within a bucket

list objects in a specific folder within a bucket
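Because prefixes are marked with “PRE” in the listing, they are easy to pick out in a script. The following is a small sketch that assumes the listing layout described above; list_prefixes is a hypothetical helper name and the sample lines are made up for illustration.

```shell
# Print only the prefixes ("folders") from `aws s3 ls` output read on stdin.
# list_prefixes is a hypothetical helper name.
list_prefixes() {
  awk '$1 == "PRE" { sub(/^ *PRE /, ""); print }'
}

# Made-up sample listing, for illustration only. Against a real bucket:
#   aws s3 ls s3://madhue-portfolio.com | list_prefixes
printf '%s\n' \
  '                           PRE assets/' \
  '                           PRE images/' \
  '2021-01-01 10:00:00       1024 index.html' | list_prefixes
# assets/
# images/
```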

-

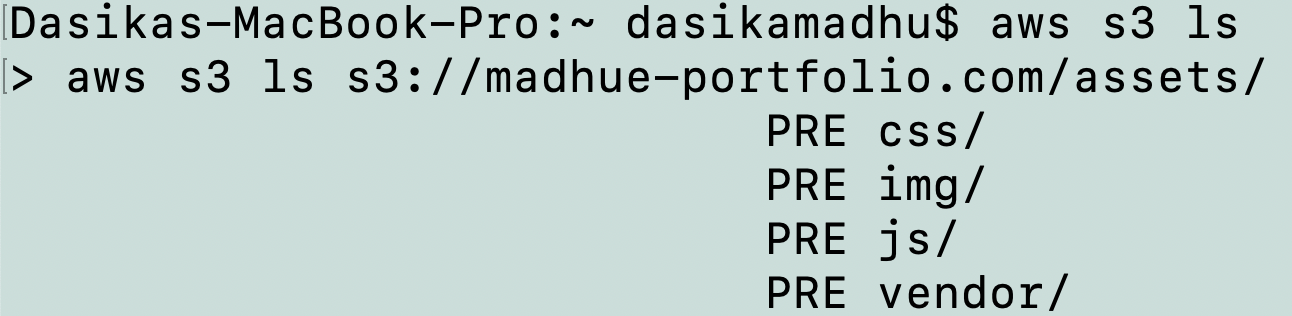

Recursively list all the objects in all the prefixes of the bucket.

$ aws s3 ls s3://madhue-responsive-website-serverless-application

aws s3 ls s3://madhue-responsive-website-serverless-application --recursive

recursively list all the objects within prefixes

recursively list all the objects within prefixes

-

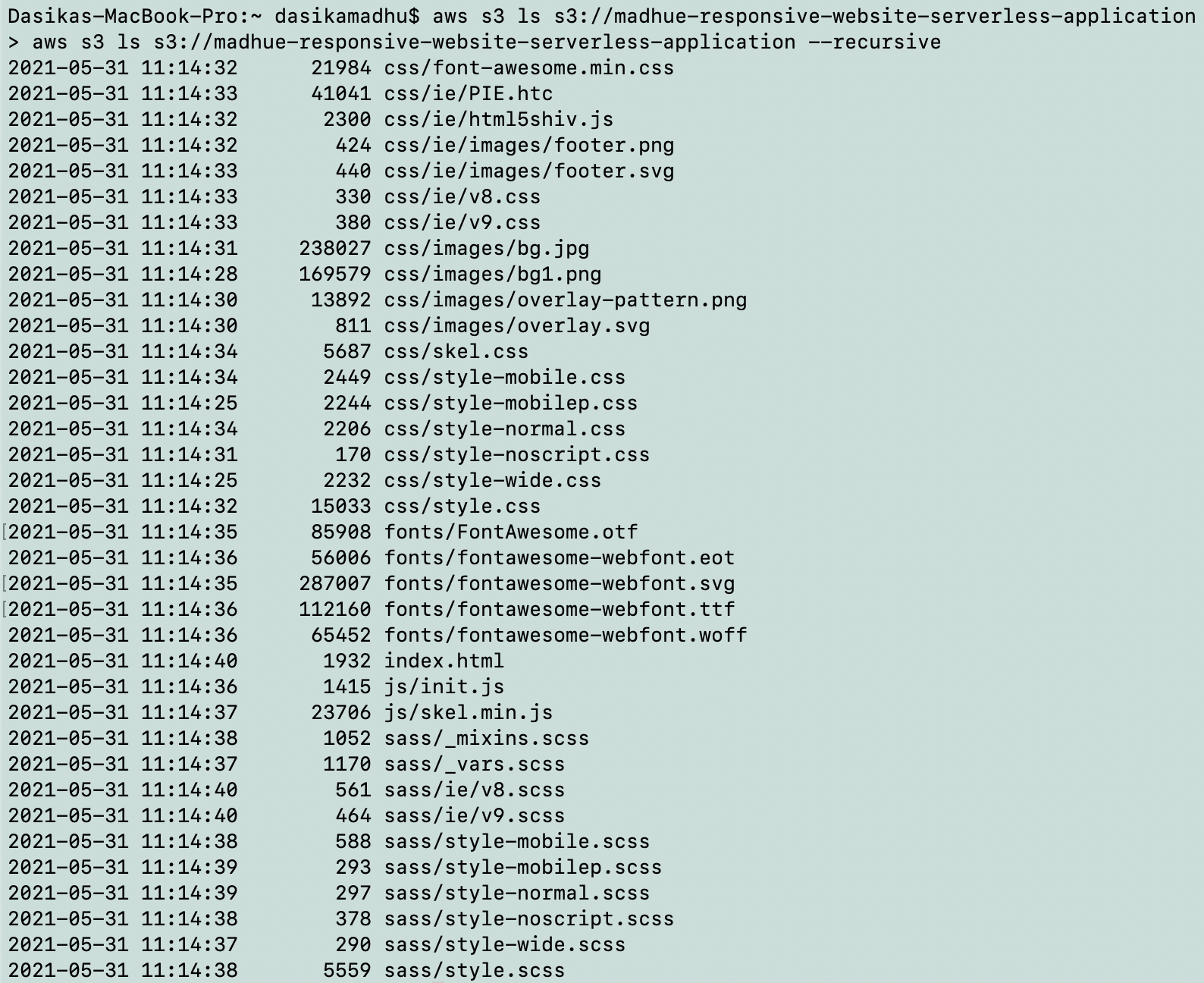

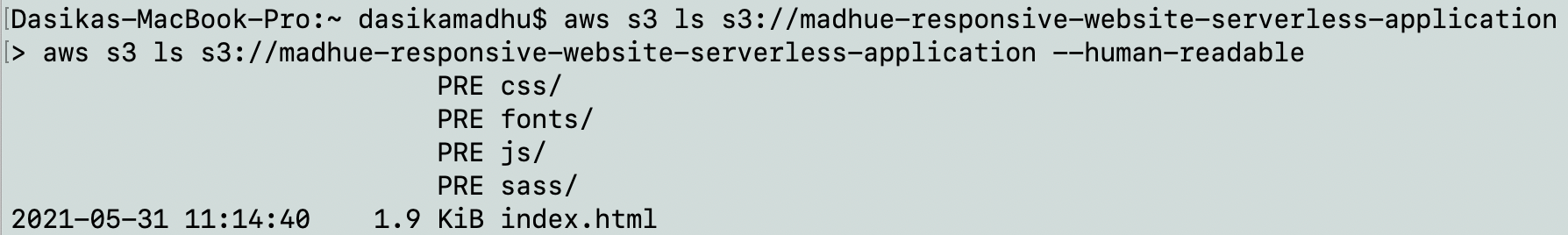

Retrieve bucket data in a human-readable format recursively. It displays all the file sizes in a human-readable format. Can be retrieved recursively and non-recursively.

$ aws s3 ls s3://madhue-responsive-website-serverless-application

aws s3 ls s3://madhue-responsive-website-serverless-application --recursive --human-readable

$ aws s3 ls s3://madhue-responsive-website-serverless-application

aws s3 ls s3://madhue-responsive-website-serverless-application --human-readable

recursive retrieval

recursive retrieval

non-recursive retrieval

non-recursive retrieval

-

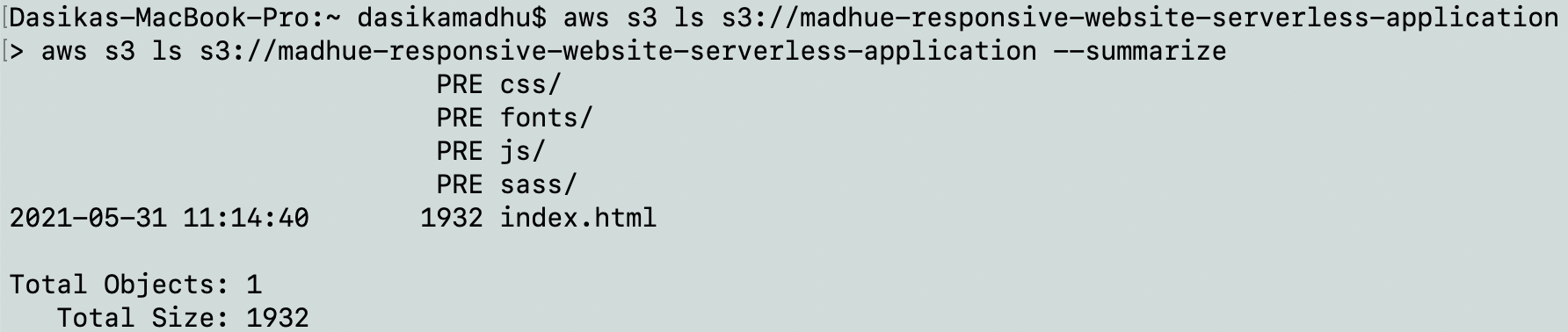

Display the summary information including the number of objects and total size.

$ aws s3 ls s3://madhue-responsive-website-serverless-application

aws s3 ls s3://madhue-responsive-website-serverless-application --summarize

summary of objects retrieval

summary of objects retrieval
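To show what the summary amounts to, this sketch recomputes an object count and a total size in bytes from recursive listing output. It assumes each line has the form date, time, size, key; summarize_listing is a hypothetical helper, the sample lines are made up, and the output format is this sketch's own rather than a copy of the CLI's.

```shell
# Count objects and add up their sizes from `aws s3 ls --recursive` output
# read on stdin. summarize_listing is a hypothetical helper name.
summarize_listing() {
  awk 'NF >= 4 { count++; bytes += $3 }
       END { printf "objects=%d\nbytes=%d\n", count, bytes }'
}

# Made-up sample listing, for illustration only. Against a real bucket:
#   aws s3 ls s3://madhue-responsive-website-serverless-application --recursive | summarize_listing
printf '%s\n' \
  '2021-01-01 10:00:00       1024 index.html' \
  '2021-01-01 10:00:05       2048 css/style.css' \
  '2021-01-02 09:30:00        512 assets/logo.png' | summarize_listing
# objects=3
# bytes=3584
```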

-

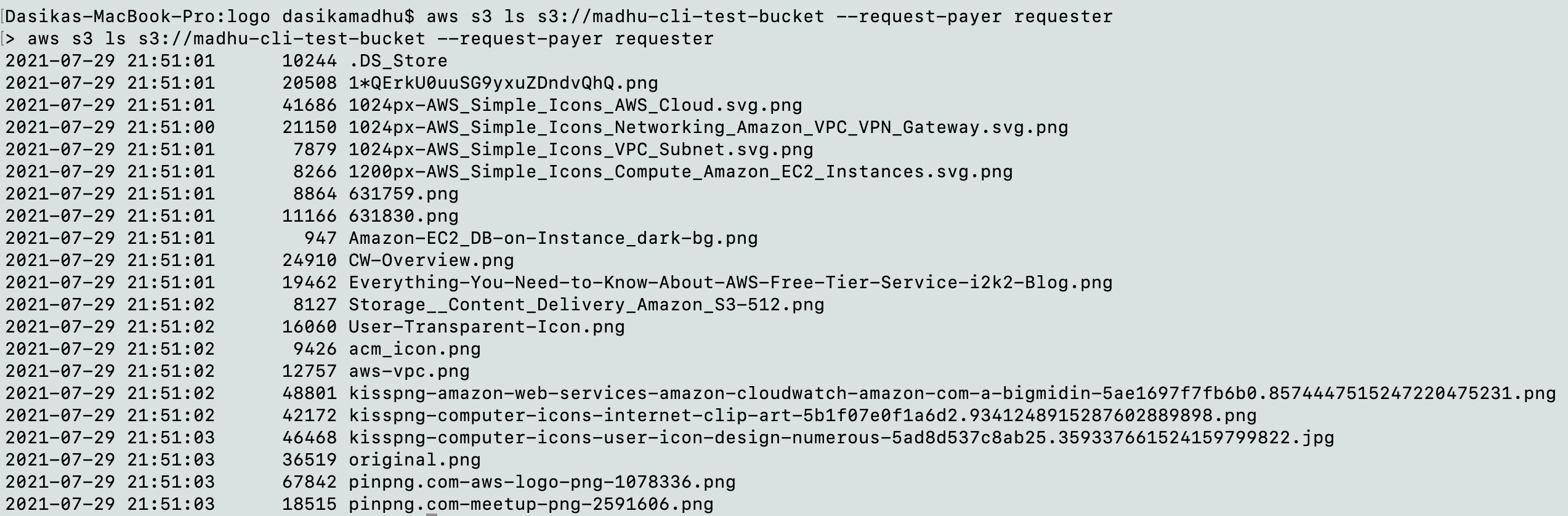

Request the requester pays if a specific bucket is configured as requester pays buckets

$ aws s3 ls s3://madhu-cli-test-bucket --request-payer requester

aws s3 ls s3://madhu-cli-test-bucket --request-payer requester

retrieving requester pays

retrieving requester pays

-

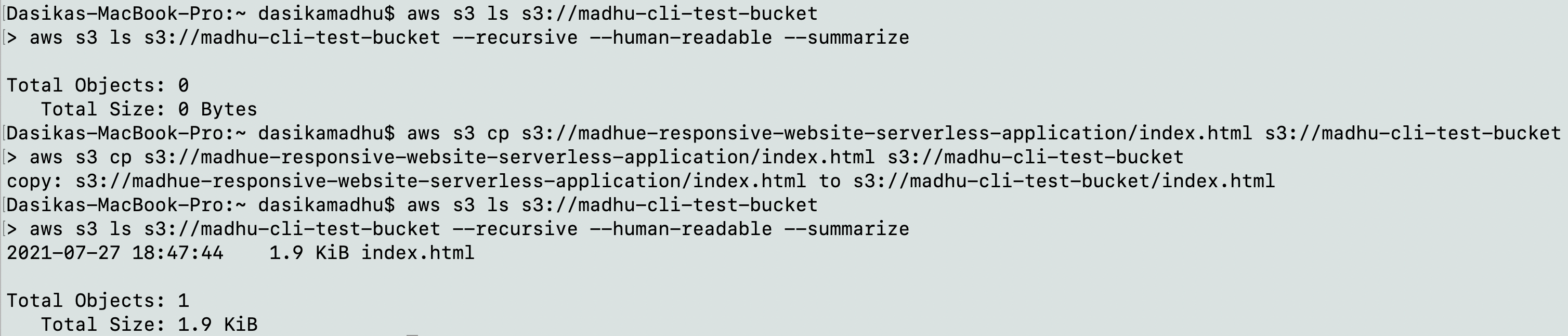

Copy objects from a bucket or a local directory.

$ aws s3 cp s3://madhue-responsive-website-serverless-application/index.html s3://madhu-cli-test-bucket

aws s3 cp s3://madhue-responsive-website-serverless-application/index.html s3://madhu-cli-test-bucket

copy: s3://madhue-responsive-website-serverless-application/index.html to s3://madhu-cli-test-bucket/index.html

copying objects from one bucket to another

copying objects from one bucket to another

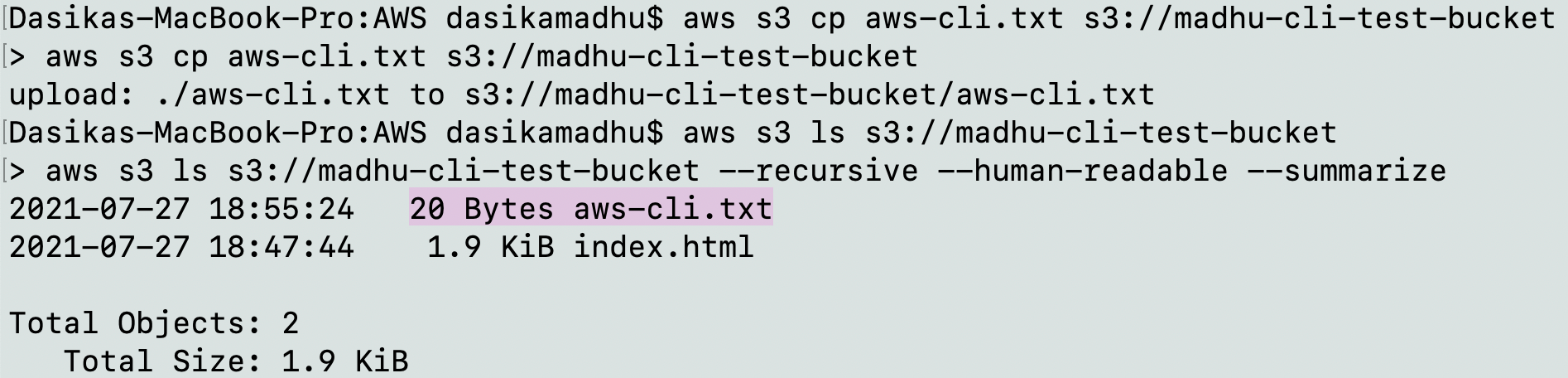

$ aws s3 cp aws-cli.txt s3://madhu-cli-test-bucket

> aws s3 cp aws-cli.txt s3://madhu-cli-test-bucket

upload: ./aws-cli.txt to s3://madhu-cli-test-bucket/aws-cli.txt

copy object from local directory to bucket

copy object from local directory to bucket

-

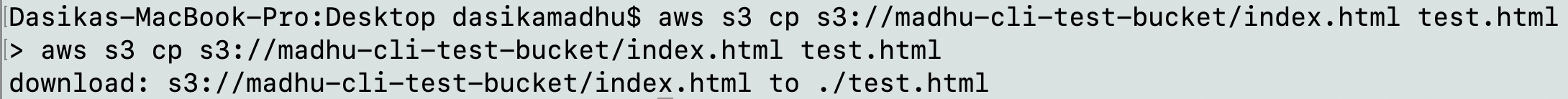

Download objects in buckets to a local directory.

$ cd Desktop

$ aws s3 cp s3://madhu-cli-test-bucket/index.html test.html

aws s3 cp s3://madhu-cli-test-bucket/index.html test.html

download object from bucket to a local directory

download object from bucket to a local directory

-

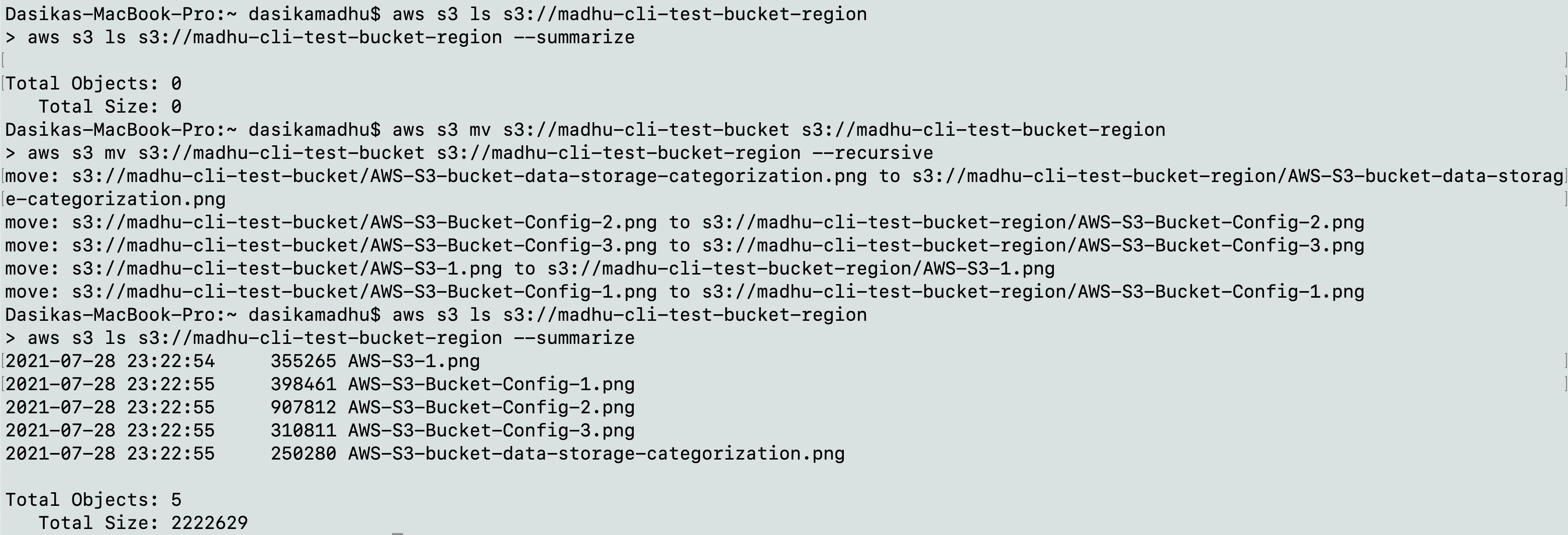

Move all objects from one bucket to another recursively.

$ aws s3 mv s3://madhu-cli-test-bucket s3://madhu-cli-test-bucket-region

aws s3 mv s3://madhu-cli-test-bucket s3://madhu-cli-test-bucket-region --recursive

move: s3://madhu-cli-test-bucket/AWS-S3-bucket-data-storage-categorization.png to s3://madhu-cli-test-bucket-region/AWS-S3-bucket-data-storage-categorization.png

move: s3://madhu-cli-test-bucket/AWS-S3-Bucket-Config-2.png to s3://madhu-cli-test-bucket-region/AWS-S3-Bucket-Config-2.png

move: s3://madhu-cli-test-bucket/AWS-S3-Bucket-Config-3.png to s3://madhu-cli-test-bucket-region/AWS-S3-Bucket-Config-3.png

move: s3://madhu-cli-test-bucket/AWS-S3-1.png to s3://madhu-cli-test-bucket-region/AWS-S3-1.png

move: s3://madhu-cli-test-bucket/AWS-S3-Bucket-Config-1.png to s3://madhu-cli-test-bucket-region/AWS-S3-Bucket-Config-1.png

recursively copying objects in one bucket to another

recursively copying objects in one bucket to another

-

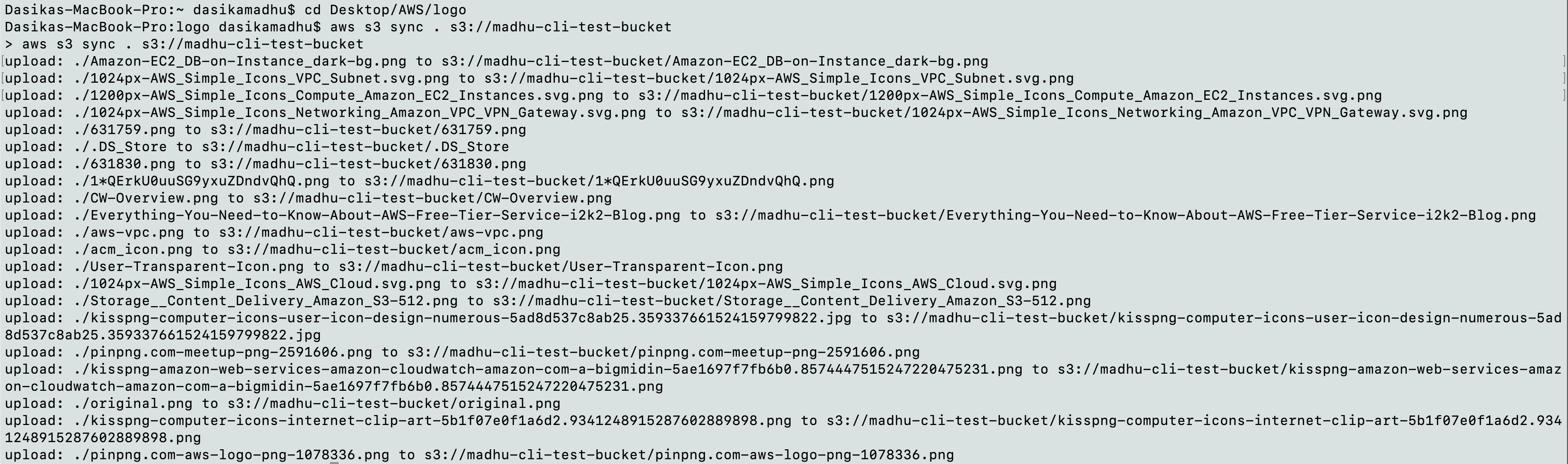

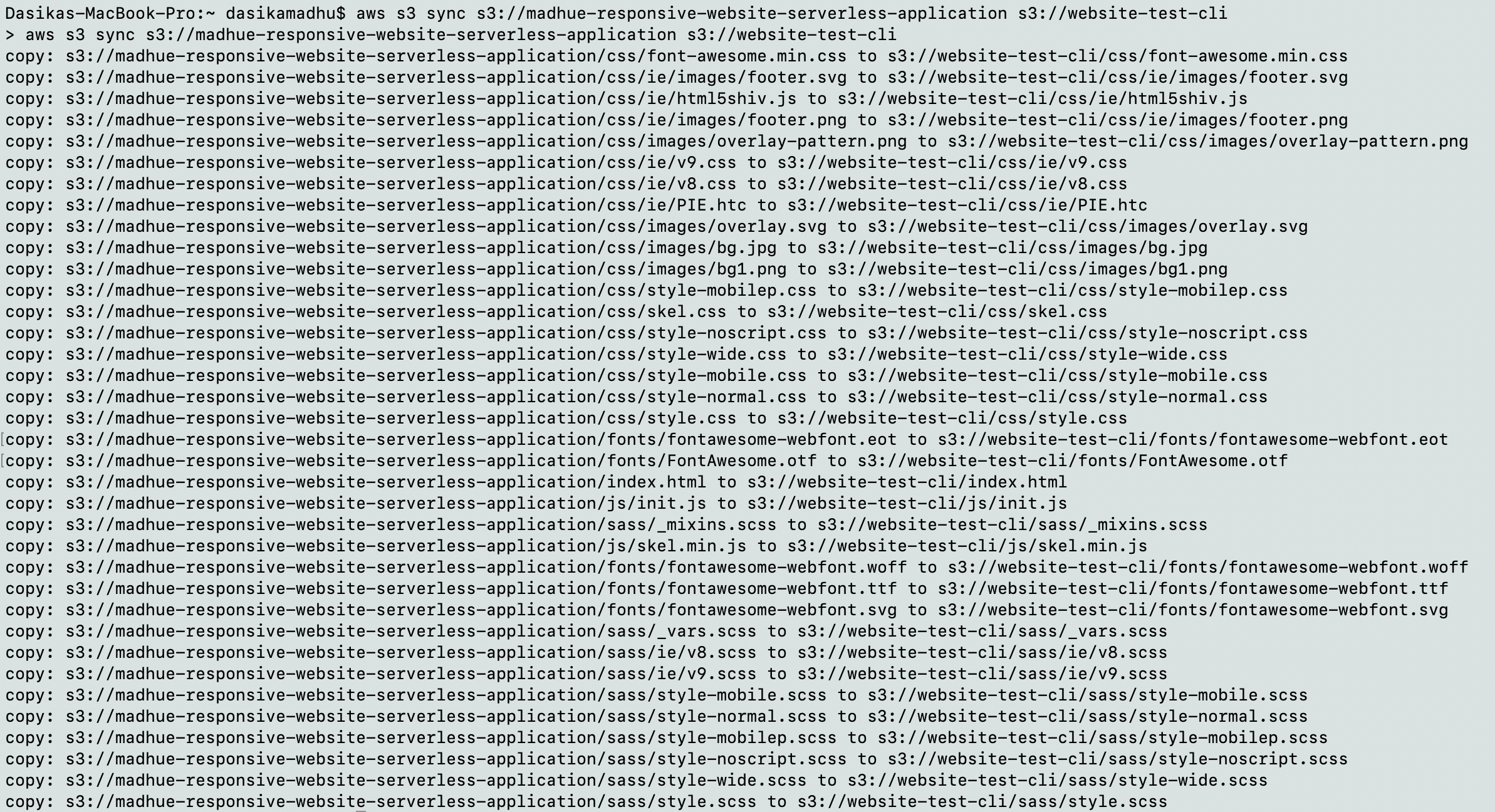

Synchronize the contents of a bucket and a (local) directory, or the contents of two buckets.

$ cd Desktop/

$ aws s3 sync . s3://madhu-cli-test-bucket

aws s3 sync . s3://madhu-cli-test-bucket

upload: ./

. to s3://madhu-cli-test-bucket/ .

synchronize local directory to bucket

synchronize local directory to bucket

$ aws s3 sync s3://madhue-responsive-website-serverless-application s3://website-test-cli

> aws s3 sync s3://madhue-responsive-website-serverless-application s3://website-test-cli

copy: s3://madhue-responsive-website-serverless-application/css/font-awesome.min.css to s3://website-test-cli/css/font-awesome.min.css

synchronize two buckets in your account

synchronize two buckets in your account

-

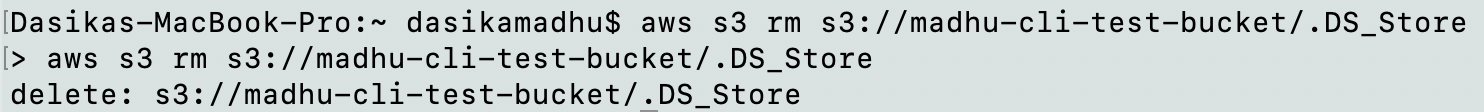

Remove a single object from a bucket.

$ aws s3 rm s3://madhu-cli-test-bucket/.DS_Store

aws s3 rm s3://madhu-cli-test-bucket/.DS_Store

delete: s3://madhu-cli-test-bucket/.DS_Store

remover a single object from a bucket

remover a single object from a bucket

-

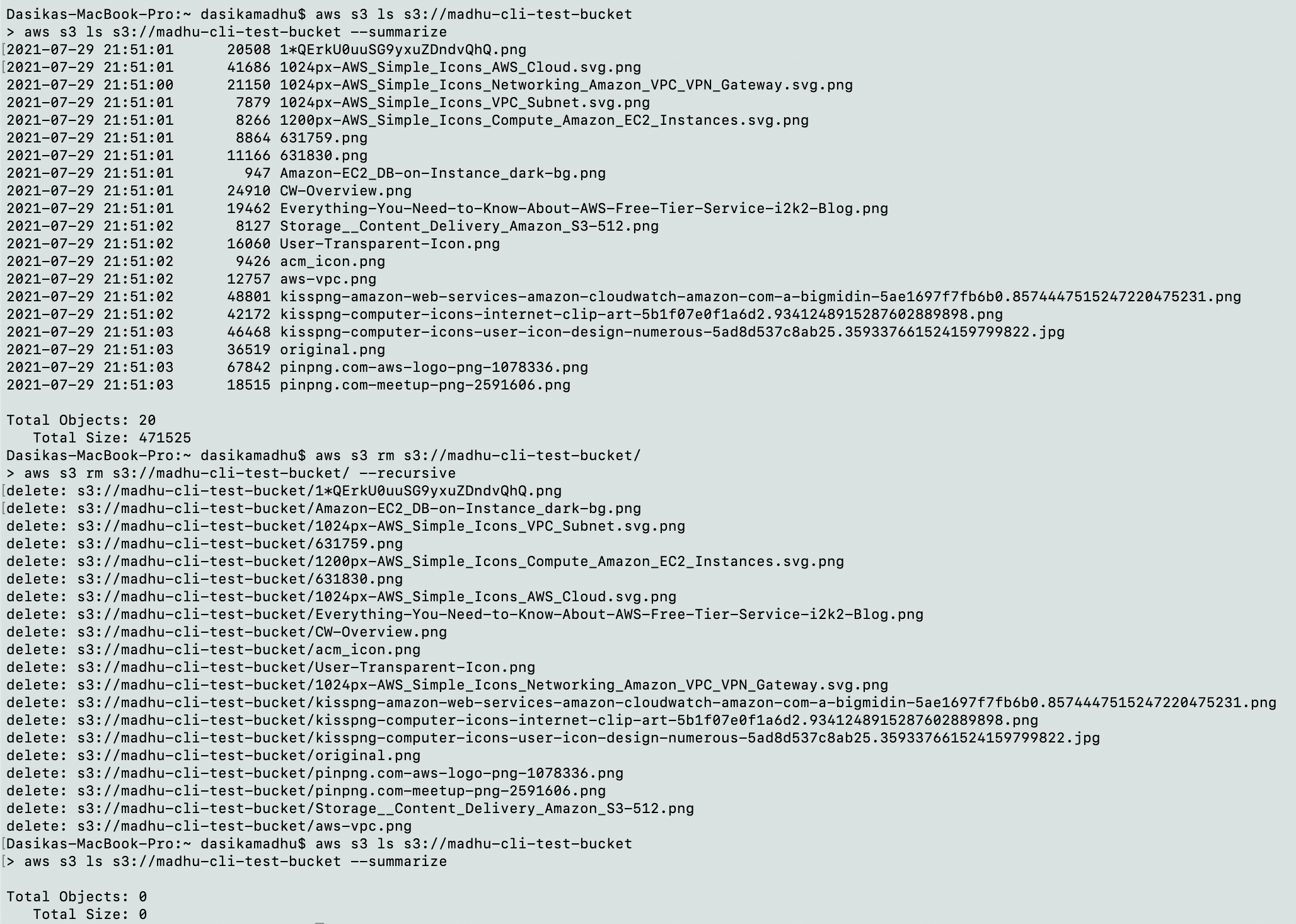

Remove all objects recursively from a bucket.

$ aws s3 rm s3://madhu-cli-test-bucket/

aws s3 rm s3://madhu-cli-test-bucket/ --recursive

delete: s3://madhu-cli-test-bucket/1QErkU0uuSG9yxuZDndvQhQ.png
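Since a recursive delete cannot be undone, it can be worth previewing it first. The rm command accepts a --dryrun flag that displays the operations it would perform without running them. The wrapper below is a minimal sketch: preview_recursive_delete is a hypothetical name, and it only adds a basic check that the argument looks like an S3Uri.

```shell
# Preview a recursive delete without removing anything.
# preview_recursive_delete is a hypothetical wrapper around `aws s3 rm`.
preview_recursive_delete() {
  local target="$1"
  case "$target" in
    s3://?*) ;;                                          # looks like an S3Uri
    *) echo "expected an S3Uri, got: $target" >&2; return 1 ;;
  esac
  # --dryrun lists the deletes that would happen; nothing is removed
  aws s3 rm "$target" --recursive --dryrun
}

# Example use (requires a configured AWS CLI):
#   preview_recursive_delete s3://madhu-cli-test-bucket/
```

Once the preview looks right, run the same rm command without --dryrun to perform the delete.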

-

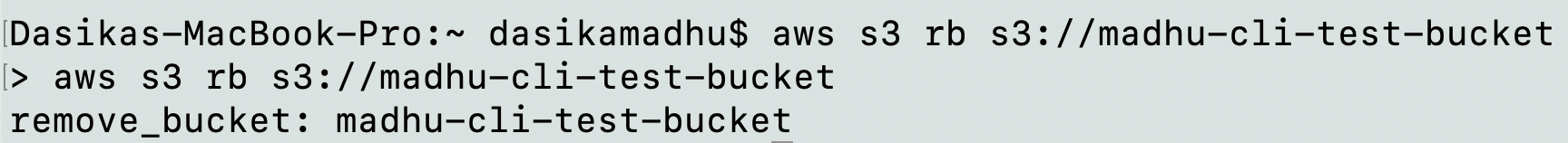

Delete a bucket.

$ aws s3 rb s3://madhu-cli-test-bucket

aws s3 rb s3://madhu-cli-test-bucket

remove_bucket: madhu-cli-test-bucket

delete an empty bucket

delete an empty bucket

-

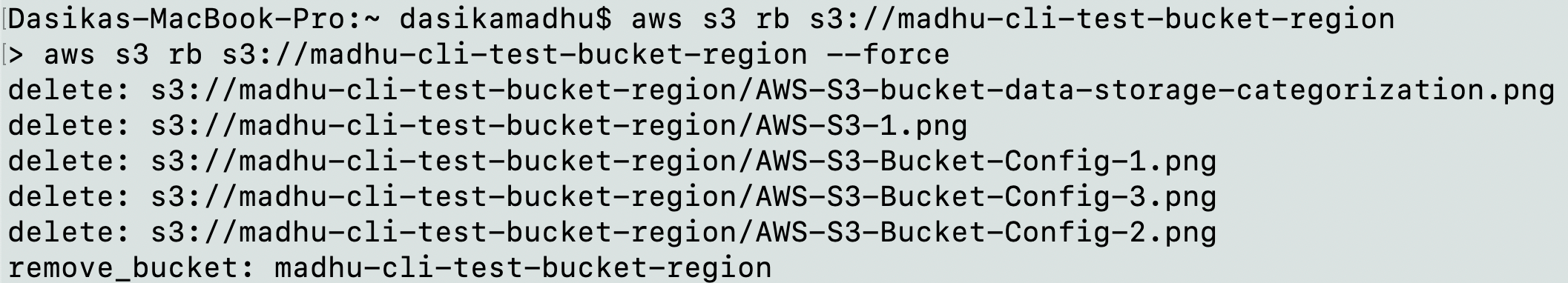

If a bucket is not empty, it cannot be deleted. In this case, use the --force option to empty and delete the bucket.

$ aws s3 rb s3://madhu-cli-test-bucket-region

aws s3 rb s3://madhu-cli-test-bucket-region --force

delete: s3://madhu-cli-test-bucket-region/AWS-S3-bucket-data-storage-categorization.png

remove_bucket: madhu-cli-test-bucket-region

--force to empty and delete the bucket

--force to empty and delete the bucket

-

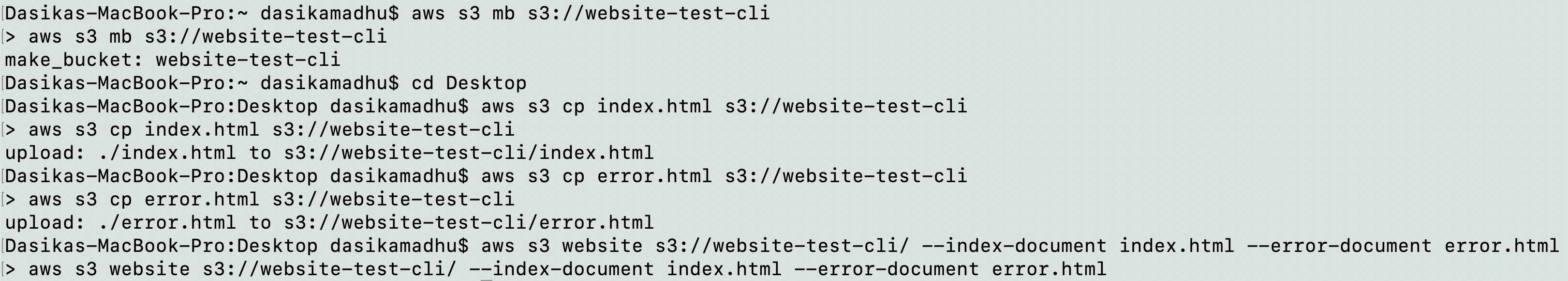

Use an S3 bucket to host a static website. The index.html and error.html files have to be added to your bucket before this configuration.

$ aws s3 website s3://website-test-cli/ --index-document index.html --error-document error.html

aws s3 website s3://website-test-cli/ --index-document index.html --error-document error.html

s3 hosting website configuration

s3 hosting website configuration

Use a presigned URL to grant access to S3 objects. The --expires-in option sets the number of seconds before the presigned URL expires. Without it, the URL is valid for 3600 seconds.

$ aws s3 presign s3://website-test-cli/index.html

$ aws s3 presign s3://website-test-cli/error.html --expires-in 100

presign with and without expiry time
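The AWS CLI reference documents --expires-in as a number of seconds, in line with the 3600-second default mentioned earlier, so a lifetime planned in minutes has to be converted first. A minimal sketch, where minutes_to_seconds is a hypothetical helper:

```shell
# Convert a lifetime in minutes to the seconds that --expires-in expects.
# minutes_to_seconds is a hypothetical helper name.
minutes_to_seconds() {
  echo $(( $1 * 60 ))
}

minutes_to_seconds 15    # prints 900

# Example use (requires a configured AWS CLI):
#   aws s3 presign s3://website-test-cli/index.html --expires-in "$(minutes_to_seconds 15)"
```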

References

AWS has a lot of documentation on the CLI. These are the ones I followed while writing this blog.

Happy learning!