Introduction

The original vision of cloud computing was automated, on-demand services that scaled dynamically to meet demand.

While this vision is now a reality, it doesn’t happen on its own. Cloud automation is complex and requires specialized tools, expertise, and hard work.

In this post, we continue exploring cloud automation with a practical example.

The plan is to build a container and push it to a container registry, all from within Bitbucket Pipelines.

What are Bitbucket Pipelines?

The Bitbucket documentation defines Bitbucket Pipelines as follows:

An integrated CI/CD service built into Bitbucket. It allows you to automatically build, test, and even deploy your code based on a configuration file in your repository.

Essentially, we create containers in the cloud for you. Inside these containers, you can run commands (like you might on a local machine) but with all the advantages of a fresh system, customized and configured for your needs.

So what does this mean exactly?

It’s a service offered by Bitbucket that builds, tests, and deploys code according to a configuration file stored in the repository.

This configuration is a YAML file named bitbucket-pipelines.yml, located in the root of the repository.

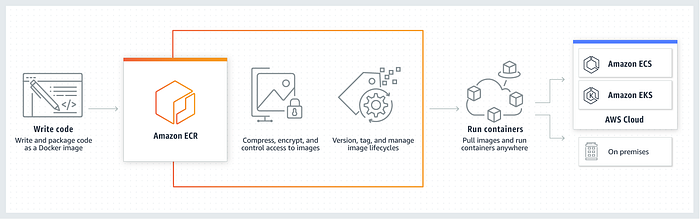

What is Amazon Elastic Container Registry (ECR)?

Amazon Elastic Container Registry (Amazon ECR) is an AWS-managed container image registry service that supports private container image repositories with resource-based permissions using AWS IAM.

This lets specified users or Amazon EC2 instances access your container repositories and images.

You can use your preferred CLI to push, pull, and manage Docker images, Open Container Initiative (OCI) images, and OCI compatible artifacts.

Amazon ECR contains the following components:

- Registry

An Amazon ECR registry is provided to each AWS account; you can create image repositories in your registry and store images in them.

For more information, see Amazon ECR private registries.

- Authorization token

Your client must authenticate to Amazon ECR registries as an AWS user before it can push and pull images.

For more information, see Private registry authentication.

- Repository

An Amazon ECR image repository contains your Docker images, Open Container Initiative (OCI) images, and OCI compatible artifacts.

For more information, see Amazon ECR private repositories.

- Repository policy

You can control access to your repositories and the images within them with repository policies.

For more information, see Repository policies.

- Image

You can push and pull container images to your repositories. You can use these images locally on your development system, or you can use them in Amazon ECS task definitions and Amazon EKS pod specifications.

For more information, see Using Amazon ECR images with Amazon ECS and Using Amazon ECR Images with Amazon EKS.
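The registry and authorization token components above come together when a client logs in. The following is a minimal shell sketch, assuming the AWS CLI and Docker are installed. The account ID 123456789012 is a placeholder and both function names are hypothetical; the us-east-2 region matches the one used later in this post.

```shell
#!/bin/sh
# Sketch only: the account ID below is a placeholder, not a real account.

# Build the hostname of an account's private ECR registry.
# Format: <account-id>.dkr.ecr.<region>.amazonaws.com
ecr_registry_host() {
    account_id="$1"
    region="$2"
    printf '%s.dkr.ecr.%s.amazonaws.com\n' "$account_id" "$region"
}

# Exchange AWS credentials for a temporary token and hand it to Docker.
# Requires the AWS CLI and Docker, so it is defined here but not called.
ecr_login() {
    account_id="$1"
    region="$2"
    aws ecr get-login-password --region "$region" |
        docker login --username AWS --password-stdin \
            "$(ecr_registry_host "$account_id" "$region")"
}

# Print the registry hostname for the placeholder account.
ecr_registry_host 123456789012 us-east-2
```

On a machine with valid AWS credentials, calling ecr_login 123456789012 us-east-2 (with a real account ID) should leave Docker authenticated against that registry.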

What is Docker?

Docker is a tool designed to make it easier to create, deploy, and run applications by using containers.

Containers allow a developer to package up an application with all of the parts it needs, such as libraries and other dependencies, and deploy it as one package.

Thanks to the container, the developer can rest assured that the application will run on any other Linux machine, regardless of any customized settings on that machine that differ from the machine used for writing and testing the code.

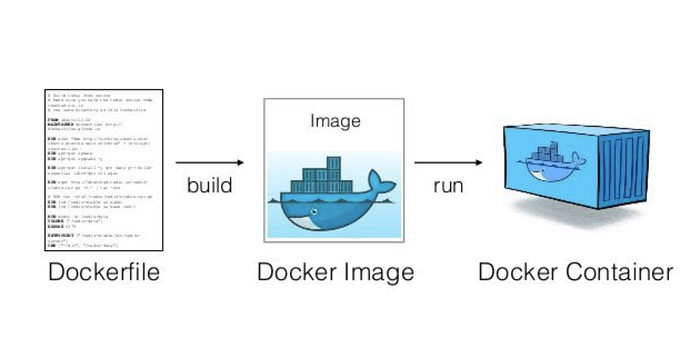

What is a Docker Image?

A Docker image is built from instructions written in a special file called a Dockerfile. The Dockerfile has its own syntax and defines the steps Docker takes to build the image.

Since images are made of layers upon layers of changes, each Dockerfile instruction that modifies the filesystem, such as RUN or COPY, adds a new layer to the image.

When you run docker build . in the same directory as the Dockerfile, the Docker daemon builds the image and packages it so you can use it. You can then run docker run <image-name> to start a new container from that image.
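To make the build-and-run flow concrete, here is a minimal sketch. The Dockerfile contents, the demo-app image name, and the helper function names are illustrative assumptions, not part of the original project. The build call is left commented out so the snippet also runs on a machine without Docker.

```shell
#!/bin/sh
# Sketch only: image name and Dockerfile contents are made up for illustration.

# Write a three-instruction Dockerfile into the given directory.
write_demo_dockerfile() {
    cat > "$1/Dockerfile" <<'EOF'
FROM alpine:3.19
RUN echo "hello from the image" > /greeting.txt
CMD ["cat", "/greeting.txt"]
EOF
}

# Build an image from the directory, then start a throwaway container from it.
build_and_run() {
    image_name="$1"
    context_dir="$2"
    docker build -t "$image_name" "$context_dir" &&
        docker run --rm "$image_name"
}

# Create a scratch build context and place the Dockerfile in it.
workdir="$(mktemp -d)"
write_demo_dockerfile "$workdir"
echo "Dockerfile written to $workdir"

# On a machine with a running Docker daemon:
# build_and_run demo-app "$workdir"
```

Passing the directory as the build context plays the same role as the "." in docker build . when the command is run from inside that directory.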

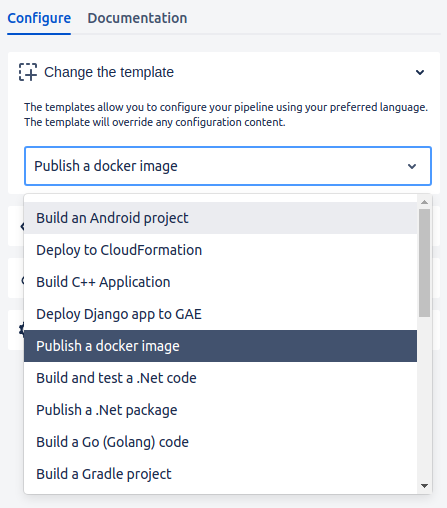

Configuring the Pipeline To Build and Deploy

There are two ways to configure a Bitbucket pipeline:

- Write the YAML file directly.

- Use the UI wizard provided by Bitbucket.

An advantage of the Bitbucket configuration wizard is that it provides templates to start from. Note that applying a template overrides any existing configuration content.

In this tutorial, we’ll push a Docker image to the Amazon Elastic Container Registry (ECR).

The pipeline does this in two parts: a docker build command builds the application image, and a pipe, configured with our AWS credentials, pushes that image to ECR.

To use the pipe, you need an IAM user configured with programmatic access, or a Web Identity Provider (OIDC) role, with the permissions necessary to push Docker images to your ECR repository.

You also need to set up an ECR container registry if you don’t already have one.

The AWS ECR Getting started guide from AWS explains how to set up a new registry.
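If the repository does not exist yet, it can also be created from the command line. The following is a minimal sketch, assuming the AWS CLI is installed and configured with credentials. The function name is hypothetical; the repository name application and the region us-east-2 mirror the values used in this tutorial.

```shell
#!/bin/sh
# Sketch only: assumes the AWS CLI is installed and has credentials configured.

# Create the ECR repository only when it does not already exist.
# Any describe failure (including missing credentials) falls through to the
# create attempt, which then reports its own error.
ensure_ecr_repository() {
    repo_name="$1"
    region="$2"
    if aws ecr describe-repositories \
        --repository-names "$repo_name" --region "$region" >/dev/null 2>&1; then
        echo "repository $repo_name already exists"
    else
        aws ecr create-repository \
            --repository-name "$repo_name" --region "$region" >/dev/null &&
            echo "repository $repo_name created"
    fi
}

# Usage (needs real AWS credentials):
# ensure_ecr_repository application us-east-2
```

Checking before creating keeps the function safe to call repeatedly, which is convenient if it ever runs as part of a setup script.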

    image: atlassian/default-image:2
    pipelines:
      branches:
        master:
          - step:
              name: Build and AWS Setup
              services:
                - docker
              script:
                # change directory to application folder
                - cd application-folder
                # creates variables (timestamp, image name and tag)
                - export TIMESTAMP="$(date +%Y%m%d)"
                - export IMAGE_NAME=application
                - export TAG="$TIMESTAMP"
                # builds docker image from a local dockerfile
                - docker build -t application .
                # tags image as a way to id it by the timestamp
                - docker tag application application:$TIMESTAMP
                # use pipe to push the image to AWS ECR
                - pipe: atlassian/aws-ecr-push-image:1.3.0
                  variables:
                    AWS_ACCESS_KEY_ID: $AWS_ACCESS_KEY_ID
                    AWS_SECRET_ACCESS_KEY: $AWS_SECRET_ACCESS_KEY
                    AWS_DEFAULT_REGION: us-east-2
                    IMAGE_NAME: application:$TIMESTAMP
                    TAGS: "$TIMESTAMP $BITBUCKET_BUILD_NUMBER"

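AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY are configured as repository variables, so their values never appear in the YAML file; the pipe receives them through the $AWS_ACCESS_KEY_ID and $AWS_SECRET_ACCESS_KEY references. AWS_DEFAULT_REGION can be configured the same way, although this example sets it directly to us-east-2.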
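The tagging logic from the pipeline script can be tried locally before committing. The sketch below is only an illustration: it prints the docker tag and docker push commands a manual push would need rather than running them, and it does not describe how the pipe works internally. The registry host is a placeholder and the function name is hypothetical.

```shell
#!/bin/sh
# Sketch only: prints commands instead of running them; registry host is a placeholder.

# Print the tag and push commands for one local image and a list of tags.
print_push_commands() {
    registry="$1"
    image_name="$2"
    shift 2
    for tag in "$@"; do
        echo "docker tag $image_name $registry/$image_name:$tag"
        echo "docker push $registry/$image_name:$tag"
    done
}

# Same day-level timestamp format as the pipeline script (YYYYMMDD).
TIMESTAMP="$(date +%Y%m%d)"
# BITBUCKET_BUILD_NUMBER is set by Pipelines; default to 0 when run locally.
BUILD_NUMBER="${BITBUCKET_BUILD_NUMBER:-0}"

print_push_commands 123456789012.dkr.ecr.us-east-2.amazonaws.com application \
    "$TIMESTAMP" "$BUILD_NUMBER"
```

Because the timestamp has day resolution, two builds on the same day share that tag; the build number tag is what tells them apart.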

Once the pipeline has been committed, Bitbucket generates a code insight along with annotations, metrics, and reports.

If the operation was successful, an image of the application should be located inside an Elastic Container Registry repository.

Hope you found this article useful. Thank you for reading.
