Problem statement

Changing the customer-managed KMS key associated with an AWS S3 bucket is a challenge when the bucket already contains a massive amount of data. In this article, we shall see the options to achieve this.

Table of contents

- Introduction

- Steps to change the KMS key with all the existing data re-encrypted with the new KMS Key

- Step 1: change the KMS key

- Step 2: self copy

- Costs associated with option 1 and option 2

- Summary

- References

1. Introduction

As a project evolves, or because of a migration, there may be a requirement to change the KMS key of an S3 bucket. Although S3 allows the KMS key to be changed after the bucket is created, switching to a new key may cause unwanted side effects if it is not handled properly.

Let us see why this needs more attention:

- Once the key is changed, only new objects added to the bucket are encrypted with the new KMS key. Changing the key does not trigger re-encryption of the existing objects in the bucket.

- Access still works transparently as long as the user or role has permission on both the new and the old KMS keys. The shortcoming is that if the old key is deleted, the old S3 objects can never be decrypted again.

- Both KMS keys must be maintained if the two-key strategy is chosen. This adds operational and maintenance overhead and increases the overall complexity.

- When a new user or role needs access to the bucket data, permission must be granted on both KMS keys.
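To make the first point concrete, here is a minimal sketch that lists the objects not yet encrypted with the new key. It assumes `s3` is a boto3 S3 client and that `HeadObject` reports the key ARN in `SSEKMSKeyId`; the function name is hypothetical. It issues one request per object, so it is meant for spot checks rather than for very large buckets.

```python
from typing import Any, Iterator


def objects_not_using_key(s3: Any, bucket: str, new_key_arn: str) -> Iterator[str]:
    """Yield the keys of objects whose SSE-KMS key is not `new_key_arn`.

    `s3` is assumed to be a boto3 S3 client, e.g. boto3.client("s3").
    """
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        # "Contents" is missing from a page when it holds no objects.
        for obj in page.get("Contents", []):
            head = s3.head_object(Bucket=bucket, Key=obj["Key"])
            # SSEKMSKeyId is only present for SSE-KMS encrypted objects.
            if head.get("SSEKMSKeyId") != new_key_arn:
                yield obj["Key"]
```

Running it before and after the self-copy step described later gives a quick way to confirm that no object is left on the old key.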

2. Steps to change the KMS key with all the existing data re-encrypted with the new KMS Key

This can be achieved in two steps. In the first step, the KMS key of the bucket is changed. In the second step, the data in the bucket is copied back to the same bucket. In this way, we ensure that existing data in the bucket is also encrypted with the new KMS key.

Next, we shall see how to carry out the first and second steps.

3. Step 1: change the KMS key

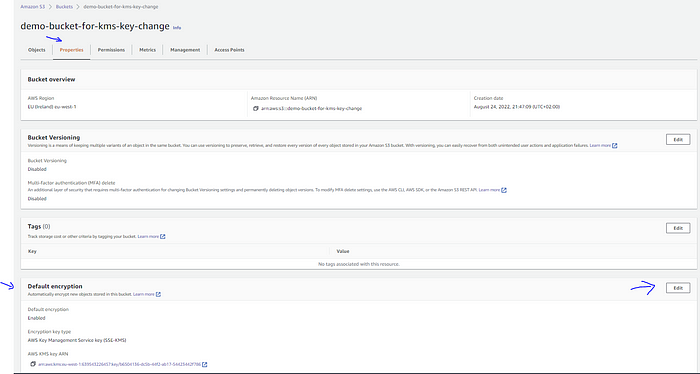

- Go to the S3 bucket ➔ Properties (tab) ➔ Default encryption (section)

Encryption details of the S3 bucket:

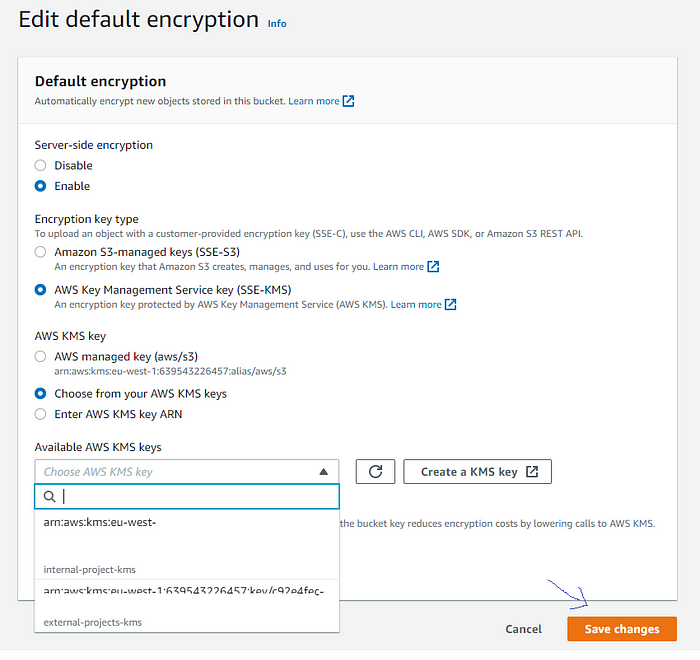

- Click on the “Edit” button and select the new KMS key from the list. After the key selection, hit the “Save changes” button.

Selecting the new KMS key:
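The same change can also be made through the API instead of the console. The sketch below assumes a boto3 S3 client and uses `put_bucket_encryption`; the helper names and the `bucket_key` parameter are illustrative and not part of the original walkthrough.

```python
from typing import Any, Dict


def default_encryption_config(kms_key_arn: str, bucket_key: bool = False) -> Dict[str, Any]:
    """Build the default-encryption rule that points the bucket at a KMS key."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                # Assumption: set this to match the bucket's current setting,
                # because the call replaces the whole encryption configuration.
                "BucketKeyEnabled": bucket_key,
            }
        ]
    }


def set_bucket_kms_key(s3: Any, bucket: str, kms_key_arn: str) -> None:
    """Switch the bucket's default encryption key (Step 1 of this article)."""
    s3.put_bucket_encryption(
        Bucket=bucket,
        ServerSideEncryptionConfiguration=default_encryption_config(kms_key_arn),
    )
```

As with the console, this only affects objects written afterwards; existing objects keep the old key until they are copied.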

4. Step 2: self copy

Copy the existing data of the bucket back to the same bucket, i.e., source and destination buckets are the same.

The self-copy step can be achieved in two ways depending on the amount of data already present in the bucket.

Option 1: when the data in the S3 bucket is relatively small.

Option 2: when the data is relatively large.

Option 1: self-copy in case of small data

When the data in the S3 bucket is small (that is, it can be copied in a matter of minutes, or up to an hour), use option 1.

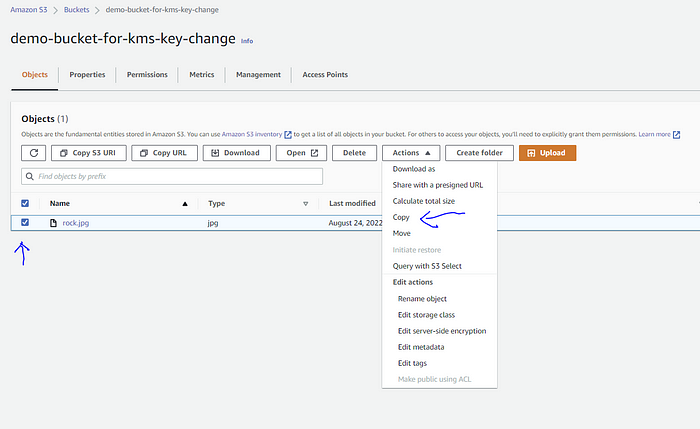

- Go to the bucket, select all the data ➔ Actions ➔ Copy ➔ Select destination as the current bucket ➔ Copy (button).

Selecting “Copy” from the Actions dropdown:

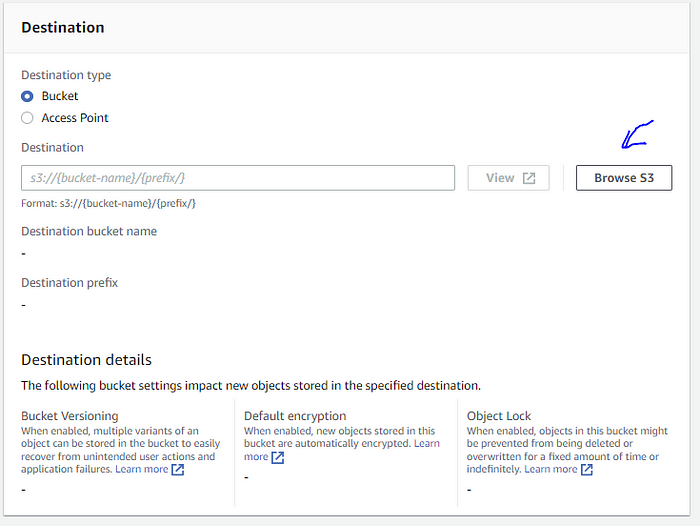

Using “Browse S3” select the S3 bucket:

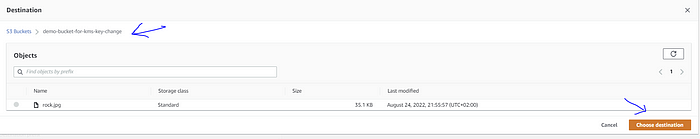

Choose the destination as the source bucket:

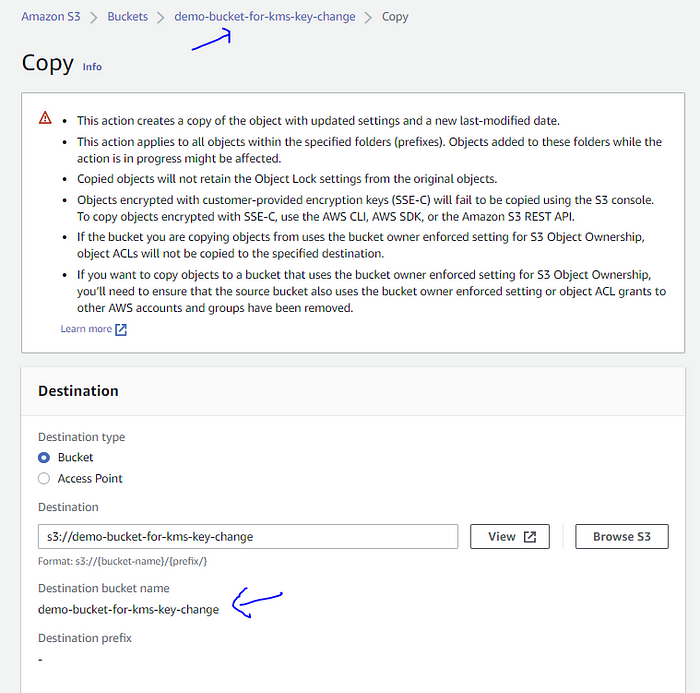

You can see the destination bucket is selected as the source bucket.

The “destination” bucket is the same as the “source” bucket:

The limitation of this approach is that if an AWS Console session expiry time is configured and the copy operation takes longer than that, the copy operation stops.
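One way around the console session limit for small buckets is to script the self-copy. The following is a minimal sketch, assuming a boto3 S3 client, an unversioned bucket, and objects no larger than 5 GB (the limit of a single `CopyObject` call); the function name is hypothetical. The new key is passed explicitly so that the in-place copy changes the object's encryption attributes.

```python
from typing import Any


def self_copy_with_key(s3: Any, bucket: str, new_key_arn: str, prefix: str = "") -> int:
    """Copy every object under `prefix` onto itself, encrypted with `new_key_arn`.

    Returns the number of objects copied. `s3` is assumed to be a boto3 S3 client.
    """
    copied = 0
    paginator = s3.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket, Prefix=prefix):
        for obj in page.get("Contents", []):
            s3.copy_object(
                Bucket=bucket,
                Key=obj["Key"],
                CopySource={"Bucket": bucket, "Key": obj["Key"]},
                # Stating the encryption explicitly makes the self-copy a real change.
                ServerSideEncryption="aws:kms",
                SSEKMSKeyId=new_key_arn,
            )
            copied += 1
    return copied
```

Note that a copy written this way lands in the STANDARD storage class unless `StorageClass` is also passed, so buckets that use other storage classes would need that handled as well.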

Option 2: use DataSync in case of huge data

When the data is too large, or copying one prefix at a time is not practical, one can use the AWS managed service DataSync. It can copy data between AWS storage services, including from S3 to S3.

In this article, a CloudFormation template is used to create the necessary resources: managed policies, a service role, DataSync locations, and a DataSync task.

kms-update-data-sync-self-copy.yaml:

    AWSTemplateFormatVersion: 2010-09-09
    Resources:
      # Read/write access limited to the one bucket being re-encrypted
      S3RestrictedPolicy:
        Type: 'AWS::IAM::ManagedPolicy'
        Properties:
          ManagedPolicyName: S3_BUCKET_RESTRICTED_POLICY
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Sid: BucketAccess
                Effect: Allow
                Action:
                  - 's3:Get*'
                  - 's3:List*'
                  - 's3:Put*'
                  - 's3:Delete*'
                Resource:
                  - arn:aws:s3:::<your-s3-bucket-name>
                  - arn:aws:s3:::<your-s3-bucket-name>/*
      # Decrypt with the old key, encrypt with the new key
      KMSRestrictedAllowPolicy:
        Type: 'AWS::IAM::ManagedPolicy'
        Properties:
          ManagedPolicyName: KMS_RESTRICTED_POLICY
          PolicyDocument:
            Version: 2012-10-17
            Statement:
              - Sid: AccessToKMSKey
                Effect: Allow
                Action:
                  - kms:Encrypt
                  - kms:Decrypt
                  - kms:ReEncrypt*
                  - kms:GenerateDataKey*
                  - kms:DescribeKey
                Resource:
                  - <old KMS key ARN>
                  - <new KMS key ARN>
      # Service role assumed by DataSync, restricted to this account
      DataSyncCopyRole:
        Type: 'AWS::IAM::Role'
        Properties:
          RoleName: DATASYNC_S3_COPY_ROLE
          AssumeRolePolicyDocument:
            Version: '2012-10-17'
            Statement:
              - Effect: Allow
                Principal:
                  Service:
                    - datasync.amazonaws.com
                Action: sts:AssumeRole
                Condition:
                  StringEquals:
                    aws:SourceAccount: !Sub '${AWS::AccountId}'
                  ArnLike:
                    aws:SourceArn: !Sub 'arn:aws:datasync:${AWS::Region}:${AWS::AccountId}:*'
          ManagedPolicyArns:
            - !Ref KMSRestrictedAllowPolicy
            - !Ref S3RestrictedPolicy
      # Source location
      S3BucketLocation:
        Type: AWS::DataSync::LocationS3
        Properties:
          S3BucketArn: 'arn:aws:s3:::<your-s3-bucket-name>'
          S3Config:
            BucketAccessRoleArn: !GetAtt DataSyncCopyRole.Arn
          S3StorageClass: STANDARD
      # Destination location: the same bucket, registered a second time
      S3BucketLocationAgain:
        Type: AWS::DataSync::LocationS3
        Properties:
          S3BucketArn: 'arn:aws:s3:::<your-s3-bucket-name>'
          S3Config:
            BucketAccessRoleArn: !GetAtt DataSyncCopyRole.Arn
          S3StorageClass: STANDARD
      SelfCopyTask:
        Type: AWS::DataSync::Task
        Properties:
          SourceLocationArn: !Ref S3BucketLocation
          DestinationLocationArn: !Ref S3BucketLocationAgain
          Name: 'self-copy-task'
          Options:
            TaskQueueing: ENABLED
            TransferMode: ALL
            VerifyMode: ONLY_FILES_TRANSFERRED
    Outputs:
      S3RestrictedPolicyOutput:
        Description: S3_BUCKET-RESTRICTED-POLICY-ARN
        Value: !Ref S3RestrictedPolicy
        Export:
          Name: S3-BUCKET-RESTRICTED-POLICY-ARN
      KMSRestrictedAllowPolicyOutput:
        Description: KMS-RESTRICTED-POLICY-ARN
        Value: !Ref KMSRestrictedAllowPolicy
        Export:
          Name: KMS-RESTRICTED-POLICY-ARN
      DataSyncCopyRoleOutput:
        Description: DATASYNC-S3-COPY-ROLE-ARN
        Value: !GetAtt DataSyncCopyRole.Arn
        Export:
          Name: DATASYNC-S3-COPY-ROLE-ARN

Let’s look at the important sections of the CloudFormation template:

S3RestrictedPolicy: a managed policy with permissions on the respective S3 bucket only.

KMSRestrictedAllowPolicy: a managed policy with only the required permissions on the new and old KMS keys.

DataSyncCopyRole: the DataSync service role needed to copy the data in the S3 bucket. The above two policies are attached to this role.

S3BucketLocation: the DataSync location for our S3 bucket.

S3BucketLocationAgain: a second DataSync location for the same bucket. DataSync does not let us use the same location as both source and destination when defining a task, so to get past this validation check we create two locations pointing to the same bucket.

SelfCopyTask: the DataSync task, which actually does the job for us.
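For readers who prefer to deploy the template from a script rather than the console, the sketch below shows one possible way with a boto3 CloudFormation client. The function names and the stack name are hypothetical. Because the template assigns explicit names to its IAM resources (`ManagedPolicyName`, `RoleName`), the stack has to be created with the `CAPABILITY_NAMED_IAM` capability.

```python
from typing import Any, Dict


def create_stack_params(stack_name: str, template_body: str) -> Dict[str, Any]:
    """Build the CreateStack arguments for the self-copy template."""
    return {
        "StackName": stack_name,
        "TemplateBody": template_body,
        # Needed because the template creates IAM resources with custom names.
        "Capabilities": ["CAPABILITY_NAMED_IAM"],
    }


def deploy_self_copy_stack(cfn: Any, stack_name: str, template_path: str) -> str:
    """Create the stack and block until CloudFormation reports completion.

    `cfn` is assumed to be a boto3 CloudFormation client.
    """
    with open(template_path, encoding="utf-8") as handle:
        params = create_stack_params(stack_name, handle.read())
    stack_id = cfn.create_stack(**params)["StackId"]
    cfn.get_waiter("stack_create_complete").wait(StackName=stack_name)
    return stack_id
```

A call might look like `deploy_self_copy_stack(boto3.client("cloudformation"), "kms-self-copy", "kms-update-data-sync-self-copy.yaml")`, with the placeholders in the template filled in first.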

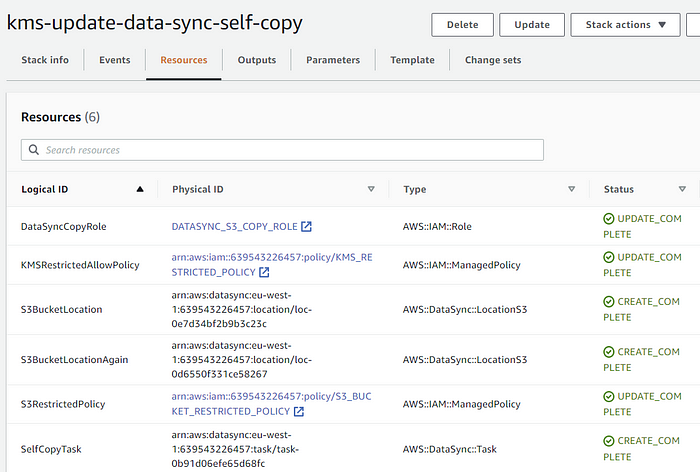

Resources created after executing the CloudFormation script:

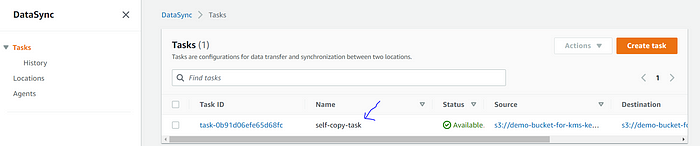

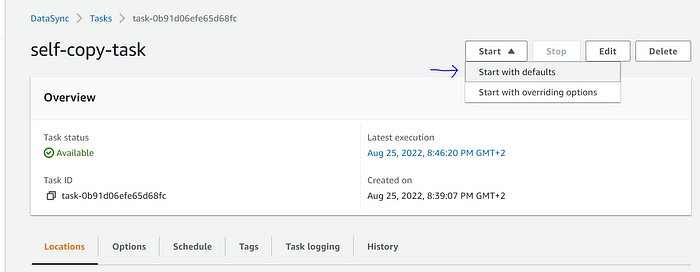

Next, navigate to the DataSync service ➔ Tasks. A task named “self-copy-task” will have been created.

DataSync task created:

Click on the “Task ID” ➔ on the next screen ➔ select “Start with defaults” from the Start menu button.

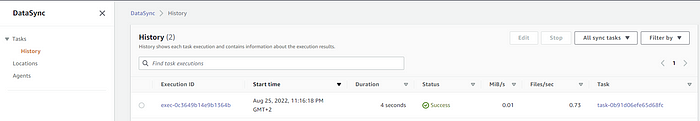

Depending on the amount of data, the copy may take some time. The copy job can be monitored from the “History” tab.

History tab line items:

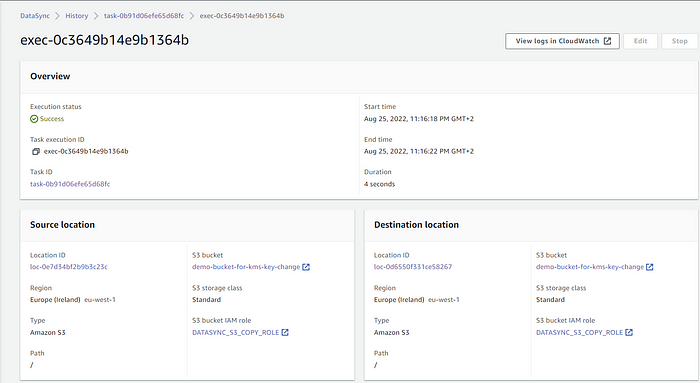

Copy task status with copy stats:

After the copy job succeeds, the objects in the S3 bucket will have been re-encrypted with the bucket's new KMS key.
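Starting the task and watching its status can also be scripted. This is a rough sketch assuming a boto3 DataSync client; the function name and polling interval are illustrative, and it treats `SUCCESS` and `ERROR` as the only final states.

```python
import time
from typing import Any

# Assumption: a task execution ends in one of these two states.
TERMINAL_STATUSES = {"SUCCESS", "ERROR"}


def run_task_and_wait(datasync: Any, task_arn: str, poll_seconds: float = 30.0) -> str:
    """Start a DataSync task execution and poll until it finishes.

    Returns the final status string reported by DescribeTaskExecution.
    """
    execution_arn = datasync.start_task_execution(TaskArn=task_arn)["TaskExecutionArn"]
    while True:
        status = datasync.describe_task_execution(
            TaskExecutionArn=execution_arn
        )["Status"]
        if status in TERMINAL_STATUSES:
            return status
        time.sleep(poll_seconds)
```

The task ARN is shown on the task's page in the DataSync console; it is not one of the template's exported outputs.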

Quotas and limits of DataSync:

DataSync has some quotas and limits. The following are the important ones to consider when data is copied between S3 locations.

The maximum number of tasks you can create is 100 → it is a soft limit that can be increased.

The maximum number of files or objects per task when transferring data between AWS storage services is 25 million → it is a soft limit that can be increased. Instead of reaching out to AWS to increase the limit, we can use the filters present in the DataSync task to limit the scope of the data to be copied to under 25 million objects.

Tip: Although the AWS limit is 25 million, copy tasks can be slow to start (possibly hours) if the number of objects to copy exceeds 15 million. Consider limiting each task run to 3–5 million objects with filters. This observation was made in January 2022.
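As an illustration of scoping a run with filters, the sketch below starts a task execution with an include filter built from a list of prefixes. It assumes a boto3 DataSync client and the `SIMPLE_PATTERN` filter type, where patterns are joined with `|`; the helper names and example prefixes are hypothetical.

```python
from typing import Any, Dict, List


def include_filter(prefixes: List[str]) -> List[Dict[str, str]]:
    """Turn bucket prefixes into a single DataSync include filter rule."""
    # Patterns are written relative to the location root, with a leading slash.
    patterns = ["/" + prefix.strip("/") for prefix in prefixes]
    return [{"FilterType": "SIMPLE_PATTERN", "Value": "|".join(patterns)}]


def start_filtered_execution(datasync: Any, task_arn: str, prefixes: List[str]) -> str:
    """Run the task for the given prefixes only and return the execution ARN."""
    response = datasync.start_task_execution(
        TaskArn=task_arn,
        Includes=include_filter(prefixes),
    )
    return response["TaskExecutionArn"]
```

Running the same task several times with different prefix batches, for example `["logs/2021"]` and then `["logs/2022"]`, keeps each execution well under the object limit without creating extra tasks.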

5. Costs associated with option 1 and option 2

S3 does not charge for data transfer between S3 buckets in the same Region. The usual S3 request charges (for example, for the copy requests) and KMS request charges still apply.

In option 2, since the DataSync service is used, it incurs a cost that depends on the amount of data copied. The rate was $0.0125 per gigabyte (GB) as of 26-Aug-2022.
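As a quick worked example of the DataSync charge, the snippet below multiplies the amount of data by the per-GB rate quoted in this article. The rate may have changed since, so treat the constant as an assumption and check the pricing page before relying on the result; the function name is hypothetical.

```python
# USD per GB copied, as quoted in this article (26-Aug-2022); may be outdated.
DATASYNC_PRICE_PER_GB = 0.0125


def estimate_datasync_cost(total_gb: float, price_per_gb: float = DATASYNC_PRICE_PER_GB) -> float:
    """Estimate the DataSync charge in USD for copying `total_gb` gigabytes."""
    return total_gb * price_per_gb


# Example: a bucket holding 10,240 GB would cost roughly 128 USD at this rate.
ten_tb_cost = estimate_datasync_cost(10 * 1024)
```

This covers only the DataSync per-GB charge, not any other charges on the account.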

6. Summary

In this article, the side effects of changing the KMS key of an existing S3 bucket are discussed first. Then the available options are presented with an example. Option 1 suits best if the existing data in the S3 bucket is not large; option 2 is suggested when the bucket already holds a massive amount of data.

And there we have it. I hope you have found this useful. Thank you for reading.

7. References

https://aws.amazon.com/s3/pricing/ [AWS S3 pricing information]

https://aws.amazon.com/datasync/pricing/ [AWS DataSync pricing information]

https://docs.aws.amazon.com/datasync/latest/userguide/filtering.html [Filtering data transferred by AWS DataSync]