Autoscaling automatically scales resources up or down to meet fluctuating demand. It is a major Kubernetes feature that would otherwise require considerable manual effort. If we deploy our cluster with a “managed” node group, AWS creates an Auto Scaling group (ASG) and manages it as part of the EKS cluster deployment. We can also define the maximum, minimum, and desired capacity for the ASG. Before beginning this article, you can download all the necessary files from the EKS-Cluster-AutoScaler repository, or clone it to your system.

Cluster Installation

If you do not have an existing EKS cluster, create one with a “managed node group” using the following manifest file and eksctl:

Alternatively, we can use a managed node group with spot instances. View the manifest file from here.

Now check that the EKS cluster is running correctly using the following commands:

After the EKS cluster is deployed, you will notice that an Auto Scaling group has also been created as part of the deployment. The EC2 instances of the node group are managed by the ASG. Inspect the Auto Scaling group from the AWS console.

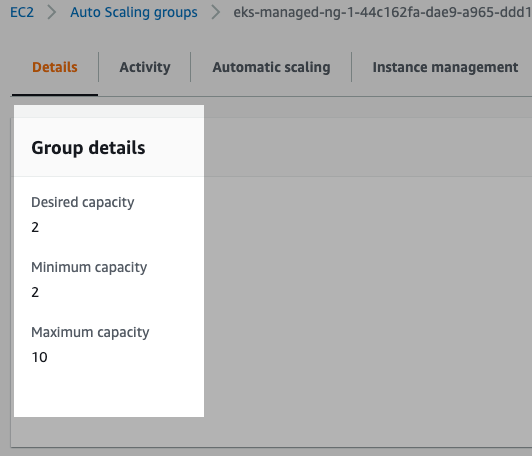

Currently, the desired capacity and the minimum capacity are both set to 2, and the maximum capacity is 10.

Deploy workloads on EKS Cluster

The EKS cluster is now ready to handle workloads. Our expectation is that if the cluster needs additional resources to run those workloads, the ASG will deploy more instances for the existing node group.

Let’s assume we need to deploy the nginx web server as a deployment with 4 replicas on the EKS cluster. After creating the web server, inspect the state of the deployment and its pods:

After inspection, you can see that 2 of the 4 pods are running and the other 2 are pending. If you dig into the reason for the pending state, you will notice that pod scheduling failed due to insufficient CPU and memory.

Because our node group is attached to an ASG, we might expect the node group to scale automatically. Unfortunately, that does not happen: some pods remain unscheduled for lack of resources, and the ASG does not react. We need a solution for this situation.
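The pending state described above comes down to simple arithmetic on resource requests. The following is a minimal sketch of that arithmetic, not the real Kubernetes scheduler: it places pods first-fit by their CPU and memory requests. The node sizes and pod requests are hypothetical numbers chosen so that only one pod fits per node.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    cpu_millicores: int  # allocatable CPU still unreserved on the node
    memory_mib: int      # allocatable memory still unreserved on the node
    pods: list = field(default_factory=list)


def schedule(pods, nodes):
    """Place pods first-fit by resource requests; return the names left pending."""
    pending = []
    for pod_name, cpu_request, memory_request in pods:
        for node in nodes:
            if node.cpu_millicores >= cpu_request and node.memory_mib >= memory_request:
                # Reserve the requested amounts, whether or not the pod uses them.
                node.cpu_millicores -= cpu_request
                node.memory_mib -= memory_request
                node.pods.append(pod_name)
                break
        else:
            # No node has enough unreserved capacity for this pod.
            pending.append(pod_name)
    return pending


# Hypothetical sizes: two nodes, four replicas that each request most of a node.
nodes = [Node("node-1", 1900, 3000), Node("node-2", 1900, 3000)]
pods = [(f"nginx-{i}", 1500, 2048) for i in range(4)]
pending = schedule(pods, nodes)
print(pending)  # ['nginx-2', 'nginx-3']
```

With these numbers, two replicas are placed and two stay pending, which mirrors the 2 running and 2 pending pods observed in the cluster.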

When to use Cluster Autoscaler?

The ASG scales when measured CPU or memory usage goes high. In our current situation, however, CPU and memory usage are not high. What prevents the pods from being scheduled is that their resource requests exceed the resources still available on the nodes. The ASG has no information about the resources allocated to pods. This is where Cluster Autoscaler comes into the picture.
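The difference between the two signals can be sketched as two small checks. This is only an illustration: the 70% target, the function names, and the numbers are assumptions, not values taken from the cluster in this article.

```python
def utilization_policy_fires(avg_cpu_utilization_pct, target_pct=70.0):
    """A utilization-style trigger: fires only when measured usage is high."""
    return avg_cpu_utilization_pct > target_pct


def pod_is_unschedulable(unreserved_cpu_per_node, pod_cpu_request):
    """A request-style check: the pod is stuck if no node has enough unreserved CPU."""
    return all(free < pod_cpu_request for free in unreserved_cpu_per_node)


# Idle nginx pods use almost no CPU, yet their requests have reserved the nodes.
usage_fires = utilization_policy_fires(avg_cpu_utilization_pct=5.0)
stuck = pod_is_unschedulable(unreserved_cpu_per_node=[400, 400], pod_cpu_request=1500)
print(usage_fires, stuck)  # False True
```

A usage-based trigger stays quiet while a pod is stuck, which is the gap a request-aware autoscaler fills.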

What will Cluster Autoscaler accomplish?

The Cluster Autoscaler automatically adds or removes nodes in a cluster based on resource requests from pods. It doesn’t directly measure CPU and memory usage to make a scaling decision. Instead, by default it checks every 10 seconds for pods in a pending state, which indicates that the scheduler could not assign them to a node because of insufficient cluster capacity.
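As a rough sketch of that decision, the function below estimates how many extra nodes the pending pods would need and clamps the result to the ASG maximum. It is a simplification under stated assumptions (one node type, first-fit packing, CPU and memory only) and is not the Cluster Autoscaler's actual algorithm. All names and numbers are hypothetical.

```python
def desired_capacity(current_nodes, pending_requests, node_cpu, node_memory, max_size):
    """Estimate a new desired capacity for the pending pods, capped at max_size.

    pending_requests is a list of (cpu_millicores, memory_mib) tuples.
    """
    new_nodes = []  # each entry is [cpu_left, memory_left] on a node to be added
    for cpu, memory in pending_requests:
        if cpu > node_cpu or memory > node_memory:
            # The pod can never fit on this node type, so adding nodes will not help.
            continue
        for node in new_nodes:
            if node[0] >= cpu and node[1] >= memory:
                node[0] -= cpu
                node[1] -= memory
                break
        else:
            new_nodes.append([node_cpu - cpu, node_memory - memory])
    return min(current_nodes + len(new_nodes), max_size)


# Two pending pods that each need most of a node: 2 existing nodes become 4.
print(desired_capacity(2, [(1500, 2048), (1500, 2048)], 1900, 3000, max_size=10))  # 4
```

When there are no pending pods the function returns the current size unchanged, and the result never exceeds the maximum capacity configured on the ASG.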

How does Cluster Autoscaler work on AWS EKS?

Cluster Autoscaler needs to be deployed as a Deployment on the EKS cluster. The autoscaler pod detects pods that are pending due to a lack of resources. If any pod is pending for that reason, the Cluster Autoscaler calls the AWS API to update the Auto Scaling group automatically. To do this, the Cluster Autoscaler needs the ability to examine and modify EC2 Auto Scaling groups. By using IAM roles for service accounts to associate the service account that the Cluster Autoscaler Deployment runs as with an IAM role, the autoscaler pod can manipulate the ASG to scale the EC2 instances of a node group in or out.

Installation Process

I will explain the Cluster Autoscaler installation process step by step, and finally I will share a script that installs the Cluster Autoscaler in one go.

Prerequisites:

● The Cluster Autoscaler requires specific tags on your Auto Scaling groups so that they can be auto-discovered. If you used **eksctl** to create your node groups, these tags are applied automatically. Review the ASG tags in the AWS Console.

Go to → EC2 → Auto Scaling Groups

● If you didn’t use **eksctl**, you must manually add the following tags to the Auto Scaling groups:

**k8s.io/cluster-autoscaler/enabled:** true

**k8s.io/cluster-autoscaler/<CLUSTER-NAME>:** owned
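A quick way to reason about this prerequisite is a check over the tag keys of an ASG. The helper below is a hypothetical sketch that assumes auto-discovery matches on the presence of the two tag keys. It works on a plain dictionary and does not call the AWS API.

```python
def missing_discovery_tags(asg_tags, cluster_name):
    """Return the auto-discovery tag keys that are absent from an ASG's tags."""
    required = [
        "k8s.io/cluster-autoscaler/enabled",
        f"k8s.io/cluster-autoscaler/{cluster_name}",
    ]
    return [key for key in required if key not in asg_tags]


# Hypothetical tag set for a cluster named "demo-cluster".
tags = {
    "k8s.io/cluster-autoscaler/enabled": "true",
    "Name": "demo-cluster-ng-1",
}
print(missing_discovery_tags(tags, "demo-cluster"))
# ['k8s.io/cluster-autoscaler/demo-cluster']
```

An empty list means both tags are present and the group should be discoverable.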

Step 1: Create an OIDC provider

The cluster needs an IAM OIDC provider. To determine whether you already have one or need to create one, follow these instructions:

● Check whether OIDC_ID exists. If it does, the following commands return a response:

● If OIDC_ID doesn’t exist, create a new provider with the following command:
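To make the check concrete: the OIDC ID is the last path segment of the cluster's OIDC issuer URL, and a provider exists if one of the account's OIDC provider ARNs ends with that ID. The sketch below illustrates that logic on plain strings. It is not the author's commands, and the issuer URL and ARN are placeholder examples.

```python
from urllib.parse import urlparse


def oidc_id_from_issuer(issuer_url):
    """Extract the OIDC ID, which is the last path segment of the issuer URL."""
    path = urlparse(issuer_url).path
    return path.rstrip("/").split("/")[-1]


def provider_exists(oidc_id, provider_arns):
    """Return True if any IAM OIDC provider ARN ends with the cluster's OIDC ID."""
    return any(arn.endswith("/" + oidc_id) for arn in provider_arns)


# Placeholder values for illustration only.
issuer = "https://oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B716D3041E"
arns = [
    "arn:aws:iam::111122223333:oidc-provider/"
    "oidc.eks.us-east-1.amazonaws.com/id/EXAMPLED539D4633E53DE1B716D3041E"
]
oidc_id = oidc_id_from_issuer(issuer)
print(oidc_id, provider_exists(oidc_id, arns))
# EXAMPLED539D4633E53DE1B716D3041E True
```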

Step 2: Configure IAM Policy

The Cluster Autoscaler will be deployed as a set of Kubernetes pods. These pods must have permission to perform AWS API operations, such as examining and modifying EC2 Auto Scaling groups. Create an IAM policy that grants the permissions the Cluster Autoscaler requires and attach it to the IAM role.

Create the IAM policy with the following JSON file:

Create an IAM policy using the downloaded policy file:
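As an illustration of what such a policy document typically looks like, the sketch below builds one in Python and prints it as JSON. The action list reflects permissions commonly granted to the Cluster Autoscaler on AWS. It is an assumption for illustration and may differ from the policy file in the article's repository.

```python
import json


def cluster_autoscaler_policy():
    """Build an IAM policy document with permissions commonly granted to the autoscaler."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    # Read-only calls used to inspect the Auto Scaling groups.
                    "autoscaling:DescribeAutoScalingGroups",
                    "autoscaling:DescribeAutoScalingInstances",
                    "autoscaling:DescribeLaunchConfigurations",
                    "autoscaling:DescribeTags",
                    "ec2:DescribeLaunchTemplateVersions",
                    # Write calls used to scale out and scale in.
                    "autoscaling:SetDesiredCapacity",
                    "autoscaling:TerminateInstanceInAutoScalingGroup",
                ],
                "Resource": "*",
            }
        ],
    }


policy = cluster_autoscaler_policy()
print(json.dumps(policy, indent=2))
```

The two write actions are the ones that let the autoscaler change the desired capacity and terminate specific instances when scaling in.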

Step 3: Configure IAM Role and Service Account

Create an IAM role, attach the IAM policy configured in the previous step, and configure a Kubernetes service account to assume the IAM role. Any pod that is configured to use the service account can then access any AWS service that the role has permission to access.

We can use **eksctl** to easily create a service account and an IAM role with the appropriate IAM policy. Run the following commands:
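Behind the scenes, the link between the service account and the role rests on two pieces: a trust policy on the IAM role that names the cluster's OIDC provider and the service account, and a role ARN annotation on the service account. The sketch below builds both as plain dictionaries to show their shape. The account ID, region, OIDC ID, role name, and the `kube-system/cluster-autoscaler` service account are assumptions for illustration.

```python
def irsa_trust_policy(account_id, region, oidc_id, namespace, service_account):
    """Build a role trust policy that lets one service account assume the role."""
    provider = f"oidc.eks.{region}.amazonaws.com/id/{oidc_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    "StringEquals": {
                        # Only tokens issued for this exact service account match.
                        f"{provider}:sub": f"system:serviceaccount:{namespace}:{service_account}",
                        f"{provider}:aud": "sts.amazonaws.com",
                    }
                },
            }
        ],
    }


def service_account_annotations(role_arn):
    """Annotation that tells EKS which IAM role the service account maps to."""
    return {"eks.amazonaws.com/role-arn": role_arn}


# Placeholder values for illustration only.
trust = irsa_trust_policy(
    "111122223333", "us-east-1", "EXAMPLED539D4633E53DE1B716D3041E",
    "kube-system", "cluster-autoscaler",
)
annotations = service_account_annotations(
    "arn:aws:iam::111122223333:role/cluster-autoscaler-role"
)
print(trust["Statement"][0]["Condition"]["StringEquals"])
print(annotations)
```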

Step 4: Deploy Cluster Autoscaler

Deploy the Cluster Autoscaler using the manifest file. You can view or download the manifest file from here.

Before deploying Cluster Autoscaler, we need to replace some placeholders with the required data in the manifest file.

● Replace <ROLE ARN> placeholder with the ROLEARN environment variable.

● Replace the <YOUR CLUSTER NAME> placeholder with the CLUSTER_NAME environment variable.

● Add the following two options under the “command” section of the deployment named cluster-autoscaler in the manifest file.

Let’s execute the following script to replace and add the necessary data in the manifest file.
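The placeholder substitution itself can be sketched in Python as follows. This is an illustrative stand-in, not the author's script: the manifest fragment, the function name, and the sample values are assumptions, and the two additional options are deliberately left out.

```python
def fill_placeholders(manifest_text, role_arn, cluster_name):
    """Replace the two manifest placeholders, failing loudly if one is absent."""
    replacements = {
        "<ROLE ARN>": role_arn,
        "<YOUR CLUSTER NAME>": cluster_name,
    }
    for placeholder, value in replacements.items():
        if placeholder not in manifest_text:
            raise ValueError(f"placeholder {placeholder!r} not found in manifest")
        manifest_text = manifest_text.replace(placeholder, value)
    return manifest_text


# Hypothetical fragment of a manifest containing both placeholders.
sample = (
    "annotations:\n"
    "  eks.amazonaws.com/role-arn: <ROLE ARN>\n"
    "command:\n"
    "  - --node-group-auto-discovery=asg:tag=k8s.io/cluster-autoscaler/enabled,"
    "k8s.io/cluster-autoscaler/<YOUR CLUSTER NAME>\n"
)
filled = fill_placeholders(
    sample,
    role_arn="arn:aws:iam::111122223333:role/cluster-autoscaler-role",
    cluster_name="demo-cluster",
)
print(filled)
```

Failing when a placeholder is missing guards against deploying a manifest that was already edited or that uses different placeholder names.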

Check the state of the service account and deployment created earlier using the manifest file:

With that, we have completed the installation of the cluster-autoscaler on the EKS cluster.

Script

Alternatively, we can use the following script to install Cluster Autoscaler on the AWS EKS cluster.

Prerequisites and Execution process

Before executing the above script, AWS CLI, kubectl, and eksctl must be installed on the system.

Test and Verification

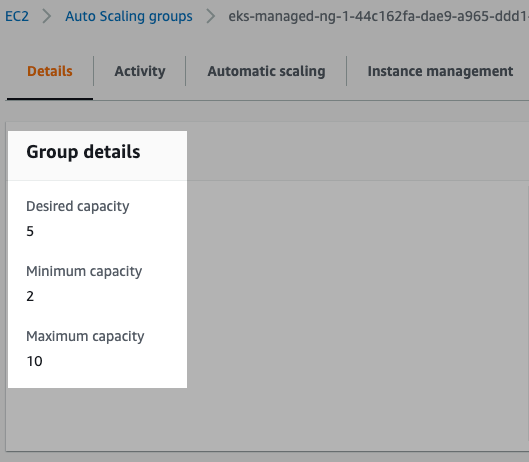

Scale-Out Test

Now it's time to verify that the cluster-autoscaler is functioning correctly. For the sake of testing, let's deploy the nginx-webserver deployment once again and observe whether the cluster nodegroup scales out.

In the above demonstration, we can see that some of the pods are still in a pending state. Let’s inspect one of the pending pods of the deployment.

In the above demonstration, we can see that pod scheduling is failing because we have insufficient CPU and memory. But unlike before, cluster-autoscaler handles the situation by triggering a scale-up of the ASG for the cluster nodegroup. It will take some time to scale the nodegroup. After a while, check the pods' state once again:

Finally, all the pods are in a running state. Inspect the ASG and the EC2 instances. If we inspect the ASG now, we will see that its desired capacity has scaled from 2 to 5 (this may vary in your case).

Scale-In Test

As discussed earlier, the autoscaler triggers the scale-down process if there are unnecessary resources within the EKS cluster. If we now delete the previous deployment, the additional worker nodes deployed earlier become unnecessary, and the autoscaler scales down the nodegroup. Within a few moments, the ASG terminates the unnecessary instances from the nodegroup. In the following image, we can see that the desired capacity of the ASG scales down from 5 to 2 (this may vary in your case).
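The scale-in decision can be approximated with a small sketch. It assumes the Cluster Autoscaler defaults of a 0.5 utilization threshold and a 10 minute unneeded period, looks at CPU requests only, and ignores the real check that a node's pods can be moved elsewhere. The node data and field names are hypothetical.

```python
def scale_in_candidates(nodes, min_size, threshold=0.5, unneeded_minutes=10):
    """Return names of nodes that could be removed without going below min_size."""
    candidates = [
        node["name"]
        for node in nodes
        # Utilization here means requested CPU divided by allocatable CPU.
        if node["requested_cpu"] / node["allocatable_cpu"] < threshold
        and node["minutes_below_threshold"] >= unneeded_minutes
    ]
    removable = max(len(nodes) - min_size, 0)
    return candidates[:removable]


# After the deployment is deleted, all five nodes only carry small system pods.
idle_nodes = [
    {
        "name": f"node-{i}",
        "requested_cpu": 100,
        "allocatable_cpu": 1900,
        "minutes_below_threshold": 12,
    }
    for i in range(1, 6)
]
to_remove = scale_in_candidates(idle_nodes, min_size=2)
print(to_remove)  # ['node-1', 'node-2', 'node-3']
```

With a minimum size of 2, three of the five idle nodes are removable, which matches the desired capacity dropping from 5 to 2.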

Uninstallation process

Use the following script to uninstall cluster-autoscaler from your EKS cluster.
