Recently I’ve been creating an ECS cluster running multiple services. Soon the question popped up: “How can I have two services within the cluster talk to each other?” I didn’t want to use the internet-facing load balancers in front of the services to send requests from one service to another, so I started exploring other possible solutions.

Using AWS Service Connect

My first implementation used AWS ECS Service Discovery. When I checked the infrastructure in the AWS Console, a message suggested switching to AWS ECS Service Connect. So I took a deep dive into AWS ECS Service Connect and switched the implementation over.

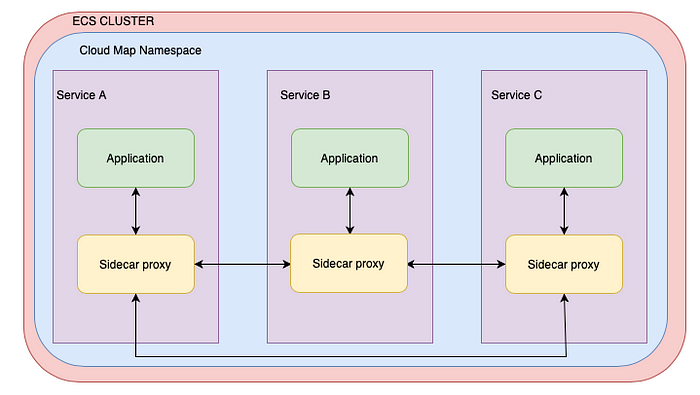

Whereas AWS ECS Service Discovery relies on DNS records in AWS Cloud Map, which can be stale because resolvers and clients cache them, AWS ECS Service Connect uses a sidecar: a proxy container that runs next to each task in your service. The sidecar intercepts outgoing connections and looks up the endpoints of the requested service in real time, targeting only the healthy instances.
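To make the difference with a cached DNS answer concrete, here is a toy model of what such a proxy conceptually does: resolve a logical service name against a live registry on every request and round-robin only over endpoints currently marked healthy. The `ToyProxy` class, the `Registry` shape, the IP addresses and the round-robin policy are all illustrative assumptions. This is not how the AWS-managed proxy is actually implemented.

```typescript
// Toy model of health-aware endpoint selection, for illustration only.
// Names, addresses and the round-robin policy are hypothetical.

interface Endpoint {
  address: string; // e.g. "10.0.1.23:8080"
  healthy: boolean;
}

// A live view of registered endpoints, keyed by discovery name.
type Registry = Map<string, Endpoint[]>;

class ToyProxy {
  private counters = new Map<string, number>();

  constructor(private readonly registry: Registry) {}

  // Picks the next healthy endpoint, reading the registry on every call
  // (unlike a cached DNS answer, which may keep pointing at a dead task until its TTL expires).
  resolve(serviceName: string): string {
    const healthy = (this.registry.get(serviceName) ?? []).filter((e) => e.healthy);
    if (healthy.length === 0) {
      throw new Error(`no healthy endpoints for ${serviceName}`);
    }
    const n = this.counters.get(serviceName) ?? 0;
    this.counters.set(serviceName, n + 1);
    return healthy[n % healthy.length].address;
  }
}

// Usage: two tasks registered for "server", one of which becomes unhealthy.
const registry: Registry = new Map([
  ["server", [
    { address: "10.0.1.10:8080", healthy: true },
    { address: "10.0.2.20:8080", healthy: true },
  ]],
]);
const proxy = new ToyProxy(registry);
console.log(proxy.resolve("server")); // 10.0.1.10:8080
console.log(proxy.resolve("server")); // 10.0.2.20:8080
registry.get("server")![1].healthy = false;
console.log(proxy.resolve("server")); // 10.0.1.10:8080
```

Because the health flag is read at request time, the unhealthy task is skipped immediately, which is the property the sidecar gives you over stale DNS records.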

The sidecar approach may remind you of a service mesh using Envoy. Indeed, AWS ECS Service Connect gives you similar benefits, except that it manages the sidecar for you.

The AWS ECS Service Connect setup in our ECS cluster:

AWS ECS Service Connect operates independently of DNS and does not register private DNS records. Instead, it registers endpoints exclusively with Cloud Map, which makes them discoverable through API calls only. There appears to be no option to instruct Service Connect to also register the service names in DNS.

How to add it to your CDK project

The project is built out of several stacks. A cluster stack creates the ECS cluster and the private namespace I’m going to use, and an ECS service stack is deployed twice: once with the client application and once with the server application. As this is just a demo, the client and server stacks host the same application logic. I’m not going to attach a public load balancer or anything else. The health check in each service targets an endpoint in the other service to verify the connection between them.

```typescript
import * as cdk from 'aws-cdk-lib';
import { Construct } from 'constructs';
import * as ec2 from 'aws-cdk-lib/aws-ec2';
import * as ecs from 'aws-cdk-lib/aws-ecs';
import { PrivateDnsNamespace } from 'aws-cdk-lib/aws-servicediscovery';

export class ClusterStack extends cdk.Stack {
  public readonly cluster: ecs.ICluster;
  public readonly privateDnsNamespace: PrivateDnsNamespace;

  constructor(scope: Construct, id: string, props?: cdk.StackProps) {
    super(scope, id, props);

    // Deploy the cluster into a predefined VPC, referenced via its vpcId
    const vpc = ec2.Vpc.fromLookup(this, 'DemoVpc', { vpcId: 'vpc-xxxx' });

    // Create a cluster inside the VPC; the cluster will host the different services
    this.cluster = new ecs.Cluster(this, 'DemoCluster', {
      vpc,
      clusterName: cdk.PhysicalName.GENERATE_IF_NEEDED,
    });

    // Create a private DNS namespace to which we'll hook our internal service endpoints;
    // the hosted zone will be named my.demo
    this.privateDnsNamespace = new PrivateDnsNamespace(this, 'DemoPrivateDnsNamespace', {
      name: 'my.demo',
      vpc,
    });
  }
}
```

The cluster stack is deployed before the client and server stacks are created. The other stacks reuse two of its properties: the cluster itself and the privateDnsNamespace.

The client and server stacks share the same stack definition and deploy the same application (a Docker image built from the local expressService directory), but they receive different parameters to differentiate the deployed applications.

```typescript
import * as cdk from 'aws-cdk-lib';
import { Construct } from 'constructs';
import * as ec2 from 'aws-cdk-lib/aws-ec2';
import * as ecs from 'aws-cdk-lib/aws-ecs';
import * as ecs_patterns from 'aws-cdk-lib/aws-ecs-patterns';
import { ApplicationLoadBalancer } from 'aws-cdk-lib/aws-elasticloadbalancingv2';
import { PrivateDnsNamespace } from 'aws-cdk-lib/aws-servicediscovery';
import * as path from 'path';

export interface EcsServiceStackProps extends cdk.StackProps {
  readonly privateDnsNamespace: PrivateDnsNamespace;
  readonly cluster: ecs.ICluster;
  // Unique name per deployment ("Server" or "Client") to keep the stacks' resources distinct
  readonly name: string;
}

export class EcsServiceStack extends cdk.Stack {
  constructor(scope: Construct, id: string, props: EcsServiceStackProps) {
    super(scope, id, props);

    const vpc = ec2.Vpc.fromLookup(this, 'DemoVpc', { vpcId: 'vpc-xxxx' });

    // Import the subnet once: a second fromSubnetId call with the same construct ID
    // in the same stack fails with a duplicate-construct error
    const subnet = ec2.Subnet.fromSubnetId(this, 'DemoSubnet', 'subnet-xxxx');

    // Build the Docker image from the local expressService directory
    const container = ecs.ContainerImage.fromAsset(path.resolve(__dirname, 'expressService'));

    // Note: an Application Load Balancer needs subnets in at least two Availability Zones
    const applicationLoadBalancer = new ApplicationLoadBalancer(this, `${props.name}ExternalApplicationLoadBalancer`, {
      internetFacing: true,
      vpc,
      vpcSubnets: { subnets: [subnet] },
      loadBalancerName: `LB${props.name}`,
    });

    const taskDefinition = new ecs.FargateTaskDefinition(this, `${props.name}ServerTaskDef`, {
      memoryLimitMiB: 4096,
      cpu: 2048,
    });

    taskDefinition.addContainer(`${props.name}ServerTask`, {
      image: container,
      // Service Connect refers to this port mapping by its name
      portMappings: [{ containerPort: 8080, name: 'default' }],
      logging: ecs.LogDrivers.awsLogs({
        streamPrefix: 'ServerEvents',
        mode: ecs.AwsLogDriverMode.NON_BLOCKING,
        maxBufferSize: cdk.Size.mebibytes(25),
      }),
    });

    const serviceTask = new ecs_patterns.ApplicationLoadBalancedFargateService(this, `${props.name}FargateService`, {
      cluster: props.cluster,
      taskDefinition,
      desiredCount: 1,
      taskSubnets: { subnets: [subnet] },
      loadBalancer: applicationLoadBalancer,
    });

    // Register the service in the namespace; ECS then adds the Service Connect proxy sidecar
    serviceTask.service.enableServiceConnect({
      namespace: props.privateDnsNamespace.namespaceName,
      services: [
        {
          // Discovery names must be unique within the Cloud Map namespace and lowercase,
          // so derive them from the stack name: "server" and "client"
          discoveryName: props.name.toLowerCase(),
          portMappingName: 'default',
        },
      ],
    });
  }
}
```

Because I’m deploying the same stack in two flavours, I differentiate them by a unique name, passed in as one of the stack properties. The names of the deployed resources, such as the load balancer name, have to be unique to prevent issues during deployment.

```typescript
#!/usr/bin/env node
import 'source-map-support/register';
import * as cdk from 'aws-cdk-lib';
import { ClusterStack } from './stacks/clusterStack';
import { EcsServiceStack } from './stacks/ecsServiceStack';

const app = new cdk.App();

// Vpc.fromLookup needs a concrete account and region on every stack that uses it
const env = {
  account: process.env.CDK_DEFAULT_ACCOUNT,
  region: process.env.CDK_DEFAULT_REGION,
};

const clusterStack = new ClusterStack(app, 'ClusterStack', { env });

new EcsServiceStack(app, 'ServerStack', {
  env,
  privateDnsNamespace: clusterStack.privateDnsNamespace,
  cluster: clusterStack.cluster,
  name: 'Server',
});

new EcsServiceStack(app, 'ClientStack', {
  env,
  privateDnsNamespace: clusterStack.privateDnsNamespace,
  cluster: clusterStack.cluster,
  name: 'Client',
});
```

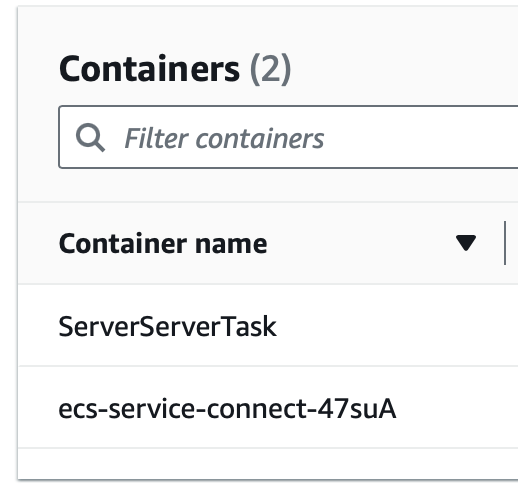

After the deployment, you can inspect the cluster. If you open the deployed server service, you’ll notice that its task now runs the sidecar next to the application container.

Sidecar loaded:

The sidecar is added automatically by our CDK deployment because we enabled AWS ECS Service Connect for our services. As a result, the server application is now discoverable by the other applications running in the cluster. The task configuration shows on which endpoint.

Service Connect configuration:

We can see that the namespace used is my.demo and the discovery name is server. Within AWS ECS Service Connect, services can reach each other using the http://

As I’m not enabling the internet-facing load balancer on my services, for demo purposes I’ve simply extended the health check of the Express application with a call to the other service within the cluster. The health check of the client application reaches out to the server application, and vice versa. This keeps the demo as simple as possible.
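The peer call inside such a health check could look roughly like the sketch below. It assumes the peer's Service Connect URL is passed in by the caller (for example from a hypothetical `PEER_URL` environment variable) and that the runtime provides `fetch` (Node.js 18+). `checkPeer` and its result shape are illustrative names, not taken from the demo application. The Express wiring is left out so the logic can be exercised with a fake `fetch`.

```typescript
// Minimal shape of the response fields the check needs; the global fetch Response satisfies it.
interface PeerResponse {
  ok: boolean;
  status: number;
  text(): Promise<string>;
}
type FetchLike = (url: string) => Promise<PeerResponse>;

interface PeerHealth {
  healthy: boolean;
  detail: string;
}

// Calls the peer service and reports healthy only if it answers with a 2xx status in time.
// The timeout matters: a missing security group ingress rule makes the request hang
// rather than fail fast.
async function checkPeer(peerUrl: string, fetchFn: FetchLike, timeoutMs = 2000): Promise<PeerHealth> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<never>((_, reject) => {
    timer = setTimeout(() => reject(new Error(`timed out after ${timeoutMs} ms`)), timeoutMs);
  });
  try {
    const res = await Promise.race([fetchFn(peerUrl), timeout]);
    const body = await res.text();
    return res.ok
      ? { healthy: true, detail: body }
      : { healthy: false, detail: `peer returned HTTP ${res.status}` };
  } catch (err) {
    return { healthy: false, detail: err instanceof Error ? err.message : String(err) };
  } finally {
    clearTimeout(timer);
  }
}
```

In the Express app, a health route could call `checkPeer(peerUrl, fetch)` and answer with 200 or 503 depending on `healthy`. The route path and status codes are assumptions for illustration.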

Health check Client

Let’s check the logs written by the Express application. We can see the call to the server application and the hello message returned by it. The connection actually works!

Takeaways

AWS ECS Service Connect is an easy way to set up load-balanced connectivity between the different services running inside a cluster.

As long as the services are bound to the same AWS Cloud Map namespace, they should be discoverable by all services using AWS ECS Service Connect.

Be aware that the proxy container checks the endpoints announced in AWS Cloud Map when the application starts. Service endpoints added afterwards are not discovered by proxies that are already running; only tasks started later, for example after redeploying the client service, pick them up.
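If a client service has to pick up endpoints that were registered after its tasks started, one option is to force a new deployment, so that fresh tasks with fresh proxies replace the running ones. The sketch below uses the AWS SDK for JavaScript v3 (`@aws-sdk/client-ecs`). The helper names and the cluster and service names in the usage comment are hypothetical. The demo cluster's physical name is generated by CDK, so you would look it up first.

```typescript
import { ECSClient, UpdateServiceCommand } from "@aws-sdk/client-ecs";

// Builds a request that replaces all running tasks of a service with new ones.
// Each new task starts its own Service Connect proxy.
export function buildRedeployCommand(cluster: string, service: string): UpdateServiceCommand {
  return new UpdateServiceCommand({ cluster, service, forceNewDeployment: true });
}

// Sends the request; needs AWS credentials and a region in the environment.
export async function redeploy(cluster: string, service: string, client = new ECSClient({})): Promise<void> {
  const result = await client.send(buildRedeployCommand(cluster, service));
  console.log(`new deployment started for ${result.service?.serviceName}`);
}

// Usage (hypothetical names):
// await redeploy("my-demo-cluster", "ClientFargateService");
```

The equivalent AWS CLI call is `aws ecs update-service` with the `--force-new-deployment` flag.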

Because you’re still working with ECS, remember to add the proper ingress rules to the security group of your service. If you don’t allow inbound traffic on port 8080 from the security group of the calling service, requests to the endpoint will time out.
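In CDK, such a rule can be expressed through the `connections` property that ECS services expose. The sketch below places two plain `FargateService`s in one self-contained stack so that it synthesizes on its own. The stack, construct IDs and container image are hypothetical. In the two-stack layout used in this article, you would instead have to pass one service's security group to the other stack.

```typescript
import * as cdk from "aws-cdk-lib";
import * as ec2 from "aws-cdk-lib/aws-ec2";
import * as ecs from "aws-cdk-lib/aws-ecs";

// Hypothetical single-stack setup: a client and a server service in one cluster.
export function buildDemoStack(app: cdk.App): cdk.Stack {
  const stack = new cdk.Stack(app, "IngressDemoStack");
  const vpc = new ec2.Vpc(stack, "Vpc", { maxAzs: 2 });
  const cluster = new ecs.Cluster(stack, "Cluster", { vpc });

  const makeService = (name: string): ecs.FargateService => {
    const taskDefinition = new ecs.FargateTaskDefinition(stack, `${name}TaskDef`);
    taskDefinition.addContainer(`${name}Container`, {
      // Placeholder image name; any image listening on 8080 would do
      image: ecs.ContainerImage.fromRegistry("my-registry/express-service:latest"),
      portMappings: [{ containerPort: 8080 }],
    });
    return new ecs.FargateService(stack, `${name}Service`, { cluster, taskDefinition });
  };

  const server = makeService("Server");
  const client = makeService("Client");

  // Allow each service's security group to reach the other on port 8080,
  // since in the demo each health check calls the other service.
  server.connections.allowFrom(client, ec2.Port.tcp(8080), "Client to server on 8080");
  client.connections.allowFrom(server, ec2.Port.tcp(8080), "Server to client on 8080");

  return stack;
}
```

Because the peer is a security group, CDK emits each rule as a separate `AWS::EC2::SecurityGroupIngress` resource rather than an inline rule.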
