AWS Lambda is a serverless, event-driven compute service. Serverless does not mean there are no servers; it means you don’t provision or manage them, because AWS does that job for you. Event-driven means your function runs in response to events, and events are how services trigger and communicate with each other. When using Lambda you just write the function and AWS manages the rest.

Lambda supports many programming languages, including Python, Node.js, Java, C# and Go, so you can pick the one you prefer.

Amazon S3 is a cloud object storage service. S3 lets you store objects in what it calls “buckets”. A bucket is like a directory. Each bucket is created in a particular region, but bucket names must be globally unique. When I say it is *like* a directory, I mean it: inside a bucket there are no real folders, only a logical arrangement that looks like folders because parts of an object’s name are separated by “/”. Objects (files) in a bucket are always referenced by a key, and the key is the object’s full path within the bucket.
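To make the idea of a key concrete, here is a minimal sketch in plain Python with no AWS calls. The helper names `split_s3_path` and `key_prefix` are hypothetical, made up for this illustration; they are not part of boto3 or any AWS library.

```python
def split_s3_path(path):
    """Split 'bucket/some/key' into (bucket, key).

    Hypothetical helper for illustration only; not part of boto3.
    """
    bucket, _, key = path.partition("/")
    return bucket, key


def key_prefix(key):
    """Return the "folder" part of a key, which is only a string prefix."""
    prefix, sep, _ = key.rpartition("/")
    return prefix + sep


bucket, key = split_s3_path("mybucket1/source/script.py")
print(bucket)           # mybucket1
print(key)              # source/script.py
print(key_prefix(key))  # source/
```

The “folder” `source/` is nothing more than the beginning of the key string, which is why copying a file to another “folder” simply means writing it under a different key.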

This is a simple activity you can try in AWS using two services: Lambda and S3. As the first task, let’s copy a file within the same S3 bucket. The code is simple.

Create the S3 bucket and add an object. I assume that you have an object called “script.py” at the following source path. The object key here is “source/script.py”; the bucket name “mybucket1” is not part of the key.

mybucket1/source/script.py

You want the destination path to be

mybucket1/destination/script.py

Create a Lambda function in the same region as the bucket.

    import boto3

    def lambda_handler(event, context):
        # Create the connection to the S3 resource
        s3 = boto3.resource('s3')

        # Declare the source object to be copied
        copy_source = {'Bucket': 'mybucket1', 'Key': 'source/script.py'}

        # The bucket the copy is written to (the same bucket in this task)
        bucket = s3.Bucket('mybucket1')

        # Copy the object to the destination key
        bucket.copy(copy_source, 'destination/script.py')

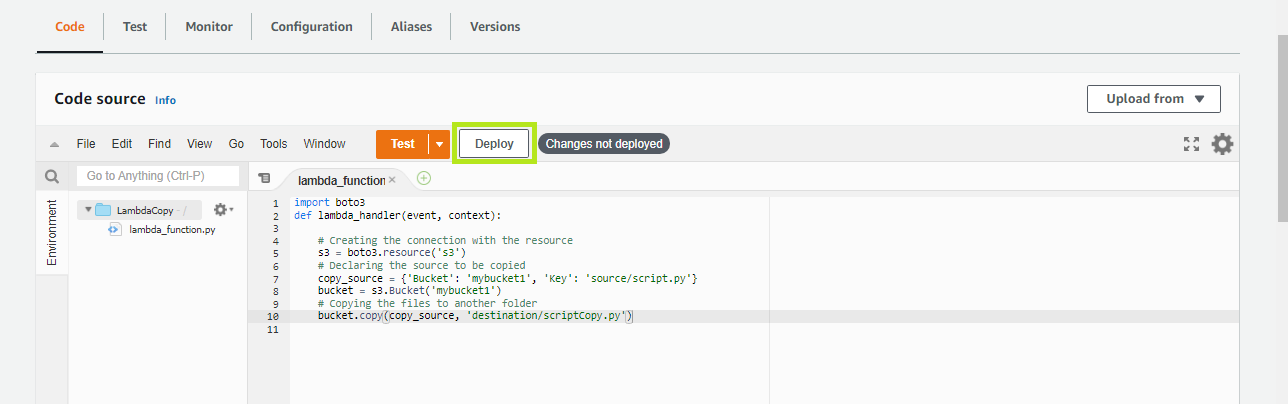

Paste the code above into the function’s code editor and deploy it.

Save and Deploy Lambda Function

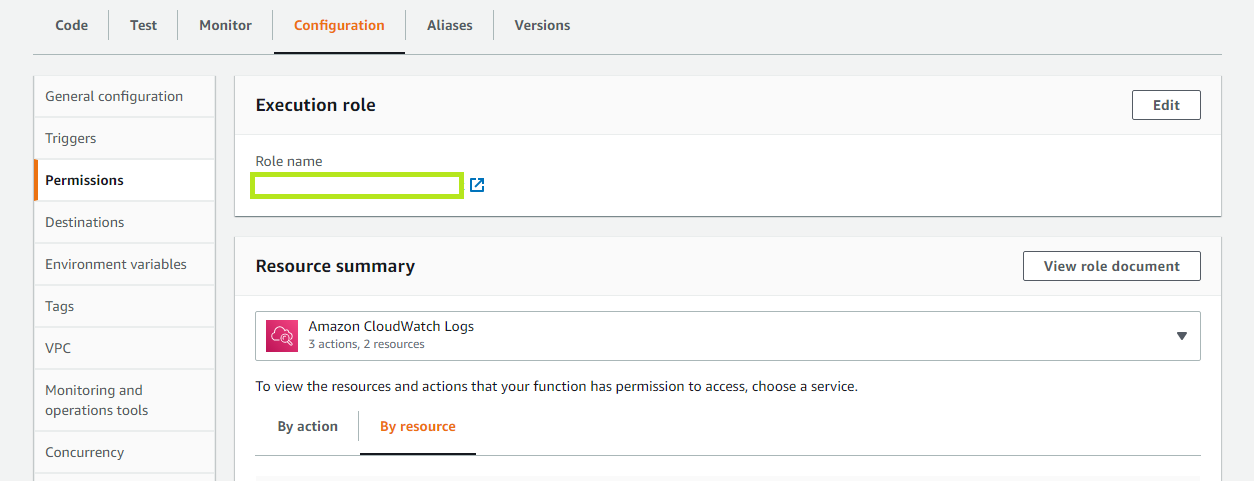

When you create a Lambda function, it gets an execution role with basic permissions (unless you changed the defaults). Before you test the function, make sure this IAM role has the following permissions. Go to Configuration > Permissions and open the execution role.

IAM Role Lambda Function

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "VisualEditor0",
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:GetBucketLocation"
                ],
                "Resource": [
                    "arn:aws:s3:::mybucket1/*",
                    "arn:aws:s3:::mybucket1"
                ]
            },
            {
                "Sid": "VisualEditor1",
                "Effect": "Allow",
                "Action": [
                    "s3-object-lambda:GetObject",
                    "s3-object-lambda:PutObject"
                ],
                "Resource": "arn:aws:s3-object-lambda:Region:AccountID:accesspoint/*"
            }
        ]
    }

As the second task, let’s copy a file from one S3 bucket to another. The code is simple.

You only have to update one line, so that the destination bucket is the second bucket:

    bucket = s3.Bucket('mybucket2')
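Put together, the updated function might look like the sketch below. It is one possible arrangement, not the only one: the `copy_object` helper and the constants are hypothetical names introduced here, and the destination key `destination/script.py` is an assumption, so use whatever key you want in the second bucket. The S3 resource is passed in as a parameter so the copy logic can be tried without AWS access.

```python
SOURCE_BUCKET = "mybucket1"
SOURCE_KEY = "source/script.py"
DEST_BUCKET = "mybucket2"            # the one value that changes for this task
DEST_KEY = "destination/script.py"   # assumed destination key


def copy_object(s3, source_bucket, source_key, dest_bucket, dest_key):
    """Copy one object using the boto3 S3 resource passed in as `s3`."""
    copy_source = {"Bucket": source_bucket, "Key": source_key}
    # copy() is called on the destination bucket and reads from copy_source
    s3.Bucket(dest_bucket).copy(copy_source, dest_key)
    return {
        "copied_from": f"{source_bucket}/{source_key}",
        "copied_to": f"{dest_bucket}/{dest_key}",
    }


def lambda_handler(event, context):
    # Imported here so copy_object can be exercised without boto3 installed
    import boto3

    s3 = boto3.resource("s3")
    return copy_object(s3, SOURCE_BUCKET, SOURCE_KEY, DEST_BUCKET, DEST_KEY)
```

Note that the source bucket stays in `copy_source`, while the bucket you call `copy()` on is the destination.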

Grant the execution role the same permissions on the other bucket too:

    {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "VisualEditor0",
                "Effect": "Allow",
                "Action": [
                    "s3:PutObject",
                    "s3:GetObject",
                    "s3:GetBucketLocation"
                ],
                "Resource": [
                    "arn:aws:s3:::mybucket1/*",
                    "arn:aws:s3:::mybucket1",
                    "arn:aws:s3:::mybucket2/*",
                    "arn:aws:s3:::mybucket2"
                ]
            },
            {
                "Sid": "VisualEditor1",
                "Effect": "Allow",
                "Action": [
                    "s3-object-lambda:GetObject",
                    "s3-object-lambda:PutObject"
                ],
                "Resource": "arn:aws:s3-object-lambda:Region:AccountID:accesspoint/*"
            }
        ]
    }
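Each bucket appears twice in the `Resource` list: once as `arn:aws:s3:::bucket/*` for the objects inside it and once as `arn:aws:s3:::bucket` for the bucket itself. If you work with more buckets, it is easy to forget one of the two. The sketch below generates only the S3 statement of the policy for a list of bucket names; `s3_copy_statement` is a hypothetical helper written for this post, not an AWS API, and the `Sid` is left out because it is optional.

```python
import json


def s3_copy_statement(bucket_names):
    """Build the S3 statement of the policy for the given buckets."""
    resources = []
    for name in bucket_names:
        resources.append(f"arn:aws:s3:::{name}/*")  # objects in the bucket
        resources.append(f"arn:aws:s3:::{name}")    # the bucket itself
    return {
        "Effect": "Allow",
        "Action": ["s3:PutObject", "s3:GetObject", "s3:GetBucketLocation"],
        "Resource": resources,
    }


policy = {
    "Version": "2012-10-17",
    "Statement": [s3_copy_statement(["mybucket1", "mybucket2"])],
}

# Print JSON that can be pasted into the IAM policy editor
print(json.dumps(policy, indent=2))
```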

That’s all! Hope this helps.

Thank you for reading.
