In today’s fast-moving technology landscape, Conversational AI has become a key tool for businesses that want to improve user experiences and streamline communication. One of the most capable services in this space is Amazon Lex, which lets developers build chatbots that understand and respond to natural language. Now imagine taking this a step further by connecting your Amazon Lex chatbot to Anthropic’s Claude large language model (LLM) through Amazon Bedrock. In this article, we’ll walk through an easy-to-follow integration of Bedrock Claude with Amazon Lex, combining Lex’s intent handling with Claude’s ability to answer open-ended questions, so your chatbot can respond well beyond the intents you define.

Building an Amazon Lex chatbot backed by the Bedrock Claude LLM involves three AWS services, each with a distinct role. Here’s an overview:

1. Amazon Lex:

Amazon Lex is a fully managed AWS service for building conversational interfaces, or chatbots. It uses automatic speech recognition (ASR) and natural language understanding (NLU) to interpret user input and respond conversationally. Lex is the foundation of the chatbot: it handles user interactions, matches input to the intents you define, and returns responses. In this setup, any input Lex cannot match to one of your intents is passed to Claude through the bot’s fallback intent.

2. Bedrock Claude LLM (Large Language Model):

Claude is a large language model developed by Anthropic and offered as a managed foundation model through Amazon Bedrock; this article uses Claude v2.1. It generates human-like text and is particularly good at nuanced language tasks. Claude does not replace Lex’s intent recognition here. Instead, it complements it: whenever a user’s input doesn’t match any of the bot’s intents, the question is sent to Claude, which writes a free-form answer. This lets the chatbot respond sensibly to a much wider range of questions than its predefined intents cover.
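In this article, Claude v2.1 is called with Bedrock’s text-completion request format, where the prompt is written as alternating Human and Assistant turns and must end with an Assistant turn for the model to answer. A minimal sketch of that formatting step is shown below; the helper name build_claude_prompt is hypothetical and not part of any AWS or Anthropic SDK.

```python
def build_claude_prompt(question: str) -> str:
    """Wrap a user question in Claude's Human/Assistant text-completion format.

    build_claude_prompt is a hypothetical helper for illustration only.
    """
    # Start with a Human turn and finish with an empty Assistant turn,
    # so the model's completion is the assistant's reply.
    return f"\n\nHuman: {question.strip()}\n\nAssistant:"


if __name__ == "__main__":
    # repr() makes the embedded newlines visible.
    print(repr(build_claude_prompt("Who are you?")))
```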

3. AWS Lambda:

AWS Lambda is a serverless computing service that lets you run code without provisioning or managing servers, and it scales the underlying compute automatically. Lambda functions can extend an Amazon Lex chatbot by performing backend tasks, connecting to databases, or running custom logic based on the user’s input. In this project, the Lambda function is the glue between Lex and Bedrock: Lex invokes it for the fallback intent, and the function calls Claude and returns the answer to Lex.

By combining these services, you get a chatbot that recognizes your defined intents with Amazon Lex and answers everything else in a more natural, free-form way using the Bedrock Claude model. AWS Lambda ties the two together and gives you a place to add any custom logic your chatbot needs.

Steps

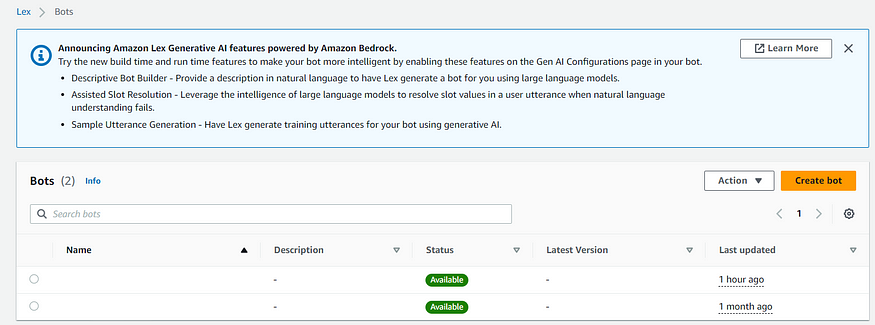

1. Create the Lex chatbot

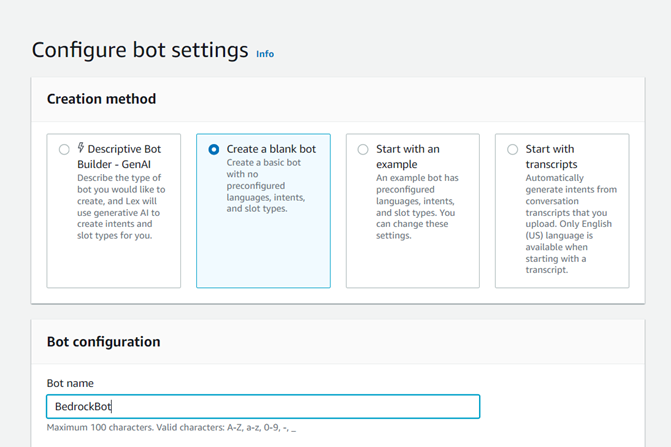

Go to the AWS console, navigate to the Lex service, and start by creating a new bot. Choose the “create a blank bot” option and name your bot; for instance, I named mine Bedrock Bot.

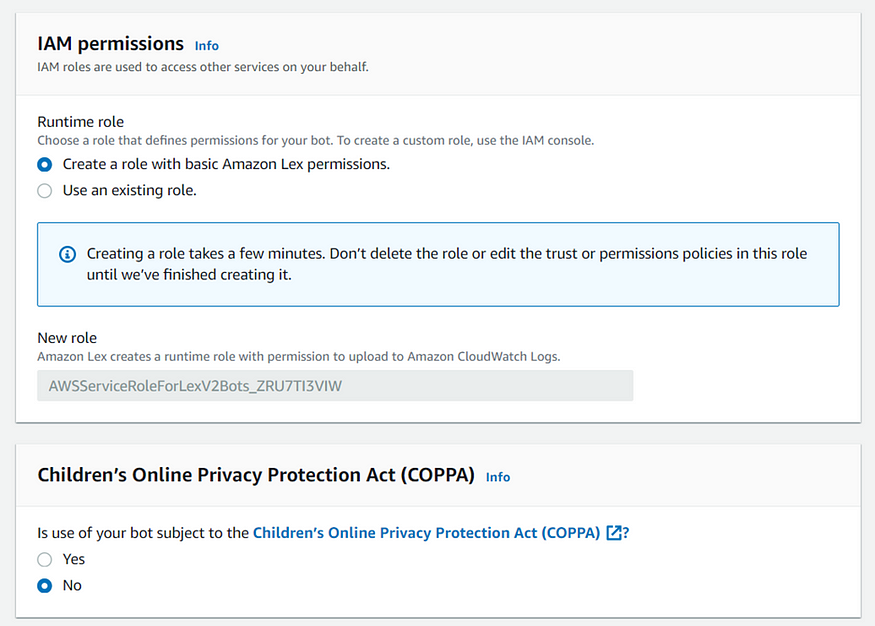

Next, choose to create a role with basic Amazon Lex permissions. For the COPPA (Children’s Online Privacy Protection Act) question, select No, since this bot is not directed at children.

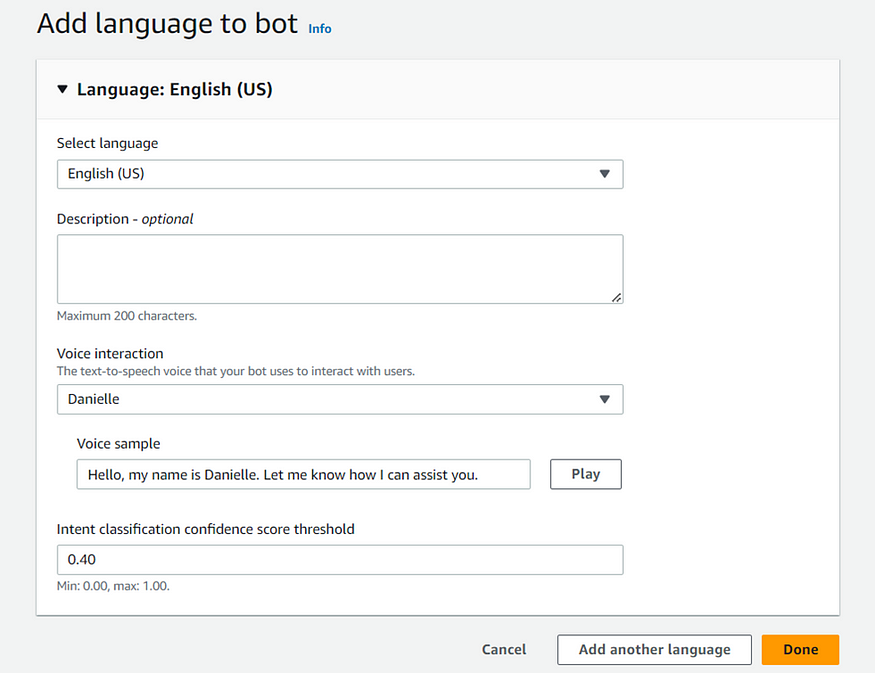

Then select the language the bot will use (for example, English (US)) and, if the bot will handle speech, a voice.
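If you would rather script this step, the console choices map onto the Lex V2 CreateBot API, exposed in boto3 as the lexv2-models client. The sketch below assumes an IAM role for Lex already exists and uses an assumed 300-second idle session timeout; the helper names are hypothetical. Answering No to the COPPA question corresponds to dataPrivacy.childDirected set to False. The language is added afterwards with a separate create_bot_locale call once the bot has finished creating.

```python
def create_bot_kwargs(bot_name: str, role_arn: str, idle_ttl_seconds: int = 300) -> dict:
    """Build arguments for lexv2-models create_bot (hypothetical helper)."""
    return {
        "botName": bot_name,
        "roleArn": role_arn,  # IAM role that Lex assumes (assumed to exist already)
        "dataPrivacy": {"childDirected": False},  # the "No" answer to the COPPA question
        "idleSessionTTLInSeconds": idle_ttl_seconds,  # assumed session timeout
    }


def create_blank_bot(lex_models_client, bot_name: str, role_arn: str) -> str:
    """Create a blank bot and return its botId.

    lex_models_client is expected to be boto3.client("lexv2-models").
    """
    response = lex_models_client.create_bot(**create_bot_kwargs(bot_name, role_arn))
    return response["botId"]


# Usage sketch (requires AWS credentials; the role ARN is a placeholder):
#   bot_id = create_blank_bot(boto3.client("lexv2-models"), "BedrockBot",
#                             "arn:aws:iam::123456789012:role/your-lex-role")
```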

2. Create the Hello intent

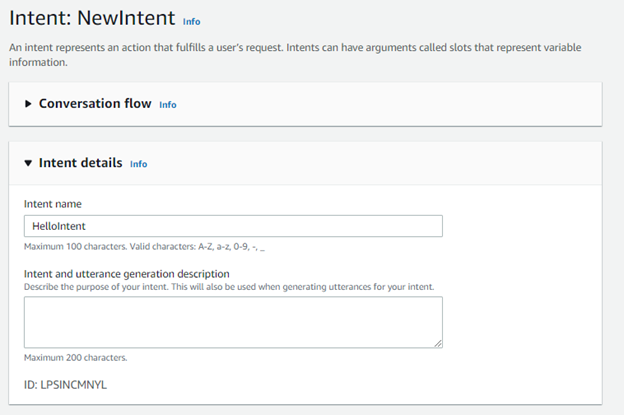

A newly created bot comes with two intents: an empty starter intent and the fallback intent, which runs whenever the user’s input doesn’t match any other intent.

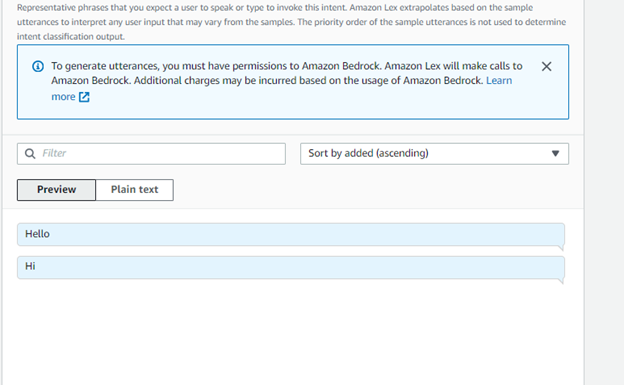

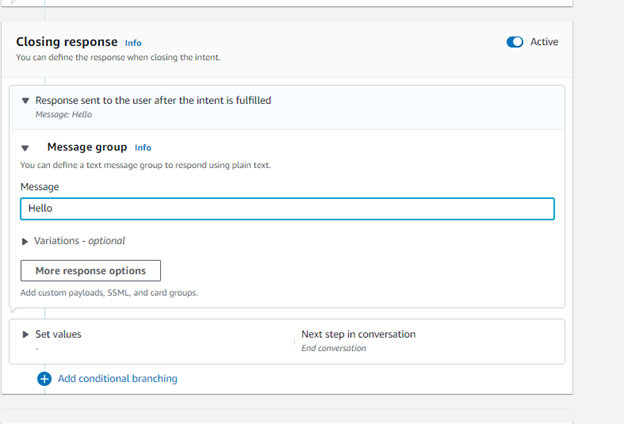

The bot needs at least one intent besides the fallback intent before it will build. Define a simple “hello” intent with greetings such as “hello” and “hi” as sample utterances, and set “hello” as the closing response.
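The same Hello intent can also be described in code. The sketch below only builds the request for the lexv2-models create_intent call, assuming the bot’s DRAFT version and an en_US locale; hello_intent_kwargs is a hypothetical helper, and you would pass its result to boto3.client("lexv2-models").create_intent(**kwargs).

```python
def hello_intent_kwargs(bot_id: str, locale_id: str = "en_US") -> dict:
    """Build arguments for lexv2-models create_intent (hypothetical helper)."""
    return {
        "intentName": "Hello",
        "botId": bot_id,
        "botVersion": "DRAFT",  # intents are edited on the DRAFT version
        "localeId": locale_id,  # assumed locale
        # Sample utterances Lex uses to recognize the intent.
        "sampleUtterances": [{"utterance": text} for text in ("hello", "hi")],
        # Closing response sent back when the intent is fulfilled.
        "intentClosingSetting": {
            "closingResponse": {
                "messageGroups": [
                    {"message": {"plainTextMessage": {"value": "hello"}}}
                ]
            },
            "active": True,
        },
    }
```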

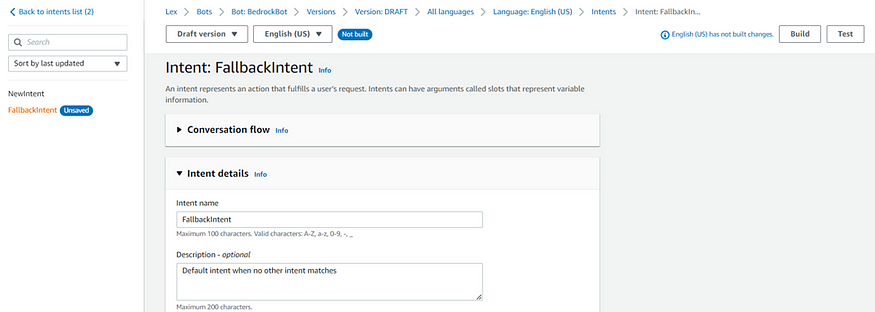

3. Configure the Fallback intent

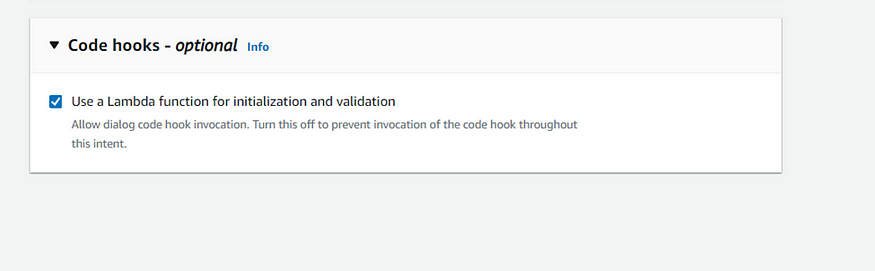

Next, configure the fallback intent to call a Lambda code hook, which in turn queries Anthropic’s Claude v2.1 model on Amazon Bedrock.

Enable the code hook in the Fallback Intent.

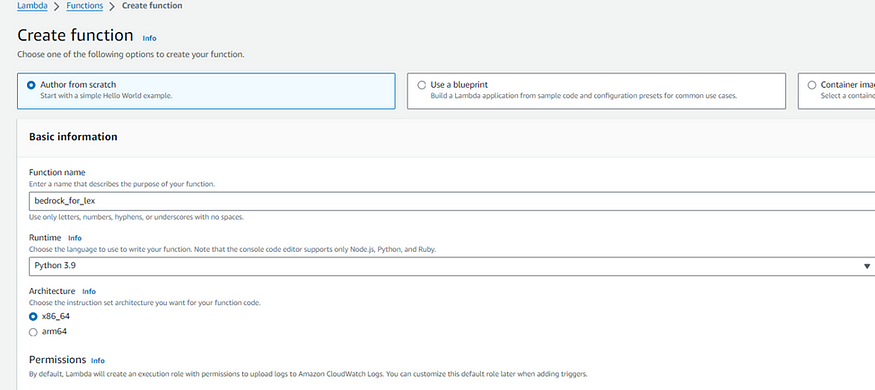

4. Create the Lambda function

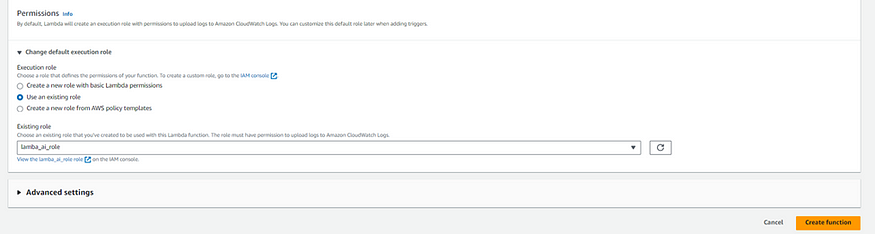

Now create the Lambda function that calls Claude v2.1 on Bedrock, using a Python runtime for the code below.

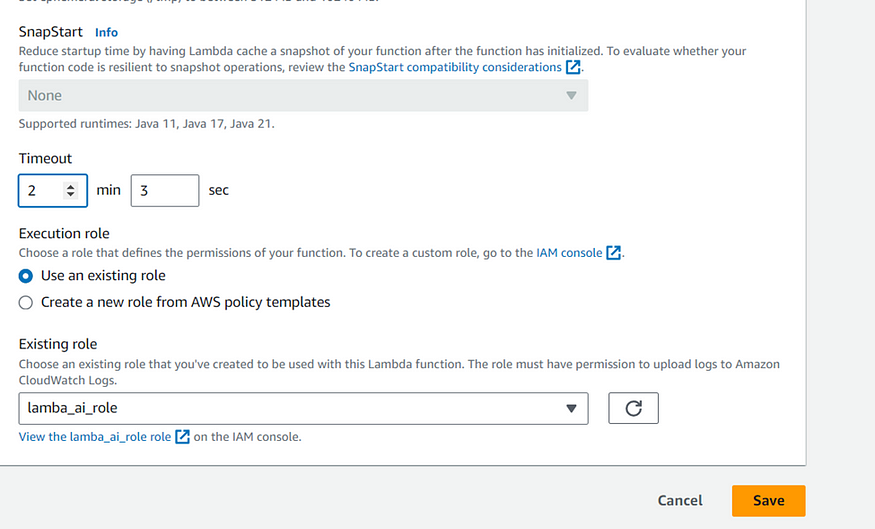

Remember to attach an execution role that allows the bedrock:InvokeModel action. You also need access to the Claude model enabled in the Amazon Bedrock console.
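For reference, a least-privilege policy for this role might look like the sketch below, assuming the us-west-2 region and the anthropic.claude-v2:1 model ID used in the Lambda code. It grants only bedrock:InvokeModel on that one foundation model; the role will typically also need the AWSLambdaBasicExecutionRole managed policy so the function can write logs to CloudWatch.

```python
import json

# Assumed to match the region and model used in the Lambda function.
REGION = "us-west-2"
MODEL_ID = "anthropic.claude-v2:1"


def bedrock_invoke_policy(region: str = REGION, model_id: str = MODEL_ID) -> dict:
    """IAM policy document allowing InvokeModel on a single foundation model."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": "bedrock:InvokeModel",
                # Foundation-model ARNs leave the account ID field empty.
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/{model_id}",
            }
        ],
    }


if __name__ == "__main__":
    # Paste the printed JSON into the IAM console's policy editor.
    print(json.dumps(bedrock_invoke_policy(), indent=2))
```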

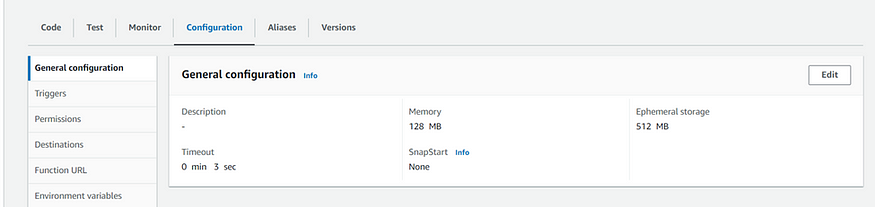

Also increase the function’s timeout: open Configuration, then General configuration, choose Edit, and raise the Timeout value. The default of 3 seconds is usually too short for a model call to return.
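The same change can be made with boto3’s update_function_configuration. This sketch only builds the arguments; the function name bedrock-lex-fallback and the 30-second value are assumptions, so use your own function name and a timeout that comfortably covers a Claude response.

```python
# Lambda accepts timeouts between 1 and 900 seconds; the default is 3.
MIN_TIMEOUT_SECONDS = 1
MAX_TIMEOUT_SECONDS = 900


def timeout_update_kwargs(function_name: str, timeout_seconds: int = 30) -> dict:
    """Build arguments for lambda update_function_configuration (hypothetical helper)."""
    if not MIN_TIMEOUT_SECONDS <= timeout_seconds <= MAX_TIMEOUT_SECONDS:
        raise ValueError("Lambda timeout must be between 1 and 900 seconds")
    return {"FunctionName": function_name, "Timeout": timeout_seconds}


# Usage sketch (requires AWS credentials):
#   boto3.client("lambda").update_function_configuration(
#       **timeout_update_kwargs("bedrock-lex-fallback"))
```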

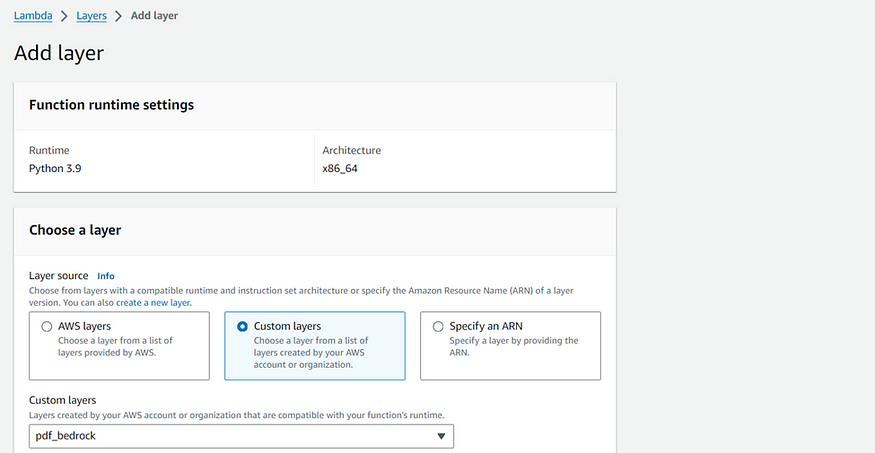

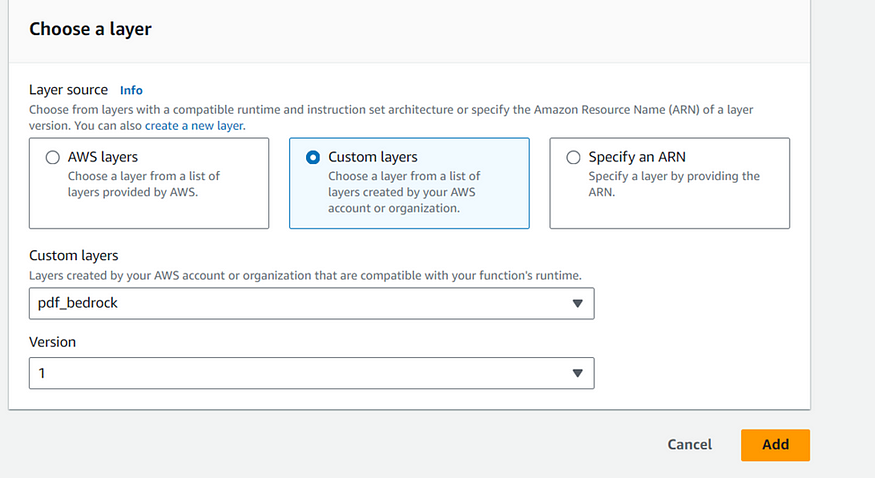

Next, add a layer that provides boto3 version 1.28.54 or later so the function can create a bedrock-runtime client; the boto3 version bundled with the Lambda runtime may be too old for Bedrock.
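To confirm the layer is actually in effect, you can log boto3.__version__ from inside the function and compare it numerically with 1.28.54. A minimal sketch, assuming plain X.Y.Z version strings; version_tuple and boto3_meets_minimum are hypothetical helper names.

```python
# Minimum boto3 version mentioned above for Bedrock access.
MINIMUM_BOTO3 = (1, 28, 54)


def version_tuple(version: str) -> tuple:
    """Turn "1.28.54" into (1, 28, 54) so versions compare numerically."""
    return tuple(int(part) for part in version.split(".")[:3])


def boto3_meets_minimum(version: str) -> bool:
    """True if a plain X.Y.Z version string is at least 1.28.54."""
    # Comparing the strings directly would rank "1.28.9" above "1.28.54".
    return version_tuple(version) >= MINIMUM_BOTO3


# Usage inside the Lambda function:
#   import boto3
#   print(boto3.__version__, boto3_meets_minimum(boto3.__version__))
```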

If you want to learn how to create a Lambda layer, see my Medium article about it: https://medium.com/aws-in-plain-english/easiest-way-to-create-lambda-layers-with-the-required-python-version-d205f59d51f6

The code for the Lambda function is given below.

```python
import json

import boto3


def create_bedrock_client():
    """
    Create and return a Bedrock Runtime client using boto3.

    Returns:
    - boto3.client: Bedrock Runtime client.
    """
    # Use a region where Bedrock is available and Claude access is enabled.
    bedrock = boto3.client(
        service_name="bedrock-runtime",
        region_name="us-west-2",
    )
    return bedrock


def query_action(question, bedrock):
    """
    Query the Bedrock Claude model with a given user question.

    Args:
    - question (str): User's input/question.
    - bedrock (boto3.client): Bedrock Runtime client.

    Returns:
    - dict: Result from the Bedrock model.
    """
    # Claude's text-completion format: a Human turn, then an Assistant turn
    # that the prompt must end with.
    prompt = f"\n\nHuman: {question}\n\nAssistant:"

    body = json.dumps(
        {
            "prompt": prompt,
            "max_tokens_to_sample": 300,
            "temperature": 1,
            "top_k": 250,
            "top_p": 0.99,
            "stop_sequences": ["\n\nHuman:"],
            "anthropic_version": "bedrock-2023-05-31",
        }
    )

    modelId = "anthropic.claude-v2:1"
    contentType = "application/json"
    accept = "application/json"

    response = bedrock.invoke_model(
        body=body, modelId=modelId, accept=accept, contentType=contentType
    )
    # The response body is a stream; read it and parse the JSON payload.
    result = json.loads(response.get("body").read())
    print(result)  # Logged to CloudWatch for debugging.
    return result


def handle_fallback(event):
    """
    Handle the FallbackIntent by querying the Bedrock model with the user's input.

    Args:
    - event (dict): AWS Lambda event containing information about the Lex session.

    Returns:
    - dict: Lex response including the Bedrock model's completion.
    """
    slots = event["sessionState"]["intent"]["slots"]
    intent = event["sessionState"]["intent"]["name"]
    bedrock = create_bedrock_client()
    # inputTranscript holds the text (or transcribed speech) the user sent.
    question = event["inputTranscript"]
    result = query_action(question, bedrock)
    # sessionAttributes may be absent, so fall back to an empty dict.
    session_attributes = event["sessionState"].get("sessionAttributes", {})

    response = {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": {"name": intent, "slots": slots, "state": "Fulfilled"},
            "sessionAttributes": session_attributes,
        },
        "messages": [
            # Claude's completions often start with a space, so strip it.
            {"contentType": "PlainText", "content": result["completion"].strip()},
        ],
    }
    return response


def lambda_handler(event, context):
    """
    AWS Lambda handler function.

    Args:
    - event (dict): AWS Lambda event.
    - context (object): AWS Lambda context.

    Returns:
    - dict: Lex response.
    """
    intent = event["sessionState"]["intent"]["name"]

    if intent == "FallbackIntent":
        return handle_fallback(event)

    # Only FallbackIntent uses this code hook; for any other intent, let Lex
    # decide the next step instead of returning None.
    return {
        "sessionState": {
            "dialogAction": {"type": "Delegate"},
            "intent": event["sessionState"]["intent"],
            "sessionAttributes": event["sessionState"].get("sessionAttributes", {}),
        }
    }
```

This code defines four functions:

- create_bedrock_client: Creates a client for the Bedrock Runtime service using boto3.

- query_action: Queries the Bedrock Claude model with a given user question and returns the result.

- handle_fallback: Handles the FallbackIntent by querying the Bedrock model and constructs a Lex response.

- lambda_handler: The main Lambda handler function, which determines whether to handle the FallbackIntent based on the incoming intent.

Now, let’s go through the process when the Lambda function is triggered by an event, such as a user interacting with a Lex chatbot:

- The lambda_handler function is the entry point for the AWS Lambda function. It checks the intent in the Lex session event, and if the intent is “FallbackIntent”, it calls the handle_fallback function.
- Inside the handle_fallback function, it extracts information about the Lex session, including the slots, the intent name, and the user’s input.
- It then creates a Bedrock Runtime client using create_bedrock_client.
- The user’s input is passed to the query_action function, which sends a request to the Bedrock Claude model and retrieves the response.
- The Lex response is constructed for the FallbackIntent, and the completion from the Bedrock model is included in the response.
- The final Lex response is returned from the handle_fallback function.

In short, this code integrates the Bedrock Claude model into an AWS Lambda function that serves as the fulfillment code hook for the Lex chatbot’s FallbackIntent. Claude generates a response to whatever the user typed, so the bot can handle questions far beyond its predefined intents. Note that each question is sent to Claude on its own, without the earlier turns of the conversation.
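To try the function from the Lambda console before wiring it to Lex, you can use a test event that contains only the fields this handler reads. The sketch below builds such an event; make_lex_event is a hypothetical helper, and the event is a trimmed-down assumption of the Lex V2 format, since real events carry more fields (bot details, sessionId, invocationSource, and so on).

```python
import json


def make_lex_event(transcript: str, intent_name: str = "FallbackIntent") -> dict:
    """Build a minimal Lex V2-style test event (hypothetical helper).

    Only the fields read by lambda_handler and handle_fallback are included.
    """
    return {
        "inputTranscript": transcript,  # what the user typed or said
        "sessionState": {
            "intent": {
                "name": intent_name,
                "slots": {},
                "state": "ReadyForFulfillment",
            },
            "sessionAttributes": {},
        },
    }


if __name__ == "__main__":
    # Paste the printed JSON into the Lambda console's Test tab as a test event.
    print(json.dumps(make_lex_event("Who are you?"), indent=2))
```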

5. Link the Lambda function to the Lex chatbot

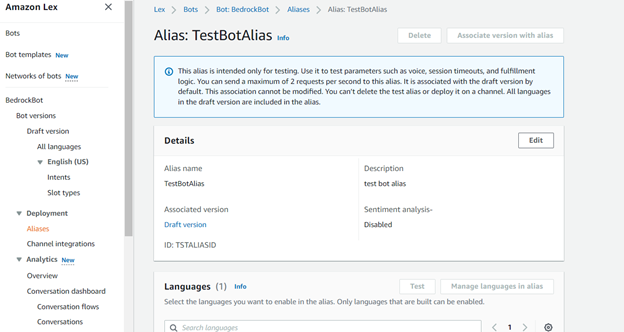

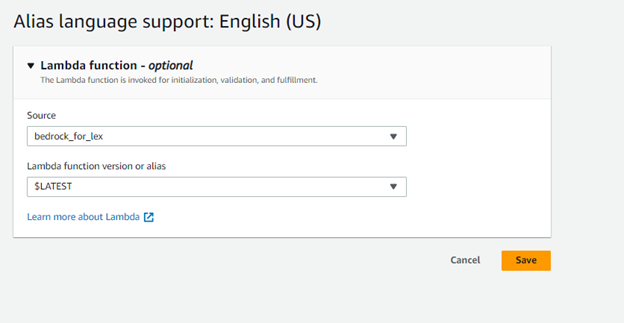

To link your chatbot with the Lambda function, open Aliases in the bot’s menu and select the bot’s alias (TestBotAlias by default). Under Languages, choose the bot’s language, select your Bedrock Lambda function as the source, and save. You’re now ready for the next step.
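Lex also needs permission to invoke the function. The console usually takes care of this when you select the function on the alias, but if you wire things up with scripts instead, a sketch of the corresponding lambda add_permission arguments follows. The function name, account ID, bot ID, and alias ID are placeholders, and lex_invoke_permission_kwargs is a hypothetical helper; lexv2.amazonaws.com is the Lex V2 service principal, and the SourceArn limits the permission to one bot alias.

```python
def lex_invoke_permission_kwargs(function_name, region, account_id, bot_id, alias_id):
    """Build arguments for lambda add_permission so a Lex V2 alias can call the function.

    Hypothetical helper; all IDs are supplied by the caller.
    """
    return {
        "FunctionName": function_name,
        "StatementId": f"lex-{bot_id}-{alias_id}",  # any unique statement name
        "Action": "lambda:InvokeFunction",
        "Principal": "lexv2.amazonaws.com",  # the Lex V2 service principal
        # Limit the permission to this one bot alias.
        "SourceArn": f"arn:aws:lex:{region}:{account_id}:bot-alias/{bot_id}/{alias_id}",
    }


# Usage sketch (requires AWS credentials; IDs are placeholders):
#   boto3.client("lambda").add_permission(**lex_invoke_permission_kwargs(
#       "bedrock-lex-fallback", "us-west-2", "123456789012", "YOUR_BOT_ID", "YOUR_ALIAS_ID"))
```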

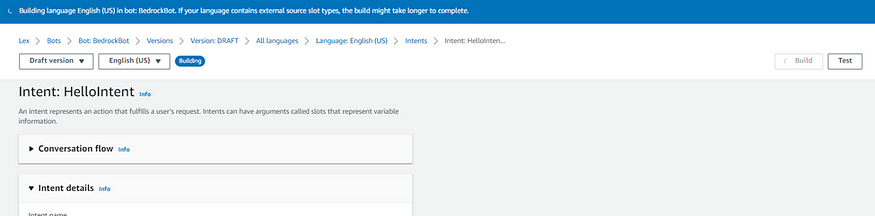

6. Build

Click “Build” to build the bot, then run a few tests to confirm that Lex, Lambda, and Bedrock are working together.
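Building is asynchronous, so a script that builds the bot has to wait for the locale to finish. The sketch below polls a status callable until it reports “Built”; wait_for_locale_build is a hypothetical helper, and the usage comment assumes the lexv2-models build_bot_locale and describe_bot_locale calls with a DRAFT version and an en_US locale.

```python
import time


def wait_for_locale_build(get_status, poll_seconds=5.0, max_polls=60, sleep=time.sleep):
    """Poll until a bot locale reports "Built" (hypothetical helper).

    get_status is any zero-argument callable returning the current
    botLocaleStatus string, for example from describe_bot_locale.
    """
    for _ in range(max_polls):
        status = get_status()
        if status == "Built":
            return status
        if status == "Failed":
            raise RuntimeError("Bot locale build failed; check the build errors in the Lex console")
        sleep(poll_seconds)
    raise TimeoutError(f"Locale not built after {max_polls} polls")


# Usage sketch (requires AWS credentials; bot_id is a placeholder):
#   client = boto3.client("lexv2-models")
#   client.build_bot_locale(botId=bot_id, botVersion="DRAFT", localeId="en_US")
#   wait_for_locale_build(lambda: client.describe_bot_locale(
#       botId=bot_id, botVersion="DRAFT", localeId="en_US")["botLocaleStatus"])
```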

7. Test

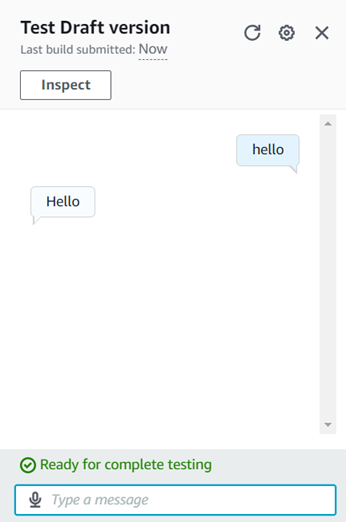

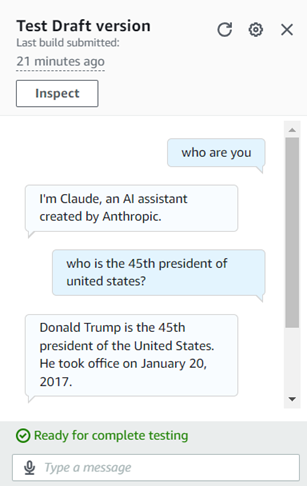

Now let’s test it.

First lets test the hello intent. It’s functioning well.

Then lets test the fallback intent. For that we need to input something other than hello. Lets ask the bot who it is.

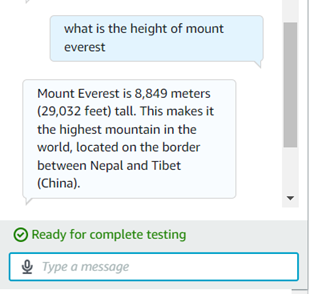

Then ask some General knowledge stuff.
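You can run the same checks outside the console with the Lex V2 runtime API. The sketch below assumes a runtime client created with boto3.client("lexv2-runtime") and placeholder bot and alias IDs; ask_bot is a hypothetical helper that sends one message with recognize_text and joins the text messages Lex returns, whether they come from the Hello intent’s closing response or from Claude via the fallback intent.

```python
import uuid


def ask_bot(runtime_client, bot_id, alias_id, text, locale_id="en_US", session_id=None):
    """Send one message to a Lex V2 bot and return its text reply (hypothetical helper).

    runtime_client is expected to be boto3.client("lexv2-runtime").
    """
    response = runtime_client.recognize_text(
        botId=bot_id,
        botAliasId=alias_id,
        localeId=locale_id,
        # Reuse a session_id to continue one conversation; otherwise start a new one.
        sessionId=session_id or str(uuid.uuid4()),
        text=text,
    )
    # Keep only messages that carry text (image response cards have no "content").
    return "\n".join(m["content"] for m in response.get("messages", []) if "content" in m)


# Usage sketch (requires AWS credentials; IDs are placeholders):
#   runtime = boto3.client("lexv2-runtime")
#   print(ask_bot(runtime, "YOUR_BOT_ID", "YOUR_ALIAS_ID", "hello"))
#   print(ask_bot(runtime, "YOUR_BOT_ID", "YOUR_ALIAS_ID", "Who are you?"))
```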

Congratulations, you’ve successfully created a Lex chatbot that hands anything its intents can’t handle to Claude on Amazon Bedrock.
