Introduction

In the world of data management, importing data from various sources into a PostgreSQL database is a fundamental task. In this article, we’ll explore how to accomplish this efficiently using Python.

Prerequisites

Before we dive in, ensure you have the following prerequisites in place:

- Python installed on your system.

- Jupyter Notebook installed if you prefer working with notebooks (optional but recommended).

- PostgreSQL installed and running, with necessary credentials.

- Python libraries:

psycopg2,pandas, andsqlalchemy, which you can install usingpip.

Step 1: Setting Up Your Environment

In this step, we start by importing the necessary Python libraries, including psycopg2 for connecting to the PostgreSQL database, pandas for data manipulation, and sqlalchemy for creating the database engine. We also define the connection parameters required to connect to the PostgreSQL database, such as the host, database name, username, and password.

# Library

import psycopg2

import pandas as pd

from sqlalchemy import create_engine, text

# Define the database connection parameters

db_params = {

'host': 'localhost',

'database': 'postgres',

'user': 'postgres',

'password': 'admin'

}

💡 Speed up your blog creation with DifferAI.

Available for free exclusively on the free and open blogging platform, Differ.

Step 2: Creating the PostgreSQL Database

Once we’ve set up the environment, we establish a connection to the PostgreSQL server using the psycopg2 library. With a connection established, we create a cursor object, which allows us to execute SQL queries. We then proceed to create a new database named 'soccer.' After creating the database, we commit the changes and close the connection to the default PostgreSQL database, leaving us connected to the newly created 'soccer' database.

# Create a connection to the PostgreSQL server

conn = psycopg2.connect(

host=db_params['host'],

database=db_params['database'],

user=db_params['user'],

password=db_params['password']

)

# Create a cursor object

cur = conn.cursor()

# Set automatic commit to be true, so that each action is committed without having to call conn.committ() after each command

conn.set_session(autocommit=True)

# Create the 'soccer' database

cur.execute("CREATE DATABASE soccer")

# Commit the changes and close the connection to the default database

conn.commit()

cur.close()

conn.close()

Step 3: Loading and Displaying CSV Data

In this step, we focus on loading and displaying data from CSV files. We’ve defined the file paths for our CSV files in advance. We loop through each CSV file, read its contents into a Pandas DataFrame, and display the first few rows of the DataFrame for a quick data check. This step helps us ensure that we are working with the correct data before importing it into the PostgreSQL database.

# Connect to the 'soccer' database

db_params['database'] = 'soccer'

engine = create_engine(f'postgresql://{db_params["user"]}:{db_params["password"]}@{db_params["host"]}/{db_params["database"]}')

# Define the file paths for your CSV files

csv_files = {

'team': 'D:\Code\DE\soccer\\team.csv',

'match': 'D:\Code\DE\soccer\match.csv',

'country': 'D:\Code\DE\soccer\country.csv',

'league': 'D:\Code\DE\soccer\league.csv'

}

# Load and display the contents of each CSV file to check

for table_name, file_path in csv_files.items():

print(f"Contents of '{table_name}' CSV file:")

df = pd.read_csv(file_path)

print(df.head(2)) # Display the first few rows of the DataFrame

print("\n")

Step 4: Importing CSV Data into PostgreSQL

Now comes the exciting part — importing your CSV data into PostgreSQL. We’ll guide you through this process using the to_sql method in pandas.

# Loop through the CSV files and import them into PostgreSQL

for table_name, file_path in csv_files.items():

df = pd.read_csv(file_path)

df.to_sql(table_name, engine, if_exists='replace', index=False)

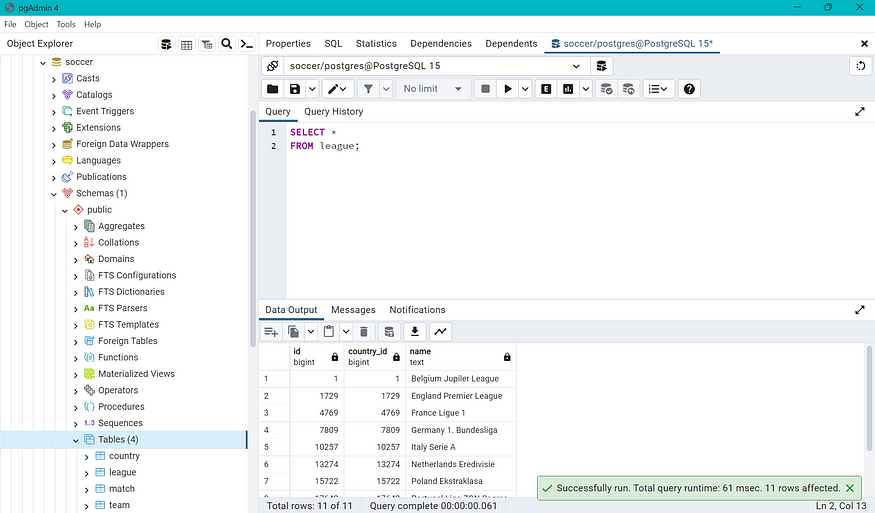

Let’s Check!

Conclusion

In this tutorial, you’ve learned how to seamlessly import CSV data into a PostgreSQL database using Python. The ability to efficiently handle data is a valuable skill for various industries, from data analysis to web development. Python, with its rich ecosystem of libraries, makes it easier than ever to manage your data.

Comments

Loading comments…