In this post, I am going to explore the EKS VPC networking setup. I have created the EKS cluster using eksctl with default settings.

Setting up Amazon EKS Cluster in the fastest and easiest way !

VPC Layout

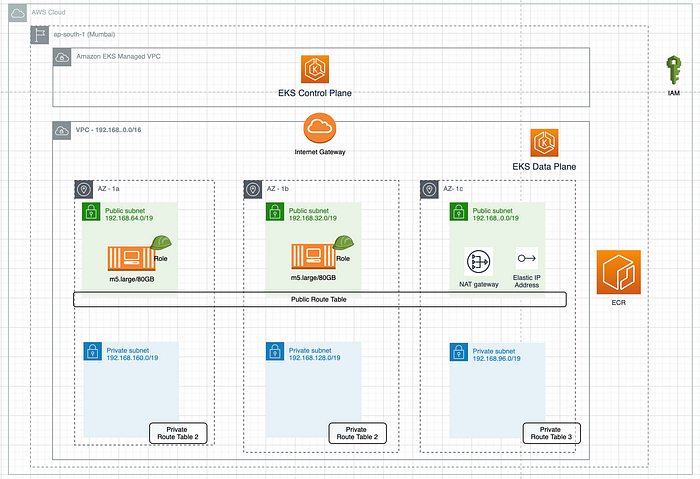

The following is the VPC layout of EKS.

The EKS control plane runs in a VPC managed by Amazon EKS. The EKS data plane runs in a customer-managed VPC, and the worker nodes run in this VPC.

EKS VPC Layout

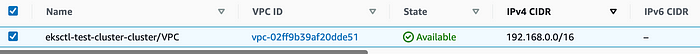

The VPC is created with the CIDR block 192.168.0.0/16.

EKS VPC IP Range

Three public and three private subnets are created, spread across three different AZs. Spreading the subnets this way lets workloads keep running if a single AZ fails.

- 192.168.0.0/19: Public Subnet in 1c

- 192.168.32.0/19: Public Subnet in 1b

- 192.168.64.0/19: Public Subnet in 1a

- 192.168.96.0/19: Private Subnet in 1c

- 192.168.128.0/19: Private Subnet in 1b

- 192.168.160.0/19: Private Subnet in 1a
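The subnet ranges above are consecutive /19 blocks of the VPC range. A minimal sketch with Python's standard `ipaddress` module reproduces them; that the layout was derived by slicing the /16 in order is my assumption, and the variable names are illustrative.

```python
import ipaddress

# VPC CIDR taken from the post.
vpc = ipaddress.ip_network("192.168.0.0/16")

# A /16 splits into eight /19 blocks (2^(19-16) = 8).
blocks = [str(block) for block in vpc.subnets(new_prefix=19)]

# The first six blocks match the six subnets listed above;
# the last two are not used by this layout.
used_blocks = blocks[:6]
spare_blocks = blocks[6:]

for cidr in used_blocks:
    print("in use:", cidr)
for cidr in spare_blocks:
    print("spare: ", cidr)
```

The two spare blocks leave room to add subnets later without resizing the VPC.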

An Internet Gateway is attached to the VPC.

A NAT Gateway with an Elastic IP (static IP) is also placed in one of the public subnets.

NAT Gateway with Elastic IP

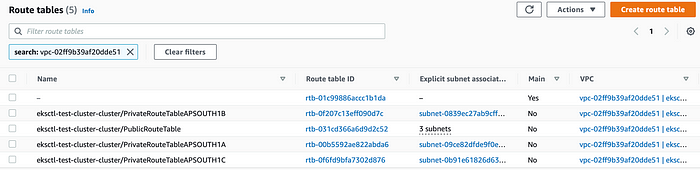

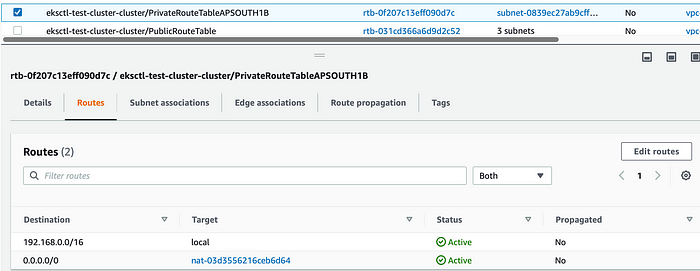

A set of public, private, and main route tables is also created.

Set of route tables

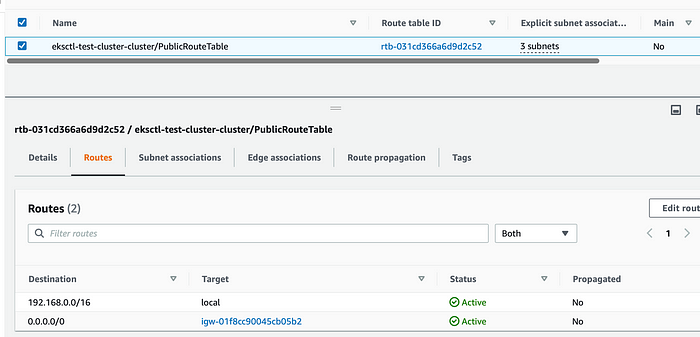

The public route table is used by the public subnets.

Public Route table

The private route tables are used by the private subnets. Each of these route tables has a routing entry that points at the NAT gateway, so that worker nodes in those subnets can make outbound internet connections.
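To make the routing behaviour concrete, here is a minimal sketch of how a route table picks a target: the most specific matching route wins. The table contents and target labels (`local`, `nat-gateway`, `internet-gateway`) are simplified stand-ins of my own, not the actual entries from this cluster.

```python
import ipaddress

def pick_target(route_table, destination):
    """Return the target of the most specific route matching destination."""
    dest = ipaddress.ip_address(destination)
    matches = []
    for cidr, target in route_table.items():
        network = ipaddress.ip_network(cidr)
        if dest in network:
            matches.append((network.prefixlen, target))
    if not matches:
        return None
    # Longest prefix match: the route with the largest prefix length wins.
    return max(matches)[1]

# Hypothetical, simplified route tables for illustration only.
private_route_table = {"192.168.0.0/16": "local", "0.0.0.0/0": "nat-gateway"}
public_route_table = {"192.168.0.0/16": "local", "0.0.0.0/0": "internet-gateway"}

print(pick_target(private_route_table, "192.168.100.7"))  # stays inside the VPC
print(pick_target(private_route_table, "203.0.113.10"))   # leaves via the NAT gateway
print(pick_target(public_route_table, "203.0.113.10"))    # leaves via the Internet Gateway
```

Traffic between subnets of the VPC always matches the more specific local route, so only internet-bound traffic reaches the gateway entries.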

Private Route Table entry

Two EC2 instances (nodes) of type m5.large are created in two of the public subnets, each with a public IP.

Two m5.large instances running within the EKS cluster

Access to the Kubernetes API server endpoint (control plane) is public by default. This is useful during development because you can connect to the API server from anywhere using tools such as kubectl. Access can be restricted further by limiting the source CIDR blocks that are allowed to reach the public endpoint.

Kubernetes API requests that originate from within the cluster's VPC (such as node to control plane communication) leave the VPC but not Amazon's network.

Instance Types, ENIs & IP Addresses

Each EC2 instance can have one or more network interfaces and each network interface can have one or more IP addresses. The instance type has a bearing on the number of Network Interfaces and available IP addresses.

Refer to: IP addresses per network interface per instance type

The m5.large instance type supports three network interfaces, and each interface can have up to 10 IP addresses, which gives 30 IP addresses per node. This puts a limit on the number of Pods that can run on the node!
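How the ENI limits translate into a Pod limit can be sketched as follows. This assumes the default Amazon VPC CNI mode, where each Pod gets one secondary IP address and prefix delegation is off. Under that assumption the commonly cited formula is ENIs × (IPs per ENI − 1) + 2: the primary IP of each ENI is not handed to Pods, and the extra 2 accounts for Pods that use host networking. The function name is my own.

```python
def max_pods(enis: int, ips_per_eni: int) -> int:
    """Estimate the Pod limit for a node under default VPC CNI settings.

    Assumption: one secondary IP per Pod, no prefix delegation.
    Each ENI keeps its primary IP for itself; +2 covers host-network Pods.
    """
    return enis * (ips_per_eni - 1) + 2

# m5.large figures from the post: 3 ENIs, 10 IPs per ENI.
print(max_pods(enis=3, ips_per_eni=10))
```

So although an m5.large node can hold 30 IP addresses, the Pod limit under these assumptions comes out slightly lower.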

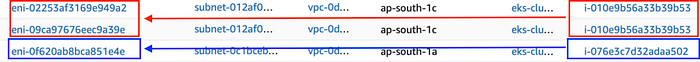

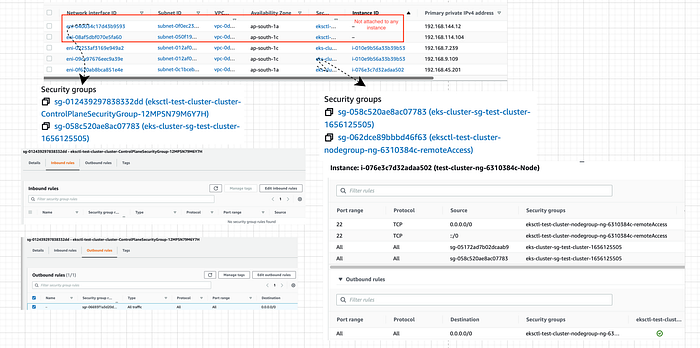

There are two m5.large nodes in this setup. i-xxx-9b53 is configured with two ENIs (eni-xxx-49a2 and eni-xxx-a39e) and i-xxx-a502 is configured with one ENI (eni-xxx-1e4e).

m5.large with ENIs

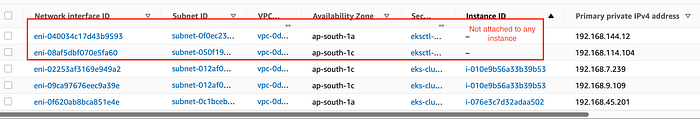

ENI and IP addresses

Each ENI is configured with one primary IP address and nine secondary IP addresses, i.e., a total of 10 IP addresses per ENI. Thus, the three ENIs result in 3 x 10 = 30 IP addresses across the two nodes.

Two additional ENIs (eni-xxx-9593, eni-xxx-fa60) are created in two different AZs (ap-south-1a and ap-south-1c) but are not attached to any instance. These ENIs are used to communicate with the Amazon-managed control plane.

ENI not attached to instances

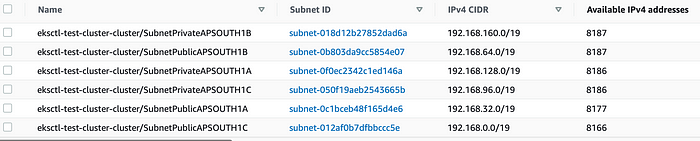

Available Number of IP addresses

Let us review the number of available IP addresses for each subnet.

Each subnet is created as a /19, so each subnet spans 2^(32-19) = 2¹³ = 8192 IP addresses. AWS reserves 5 IP addresses in every subnet, so each subnet has only 8192 - 5 = 8187 usable IPs.
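The same arithmetic works for any prefix length. A small sketch using the standard `ipaddress` module; the helper name and the constant are mine.

```python
import ipaddress

# AWS reserves 5 addresses in every subnet, as noted above.
AWS_RESERVED_PER_SUBNET = 5

def usable_ips(cidr: str) -> int:
    """Total addresses in the CIDR block minus the AWS-reserved ones."""
    return ipaddress.ip_network(cidr).num_addresses - AWS_RESERVED_PER_SUBNET

print(usable_ips("192.168.0.0/19"))
```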

- SubnetPrivateAPSOUTH1B and SubnetPublicAPSOUTH1B have nothing placed in them, so they show the full 8187.

- SubnetPrivateAPSOUTH1A and SubnetPrivateAPSOUTH1C are used to create the ENIs (eni-xxx-9593, eni-xxx-fa60) for communication between the control plane and the worker nodes. Each ENI consumes one IP from its subnet, resulting in 8187 - 1 = 8186 IPs.

- SubnetPublicAPSOUTH1A contains a single node with 10 IP addresses (1 primary + 9 secondary), resulting in 8187 - 10 = 8177 IP addresses.

- SubnetPublicAPSOUTH1C contains another m5.large node with two ENIs, and each ENI has 10 IP addresses. This subnet also hosts the NAT Gateway, which consumes 1 IP. This results in 8187 - 20 - 1 = 8166 IP addresses!
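The accounting above can be tallied in a few lines. The subnet names and consumed-IP counts come from the list; the dictionary layout is just one way to organise them.

```python
USABLE_PER_SUBNET = 8187  # /19 subnet minus the 5 AWS-reserved addresses

# IP addresses consumed in each subnet, as described in the list above.
consumed = {
    "SubnetPrivateAPSOUTH1B": 0,       # nothing placed here
    "SubnetPublicAPSOUTH1B": 0,        # nothing placed here
    "SubnetPrivateAPSOUTH1A": 1,       # one control plane ENI
    "SubnetPrivateAPSOUTH1C": 1,       # one control plane ENI
    "SubnetPublicAPSOUTH1A": 10,       # node with one ENI (10 IPs)
    "SubnetPublicAPSOUTH1C": 20 + 1,   # node with two ENIs plus the NAT Gateway
}

available = {name: USABLE_PER_SUBNET - used for name, used in consumed.items()}

for name, count in available.items():
    print(f"{name}: {count}")
```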

ENIs and Security Groups

Security groups are attached to ENIs, and ENIs are in turn attached to EC2 instances. Each ENI is associated with multiple security groups.

ENI and Security Groups

eksctl-test-cluster-ControlPlaneSecurityGroup-xxx enables communication between the control plane and worker node groups.

eks-xxx-cluster-1665xxx is an EKS-created security group applied to the ENIs that are attached to the EKS control plane master nodes, as well as to any managed workloads.

eksctl-xxx-cluster-nodegroup-ng-xxx-remoteAccess enables communication from the outside world to the nodes.

Summary

The VPC layout and the default configuration could be sufficient for many workloads. However, understanding how it is tied together under the hood makes it easier to customize the setup for specific requirements or constraints. You may also consider disabling the public API endpoint in a more secure environment. There are other topologies possible, but that is for another post!

Thank you for reading!
