What Is Amazon SageMaker?

Amazon SageMaker is a fully managed machine learning service provided by Amazon Web Services (AWS) that allows developers and data scientists to quickly and easily build, train, and deploy machine learning models. It provides a variety of features such as built-in algorithms, Jupyter notebook integration, automatic model tuning, and real-time inference capabilities.

It also integrates with other AWS services. Training data and model artifacts typically live in Amazon S3, and training jobs and endpoints run on managed EC2 instances. SageMaker also offers options for running models on edge devices, and it provides tools for monitoring, troubleshooting, and optimizing the performance of models in production.

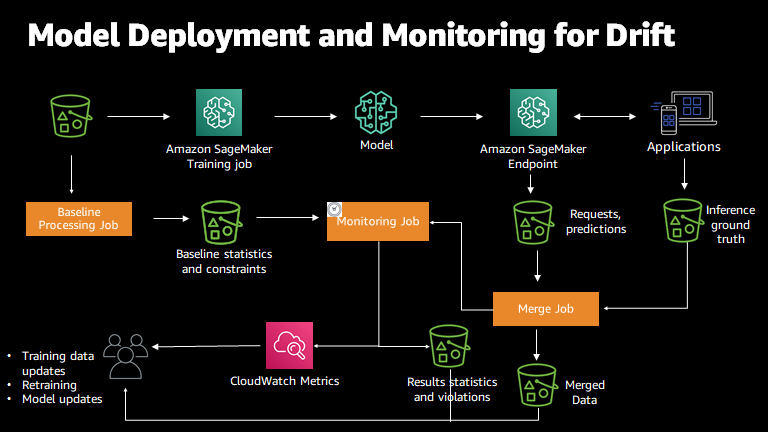

What Is Amazon SageMaker Model Monitor?

Amazon SageMaker Model Monitor is a feature of Amazon SageMaker that continuously monitors the quality of machine learning models in production. You first create a baseline from a reference dataset, usually the training data. Model Monitor computes statistics from this baseline and suggests constraints. Scheduled monitoring jobs then compare the data captured from a deployed endpoint or batch transform job (the "monitoring dataset") against that baseline and report any violations. Results can be published as Amazon CloudWatch metrics, and you can set alarms on those metrics to be notified of drift.

Model Monitor offers four types of monitoring: data quality, model quality, bias drift, and feature attribution drift. You can review monitoring results and violation reports in Amazon SageMaker Studio to follow a model's behavior over time. Through its integration with Amazon SageMaker Clarify, Model Monitor can also track feature attributions, which measure how much each feature contributes to the model's predictions. A shift in these attributions can point to features whose behavior has changed and caused drift.

How to Monitor ML Models in AWS SageMaker

Capture Data

Monitoring machine learning models in Amazon SageMaker involves two sets of data: a baseline dataset and a monitoring dataset. Here are the differences between the two:

- Baseline dataset: This dataset is the reference that production data is compared against. Model Monitor computes baseline statistics and constraints from it. It is usually the dataset the model was trained on, and it should be representative of the data the model will see in production.

- Monitoring dataset: This is the data the deployed model actually receives in production, together with the model's predictions. It is captured continuously after deployment and analyzed on a schedule.

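To collect the monitoring dataset, you enable data capture on a real-time endpoint or a batch transform job. For an endpoint, you can do this with the DataCaptureConfig class in the SageMaker Python SDK. SageMaker then records a configurable sample of the request inputs and model outputs to Amazon S3. To prepare the baseline dataset, you can use SageMaker Data Wrangler, a feature for preparing, visualizing, and analyzing data. You can also use other data preparation tools such as AWS Glue or AWS Data Pipeline.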

Once data capture is enabled and the baseline has been computed, you create a monitoring schedule. Each scheduled run analyzes the newly captured data against the baseline and writes a report of any constraint violations to S3. It can also emit CloudWatch metrics, so you can be notified when drift is detected.
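As an example of the capture step, here is a minimal sketch of reading captured records back after downloading them from S3. It assumes the JSON Lines layout that data capture writes, with fields such as captureData, endpointInput, endpointOutput, and eventMetadata as we understand them from the SageMaker documentation. It also assumes CSV payloads that hold one feature row per request and one numeric prediction per response. Check the field names against your own capture files. The helper `parse_capture_lines` is hypothetical and not part of any SDK.

```python
import base64
import json
from typing import List, Tuple


def _decode(payload: dict) -> str:
    """Return the payload text, decoding it first if it was stored as base64."""
    data = payload["data"]
    if payload.get("encoding") == "BASE64":
        data = base64.b64decode(data).decode("utf-8")
    return data


def parse_capture_lines(lines: List[str]) -> List[Tuple[str, List[float], float]]:
    """Hypothetical helper: turn captured JSON Lines into (event_id, features, prediction).

    Assumes each input is one CSV feature row and each output one numeric score.
    """
    records = []
    for line in lines:
        if not line.strip():
            continue  # skip blank lines between records
        event = json.loads(line)
        capture = event["captureData"]
        features = [float(v) for v in _decode(capture["endpointInput"]).split(",")]
        prediction = float(_decode(capture["endpointOutput"]))
        records.append((event["eventMetadata"]["eventId"], features, prediction))
    return records


# One captured record, shaped like the assumed capture format.
sample = json.dumps({
    "captureData": {
        "endpointInput": {"observedContentType": "text/csv", "mode": "INPUT",
                          "data": "34,52000,1", "encoding": "CSV"},
        "endpointOutput": {"observedContentType": "text/csv", "mode": "OUTPUT",
                           "data": "0.82", "encoding": "CSV"},
    },
    "eventMetadata": {"eventId": "example-event-1",
                      "inferenceTime": "2024-01-01T00:00:00Z"},
    "eventVersion": "0",
})
print(parse_capture_lines([sample]))  # [('example-event-1', [34.0, 52000.0, 1.0], 0.82)]
```

In practice, you would first list and download the capture files from the S3 prefix configured for data capture, for example with boto3. The event ID is kept because it is what later lets ground truth labels be matched to predictions.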

Monitor Data Quality

Data quality monitoring checks that the data your model receives in production stays accurate, complete, and consistent with the data it was trained on. Here are key options:

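- Data Quality Checks: Amazon SageMaker Model Monitor checks each monitoring run against the constraints suggested from the baseline and alerts on violations. The built-in checks cover issues such as missing values (completeness), unexpected data types, and categorical values not seen in the baseline. You can supply custom monitoring code for rules of your own, such as expected value ranges.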

- Data Profile: For the baseline and for each monitoring run, Model Monitor computes per-feature statistics, such as missing-value counts, means, minimums, maximums, and distribution sketches. These statistics help you understand how features are distributed and spot issues such as missing values, outliers, and skew.

- Data Drift: Model Monitor can also detect data drift, which is a change in the distribution of the data over time. Data drift can occur for various reasons, such as changes in data sources or data collection processes, and can have a significant impact on model performance.

- Data Validation: You can also validate data before it reaches the model, for example by rejecting malformed requests in your own preprocessing code. During data preparation, the Data Quality and Insights report in SageMaker Data Wrangler can help you find quality issues in a dataset.
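The baseline-then-check flow behind these data quality checks can be sketched in plain Python. This is a simplified illustration, not Model Monitor's implementation. The functions `suggest_constraints` and `check_constraints` are hypothetical. The completeness rule loosely mirrors the completeness constraint Model Monitor suggests, while the min/max range rule is our own addition to illustrate the "expected ranges" idea. Rows are dictionaries, and None marks a missing value.

```python
from typing import Dict, List, Optional

Row = Dict[str, Optional[float]]  # feature name -> value; None means missing


def suggest_constraints(baseline: List[Row]) -> Dict[str, Dict[str, float]]:
    """Derive per-feature completeness and value-range constraints from baseline rows."""
    constraints: Dict[str, Dict[str, float]] = {}
    for name in sorted({name for row in baseline for name in row}):
        values = [row.get(name) for row in baseline]
        present = [v for v in values if v is not None]
        if not present:
            continue  # nothing observed for this feature, so nothing to constrain
        constraints[name] = {
            "completeness": len(present) / len(values),
            "min": min(present),
            "max": max(present),
        }
    return constraints


def check_constraints(batch: List[Row], constraints: Dict[str, Dict[str, float]]) -> List[str]:
    """Return human-readable violations of the baseline constraints for a new batch."""
    violations = []
    for name, c in constraints.items():
        values = [row.get(name) for row in batch]
        present = [v for v in values if v is not None]
        completeness = len(present) / len(values) if values else 0.0
        if completeness < c["completeness"]:
            violations.append(
                f"{name}: completeness {completeness:.2f} < baseline {c['completeness']:.2f}"
            )
        out_of_range = [v for v in present if v < c["min"] or v > c["max"]]
        if out_of_range:
            violations.append(
                f"{name}: {len(out_of_range)} value(s) outside [{c['min']}, {c['max']}]"
            )
    return violations


baseline = [
    {"age": 30, "income": 50000},
    {"age": 45, "income": 72000},
    {"age": 28, "income": 41000},
]
batch = [{"age": 33, "income": None}, {"age": 90, "income": 60000}]
for violation in check_constraints(batch, suggest_constraints(baseline)):
    print(violation)
# age: 1 value(s) outside [28, 45]
# income: completeness 0.50 < baseline 1.00
```

Because the baseline here is fully complete, a single missing value in the batch is enough to trigger a violation. A real setup would likely allow some tolerance before alerting.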

Monitoring data quality regularly and fixing the issues it surfaces is an important step in maintaining your models' performance over time. It helps ensure that your models keep making accurate predictions and that data problems do not quietly affect your business.
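Data drift, from the list above, is a change in a feature's distribution between the baseline and live data. As an illustration only, the sketch below measures it with the two-sample Kolmogorov-Smirnov statistic, which is the largest gap between the two empirical cumulative distribution functions. Model Monitor computes its own distribution distance and compares it with a threshold in its constraints. The function names and the 0.1 threshold here are hypothetical choices for the example.

```python
from bisect import bisect_right
from typing import Sequence


def ecdf_distance(baseline: Sequence[float], current: Sequence[float]) -> float:
    """Two-sample KS statistic: max |F_baseline(x) - F_current(x)| over observed x."""
    b, c = sorted(baseline), sorted(current)
    distance = 0.0
    # Both empirical CDFs only change at observed values, so checking those suffices.
    for x in set(b) | set(c):
        gap = abs(bisect_right(b, x) / len(b) - bisect_right(c, x) / len(c))
        distance = max(distance, gap)
    return distance


def has_drifted(baseline: Sequence[float], current: Sequence[float],
                threshold: float = 0.1) -> bool:
    """Flag drift when the distance exceeds a (hypothetical) threshold."""
    return ecdf_distance(baseline, current) > threshold


print(ecdf_distance([1, 2, 3, 4], [3, 4, 5, 6]))  # 0.5
print(has_drifted([1, 2, 3, 4], [3, 4, 5, 6]))    # True
```

The KS statistic needs no binning and does not depend on the feature's scale, which makes it a convenient stand-in for numeric features. Categorical features would need a different comparison, for example one based on category frequencies.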

Monitor Model Quality

Model quality monitoring helps to ensure that the models are performing as expected and that they continue to make accurate predictions over time. Here are key options:

- Model Quality Metrics: Amazon SageMaker Model Monitor tracks quality metrics that suit the problem type. For classification, these include accuracy, precision, recall, F1 score, and AUC. For regression, they include MAE, RMSE, and R². A drop in these metrics relative to the baseline signals that the model's performance is degrading.

- Model Drift Detection: Model quality monitoring needs ground truth. Model Monitor merges the predictions captured from the endpoint with ground truth labels that you upload to S3 later, matching each label to its prediction by event (or inference) ID. It then computes the quality metrics and compares them against the baseline constraints. When a metric crosses its threshold, a violation is reported and can trigger a CloudWatch alarm.

- Model Versioning: You can also track model quality across versions. SageMaker Model Registry lets you register each trained model as a version in a model package group, so you can compare new versions against earlier ones before promoting them to production.
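Model quality metrics depend on ground truth that arrives after the predictions. Here is a minimal sketch for a binary classifier. It pairs each captured prediction with its label by event ID, computes the metrics, and compares them with baseline values. The function names, event IDs, baseline numbers, and the 0.05 tolerance are all hypothetical. Model Monitor computes these metrics itself and expresses thresholds in its own constraints file.

```python
from typing import Dict, List, Tuple


def merge_with_ground_truth(predictions: Dict[str, int],
                            labels: Dict[str, int]) -> List[Tuple[int, int]]:
    """Pair (label, prediction) by event ID; predictions without a label are skipped."""
    return [(labels[eid], pred) for eid, pred in predictions.items() if eid in labels]


def binary_metrics(pairs: List[Tuple[int, int]]) -> Dict[str, float]:
    """Accuracy, precision, recall, and F1 for 0/1 labels and predictions."""
    if not pairs:
        raise ValueError("no labeled predictions to evaluate")
    tp = sum(1 for y, p in pairs if y == 1 and p == 1)
    tn = sum(1 for y, p in pairs if y == 0 and p == 0)
    fp = sum(1 for y, p in pairs if y == 0 and p == 1)
    fn = sum(1 for y, p in pairs if y == 1 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


def quality_violations(current: Dict[str, float], baseline: Dict[str, float],
                       tolerance: float = 0.05) -> List[str]:
    """Names of metrics that fell more than `tolerance` below their baseline value."""
    return [name for name, value in current.items() if value < baseline[name] - tolerance]


predictions = {"e1": 1, "e2": 0, "e3": 1, "e4": 0, "e5": 1}
labels = {"e1": 1, "e2": 0, "e3": 0, "e4": 1}  # e5 has no label yet
current = binary_metrics(merge_with_ground_truth(predictions, labels))
baseline = {"accuracy": 0.90, "precision": 0.88, "recall": 0.85, "f1": 0.86}
print(current)  # {'accuracy': 0.5, 'precision': 0.5, 'recall': 0.5, 'f1': 0.5}
print(quality_violations(current, baseline))  # ['accuracy', 'precision', 'recall', 'f1']
```

Unlabeled predictions are skipped rather than counted, because a metric computed on guessed labels would hide exactly the degradation you are trying to detect.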

Monitor Bias Drift

Monitoring bias drift is an important part of monitoring machine learning models in Amazon SageMaker. A model that was fair on its training data can become biased in production when the live data distribution shifts, which leads to unfair and inaccurate predictions. Here are key options:

- Bias Detection: Using SageMaker Clarify, Model Monitor periodically computes bias metrics on live predictions for the subgroups (facets) you specify, such as age or gender, and compares them with the baseline. When a metric moves outside its baseline constraint, a violation is reported that describes which metric changed and by how much. This violation can trigger a CloudWatch alarm.

- Fairness Metrics: The bias metrics come from SageMaker Clarify. They include post-training measures such as the difference in positive proportions in predicted labels (DPPL), which relates to demographic parity, and disparate impact (DI). They also include recall difference (RD), which relates to equality of opportunity.

- Pre-training Bias Checks: You can also check the training data for bias before a model is trained, for example with the bias report in SageMaker Data Wrangler, which uses SageMaker Clarify.

- Mitigating Bias: Model Monitor detects bias but does not correct it. Once bias is detected, you can rebalance the training data by oversampling or undersampling certain groups. You can also apply preprocessing techniques such as reweighing, or use in-training methods such as adversarial debiasing.
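To illustrate bias metrics, the sketch below computes two post-training metrics that SageMaker Clarify documents for a binary classifier. DPPL is the advantaged group's positive-prediction rate minus the disadvantaged group's rate. Disparate impact (DI) is the ratio of the disadvantaged rate to the advantaged rate. The sketch also computes reweighing weights, a generic preprocessing technique rather than a SageMaker feature. Reweighing gives each (group, label) pair the weight P(group) * P(label) / P(group, label). Function names and data are hypothetical, and each group is assumed to be non-empty.

```python
from collections import Counter
from typing import Dict, Sequence, Tuple


def positive_rate(preds: Sequence[int], groups: Sequence[str], group: str) -> float:
    """Share of members of `group` that received a positive (1) prediction."""
    member_preds = [p for p, g in zip(preds, groups) if g == group]
    return sum(member_preds) / len(member_preds)


def dppl(preds, groups, advantaged: str, disadvantaged: str) -> float:
    """Difference in positive proportions in predicted labels (advantaged minus disadvantaged)."""
    return positive_rate(preds, groups, advantaged) - positive_rate(preds, groups, disadvantaged)


def disparate_impact(preds, groups, advantaged: str, disadvantaged: str) -> float:
    """Ratio of the disadvantaged group's positive rate to the advantaged group's."""
    return positive_rate(preds, groups, disadvantaged) / positive_rate(preds, groups, advantaged)


def reweighing_weights(labels: Sequence[int],
                       groups: Sequence[str]) -> Dict[Tuple[str, int], float]:
    """Weight per (group, label) so group and label look independent after weighting."""
    n = len(labels)
    group_counts, label_counts = Counter(groups), Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return {
        (g, y): group_counts[g] * label_counts[y] / (n * count)
        for (g, y), count in joint_counts.items()
    }


groups = ["a"] * 4 + ["d"] * 4
preds = [1, 1, 1, 0, 1, 0, 0, 0]
train_labels = [1, 1, 1, 0, 1, 0, 0, 0]  # same values reused as training labels for brevity
print(dppl(preds, groups, "a", "d"))              # 0.5
print(disparate_impact(preds, groups, "a", "d"))  # about 0.333
print(reweighing_weights(train_labels, groups))
# ('a', 0) and ('d', 1) get weight 2.0; ('a', 1) and ('d', 0) get 2/3
```

With these weights, both groups have a weighted positive rate of 0.5 in this example. Producing that balance before the model is retrained is the goal of reweighing.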

Monitor Feature Attribution Drift

Monitoring feature attribution drift for models in production helps you identify changes in how much each input feature contributes to a model's predictions. Such changes can reveal shifts in the live data that are causing the model to perform poorly. Here are key Amazon SageMaker Model Monitor options:

- Feature Attribution: Using SageMaker Clarify, Model Monitor computes feature attributions, which quantify how much each feature contributes to the model's predictions. Clarify bases these attributions on SHAP (SHapley Additive exPlanations) values.

- Feature Attribution Drift Detection: Model Monitor compares how features rank by attribution on the baseline (training) data with how they rank on live data. When the rankings diverge beyond a threshold, a violation is reported and you can be notified through CloudWatch.

- Feature Importance Visualization: You can review feature attribution results in SageMaker Studio and chart the emitted CloudWatch metrics over time to see how feature importance changes.
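As we read the SageMaker documentation, the ranking comparison uses a normalized discounted cumulative gain (NDCG) score. Features are ordered by their live attributions, and each position is scored with that feature's baseline attribution, discounted by log2(position + 1). The total is then divided by the score of the ideal ordering by baseline attribution, and an alert is raised below 0.90. The sketch below implements that formula under those assumptions. The function name and attribution values are hypothetical. Attributions are assumed to be non-negative global scores (such as mean absolute SHAP values), and both dictionaries must cover the same features.

```python
import math
from typing import Dict, List

ALERT_THRESHOLD = 0.90  # assumed alert level, taken from our reading of the docs


def attribution_ndcg(baseline: Dict[str, float], live: Dict[str, float]) -> float:
    """NDCG of the live feature ranking, scored with the baseline attributions."""

    def dcg(order: List[str]) -> float:
        # Position i (1-based) is discounted by log2(i + 1).
        return sum(baseline[f] / math.log2(i + 1) for i, f in enumerate(order, start=1))

    ideal_order = sorted(baseline, key=baseline.get, reverse=True)
    live_order = sorted(live, key=live.get, reverse=True)
    return dcg(live_order) / dcg(ideal_order)


baseline = {"age": 0.5, "income": 0.3, "zip_code": 0.2}
live = {"age": 0.1, "income": 0.3, "zip_code": 0.6}
score = attribution_ndcg(baseline, live)
print(round(score, 3), score < ALERT_THRESHOLD)  # 0.81 True
```

An unchanged ranking scores exactly 1.0. A least important feature that becomes the most important one, as zip_code does here, pulls the score below the assumed threshold.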

Conclusion

Monitoring machine learning models in Amazon SageMaker is crucial for ensuring that they continue to make accurate and fair predictions over time. Amazon SageMaker Model Monitor provides a comprehensive solution for this, covering data quality, model quality, bias drift, and feature attribution drift.

By regularly monitoring these aspects, you can detect and address issues such as data drift, model drift, bias, and feature attribution drift before they degrade your models' predictions and affect your business. In addition, the explainability features of SageMaker Clarify, which Model Monitor uses for feature attributions, can help identify the root cause of drift and give you insight into how to mitigate it.
