I couldn't find a Medium post for this one. There is one by Angelica Dietzel, but unfortunately it is only readable with a paid Medium account. If you have any suggestions for improving the method I demonstrate here, which is of course freely accessible, please leave a comment.

You can find the code on my GitHub page.

A typical website hierarchy

We start with a visual hierarchical representation of a website.

In red are pages containing links you don't want to follow (Facebook, LinkedIn and other social media pages). In green are the pages you want to extract valuable information from. Note that the two product pages link to each other, so while crawling from the top of the hierarchy to the bottom we should avoid saving the same link multiple times.
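Both rules can be illustrated with a few lines of standard-library Python. This is a minimal sketch, not the crawler itself: the helper `is_internal` and all URLs are hypothetical placeholders. Red pages are dropped because they live on another host, and a set makes sure each interlinked product page is saved only once.

```python
from urllib.parse import urlparse


def is_internal(url: str, start_page: str) -> bool:
    """Hypothetical helper: True if url is on the same host as start_page."""
    return urlparse(url).netloc == urlparse(start_page).netloc


start_page = "https://www.example.com/"

# Links as they might be found while walking down the hierarchy.
# The two product pages link to each other, so each one is found twice.
found = [
    "https://www.example.com/products/a",
    "https://www.facebook.com/example",          # red: social media
    "https://www.linkedin.com/company/example",  # red: social media
    "https://www.example.com/products/b",
    "https://www.example.com/products/a",        # found again via product b
    "https://www.example.com/products/b",        # found again via product a
]

saved = []
seen = set()
for url in found:
    # Skip red pages and links that were already saved
    if is_internal(url, start_page) and url not in seen:
        seen.add(url)
        saved.append(url)

print(saved)
# ['https://www.example.com/products/a', 'https://www.example.com/products/b']
```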

1. Import necessary modules

```python
import requests
from bs4 import BeautifulSoup
from tqdm import tqdm
import json
```

2. Write a function that gets the text data from a website URL

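```python
def getdata(url):
    # Download the page and return its HTML as text; the timeout (in seconds)
    # stops the crawler from hanging forever on a server that does not respond
    r = requests.get(url, timeout=10)
    return r.text
```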

3. Write a function that gets all links from one page and stores them in a list

First, this function collects every link marked with an “a href” tag. As mentioned, following all of them could lead to scraping other websites you do not want information from, so we have to place some restrictions on the function.

Second, we also want to include hrefs that contain only a relative path starting with “/” instead of a full URL. For instance, we can encounter a valuable HTML element with an href like this:

```html
<a class="pat-inject follow icon-medicine-group" data-pat-inject="hooks: raptor; history: record" href="/bladeren/groepsteksten/alfabet">
```

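If we used the “/bladeren/groepsteksten/alfabet” string as it is to request new subpages, we would get an error: requests needs a full URL including the scheme and host, and raises a MissingSchema exception otherwise. We therefore have to turn such relative links into full links.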
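A minimal sketch of that conversion, using `urllib.parse.urljoin` from the standard library; the host `https://www.example.com` is a placeholder. Simply gluing the href onto the current page's URL only works when that page is the site root, whereas `urljoin` always resolves a leading “/” against the host.

```python
from urllib.parse import urljoin

href = "/bladeren/groepsteksten/alfabet"

# Resolving against the start page
from_root = urljoin("https://www.example.com/", href)

# Resolving against a subpage: the leading "/" still means "from the site root"
from_subpage = urljoin("https://www.example.com/some/subpage/", href)

# Plain string concatenation against a subpage nests the path under that subpage
naive = "https://www.example.com/some/subpage/" + href[1:]

print(from_root)     # https://www.example.com/bladeren/groepsteksten/alfabet
print(from_subpage)  # https://www.example.com/bladeren/groepsteksten/alfabet
print(naive)         # https://www.example.com/some/subpage/bladeren/groepsteksten/alfabet
```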

Third, we convert the list of links to a dictionary with the function dict.fromkeys(). This removes duplicate links, and looking up a key in a dictionary is much faster than searching through a list. The result looks like this:

```python
from urllib.parse import urljoin, urlparse

# Relative links ("/...") already seen on any page, so each one is only added once
dict_href_links = {}

def get_links(website_link):
    html_data = getdata(website_link)
    soup = BeautifulSoup(html_data, "html.parser")
    list_links = []

    for link in soup.find_all("a", href=True):
        href = str(link["href"])

        if href.startswith("/"):
            # Include all hrefs that do not start with the website link but with "/"
            if href in dict_href_links:
                continue
            print(href)
            dict_href_links[href] = None
            # urljoin resolves "/..." against the host, also when website_link is a subpage
            full_link = urljoin(website_link, href)
            print("adjusted link =", full_link)
        else:
            full_link = href

        # Only keep links on the same website (drops Facebook, LinkedIn, ...)
        if urlparse(full_link).netloc == urlparse(website_link).netloc:
            list_links.append(full_link)

    # Convert list of links to dictionary: keys are the links, values are "Not-checked"
    dict_links = dict.fromkeys(list_links, "Not-checked")
    return dict_links
```
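To try the filtering logic without network access or BeautifulSoup, here is a sketch that swaps in the standard library's `html.parser.HTMLParser` as a stand-in for `soup.find_all("a", href=True)`. The class `HrefCollector`, the function `links_from_html` and the HTML snippet are hypothetical. It keeps root-relative and absolute links on the same host and deduplicates them with `dict.fromkeys`.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class HrefCollector(HTMLParser):
    """Collect the href attribute of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value is not None:
                    self.hrefs.append(value)


def links_from_html(html: str, page_url: str) -> dict:
    """Return same-host links found in html as {url: "Not-checked"}."""
    parser = HrefCollector()
    parser.feed(html)
    list_links = []
    for href in parser.hrefs:
        # Only root-relative ("/...") and absolute links are considered
        if href.startswith("/") or href.startswith("http"):
            absolute = urljoin(page_url, href)
            if urlparse(absolute).netloc == urlparse(page_url).netloc:
                list_links.append(absolute)
    # Duplicates collapse into one key, first-seen order is kept
    return dict.fromkeys(list_links, "Not-checked")


html = """
<a href="/bladeren/groepsteksten/alfabet">Alphabet</a>
<a href="https://www.example.com/products/a">Product A</a>
<a href="https://www.facebook.com/example">Facebook</a>
<a href="/bladeren/groepsteksten/alfabet">Alphabet again</a>
"""

result = links_from_html(html, "https://www.example.com/")
print(result)
```

The Facebook link is dropped because its host differs, and the repeated relative link ends up as a single key.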

4. Write a function that loops over all the subpages

We use a for loop to go through the subpages and wrap it in tqdm, which shows how many steps have been completed and estimates the remaining time. Pages already marked “Checked” are skipped, and the links found on each new page are merged into the dictionary.

```python
def get_subpage_links(l):
    # Iterate over a snapshot of the keys, because l is replaced inside the loop
    for link in tqdm(list(l)):
        # If we have not crawled this page yet, crawl it and get its links
        if l[link] == "Not-checked":
            dict_links_subpages = get_links(link)
            # Change the dictionary value of the link to "Checked"
            l[link] = "Checked"
        else:
            # Page already checked: there are no new links to add
            dict_links_subpages = {}
        # Add new dictionary to old dictionary; existing values win, so "Checked" is kept
        l = {**dict_links_subpages, **l}
    return l
```
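The merge line `l = {**dict_links_subpages, **l}` is what keeps the crawl from undoing its own work: when two dictionaries are unpacked into one, the later one wins for duplicate keys. A minimal sketch with placeholder URLs:

```python
# Links already known to the crawler; the start page has been crawled
known = {
    "https://www.example.com/": "Checked",
    "https://www.example.com/products/a": "Not-checked",
}

# Links found on a page that links back to the start page and to product b
found = {
    "https://www.example.com/": "Not-checked",
    "https://www.example.com/products/b": "Not-checked",
}

# Later unpacking wins: the known statuses override the fresh "Not-checked" values
merged = {**found, **known}
print(merged["https://www.example.com/"])  # Checked

# Reversing the order would reset the start page and make the crawler visit it again
wrong = {**known, **found}
print(wrong["https://www.example.com/"])  # Not-checked
```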

5. Create the loop

Before we start the loop we have to initialize some variables. We save the website we want to scrape in a variable and put it into a single-key dictionary with the value “Not-checked”. The variable “counter” counts the number of “Not-checked” links and a second counter, “counter2”, counts the number of iterations. Because “counter” starts as None, the condition counter != 0 is true and the loop runs at least once. To report back to ourselves, we add some print statements.

```python
# Add the website WITH a slash at the end
website = "https://YOURWEBSITE.COM/"

# Create dictionary of website
dict_links = {website: "Not-checked"}

counter, counter2 = None, 0

while counter != 0:
    counter2 += 1
    dict_links2 = get_subpage_links(dict_links)

    # Count the "Not-checked" values; counter becomes 0 when every link has been checked
    # https://stackoverflow.com/questions/48371856/count-the-number-of-occurrences-of-a-certain-value-in-a-dictionary-in-python
    counter = sum(value == "Not-checked" for value in dict_links2.values())

    # Print some statements
    print("")
    print("THIS IS LOOP ITERATION NUMBER", counter2)
    print("LENGTH OF DICTIONARY WITH LINKS =", len(dict_links2))
    print("NUMBER OF 'Not-checked' LINKS = ", counter)
    print("")

    dict_links = dict_links2

# Save the dictionary of links in a JSON file
with open("data.json", "w") as a_file:
    json.dump(dict_links, a_file)
```

This will give you something like the following:

As you can see the number of not-checked links decreases until it reaches zero and then the script is finished, which is exactly what we want.

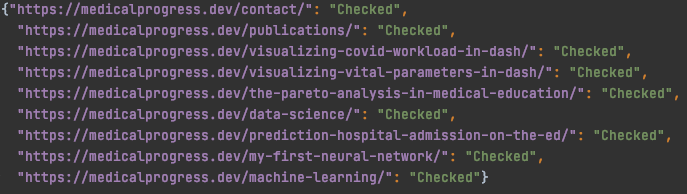
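To see why the loop terminates, here is a sketch that runs the same Checked/Not-checked loop against a hypothetical in-memory website (`SITE`) instead of real HTTP requests. `fake_get_links` stands in for get_links, `crawl_pass` follows the structure of get_subpage_links, and tqdm and the print statements are left out. As in the hierarchy at the top, the two product pages link to each other.

```python
# Hypothetical in-memory website: each page maps to the links found on it
SITE = {
    "https://www.example.com/": [
        "https://www.example.com/products",
        "https://www.example.com/about",
    ],
    "https://www.example.com/products": [
        "https://www.example.com/products/a",
        "https://www.example.com/products/b",
    ],
    "https://www.example.com/about": [],
    "https://www.example.com/products/a": ["https://www.example.com/products/b"],
    "https://www.example.com/products/b": ["https://www.example.com/products/a"],
}


def fake_get_links(url):
    """Stand-in for get_links: look the page up instead of downloading it."""
    return dict.fromkeys(SITE.get(url, []), "Not-checked")


def crawl_pass(links):
    """One pass over the links known at the start of the pass."""
    for link in list(links):
        if links[link] == "Not-checked":
            new_links = fake_get_links(link)
            links[link] = "Checked"
        else:
            new_links = {}
        # Existing entries win, so a "Checked" page is never reset
        links = {**new_links, **links}
    return links


dict_links = {"https://www.example.com/": "Not-checked"}
counter, counter2 = None, 0
history = []  # number of "Not-checked" links after each iteration

# None != 0, so the first iteration always runs
while counter != 0:
    counter2 += 1
    dict_links = crawl_pass(dict_links)
    counter = sum(value == "Not-checked" for value in dict_links.values())
    history.append(counter)

print(counter2, history, len(dict_links))  # 3 [2, 2, 0] 5
```

Each pass only crawls the links that were known when the pass started, so the count can stay flat for an iteration (here 2, then 2) before it drops to 0. A work queue such as `collections.deque`, where every newly found link is appended once and popped once, is a common alternative that avoids re-scanning the whole dictionary on every pass.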

The JSON file looks something like this:
