Who dives faster? by Charles Zhu, my 6yo boy

It is easy to send a single HTTP request by using the requests package. What if I want to send hundreds or even millions of HTTP requests asynchronously? This article is an exploring note to find my fastest way to send HTTP requests.

The code is running in a Linux(Ubuntu) VM host in the cloud with Python 3.7. All code in gist is ready for copy and run.

Solution #1: The Synchronous Way

The most simple, easy-to-understand way, but also the slowest way. I forge 100 links for the test by this magic python list operator:

url_list = ["https://www.google.com/","https://www.bing.com"]*50

The code:

It takes about 10 seconds to finish downloading 100 links.

...

download 100 links in 9.438416004180908 seconds

As a synchronous solution, there are still rooms to improve. We can leverage the Session object to further increase the speed. The Session object will use urllib3's connection pooling, which means, for repeating requests to the same host, the Session object's underlying TCP connection will be re-used, hence gain a performance increase.

So if you're making several requests to the same host, the underlying TCP connection will be reused, which can result in a significant performance increase — Session Objects

To ensure the request object exit no matter success or not, I am going to use the with statement as a wrapper. the withkeyword in Python is just a clean solution to replace try… finally… .

Let's see how many seconds are saved by changing to this:

Looks like the performance is really boosted to 5.x seconds.

...

download 100 links in 5.367443561553955 seconds

But this is still slow, let's try the multi-threading solution

Solution #2: The Multi-Threading Way

Python threading is a dangerous topic to discuss, sometimes, multi-threading could be even slower! David Beazley brought with gut delivered a wonderful presentation to cover this dangerous topic. here is the Youtube link.

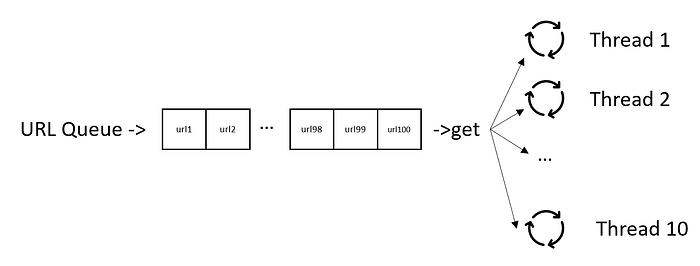

Anyway, I am still going to use Python Thread to do the HTTP request job. I will use a queue to hold the 100 links and create 10 HTTP download worker threads to consume the 100 links asynchronously.

How the multi-thread works

To use the Session object, it is a waste to create 10 Session objects for 10 threads, I want one Session object and reuse it for all downloading work. To make it happen, The code will leverage the local object from threading package, so that 10 thread workers will share one Session object.

from threading import Thread,local

...

thread_local = local()

...

The code:

The result:

...

download 100 links in 1.1333789825439453 seconds

This is fast! way faster than the synchronous solution.

Solution #3: Multi-Threading by ThreadPoolExecutor

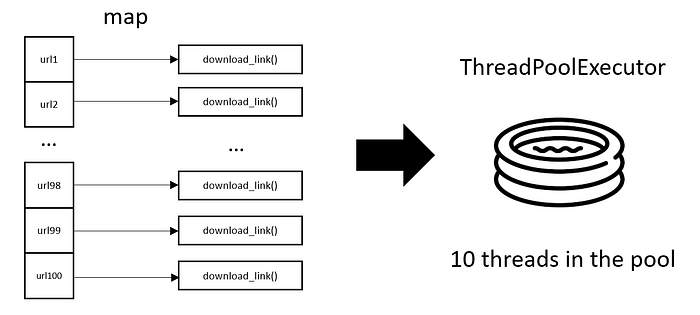

Python also provides ThreadPoolExecutor to perform multi-thread work, I like ThreadPoolExecutor a lot.

In the Thread and Queue version, there is a while True loop in the HTTP request worker, this makes the worker function tangled with Queue and needs additional code change from the synchronous version to the asynchronous version.

Using ThreadPoolExecutor, and its map function, we can create a Multi-Thread version with very concise code, require minimum code change from the synchronous version.

How the ThreadPoolExecutor version works

The code:

And the output is as fast as the Thread-Queue version:

...

download 100 links in **1.0798051357269287** seconds

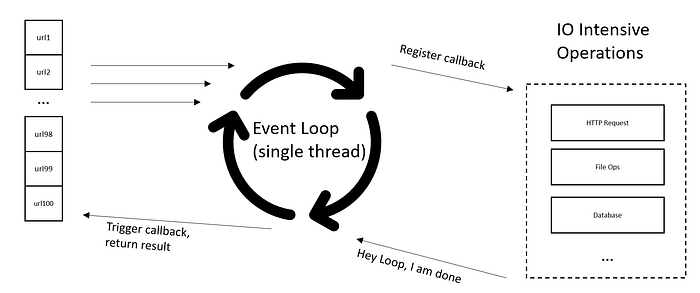

Solution #4: asyncio with aiohttp

Everyone says asyncio is the future, and it is fast. Some folks use it making 1 million HTTP requests with Python [asyncio](https://pawelmhm.github.io/asyncio/python/aiohttp/2016/04/22/asyncio-aiohttp.html) and [aiohttp](https://pawelmhm.github.io/asyncio/python/aiohttp/2016/04/22/asyncio-aiohttp.html). Although asyncio is super fast, it uses zero Python Multi-Threading.

Believe it or not, asyncio runs in one thread, in one CPU core.

asyncio Event Loop

The event loop implemented in asyncio is almost the same thing that is beloved in Javascript.

Asyncio is so fast that it can send almost any number of requests to the server, the only limitation is your machine and internet bandwidth.

Too many HTTP requests send will behave like “attacking”. Some web site may ban your IP address if too many requests are detected, even Google will ban you too. To avoid being banned, I use a custom TCP connector object that specified the max TCP connection to 10 only. (it may safe to change it to 20)

my_conn = aiohttp.TCPConnector(**_limit_=10**)

The code is pretty short and concise:

And the above code finished 100 links downloading in 0.74 seconds!

...

download 100 links in 0.7412574291229248 seconds

Note that if you are running code in JupyterNotebook or IPython. please also install thenest-asyncio package. (Thanks to this StackOverflow link. Credit to Diaf Badreddine.)

pip install nest-asyncio

and add the following two lines of code at the beginning of the code.

import nest_asyncio

nest_asyncio.apply()

Solution #5: What about Node.js?

I am wondering, what if I do the same work in Node.js, which has a built-in event loop?

Here is the full code:

It takes 1.1 to 1.5 seconds, you can give it a run to see the result in your machine.

...

test: 1195.290ms

Python, win the speed game!

(Looks like the request Node package is deprecated, but this sample is just for testing out how Node.js's event loop performs compare with Python's event loop.)

Let me know if you have a better/faster solution. If you have any questions, leave a comment and I will do my best to answer, If you spot an error or mistake, don't hesitate to mark them out. Thanks for reading.

Comments

Loading comments…