I’m the kind of person who is terrible at reading emotions from other people’s faces, so I’ll write code that does it for me!

First, we need to choose a method for determining a person’s emotions. There are two main options: Facial Coding and facial electromyography (fEMG).

Yes, fEMG is more accurate, but I’m not going to attach electrodes to the face of the person I’m talking to just to understand their emotions (maybe I will, hehe). For that simple reason, I will use Facial Coding.

The Facial Action Coding System (FACS) describes facial expressions as sets of facial muscle movements, called Action Units, which correspond to displayed emotions. Originally created by Carl-Herman Hjortsjö with 23 facial motion units in 1970, it was subsequently developed further by Paul Ekman and Wallace Friesen. The FACS as we know it today was first published in 1978 and was substantially updated in 2002.

Using FACS, we can determine the emotion a participant is displaying. Facial expression analysis is one of the very few techniques for assessing emotions in real time (fEMG is another option). Other measures, such as interviews and psychometric tests, have to be completed after a stimulus has been presented, and this delay adds another barrier to measuring how a participant truly feels in direct response to the stimulus.

Looking for facial muscles!

Using the basic code from the MediaPipe Face Mesh example, we can get landmark points on your beautiful face.

```python
import cv2
import mediapipe as mp
import math  # used later for the angle and distance calculations

mp_drawing = mp.solutions.drawing_utils
mp_drawing_styles = mp.solutions.drawing_styles
mp_face_mesh = mp.solutions.face_mesh
drawing_spec = mp_drawing.DrawingSpec(thickness=1, circle_radius=1)

cap = cv2.VideoCapture(0)  # default webcam
with mp_face_mesh.FaceMesh(
        max_num_faces=1,
        refine_landmarks=True,
        min_detection_confidence=0.5,
        min_tracking_confidence=0.5) as face_mesh:
    while cap.isOpened():
        success, image = cap.read()
        if not success:
            print("Ignoring empty camera frame.")
            continue

        # MediaPipe expects RGB; marking the frame read-only can improve performance
        image.flags.writeable = False
        image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
        results = face_mesh.process(image)
        shape = image.shape  # (height, width, channels)

        image.flags.writeable = True
        image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

        if results.multi_face_landmarks:
            for face in results.multi_face_landmarks:
                for i in range(len(face.landmark)):
                    # Landmark x and y are normalized to [0, 1]; convert to pixels
                    x = face.landmark[i].x
                    y = face.landmark[i].y
                    relative_x = int(x * shape[1])
                    relative_y = int(y * shape[0])
                    cv2.circle(image, (relative_x, relative_y), radius=1,
                               color=(225, 0, 100), thickness=1)

        # Flip horizontally for a mirror-like selfie view; Esc (27) quits
        cv2.imshow('MediaPipe Face Mesh', cv2.flip(image, 1))
        if cv2.waitKey(5) & 0xFF == 27:
            break

cap.release()
cv2.destroyAllWindows()
```

Now we have our face covered with landmark points, which we will use to measure muscle contraction!

On this wonderful man, I’ve labeled the number of every point.


Next, we need code that stabilizes the coordinates so that their values do not change with the distance or rotation of the head.

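First, we need to rotate the coordinates so that the face always looks straight ahead. To do this, let’s define the preangles: the angles, in radians, of the line between two base points, measured in the y-z plane for preangle1 (forehead to chin, landmarks 10 and 148) and in the x-z plane for preangle2 (forehead to nose tip, landmarks 10 and 1):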

```python
# math.atan2 already returns radians, so no degree conversion is needed
preangle1 = math.atan2(face.landmark[148].z - face.landmark[10].z,
                       face.landmark[148].y - face.landmark[10].y)
preangle2 = math.atan2(face.landmark[1].z - face.landmark[10].z,
                       face.landmark[1].x - face.landmark[10].x)
```
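Since `math.atan2` already returns radians, converting its result to degrees, dividing by 180 and multiplying by π just gives the same value back. The sketch below shows this and computes both angles from plain points instead of MediaPipe landmarks; the `Point` type and the `preangles` helper are hypothetical names for illustration, and the "tilt"/"turn" interpretations in the comments are approximate.

```python
import math
from collections import namedtuple

# Hypothetical stand-in for a MediaPipe landmark (x, y normalized; z relative depth)
Point = namedtuple("Point", "x y z")


def preangles(top, chin, nose):
    """Return (preangle1, preangle2) in radians.

    top ~ landmark 10, chin ~ landmark 148, nose ~ landmark 1 (as in the article).
    """
    # Angle of the forehead->chin line in the y-z plane (roughly head tilt up/down)
    preangle1 = math.atan2(chin.z - top.z, chin.y - top.y)
    # Angle of the forehead->nose line in the x-z plane (roughly head turn left/right)
    preangle2 = math.atan2(nose.z - top.z, nose.x - top.x)
    return preangle1, preangle2


# Converting to degrees and straight back to radians changes nothing
a = math.atan2(0.1, 0.5)
assert math.isclose(math.degrees(a) / 180 * math.pi, a)

top, chin, nose = Point(0.5, 0.2, 0.0), Point(0.5, 0.7, 0.5), Point(0.6, 0.4, 0.0)
print(preangles(top, chin, nose))
```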

Next, we need a way to measure the distance between two points that does not change with how far the face is from the screen. The first idea that comes to mind is to measure it relative to the size of the face itself:

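```python
# p1, p2: [x, y, z] landmark coordinates; shape = image.shape = (height, width, channels)
# head_size: head height or width in pixels (headhdis / headwdis below)
# (the /2 in distance2points below comes from averaging two pairs of points)
dist = math.sqrt(((p1[0] - p2[0]) * shape[1]) ** 2
                 + ((p1[1] - p2[1]) * shape[0]) ** 2
                 + ((p1[2] - p2[2]) * (shape[0] + shape[1]) / 3) ** 2) / head_size
```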
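To see why dividing by the head size removes the dependence on camera distance, here is a minimal sketch of the two-point formula as a function. `normalized_distance` is a hypothetical name, and the example assumes, as a simplification, that moving the face twice as far from the camera halves every landmark offset, including the head size.

```python
import math


def normalized_distance(p1, p2, shape, head_size):
    """Pixel-scaled distance between p1 and p2, divided by head_size.

    p1, p2: (x, y, z) landmark coordinates normalized to the frame.
    shape: (height, width, channels), like OpenCV's image.shape.
    head_size: head size in pixels.
    """
    height, width = shape[0], shape[1]
    dx = (p1[0] - p2[0]) * width
    dy = (p1[1] - p2[1]) * height
    dz = (p1[2] - p2[2]) * (height + width) / 3  # depth scale used in the article
    return math.sqrt(dx ** 2 + dy ** 2 + dz ** 2) / head_size


shape = (480, 640, 3)
near = normalized_distance((0.5, 0.4, 0.0), (0.5, 0.5, 0.0), shape, head_size=240.0)
# Same face twice as far away: the landmark offset and the head size both halve
far = normalized_distance((0.5, 0.45, 0.0), (0.5, 0.5, 0.0), shape, head_size=120.0)
print(near, far)  # both are (approximately) the same ratio
```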

Now we need to rotate the points about the x and y axes so that the coordinates always correspond to a face looking straight ahead.

Rotate by X:

```python
# Rotation about the x axis (the body of rotate_x, defined in full below)
cos_theta = math.cos(theta)
sin_theta = math.sin(theta)
return [
    round(x, 4),
    round(y * cos_theta - z * sin_theta, 4),
    round(y * sin_theta + z * cos_theta, 4),
]
```

Rotate by Y:

```python
# Rotation about the y axis (the body of rotate_y, defined in full below)
cos_theta = math.cos(theta)
sin_theta = math.sin(theta)
return [
    round(x * cos_theta + z * sin_theta, 4),
    round(y, 4),
    round(-x * sin_theta + z * cos_theta, 4),
]
```
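These are the standard rotation matrices about the x and y axes. A quick way to check them is to rotate a unit vector by 90 degrees and to confirm that a rotation never changes a vector's length. The sketch below does both; `rot_x`, `rot_y` and `length` are hypothetical, simplified list-based versions of the `rotate_x`/`rotate_y` functions defined later, without the rounding.

```python
import math


def rot_x(theta, p):
    """Rotate point p = [x, y, z] by theta radians about the x axis."""
    x, y, z = p
    c, s = math.cos(theta), math.sin(theta)
    return [x, y * c - z * s, y * s + z * c]


def rot_y(theta, p):
    """Rotate point p = [x, y, z] by theta radians about the y axis."""
    x, y, z = p
    c, s = math.cos(theta), math.sin(theta)
    return [x * c + z * s, y, -x * s + z * c]


def length(p):
    return math.sqrt(sum(v * v for v in p))


# A quarter turn about x sends the y axis to the z axis,
# and a quarter turn about y sends the z axis to the x axis
print([round(v, 4) for v in rot_x(math.pi / 2, [0.0, 1.0, 0.0])])
print([round(v, 4) for v in rot_y(math.pi / 2, [0.0, 0.0, 1.0])])

# Rotations preserve length
p = [0.3, -0.2, 0.05]
assert math.isclose(length(rot_y(0.7, rot_x(-0.4, p))), length(p))
```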

Now we put this code together into functions. distance2points averages the displacement between a pair of points on the left side of the face with the corresponding pair on the right side:

```python
import math


def distance2points(p1, p2, p3, p4, shape, preangle1, preangle2):
    # Rotate all four landmarks so the face looks straight ahead
    p1 = rotate_y(preangle2, rotate_x(preangle1, p1))
    p2 = rotate_y(preangle2, rotate_x(preangle1, p2))
    p3 = rotate_y(preangle2, rotate_x(preangle1, p3))
    p4 = rotate_y(preangle2, rotate_x(preangle1, p4))
    # Average the p1->p2 and p3->p4 difference vectors (one pair per side of
    # the face), scale them to pixels and return the length
    return math.sqrt(pow((p1[0] - p2[0] + p3[0] - p4[0]) / 2 * shape[1], 2)
                     + pow((p1[1] - p2[1] + p3[1] - p4[1]) / 2 * shape[0], 2)
                     + pow((p1[2] - p2[2] + p3[2] - p4[2]) / 2 * (shape[0] + shape[1]) / 3, 2))


def rotate_x(theta, coords):
    # coords is a MediaPipe landmark with .x, .y and .z attributes
    x = coords.x
    y = coords.y
    z = coords.z
    cos_theta = math.cos(theta)
    sin_theta = math.sin(theta)
    return [round(x, 4),
            round(y * cos_theta - z * sin_theta, 4),
            round(y * sin_theta + z * cos_theta, 4)]


def rotate_y(theta, coords):
    # coords is the [x, y, z] list returned by rotate_x
    x = coords[0]
    y = coords[1]
    z = coords[2]
    cos_theta = math.cos(theta)
    sin_theta = math.sin(theta)
    return [round(x * cos_theta + z * sin_theta, 4),
            round(y, 4),
            round(-x * sin_theta + z * cos_theta, 4)]
```
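Note that distance2points adds the two difference vectors before taking the length, rather than averaging two separate distances. For a pair on one side of the face and its exact mirror image on the other, the horizontal components have opposite signs and cancel, so the result mostly reflects vertical (and depth) movement, which may be what you want for brow-raise units such as AU1. The self-contained sketch below illustrates this with made-up, perfectly symmetric points and no rotation; `summed_pair_distance` and `mirror` are hypothetical helpers, not part of the original code.

```python
import math


def summed_pair_distance(p1, p2, p3, p4, shape):
    """distance2points without the rotation step: length of the averaged difference vector."""
    height, width = shape[0], shape[1]
    dx = (p1[0] - p2[0] + p3[0] - p4[0]) / 2 * width
    dy = (p1[1] - p2[1] + p3[1] - p4[1]) / 2 * height
    dz = (p1[2] - p2[2] + p3[2] - p4[2]) / 2 * (height + width) / 3
    return math.sqrt(dx ** 2 + dy ** 2 + dz ** 2)


def mirror(p, axis_x=0.5):
    """Reflect a point across the vertical line x = axis_x."""
    return (2 * axis_x - p[0], p[1], p[2])


shape = (100, 100, 3)
left_outer, left_inner = (0.30, 0.20, 0.0), (0.40, 0.30, 0.0)
right_outer, right_inner = mirror(left_outer), mirror(left_inner)

# Only the vertical offset (0.1 * height = 10 px) survives; the x offsets cancel
print(summed_pair_distance(left_outer, left_inner, right_outer, right_inner, shape))
```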

Now we need to define all the Action Units.

Here is an example for Action Unit 1 (inner brow raiser):

```python
# Head orientation angles in radians (math.atan2 already returns radians)
preangle1 = math.atan2(face.landmark[148].z - face.landmark[10].z,
                       face.landmark[148].y - face.landmark[10].y)
preangle2 = math.atan2(face.landmark[1].z - face.landmark[10].z,
                       face.landmark[1].x - face.landmark[10].x)

# Head height in pixels: forehead (10) to the chin area (148 and 152)
headhdis = distance2points(face.landmark[148], face.landmark[10],
                           face.landmark[152], face.landmark[10],
                           shape, preangle1, preangle2)
# NOTE: as written, this uses the same landmarks as headhdis, so it equals
# headhdis; a real head width would need left/right face-edge landmarks
headwdis = distance2points(face.landmark[148], face.landmark[10],
                           face.landmark[152], face.landmark[10],
                           shape, preangle1, preangle2)

# AU1 (inner brow raiser): points 108/107 and 337/336 on the other side of the face
dis = distance2points(face.landmark[108], face.landmark[107],
                      face.landmark[337], face.landmark[336],
                      shape, preangle1, preangle2)

# Brow distance as a percentage of head height
ActU01 = (dis / headhdis) * 100
```

Once all the Action Units are defined, each one needs to be normalized between its neutral and maximum values so that it ranges from 0 to 1. Unfortunately, these values differ from person to person, so we can’t determine emotions from a single photo, but from a video, yes!
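One simple way to do this is min-max scaling between a neutral value and the largest value seen in the video, clipping anything below neutral to 0. The sketch below assumes you already have one Action Unit's value for every frame (for example, ActU01) and a neutral value, say one measured while the person is relaxed; `normalize_au` is a hypothetical helper, not the author's exact code. It also shows why a single photo is not enough: the maximum only exists once you have many frames.

```python
def normalize_au(values, neutral):
    """Scale per-frame Action Unit values to [0, 1].

    values: the Action Unit measured on each frame of a video.
    neutral: the value for a relaxed face; it maps to 0, the video maximum maps to 1.
    """
    peak = max(values)
    span = peak - neutral
    if span <= 0:
        # The unit never rose above neutral in this video
        return [0.0 for _ in values]
    # Clip to [0, 1] so frames below neutral do not go negative
    return [min(max((v - neutral) / span, 0.0), 1.0) for v in values]


print(normalize_au([10.0, 12.0, 15.0, 20.0, 8.0], neutral=10.0))
```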

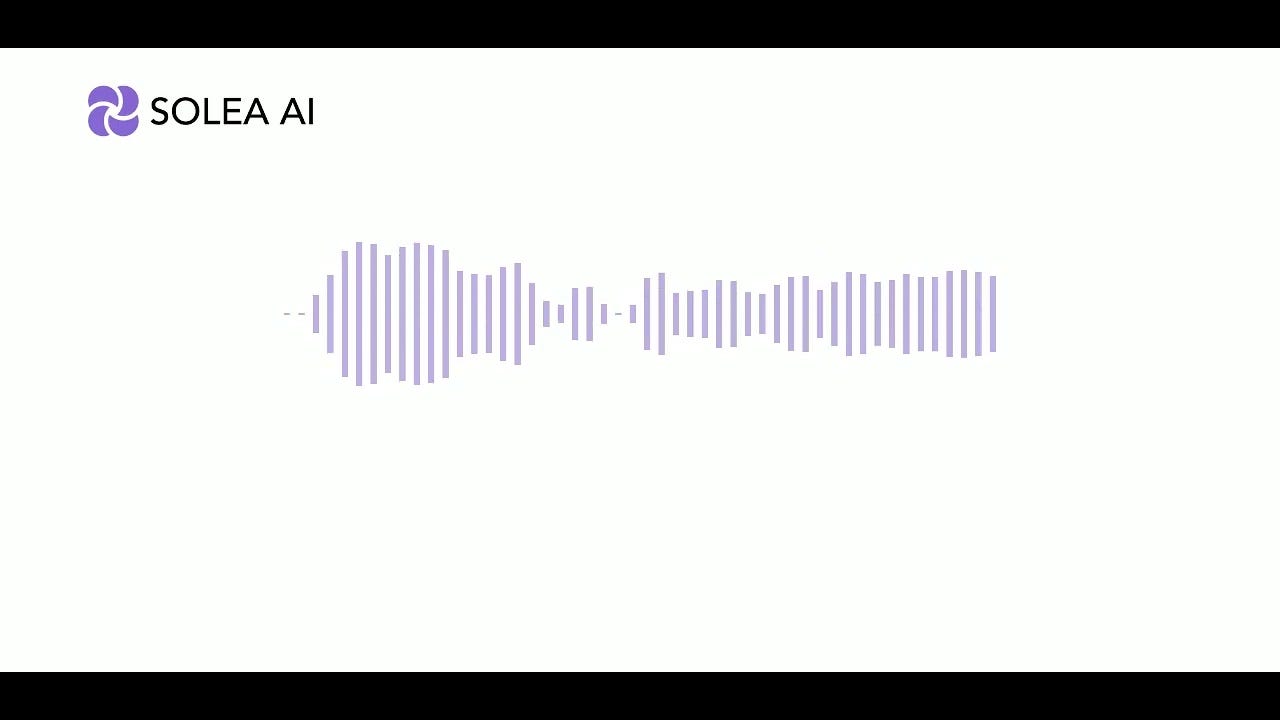

For testing, I used this video (to be honest, I could only recognize 2 of the emotions myself):

Result of Action Unit analysis:

Analysis of every 24th frame:

Analysis of all frames:

Let’s calculate emotions!

Knowing the muscle movements, we can determine emotions as combinations of Action Units:

- Happiness / Joy = 6 + 12
- Sadness = 1 + 4 + 15
- Surprise = 1 + 2 + 5 + 26
- Fear = 1 + 2 + 4 + 5 + 7 + 20 + 26
- Anger = 4 + 5 + 7 + 23
- Disgust = 9 + 15 + 16
- Contempt = 12 + 14
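A minimal way to turn these combinations into scores, assuming each Action Unit has already been normalized to [0, 1], is to average the units in each combination and pick the highest-scoring emotion. The averaging, the 0.5 threshold and the names `EMOTIONS`, `emotion_scores` and `dominant_emotion` are illustrative assumptions, not the author's exact method.

```python
# Action Unit combinations from the list above
EMOTIONS = {
    "happiness": [6, 12],
    "sadness": [1, 4, 15],
    "surprise": [1, 2, 5, 26],
    "fear": [1, 2, 4, 5, 7, 20, 26],
    "anger": [4, 5, 7, 23],
    "disgust": [9, 15, 16],
    "contempt": [12, 14],
}


def emotion_scores(au):
    """Average intensity of each emotion's Action Units.

    au: dict mapping Action Unit number -> normalized intensity in [0, 1];
    missing units count as 0.
    """
    return {name: sum(au.get(unit, 0.0) for unit in units) / len(units)
            for name, units in EMOTIONS.items()}


def dominant_emotion(au, threshold=0.5):
    """Highest-scoring emotion, or "neutral" if nothing reaches the threshold."""
    scores = emotion_scores(au)
    name = max(scores, key=scores.get)
    return name if scores[name] >= threshold else "neutral"


frame_aus = {6: 1.0, 12: 0.8}  # made-up values: cheek raiser and lip corner puller active
print(emotion_scores(frame_aus))
print(dominant_emotion(frame_aus))
```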

And the result!

Our algorithm was able to recognize 5 of the 7 emotions! It could not recognize fear or surprise, but I think the problem lies in the incorrect placement of some points on the face.

Conclusion

In this article, we got an algorithm to recognize emotions that can be difficult even for an ordinary person to recognize. Such an algorithm could find its place in many projects: unlike AI trained on still pictures, it works on video and may be able to pick up micro-expressions.