I've been experimenting with AWS for the last few months and am only now realizing how powerful cloud services are. As part of the Cloud Data Engineering specialization taught by Professor Noah Gift, I implemented and deployed a serverless image-labeling web service using AWS S3, Lambda, and Rekognition (link).

In this article, I will walk through how I implemented this service step by step, in the hope that people find it helpful and can follow along.

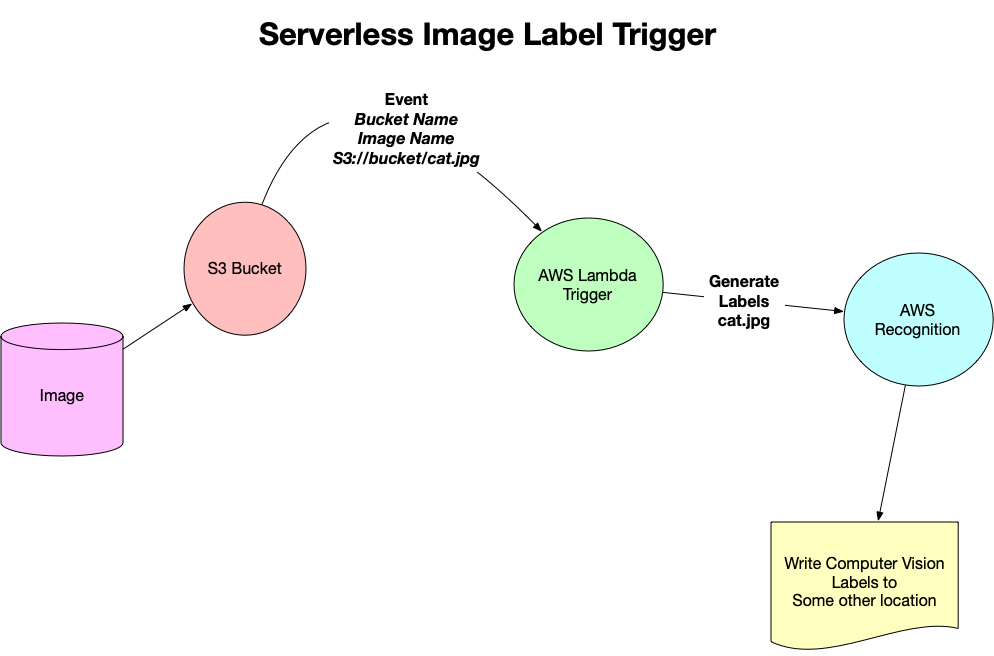

Here is a nice diagram by Noah Gift displaying the pipeline:

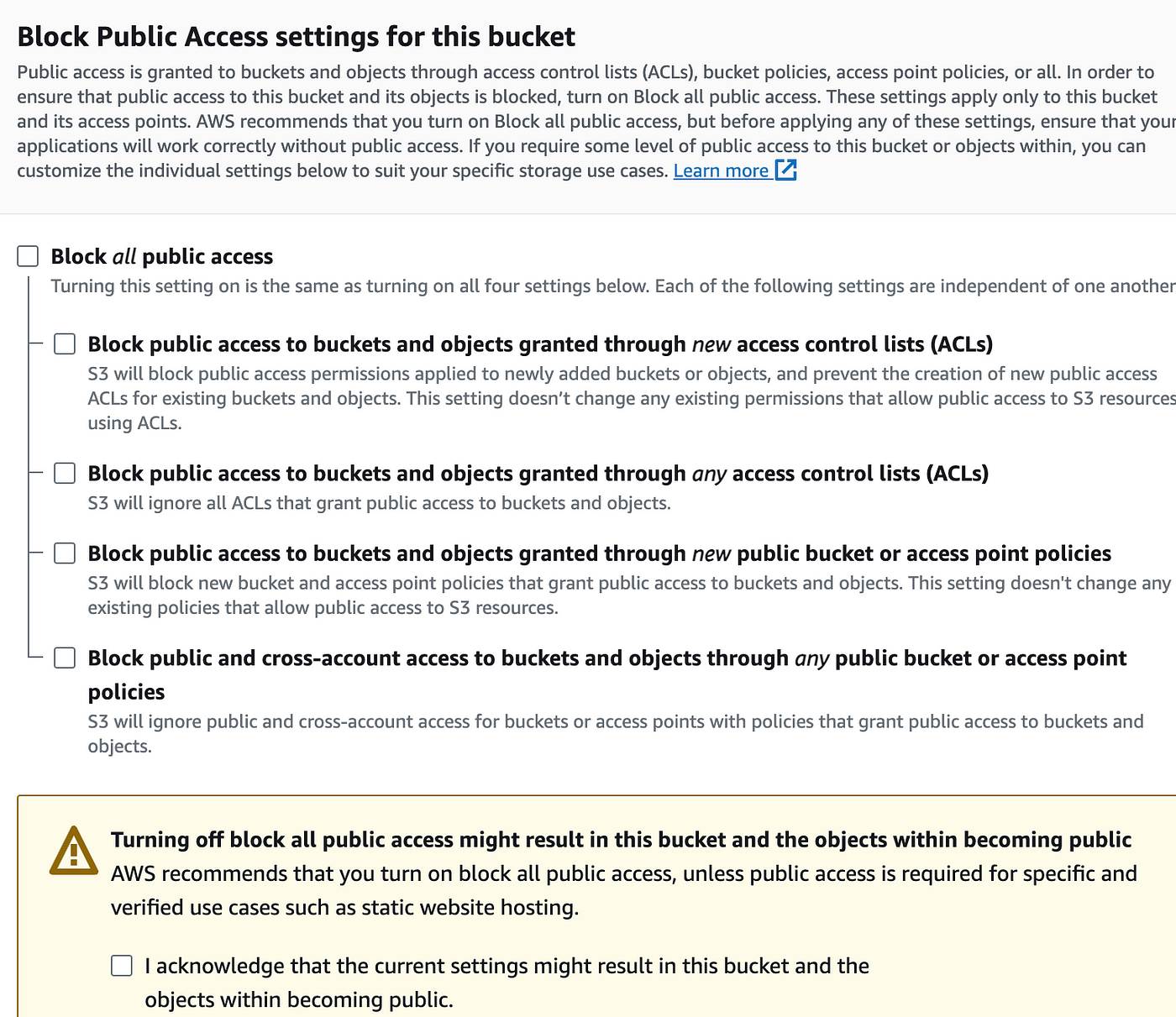

First of all, we need to set up an S3 bucket where we will store our images. You can do this by opening the AWS console and simply creating a new bucket. I chose the default settings except for the permissions section.

The objects in this bucket should be accessible to others. This will make sense when we write the labels back to the same bucket. A better method would probably be to block all public access and explicitly grant access through a policy on the Identity Pool.
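If you would rather script this step than click through the console, here is a minimal sketch using boto3. The helper name `create_image_bucket` is my own invention, and the client is passed in as an argument so the function can be tried out without AWS access. It only creates the bucket and does not touch the permission settings discussed above.

```python
def create_image_bucket(s3_client, bucket_name, region):
    """Create an S3 bucket in the given region and return the request arguments.

    `s3_client` is expected to be a boto3 S3 client, for example
    boto3.client("s3", region_name=region).
    """
    kwargs = {"Bucket": bucket_name}
    # us-east-1 is the default location and must not be passed as a
    # LocationConstraint; every other region has to be named explicitly.
    if region != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
    s3_client.create_bucket(**kwargs)
    return kwargs
```

With real credentials, the call would look something like `create_image_bucket(boto3.client("s3", region_name="us-east-2"), "your-bucket-name", "us-east-2")`, where the bucket name and region are placeholders.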

Next, let's set up the Lambda function. Again, you can do so by opening the AWS console and creating a new Lambda function with a Python runtime. Here is the Python code that I used: link

```python
import boto3
from urllib.parse import unquote_plus


def label_function(bucket, name):
    """This takes an S3 bucket and an image name!"""
    print(f"This is the bucketname {bucket} !")
    print(f"This is the imagename {name} !")
    rekognition = boto3.client("rekognition")
    response = rekognition.detect_labels(
        Image={
            "S3Object": {
                "Bucket": bucket,
                "Name": name,
            }
        },
    )
    labels = response["Labels"]
    print(f"I found these labels {labels}")
    return labels


def lambda_handler(event, context):
    """This is a computer vision lambda handler"""
    print(f"This is my S3 event {event}")
    my_labels = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        print(f"This is my bucket {bucket}")
        # Object keys arrive URL-encoded in S3 events, so decode them first
        key = unquote_plus(record["s3"]["object"]["key"])
        print(f"This is my key {key}")
        # Skip the label files this function writes back to the same bucket;
        # without a suffix filter on the trigger, each one would invoke it again
        if key.endswith(".json"):
            continue
        my_labels = label_function(bucket=bucket, name=key)
        if my_labels:
            print("This is a picture of", my_labels[0]["Name"])
        upload_file(my_labels, key, bucket)  # defined further down
    return my_labels
```

The code above is triggered by an "event", which in our case is a Put action on the S3 bucket we just created. So whenever a new object is uploaded to the bucket, this Lambda function runs, and you can access information about the upload through the event object passed into lambda_handler. In the code above, we parse the bucket name and the key (the file name) of the uploaded object from that event object.
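To make the shape of that event object more concrete, here is a small sketch that runs the same parsing logic on a trimmed-down, made-up event. The helper `extract_uploads` and the sample values are mine; a real event carries many more fields.

```python
from urllib.parse import unquote_plus

# Trimmed-down, made-up example of the event S3 sends to Lambda on upload.
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "your-bucket-name"},
                "object": {"key": "my+cat+%281%29.jpg", "size": 1024},
            },
        }
    ]
}


def extract_uploads(event):
    """Return (bucket, key) pairs for every record in an S3 event."""
    uploads = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the event: spaces become "+", "(" becomes "%28"
        key = unquote_plus(record["s3"]["object"]["key"])
        uploads.append((bucket, key))
    return uploads


print(extract_uploads(sample_event))
```

This is why the handler calls `unquote_plus` on the key: a file uploaded as `my cat (1).jpg` shows up encoded in the event, and Rekognition needs the decoded name to find the object.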

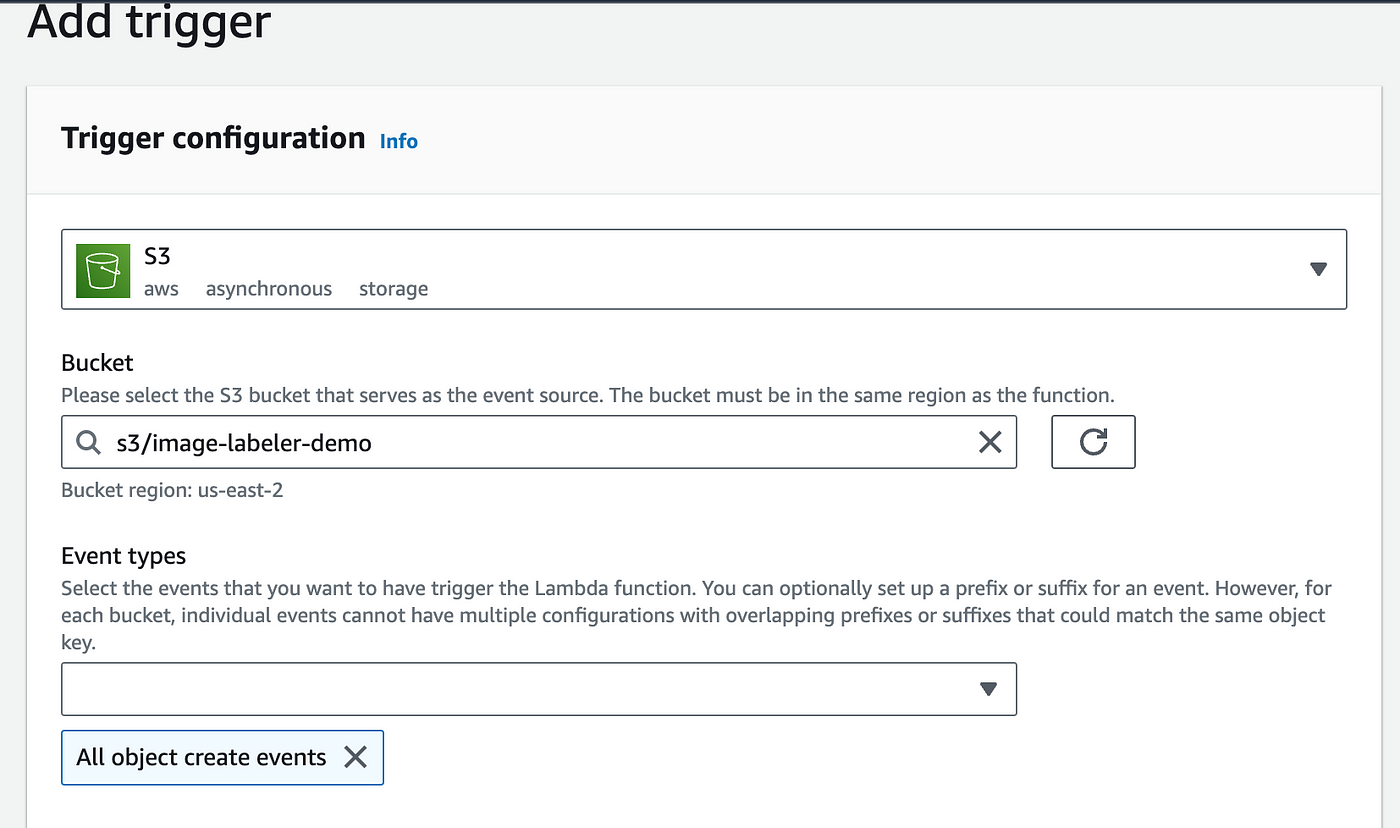

So how does this lambda know to get triggered by that bucket?

You have to explicitly add the trigger on the AWS Console.

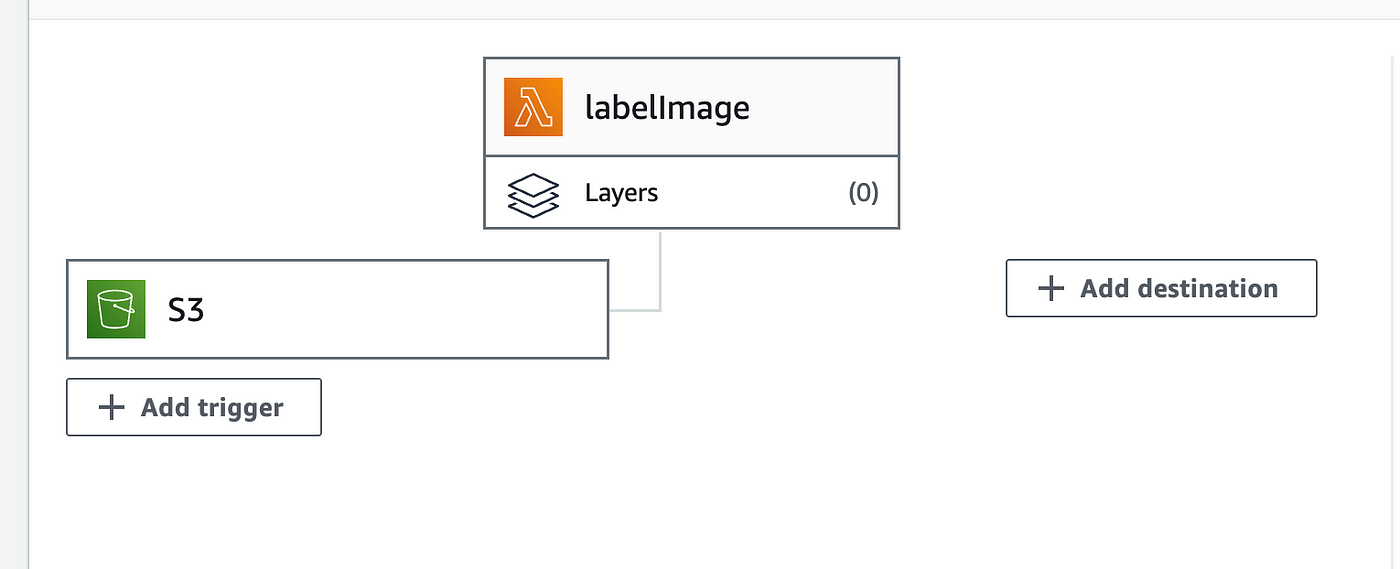
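The same trigger can also be attached from code. Below is a rough sketch that builds the notification configuration expected by boto3's `put_bucket_notification_configuration`. The function names and the example ARN are hypothetical, and the suffix filter is my own addition (not something from the console setup above) so that the JSON label files written back to the bucket do not invoke the function again.

```python
def build_trigger_config(function_arn, suffixes=(".jpg", ".png")):
    """Build an S3 notification configuration that invokes a Lambda on Put."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": function_arn,
                "Events": ["s3:ObjectCreated:Put"],
                # A filter holds a single suffix rule, so emit one entry per
                # image extension; .json label files match none of them.
                "Filter": {
                    "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
                },
            }
            for suffix in suffixes
        ]
    }


def add_trigger(s3_client, bucket_name, function_arn):
    """Attach the trigger; `s3_client` is expected to be boto3.client("s3")."""
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket_name,
        NotificationConfiguration=build_trigger_config(function_arn),
    )
```

Two caveats if you go this route: this call replaces whatever notification configuration the bucket already has, and unlike the console it does not grant S3 permission to invoke the function, which needs a separate Lambda `add_permission` call.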

After this, your lambda interface should look like this:

To test whether this workflow works, AWS CloudWatch provides extremely useful logs. Make sure to use plenty of print statements in the lambda_handler so that you can follow each step in the CloudWatch logs.
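If the print output gets hard to scan, one option is to log a single JSON line per step through Python's `logging` module, which Lambda also forwards to CloudWatch. This is just a sketch of that idea; the logger name and the `log_step` helper are made up for illustration.

```python
import json
import logging

logger = logging.getLogger("image_labeler")
logger.setLevel(logging.INFO)


def log_step(step, **fields):
    """Log one JSON line per step so CloudWatch entries are easy to filter."""
    line = json.dumps({"step": step, **fields}, sort_keys=True)
    logger.info(line)
    return line
```

Inside the handler this could be called as `log_step("received", bucket=bucket, key=key)`, and the resulting lines can then be searched by step name in CloudWatch.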

Now let's talk briefly about how we're going to deliver the labels to the client-side.

The workflow I came up with is probably not ideal, but it's simple enough to get the demo done. After I get the labels for the uploaded image from Rekognition, I write a JSON file containing all the labels back to the same S3 bucket. I do so by again using boto3 to set up an S3 client and calling its put_object() function. Make sure to read the boto3 docs for the specifics.

```python
import json

import boto3
from botocore.exceptions import ClientError


def upload_file(label, key, bucket):
    """Upload the labels to an S3 bucket as a JSON file

    :param label: Content of the new file, JSON-serializable labels
    :param key: Name of image file
    :param bucket: Bucket to upload to
    :return: True if file was uploaded, else False
    """
    # "cat.jpg" -> "cat.json"; the client derives the same name when polling
    file_name = key.split(".")[0] + ".json"
    print("filename is", file_name)
    print("bucket is", bucket)

    # Upload the file
    s3_client = boto3.client("s3")
    try:
        s3_client.put_object(Bucket=bucket, Key=file_name, Body=json.dumps(label))
    except ClientError as error:
        print("upload failed:", error)
        return False
    return True
```
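The raw Rekognition labels carry more detail than the client really needs. As an optional refinement, here is a sketch that trims each label down to its name and confidence before it is serialized. The helper `summarize_labels`, the 80% threshold, and the example labels are all my own assumptions, not part of the original service.

```python
import json


def summarize_labels(labels, min_confidence=80.0):
    """Keep only the name and rounded confidence of sufficiently confident labels."""
    return [
        {"Name": label["Name"], "Confidence": round(label["Confidence"], 1)}
        for label in labels
        if label["Confidence"] >= min_confidence
    ]


# Made-up labels in the shape detect_labels returns under "Labels"
example_labels = [
    {"Name": "Cat", "Confidence": 98.76, "Instances": [], "Parents": [{"Name": "Animal"}]},
    {"Name": "Sofa", "Confidence": 61.2, "Instances": [], "Parents": []},
]

print(json.dumps(summarize_labels(example_labels)))
```

The result could be passed to the upload function in place of the full label list. Rekognition's `detect_labels` also accepts `MaxLabels` and `MinConfidence` parameters if you prefer to filter on the service side.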

Now the last thing we need to do is integrate the client side with the S3 bucket so we can upload images and get their labels. I deployed this on my personal page using Next.js and React.

First, set up AWS credentials in your functional component:

```javascript
const AWS = require("aws-sdk");

const bucketRegion = "us-east-2";
const bucketName = "your-bucket-name";
const IdentityPoolId = "your-identity-pool";

// Use the Cognito identity pool to obtain temporary guest credentials
AWS.config.update({
  region: bucketRegion,
  credentials: new AWS.CognitoIdentityCredentials({
    IdentityPoolId: IdentityPoolId,
  }),
});

// S3 client bound to our bucket by default
const s3 = new AWS.S3({
  apiVersion: "2006-03-01",
  params: { Bucket: bucketName },
});
```

Make sure you change the bucket region, bucket name, and identity pool ID to match your setup. The trickiest thing here is the concept of an identity pool. You can create a guest identity pool on the AWS console: link. A very important step that took me a few hours to figure out is assigning the correct role to this identity pool. You basically want this identity pool to have full access to your S3 bucket. To do so, open the IAM role assigned to the guest (unauthenticated) identities of the identity pool you created and add the following policy:

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "s3:DeleteObject",
        "s3:GetObject",
        "s3:ListBucket",
        "s3:PutObject",
        "s3:PutObjectAcl"
      ],
      "Resource": ["arn:aws:s3:::bucket-name", "arn:aws:s3:::bucket-name/*"]
    }
  ]
}
```

Again, make sure to change the S3 bucket name to your case.
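Since the client in this demo only uploads images and reads the label files, a narrower policy may be enough. The sketch below generates such a policy document for a given bucket name. The helper `build_bucket_policy` and the choice of actions are my assumptions and I have not tested them against this exact setup, so treat it as a starting point.

```python
import json


def build_bucket_policy(bucket_name, actions=("s3:GetObject", "s3:PutObject")):
    """Return an IAM policy document limited to the given actions on one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": list(actions),
                # Object-level actions apply to the objects, hence the "/*"
                "Resource": [f"arn:aws:s3:::{bucket_name}/*"],
            }
        ],
    }


print(json.dumps(build_bucket_policy("your-bucket-name"), indent=2))
```

One thing to keep in mind: a HEAD request is authorized by `s3:GetObject`, and without `s3:ListBucket` a missing object is reported as a 403 rather than a 404. A client that retries on any error keeps working either way, but the error it logs will look different.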

Now we can finally upload images and retrieve labels from our S3 bucket. Here is an example of how I did it:

```javascript
// `image` and `setLabels` are assumed to come from the component's state

// GET request to S3 bucket with params
const getLabel = (params) => {
  s3.getObject(params, (err, data) => {
    if (err) {
      console.log(err, err.stack); // an error occurred
    } else {
      const jsonData = JSON.parse(data.Body.toString("utf-8"));
      console.log(jsonData);
      setLabels(jsonData);
    }
  });
};

// Polls the bucket until the file specified in the params exists
const pollForLabel = (params) => {
  s3.headObject(params, (err, data) => {
    if (err) {
      // If the object doesn't exist yet, retry after a delay
      console.log("file doesn't exist yet. fetching again");
      setTimeout(() => pollForLabel(params), 1000); // You can adjust the polling interval as needed
    } else {
      // The label file exists, fetch its content
      getLabel(params);
    }
  });
};

// Uploads the input image to S3 bucket, then polls for the label
const uploadImage = (params) => {
  s3.upload(params, (err, data) => {
    if (err) {
      console.error("Error uploading image: ", err);
    } else {
      console.log("Image uploaded successfully. Image URL:", data.Location);
      // retrieve the image label JSON file from the same bucket
      const fileName = image.name.split(".")[0] + ".json";
      console.log("json file", fileName);
      const paramsRetrieve = {
        Bucket: bucketName, // same bucket the image was uploaded to
        Key: fileName,
      };
      pollForLabel(paramsRetrieve);
    }
  });
};
```

You probably noticed that I use a band-aid solution to get the labels. The pollForLabel() function makes a HEAD request to the bucket every second until the label JSON file exists, and then fetches it. This is because the label JSON is generated asynchronously, so we have to keep checking until it appears. A better workflow would be to upload to S3 and receive the labels directly from the Lambda function using API Gateway, but that is for another lesson.
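One weakness of the polling approach as written is that it retries forever if the label file never shows up, for example when the Lambda fails. Here is a sketch of the same idea with a cap on attempts and a growing delay, written in Python to match the Lambda code rather than the React client. The function `poll_until` and its parameters are hypothetical; `check` stands in for something like a wrapped `head_object` call that returns None while the file is missing.

```python
import time


def poll_until(check, max_attempts=10, first_delay=0.5, factor=2.0, sleep=time.sleep):
    """Call `check()` until it returns a non-None value or attempts run out.

    Waits first_delay, then first_delay * factor, and so on between attempts.
    Returns the value from `check`, or None if it never became available.
    """
    delay = first_delay
    for attempt in range(max_attempts):
        result = check()
        if result is not None:
            return result
        # No point in sleeping after the final attempt
        if attempt < max_attempts - 1:
            sleep(delay)
            delay *= factor
    return None
```

The same structure carries over to the JavaScript client by passing an attempt counter and the current delay along with each `setTimeout` call.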