I see that the rule has removed 30,222,969 objects since 2/20. I would say give it a few more days and it should empty the buckets.

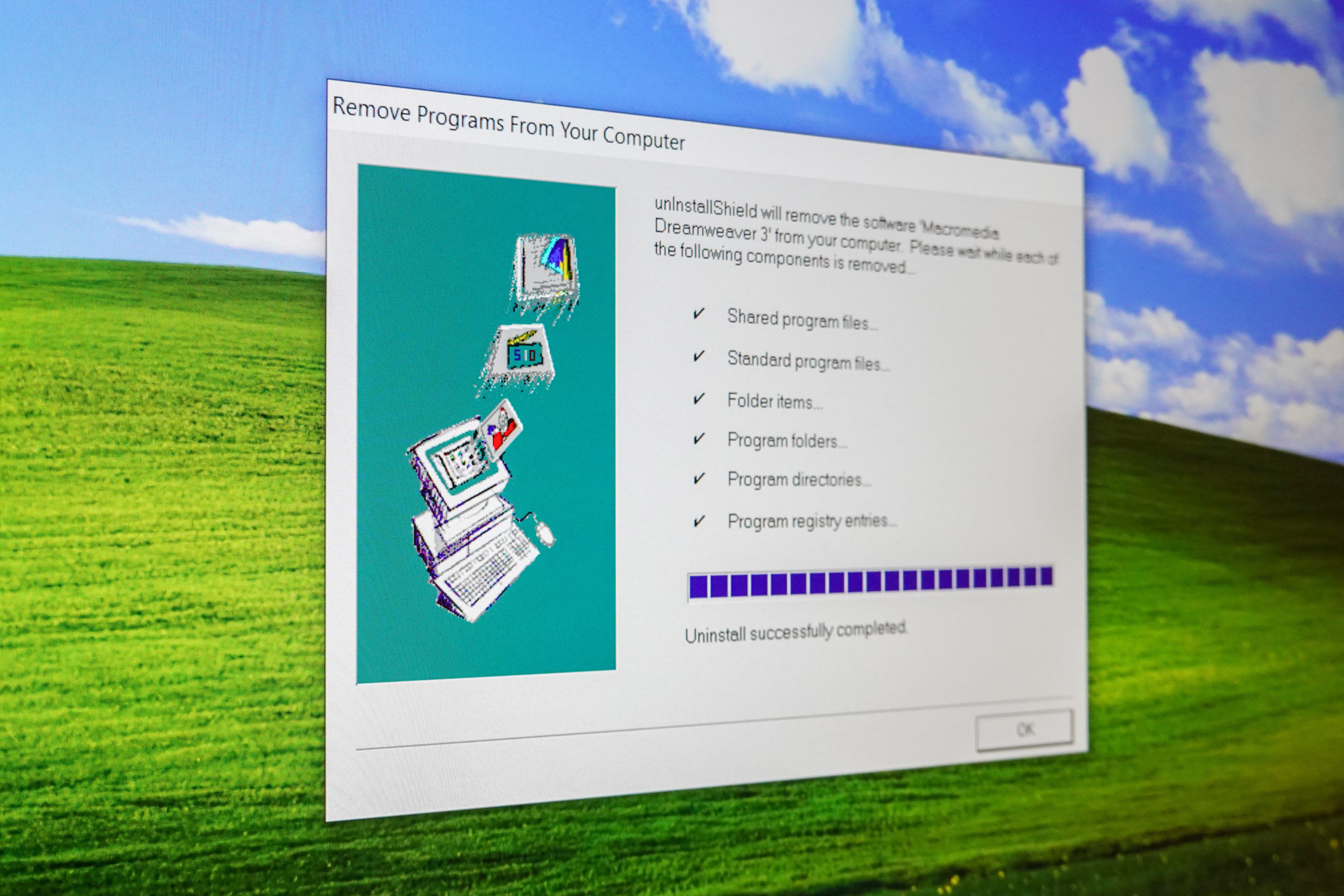

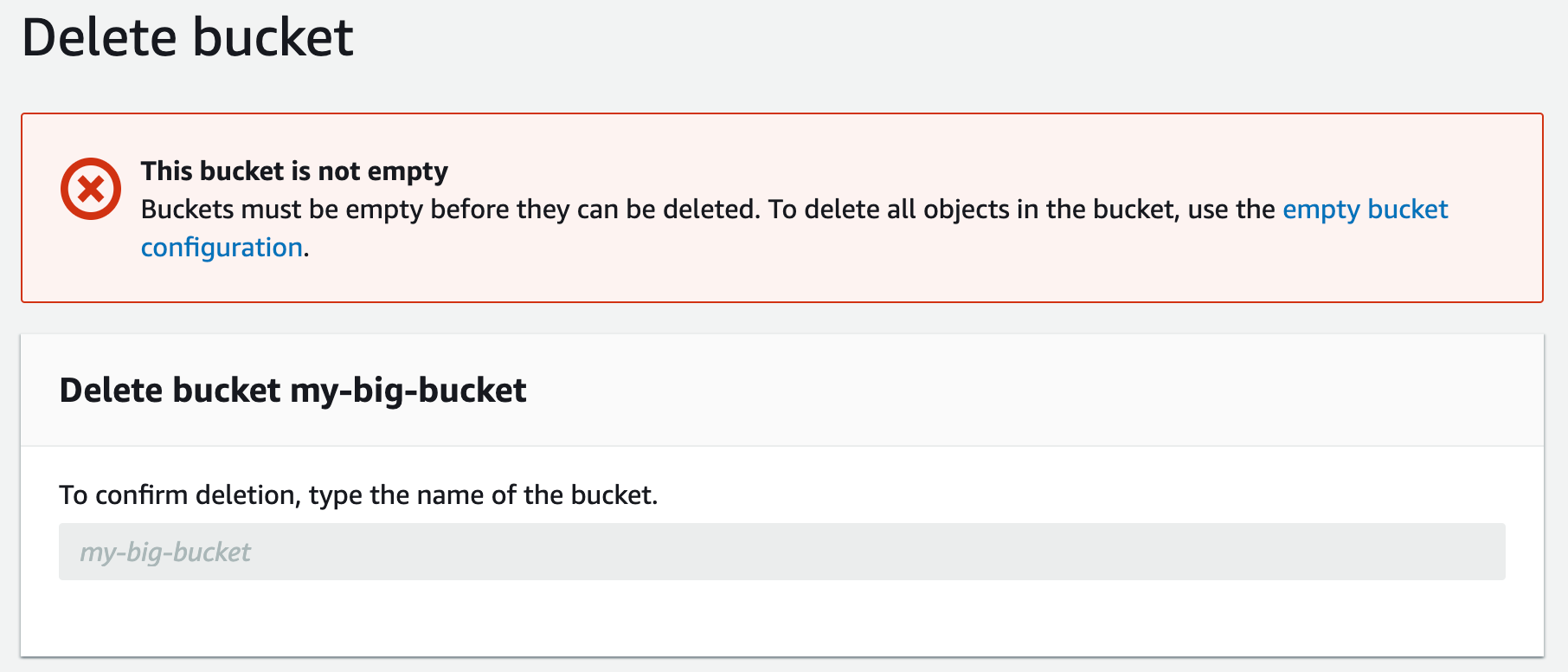

So I was cleaning up some S3 buckets. These buckets, for better or for worse, had versioning enabled, and each contained hundreds of thousands, if not millions, of objects. AWS does not allow you to delete non-empty buckets in one go, and definitely not buckets with versioning on: you have to remove all of the objects first (docs here).

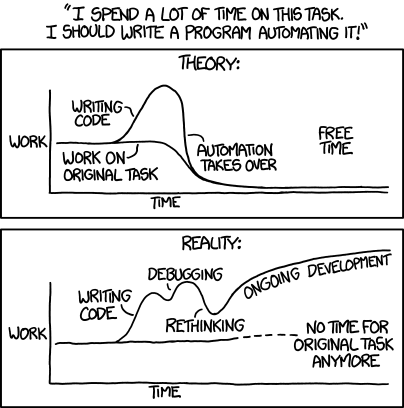

The fact that there is no rm -rf in AWS S3 feels so bizarre! I knew I had to share my findings in what was meant to be a quick blog on automation.

2 days, 3 versions of the script, a chat to our AWS account manager, and a support case later — the task is (almost) done.

TL;DR

If your environment does not expire STS session tokens after an hour, or your bucket contains fewer than a million objects, use one of the scripts below. Otherwise, set up a lifecycle policy that deletes all objects, wait about a week, and then delete the bucket. An example policy is at the end of the article.

Nope, you can’t just delete a non-empty S3 bucket

Deleting S3 buckets, option 1: out-of-the-box tools

The easiest way to empty an S3 bucket is to use the Empty action on the bucket in the AWS console, or to use the AWS CLI:

aws s3 rb s3://$bucket --force

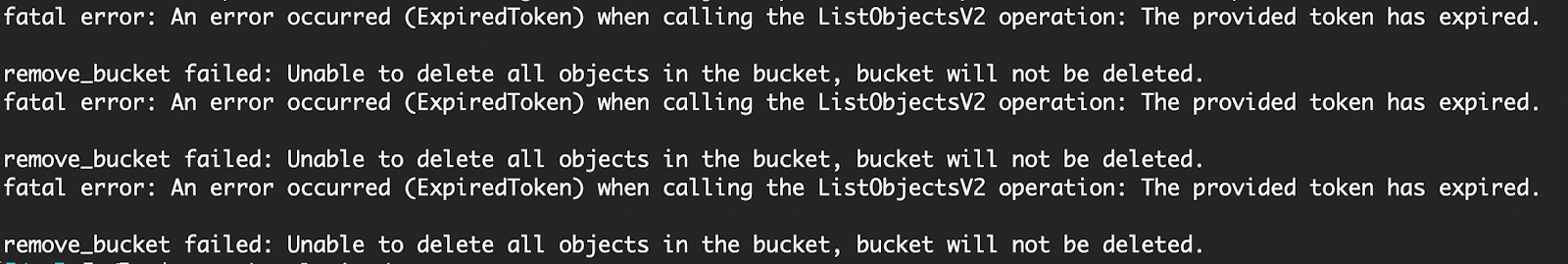

So I tried both. The CLI ran for an hour and... my STS token timed out. The console method worked fine for a small-ish bucket, but on a bucket with more than 1M files it errored out after a few hours without a useful explanation.

According to AWS support, it can take up to several days for the bucket to be emptied! What if you need it to be done sooner?

Deleting S3 buckets, option 2: automation!

Anything that can be done via the CLI can be automated. All you need is an orchestrator that is trusted by your AWS accounts and able to run a long-lived job (Jenkins, Rundeck, Azure DevOps, what have you) and a couple of lines of Bash.

The script you’re about to see does the following:

- Assumes a role that can control AWS resources

- Finds all object versions in the bucket, and lists the Key and VersionId in a file

- Deletes chunks of 1000 objects (the maximum you can pass to the AWS API), assuming the role again whenever 55 min has elapsed

- Force-deletes the bucket at the end

55 min is relevant to an environment where the STS token expires within 1 hour. Frankly, it could be 58 min, leaving just enough time to run assume-role again. The trick is to renew the credentials *before* they expire so that the CLI can continue.

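We will make use of the magic of the date command and compare timestamps. The --date option used below belongs to GNU date, so it works on Linux as is; on macOS the built-in BSD date needs -v-55M instead, or you can install GNU coreutils and call gdate: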

alive_since=$(date +%Y-%m-%d-%T)

cut_off_time=$(date --date='55 minutes ago' +%Y-%m-%d-%T)

if [ "${cut_off_time}" \> "${alive_since}" ]; then

# Your time is up: renew the credentials here

do_something

fi
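The same check can be sketched in Python, where it does not depend on which flavour of date is installed. This is a minimal sketch rather than part of the original script: the TokenClock name and its injectable now parameter are assumptions made for illustration, and 55 minutes is simply the margin used in this article.

```python
import time

RENEW_AFTER_SECONDS = 55 * 60  # renew 5 minutes before a 1-hour token expires


class TokenClock:
    """Tracks how long ago credentials were obtained (hypothetical helper)."""

    def __init__(self, renew_after=RENEW_AFTER_SECONDS, now=time.monotonic):
        self._renew_after = renew_after
        self._now = now  # injectable so the logic can be exercised without waiting
        self._alive_since = now()

    def needs_renewal(self):
        """Return True once the credentials are older than the threshold."""
        return self._now() - self._alive_since >= self._renew_after

    def reset(self):
        """Call this right after assuming the role again."""
        self._alive_since = self._now()
```

In a deletion loop you would call needs_renewal() before each batch, and log in again followed by reset() whenever it returns True.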

For convenience, wrap the AWS login commands into a function called aws_login.
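The body of aws_login depends on how your environment hands out credentials, so it is not shown here. As a rough idea, a Python equivalent might look like the sketch below, assuming the role is assumed through STS. The session name and the one-hour duration are illustrative assumptions, and the STS client is passed in so the function can be tried against a stub.

```python
def aws_login(sts_client, role_arn, session_name="bucket-cleanup"):
    """Assume role_arn and return keyword arguments for boto3.Session().

    sts_client is expected to behave like boto3.client("sts").
    session_name is a hypothetical label; pick whatever your audit trail needs.
    """
    response = sts_client.assume_role(
        RoleArn=role_arn,
        RoleSessionName=session_name,
        DurationSeconds=3600,  # one hour, the limit this article works around
    )
    creds = response["Credentials"]
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }
```

With boto3 installed, boto3.Session(**aws_login(boto3.client("sts"), role_arn)) would then give a session that uses the temporary credentials.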

The script itself looks like this! Paste it into your orchestrator of choice, and voila — it will silently delete the bucket with all its objects and versions.

# Assume role and note the timestamp - it will be used to check if the token needs renewal

aws_login

alive_since=$(date +%Y-%m-%d-%T)

# Source File Name, will contain all versions of objects in the bucket

SRCFN=/tmp/dump_file

# File Name will list chunks of object versions for deletion in an iteration

FN=/tmp/todelete

# Suspend versioning on the bucket so that new versions don't appear as we delete

aws s3api put-bucket-versioning --bucket "$BUCKET" --versioning-configuration Status=Suspended

# Get all versions of all objects in the bucket

# This is what will time out if there are more than 1M objects/versions

# Note: this query lists object versions only, not delete markers

aws s3api list-object-versions --bucket "$BUCKET" --output json --query 'Versions[].{Key: Key, VersionId: VersionId}' > $SRCFN

index=0

# How many object versions in total??

total=$(grep -c VersionId $SRCFN)

# Go through the list in $SRCFN and delete chunks of 1000 objects until there's nothing left

while [ $index -lt $total ] ; do

# Check if it’s been more than 55 minutes since we assumed role

# Renew if it has, and reset the alive_since timestamp

CUT_OFF_TIME=$(date --date='55 minutes ago' +%Y-%m-%d-%T)

if [ "${CUT_OFF_TIME}" \> "${alive_since}" ]; then

aws_login

alive_since=$(date +%Y-%m-%d-%T)

fi

# jq slices exclude the end index, so [index:index+1000] selects exactly 1000 entries

((e=index+1000))

echo "Processing $index to $((e-1))"

# Get a list of objects from $index to $index+999, formatted for the delete-objects AWS API

(echo -n '{"Objects":';jq ".[$index:$e]" < $SRCFN 2>&1 | sed 's#]$#] , "Quiet":true}#') > $FN

# Delete the chunk

aws s3api delete-objects --bucket "$BUCKET" --delete file://$FN && rm $FN

# Continue from the first entry that was not part of this chunk

((index=e))

sleep 1

done

# Normally by now the bucket is empty, force delete it

aws s3 rb s3://${BUCKET} --force

Of course, you can amend the script to run a for loop over multiple buckets if needed. Just be careful not to nuke extra resources!

If you use Jenkins, let me save you some time in writing a pipeline:

pipeline {

agent {

// You don't have to run in docker if your Jenkins is allowed to use aws cli

docker {

image 'amazon/aws-cli'

args ' --entrypoint="" --user=root'

}

}

parameters {

string(name: 'BUCKET', description: 'Bucket name to delete')

string(name: 'ROLE_ARN', description: 'IAM role that has access to the bucket')

}

stages {

stage('Script') {

steps {

script {

sh '''

yum -y install jq >> /dev/null

####################################

# PASTE THE SCRIPT FROM ABOVE HERE #

####################################

'''

}

}

}

}

}

Deleting S3 buckets, option 3: Python

If the number of objects in your bucket is relatively small (i.e. not millions), you can use this short and sweet Python script:

#!/usr/bin/env python

import sys

import boto3

# Take the bucket name from command line args

BUCKET = sys.argv[1]

s3 = boto3.resource('s3')

bucket = s3.Bucket(BUCKET)

# Delete all object versions in the bucket

bucket.object_versions.delete()

# Delete the bucket

bucket.delete()

This script has appeared on the web countless times, and I definitely do not claim any credit for it. It works nicely until you have several million objects, a timeout on AWS tokens... yeah, yeah, we've heard all that already.
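For comparison with the Bash version, here is a sketch of the same chunked approach in Python. It deletes each batch as soon as it has been listed, and it also picks up delete markers. The function names are made up for illustration, the client argument is expected to behave like boto3.client("s3"), and the sketch does not renew credentials, so the one-hour limit still applies. I have not run it against a multi-million-object bucket.

```python
from itertools import islice

MAX_KEYS_PER_DELETE = 1000  # upper limit for a single delete-objects request


def iter_versions(client, bucket):
    """Yield Key/VersionId pairs for every object version and delete marker."""
    paginator = client.get_paginator("list_object_versions")
    for page in paginator.paginate(Bucket=bucket):
        for entry in page.get("Versions", []) + page.get("DeleteMarkers", []):
            yield {"Key": entry["Key"], "VersionId": entry["VersionId"]}


def chunked(iterable, size):
    """Split any iterable into lists of at most `size` items."""
    iterator = iter(iterable)
    while True:
        chunk = list(islice(iterator, size))
        if not chunk:
            return
        yield chunk


def delete_all_versions(client, bucket):
    """Delete everything in the bucket batch by batch; return the number of keys sent."""
    sent = 0
    for chunk in chunked(iter_versions(client, bucket), MAX_KEYS_PER_DELETE):
        response = client.delete_objects(
            Bucket=bucket,
            Delete={"Objects": chunk, "Quiet": True},
        )
        # In quiet mode the response only lists the keys that failed
        errors = response.get("Errors", [])
        if errors:
            raise RuntimeError(f"{len(errors)} deletions failed, first: {errors[0]}")
        sent += len(chunk)
    return sent
```

Calling delete_all_versions(boto3.client("s3"), "my-bucket") would empty the bucket, after which bucket deletion can proceed as in the short script above.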

Deleting S3 buckets… Just please empty my sodding bucket, AWS!

So far, the extra-large number of objects, combined with a fixed credential lifetime, made all of those methods fail. Even the Bash script from option 2, which was supposed to handle such a scenario, did not survive. Why? Because aws s3api list-object-versions takes longer than an hour when the bucket has more than 1M objects, so the token expires before the first deletion even starts.

The last available option is through S3 bucket lifecycle policies (official doc here).

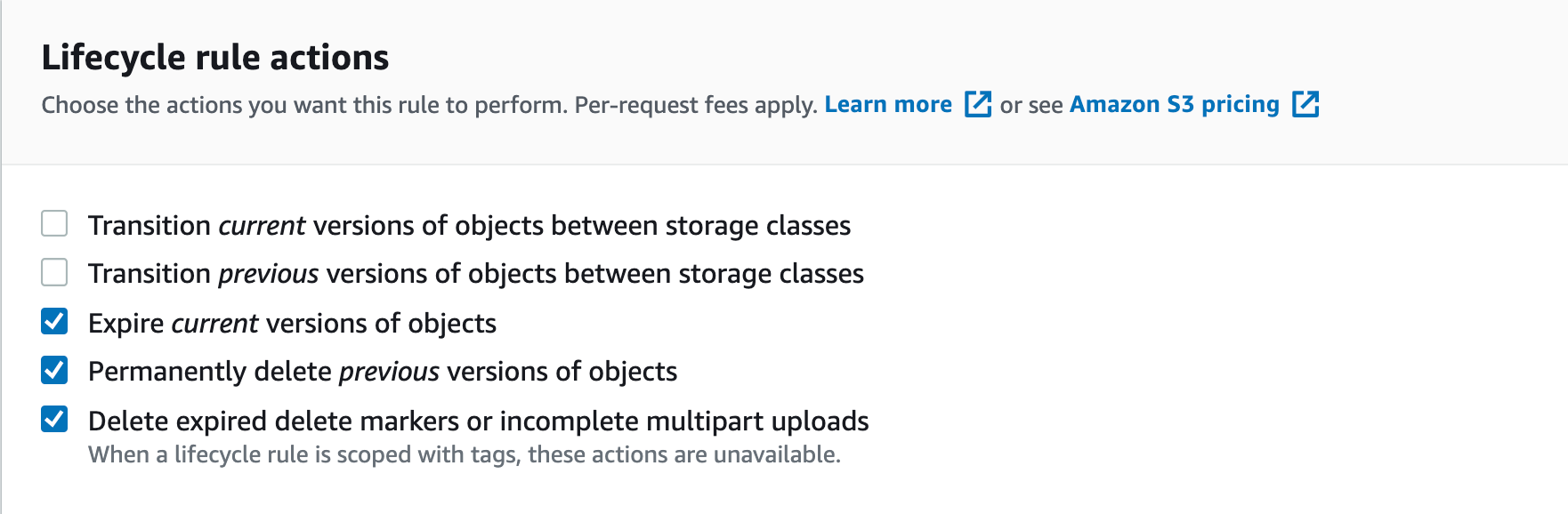

You will go to the bucket -> Management tab -> create a new lifecycle policy. Check This rule applies to all objects in the bucket, tick the confirmation box; then select the following Lifecycle rule actions:

- Expire current versions of objects

- Permanently delete previous versions of objects

- Delete expired delete markers or incomplete multipart uploads

Enter 1 in each of Number of days after object creation, Number of days after objects become previous versions, and Number of days on Delete incomplete multipart uploads.
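If you would rather not click through the console, the same three settings can be expressed as a lifecycle configuration and applied through the API. The sketch below is my translation of the console choices above, not a policy exported from the original buckets. The rule ID is a made-up name, and client is expected to behave like boto3.client("s3"). Note that putting a lifecycle configuration replaces whatever configuration the bucket already has.

```python
def empty_bucket_lifecycle(days=1):
    """Build a lifecycle configuration that expires everything after `days` days."""
    return {
        "Rules": [
            {
                "ID": "empty-bucket",  # hypothetical rule name
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: the rule covers all objects
                # Expire current versions of objects
                "Expiration": {"Days": days},
                # Permanently delete previous (noncurrent) versions
                "NoncurrentVersionExpiration": {"NoncurrentDays": days},
                # Clean up incomplete multipart uploads
                "AbortIncompleteMultipartUpload": {"DaysAfterInitiation": days},
            }
        ]
    }


def apply_empty_bucket_lifecycle(client, bucket, days=1):
    """Replace the bucket's lifecycle configuration with the rule above."""
    client.put_bucket_lifecycle_configuration(
        Bucket=bucket,
        LifecycleConfiguration=empty_bucket_lifecycle(days),
    )
```

A call such as apply_empty_bucket_lifecycle(boto3.client("s3"), "my-bucket") would set the rule; the waiting described below still applies.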

This will take a couple of days, so stock up on patience! I am writing this 5 full days after enabling lifecycle policies on my buckets, and those buckets are still not empty. AWS support looked into my case and told me this:

I see that the rule has removed 30,222,969 objects since 2/20. However, the process is still ongoing. It is because LCs are asynchronous

30 million objects and still running! Yeah. Patience.
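While you wait, a small check can tell you when the bucket is finally ready to be deleted. This is a sketch under the assumption that "empty" means no object versions and no delete markers are left; the function names are illustrative, and client is expected to behave like boto3.client("s3").

```python
def bucket_is_empty(client, bucket):
    """Return True when no object versions or delete markers remain."""
    # One key is enough to know the bucket is not empty yet
    response = client.list_object_versions(Bucket=bucket, MaxKeys=1)
    return not response.get("Versions") and not response.get("DeleteMarkers")


def delete_bucket_if_empty(client, bucket):
    """Delete the bucket once it is empty; return whether it was deleted."""
    if not bucket_is_empty(client, bucket):
        return False
    client.delete_bucket(Bucket=bucket)
    return True
```

Run it once a day from the same orchestrator, and stop when delete_bucket_if_empty returns True.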