Photo by Timothy Eberly on Unsplash

Meta’s Llama 2 has just been released, and the AI community is eager to try it.

Llama 2 is a family of open-source, top-notch large language models released by Meta. It was trained on 40% more data than its predecessor, and the family includes both pretrained and fine-tuned (chat) models with 7B, 13B, and 70B parameters.

In this article, we’ll look at the options available for accessing Llama 2 at the time of writing:

- Access through Hugging Face Demos and HuggingChat: This is the easiest way to try it, since you can ask questions directly in a chat interface, just like in ChatGPT.

- Download Locally: This is done by following the steps provided by Meta.

- Access through Hugging Face Transformers: Once you complete Meta’s steps, you can import the Llama 2 models from Hugging Face with the transformers library, using an Access Token.

1. Llama 2 on Hugging Face Demos

If you want to try Llama 2 right away, head over to this Hugging Face space.

Below we’ll try the different demos available on Hugging Face and compare their outputs. The input is the same for all model sizes:

I have only 4 ingredients, tomatoes, bread, garlic and olive oil. What kind of dish or dishes, can I do with them?
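The demos take care of prompt formatting for you. If you later send this prompt to a Llama 2 chat model yourself, it should be wrapped in the [INST] ... [/INST] template the chat models were fine-tuned on, with an optional <<SYS>> block for a system prompt. The sketch below builds that string for a single-turn conversation, assuming the tokenizer adds the beginning-of-sequence token <s> itself (the Llama tokenizer in transformers does so by default). The function name and the cooking-assistant system prompt are our own, hypothetical choices.

```python
from typing import Optional

# Delimiters used by the Llama 2 chat models.
B_INST, E_INST = "[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"


def build_llama2_chat_prompt(user_message: str, system_prompt: Optional[str] = None) -> str:
    """Format a single-turn prompt for a Llama 2 chat model.

    The BOS token <s> is deliberately left out: the tokenizer prepends it
    when encoding.
    """
    content = user_message.strip()
    if system_prompt:
        # The system prompt is folded into the first user turn.
        content = B_SYS + system_prompt.strip() + E_SYS + content
    return f"{B_INST} {content} {E_INST}"


prompt = build_llama2_chat_prompt(
    "I have only 4 ingredients, tomatoes, bread, garlic and olive oil. "
    "What kind of dish or dishes, can I do with them?",
    system_prompt="You are a helpful cooking assistant.",  # hypothetical system prompt
)
print(prompt)
```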

Llama2 70B Chatbot

Llama2 70B Chatbot on Hugging Face:

The snapshot shows the chat interface of Llama2 70B Chatbot. The output is the following:

Here’s a simple recipe to make Bruschetta with your ingredients:
Ingredients:
• 4–6 slices of bread
• 2–3 cloves of garlic, peeled and minced
• 2 medium tomatoes, diced
• 2–3 tablespoons of olive oil
• Salt and pepper to taste
• Optional: basil leaves, chopped fresh parsley, or other herbs for garnish
Instructions:
• Preheat your oven to 400°F (200°C).
• Slice the bread into 1/2-inch thick slices and place it on a baking sheet.
• Rub the garlic cloves on the bread slices, making sure to spread the garlic evenly.

The explanation is pretty decent, but it could have suggested more dishes, such as tomato soup, salad, or salmorejo, a traditional Spanish dish.

Llama2 13B Chatbot

For this model, the output actually offered more options than the larger model’s:

Hello! With the four ingredients you have, you can make a variety of dishes. Here are some ideas:
• Bruschetta: Toast the bread, then top it with garlic, tomatoes, and olive oil. You can also add salt and pepper to taste.
• Tomato and Garlic Bread: Mix the tomatoes and garlic with olive oil, then spread the mixture on the toasted bread.
• Tomato and Garlic Pasta: Cook pasta, then top it with a mixture of the tomatoes, garlic, and olive oil.
• Tomato and Garlic Salad: Chop the tomatoes and mix them with garlic and olive oil, then serve over the bread.
• Tomato and Garlic Soup: Blend the tomatoes and garlic with olive oil, then serve with the bread on the side.

Llama-2 7B Chat

The smallest model’s answer was also decent, but it added ingredients I don’t have (basil, cheese, broth, balsamic vinegar) to some dishes, which makes it less accurate.

Great question! With the ingredients you have, there are several delicious dishes you can create. Here are some ideas:
• Bruschetta: Toast the bread, then top it with diced tomatoes, minced garlic, and a drizzle of olive oil. Salt and pepper to taste.
• Tomato and Basil Salad: Chop the tomatoes and mix them with chopped fresh basil, salt, and pepper. Drizzle with olive oil and serve as a side dish.
• Tomato Soup: Use the tomatoes, garlic, and olive oil to make a simple tomato soup. Blend the ingredients together with some broth and season with salt, pepper, and herbs.
• Grilled Cheese and Tomato Sandwich: Grill a sandwich with sliced bread, tomato, and cheese for a classic comfort food.
• Caprese Salad: Slice the tomatoes and layer them with mozzarella cheese and basil leaves. Drizzle with olive oil and balsamic vinegar.

ChatGPT

Out of curiosity, and for comparison, I tried the same input on ChatGPT:

With those four ingredients, you can make a classic and delicious dish called “Bruschetta.” Bruschetta is an Italian appetizer that consists of toasted bread topped with fresh ingredients. Here’s a simple recipe for Tomato Bruschetta:
Ingredients:
• Tomatoes (ripe and firm)
• Bread (baguette or any crusty Italian bread)
• Garlic cloves (1–2 cloves, depending on your preference)
• Olive oil
• Salt and pepper (optional)
Instructions:
• Prepare the tomatoes: Dice the ripe and firm tomatoes into small pieces. You can remove the seeds if you prefer less moisture in your bruschetta. Place the diced tomatoes in a bowl.
• Flavor the tomatoes: Finely chop one or two garlic cloves and add them to the bowl of tomatoes. Drizzle a generous amount of olive oil over the mixture and add a pinch of salt and pepper to taste. Mix everything together thoroughly and let it sit for a few minutes to allow the flavors to meld.
• Toast the bread: Slice the bread into thick pieces, about ½ inch to 1 inch in thickness. You can toast the bread in a toaster or under the broiler in your oven. Drizzle a bit of olive oil on each slice before toasting for extra flavor.
• Assemble the bruschetta: Once the bread is toasted, take each slice and top it generously with the tomato mixture. Make sure to include some of the olive oil and garlic from the bottom of the bowl to add more flavor.
• Serve and enjoy: Arrange the bruschetta on a serving platter and serve immediately while the bread is still warm and crispy.

Interestingly, the answer was very similar to the one from Llama2 70B Chatbot. Both were accurate in that they stuck to the four ingredients (apart from salt and pepper), but they lacked variety; in that respect, Llama2 13B Chatbot did better.

2. Llama 2 on Hugging Chat

If you haven’t used Hugging Chat before, you haven’t missed much until now. The default model in this chatbot interface is oasst-sft-6-llama-30b, but since the release of Llama 2, you can swap models in Hugging Chat. See the demonstration below.

Swap models in Hugging Chat:

3. Llama 2 with Transformers

To use the model yourself, you can either load it from Hugging Face with the transformers library or download it locally; both options require completing Meta’s access request first.

Create an account on Hugging Face

Start by creating a Hugging Face account here if you don’t have one yet, and get an Access Token by going to Settings and clicking on New Token under Access Tokens.

Create an Access Token on Hugging Face:

Keep the token safe; you’ll need it shortly.
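One way to keep the token out of notebooks and source files is to store it in an environment variable and read it at runtime. The sketch below assumes a variable named HF_TOKEN; that name and the helper read_hf_token are our own, hypothetical choices.

```python
import os


def read_hf_token(env_var: str = "HF_TOKEN") -> str:
    """Return the Hugging Face access token stored in an environment variable.

    Reading the token from the environment, instead of pasting it into a
    cell, avoids leaking it when the notebook or script is shared.
    """
    token = os.environ.get(env_var, "").strip()
    if not token:
        raise RuntimeError(
            f"Set the {env_var} environment variable to your Hugging Face access token."
        )
    return token
```

In a plain Python script, where the notebook login widget isn’t available, the returned value can be passed to huggingface_hub’s login(token=...) function.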

Download Llama 2

To use the Llama 2 models, go to Meta’s website and click Download the Model, then provide some information and agree to the terms of use.

Download Llama 2 from Meta:

As soon as you provide your contact details, you’ll receive an email from Meta:

The models listed below are now available to you as a commercial license holder. By downloading a model, you are agreeing to the terms and conditions of the license, acceptable use policy and Meta’s privacy policy

By “the models listed below”, they mean: Llama-2-7b, Llama-2-7b-chat, Llama-2-13b, Llama-2-13b-chat, Llama-2-70b and Llama-2-70b-chat.

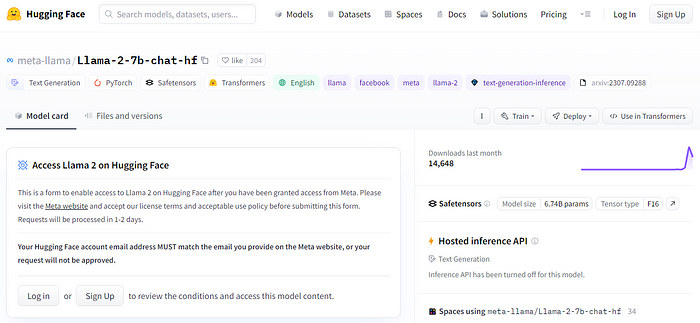

Meta’s GitHub repository for Llama 2 provides a README explaining how to download these models locally or load them using Hugging Face. For the latter, you need to request access to the models on Hugging Face. It doesn’t matter which model you request: access is granted to all of them. Let’s take meta-llama/Llama-2-7b-chat-hf as an example.
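The six checkpoints follow a predictable naming pattern on the Hugging Face Hub, modeled on meta-llama/Llama-2-7b-chat-hf, where the -hf suffix marks weights converted to the transformers format. The helper below (its name is our own) builds those repo IDs from that pattern; treat it as a convenience sketch and double-check each ID on the Hub before relying on it.

```python
# Nominal model sizes published by Meta.
SIZES = ("7b", "13b", "70b")


def llama2_repo_ids(chat: bool = True) -> list[str]:
    """Build Hub repo IDs for the transformers-format Llama 2 checkpoints."""
    suffix = "-chat-hf" if chat else "-hf"
    return [f"meta-llama/Llama-2-{size}{suffix}" for size in SIZES]


print(llama2_repo_ids())            # chat (fine-tuned) models
print(llama2_repo_ids(chat=False))  # pretrained base models
```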

Request access to Llama-2-7b-chat-hf:

In my case, access was granted within a couple of minutes, not the 1 or 2 days they mention.

Use in Transformers

The Llama 2 models require a lot of RAM to load, so I’ll explain the procedure without running the models locally myself.
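To put “a lot of RAM” into numbers, a back-of-the-envelope estimate is parameter count × bytes per parameter. The sketch below uses the nominal sizes (7B, 13B, 70B) and counts only the weights, ignoring activations, the key/value cache, and framework overhead, so real usage will be higher. Keep in mind that from_pretrained loads weights in float32 unless you pass a torch_dtype, which is what the loading code in this section does as written. The dictionary and function names are our own.

```python
# Bytes needed to store one parameter in common precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def weight_memory_gb(n_params: float, dtype: str = "float16") -> float:
    """Rough memory (1 GB = 1e9 bytes) needed just to hold the model weights."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


for n_params in (7e9, 13e9, 70e9):
    print(
        f"{n_params / 1e9:.0f}B params: "
        f"{weight_memory_gb(n_params, 'float32'):.0f} GB in float32, "
        f"{weight_memory_gb(n_params, 'float16'):.0f} GB in float16"
    )
```

For the 7B model this gives about 28 GB in float32 and 14 GB in float16, and for the 70B model about 280 GB and 140 GB, which is why the larger models are out of reach for most laptops.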

Start by opening a notebook (.ipynb) and installing the requirements:

!pip install transformers

!pip install huggingface_hub

Remember the Access Token? Now it’s time to use it to import the model from Hugging Face.

from huggingface_hub import notebook_login

notebook_login()

The command above opens a widget where you can paste your access token; once you’re logged in, you can import gated models such as Llama 2 from Hugging Face.

Now click on Use in Transformers on the model’s page, and copy the code to your notebook.

# Load model directly

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("meta-llama/Llama-2-7b-chat-hf")

model = AutoModelForCausalLM.from_pretrained("meta-llama/Llama-2-7b-chat-hf")

And that’s it: this should be enough to load the Llama 2 models through the transformers library, provided you have enough RAM.
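Loading the model is only half the story: to get an answer, you also need to tokenize a prompt, call generate, and decode the result. Below is a minimal sketch, assuming model and tokenizer were loaded with the code above. The helper name generate_reply and the max_new_tokens default of 256 are our own choices, and the prompt uses the single-turn [INST] ... [/INST] format of the Llama 2 chat models without a system prompt.

```python
def generate_reply(model, tokenizer, user_message: str, max_new_tokens: int = 256) -> str:
    """Ask a Llama 2 chat model one question and return only the newly generated text."""
    # Wrap the message in the [INST] ... [/INST] template used by the chat models;
    # the tokenizer prepends the BOS token <s> on its own.
    prompt = f"[INST] {user_message.strip()} [/INST]"
    # Tokenize and move the tensors to the same device as the model.
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # generate() returns the prompt followed by the completion; drop the prompt tokens.
    prompt_length = len(inputs["input_ids"][0])
    return tokenizer.decode(output_ids[0][prompt_length:], skip_special_tokens=True)
```

For example, generate_reply(model, tokenizer, "I have only 4 ingredients, tomatoes, bread, garlic and olive oil. What kind of dish or dishes, can I do with them?") should return the model’s answer to the prompt used in the demos. Slicing off the prompt tokens keeps the question and the [INST] wrapper out of the returned text.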

Next Steps

The goal of this tutorial was to explain the different ways of using Llama 2 models at the time of writing. It would be good to see smaller models that require less RAM, so they can reach more people. Also, LangChain integration is not yet available, and the Hugging Face Inference API is currently turned off for the whole Llama 2 family. Since the AI ecosystem moves as fast as a cheetah, it won’t be long before we have more seamless integrations with the Llama 2 LLMs.