In the field of deep learning, understanding how models make decisions is essential. The search for explanatory power has led to the development of techniques that shed light on the inner workings of complex algorithms. However, a profound challenge remains: these models often operate as “black boxes,” making it difficult to understand their decisions.

Imagine trusting a self-driving car without knowing why it made a critical move, or relying on a medical AI diagnosis without insight into its reasoning. This article is your guide to unlocking the inner workings of these AI enigmas. We’ll explore techniques that bring transparency to AI models, empowering you to make sense of their decisions. In an era where AI shapes our lives, this understanding is not just a curiosity; it’s a moral imperative. Join us on this journey to bridge the gap between technology and trust.

Fundamentals of Explainability

In this section, we will dive into the fundamental aspects of model explainability, a key element in understanding complex machine learning models. We’ll explore how interpretability contributes to more transparent and trustworthy AI systems.

- Understanding Black Box Models

- Importance of Explainable AI

- Practical Use Cases

Understanding Black Box Models: Machine learning models, especially deep neural networks, are often considered “black boxes” because their complexity hides the patterns that drive their predictions. Interpretation techniques aim to expose these patterns. For example, for deep learning image classifiers, explanation methods can identify the image features that matter most for a prediction, shedding light on why the model assigns an image to a particular class. This transparency is crucial in applications such as autonomous vehicles, where understanding why a car makes a particular decision is necessary to ensure safety.

Importance of Explainable AI: In fields like healthcare, where AI is increasingly used to support medical diagnoses, explainability is essential. For example, imagine an AI system that identifies potential tumours in medical images. By applying explanation techniques, doctors can understand why the AI has flagged a particular area as suspicious, which increases their confidence in the system and supports the decision-making process.

Practical Use Cases: To illustrate this, consider a credit approval model. By applying explanation tools, financial institutions can explain to applicants why their credit application was accepted or denied, giving applicants useful information for improving their creditworthiness. Another example is predictive maintenance of industrial equipment: explainable AI can show why a machine is likely to fail, allowing for timely and cost-effective maintenance.

Traditional Methods

In this section, we will explore traditional machine learning model interpretation methods, focusing primarily on linear models. These methods have long been used as the basis for the interpretability of models.

- Interpreting Linear Models

- Feature Importance Analysis

- Limitations of Traditional Approaches

Interpreting Linear Models: Linear models are celebrated for their transparency. They assign a weight to each input feature, so it is relatively easy to see how a change in a feature affects the prediction: each weight is the change in the predicted value per one-unit change in that feature, holding the other features fixed. For example, in a linear regression model predicting house prices, a large positive weight on the number of bedrooms means that each additional bedroom raises the predicted price by that amount. Because raw weights depend on the units of each feature, comparing their magnitudes is only meaningful once the features are on a common scale (for example, standardized). In a linear classification model for sentiment analysis, a high weight on the presence of positive words indicates that these words push the model strongly toward a positive sentiment prediction.
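To make the coefficient reading concrete, here is a minimal sketch using NumPy least squares on synthetic house-price data. The generating rule (the weights on the hypothetical features `bedrooms`, `area_m2`, and `age_years`) is an assumption invented for illustration, which lets us check that the fitted weights recover it.

```python
import numpy as np

# Synthetic, hypothetical housing data with a known generating rule.
rng = np.random.default_rng(0)
n = 500
bedrooms = rng.integers(1, 6, size=n).astype(float)  # 1..5 bedrooms
area_m2 = rng.uniform(40, 250, size=n)
age_years = rng.uniform(0, 60, size=n)

# Assumed "true" price rule (illustrative only) plus Gaussian noise.
price = 20_000 * bedrooms + 1_500 * area_m2 - 800 * age_years + 50_000
price += rng.normal(0, 5_000, size=n)

# Design matrix with an intercept column, fitted by ordinary least squares.
X = np.column_stack([bedrooms, area_m2, age_years, np.ones(n)])
weights, *_ = np.linalg.lstsq(X, price, rcond=None)

names = ["bedrooms", "area_m2", "age_years", "intercept"]
for name, w in zip(names, weights):
    # Each weight: change in predicted price per one-unit change in that
    # feature, holding the other features fixed.
    print(f"{name:>10}: {w:12.1f}")

# Raw weights depend on units; scaling by each feature's standard deviation
# gives the effect of a "typical" change, which is comparable across features.
std_effect = weights[:3] * X[:, :3].std(axis=0)
print("standardized effects:", np.round(std_effect, 1))
```

In this setup `bedrooms` receives the largest raw weight among the features, yet `area_m2` has the largest standardized effect because it varies over a much wider range, which is why raw coefficients should only be compared once features share a scale.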

Feature Importance Analysis: Traditional methods often involve feature importance analysis, which ranks features by their contribution to the model’s predictions. For example, in a credit scoring model, feature importance analysis might reveal that a person’s credit history and income level are the most important factors in determining their creditworthiness. Similarly, in a healthcare application, it can highlight that a patient’s age and medical history play an important role in predicting disease risk.
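One widely used, model-agnostic way to compute feature importance is permutation importance: shuffle one feature at a time and measure how much a performance metric drops. The sketch below implements it from scratch with NumPy; the credit model, its feature names, and the synthetic data are hypothetical. (scikit-learn ships a production version as `sklearn.inspection.permutation_importance`.)

```python
import numpy as np


def permutation_importance(predict, X, y, metric, n_repeats=10, seed=0):
    """Mean drop in `metric` when each column of X is shuffled.

    `predict` maps an (n, d) array to n predictions, and `metric(y, y_hat)`
    returns a score where higher is better (for example, accuracy).
    """
    rng = np.random.default_rng(seed)
    baseline = metric(y, predict(X))
    importances = np.zeros(X.shape[1])
    for j in range(X.shape[1]):
        drops = []
        for _ in range(n_repeats):
            X_perm = X.copy()
            # Shuffling one column breaks its link to the target while
            # keeping that feature's distribution unchanged.
            X_perm[:, j] = rng.permutation(X_perm[:, j])
            drops.append(baseline - metric(y, predict(X_perm)))
        importances[j] = np.mean(drops)
    return importances


def accuracy(y, y_hat):
    return float(np.mean(y == y_hat))


# Hypothetical credit model: a fixed rule that relies mostly on column 0
# ("credit_history"), partly on column 1 ("income"), and ignores column 2.
def credit_model(X):
    return (0.7 * X[:, 0] + 0.3 * X[:, 1] > 0).astype(int)


rng = np.random.default_rng(1)
X = rng.normal(size=(1000, 3))
y = credit_model(X)  # synthetic labels, so the baseline accuracy is 1.0
scores = permutation_importance(credit_model, X, y, accuracy)
for name, s in zip(["credit_history", "income", "unused"], scores):
    print(f"{name:>15}: {s:.3f}")
```

Because the model never reads the third column, shuffling it leaves every prediction unchanged and its importance is exactly zero, while `credit_history` outranks `income`.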

Limitations of Traditional Approaches: Although these traditional methods provide interpretability, they also have limitations. Reading a model through its weights assumes a linear relationship between features and outcomes, which does not hold in complex nonlinear models such as deep learning networks. For example, in image classification, traditional linear methods struggle to explain why a convolutional neural network (CNN) recognizes a particular object, because that recognition emerges from the network’s hierarchy of learned representations rather than from individual pixels.

Advanced Techniques

In this section, we will explore advanced techniques for model interpretability, going beyond traditional methods. These techniques offer deeper insights into complex models like deep learning networks.

Understanding SHAP Values: Shapley Additive Explanations

- Theory and Concept: SHAP values originate from cooperative game theory, specifically Shapley values. They distribute the difference between a model’s prediction and a baseline (average) prediction among the input features, giving each feature a fair share of the credit. For example, when analyzing a deep learning model for image classification, SHAP values can reveal that certain pixels significantly influence the model’s decision, helping you understand why the model recognizes certain patterns.

- Application to Deep Learning: SHAP values can be applied to deep learning models, such as neural networks, to interpret their predictions. In natural language processing, SHAP values can highlight the importance of specific words in a text input, explaining why a model classifies a document in a certain way.

- Practical Use Cases: SHAP values are applied in many fields, such as healthcare, finance, and natural language processing. In healthcare, SHAP values can reveal why a machine learning model recommends a specific treatment for a patient based on their medical history, supporting transparency and trust.

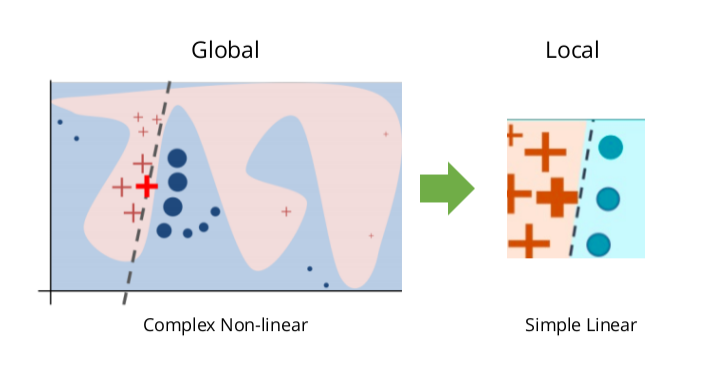
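The game-theoretic idea behind SHAP can be shown directly by computing exact Shapley values: average each feature’s marginal contribution over every possible coalition of the other features. This is a brute-force sketch under one common assumption (an "absent" feature takes a baseline value); it is not the `shap` library’s algorithm, which relies on faster approximations, and the toy `model` is hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(f, x, baseline):
    """Exact Shapley values of model f at input x.

    Features outside a coalition are set to their baseline value.
    Cost grows as 2^d model calls, so this only suits small d.
    """
    d = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(d)]
        return f(z)

    phi = [0.0] * d
    for i in range(d):
        others = [j for j in range(d) if j != i]
        for k in range(d):  # coalition sizes 0 .. d-1
            weight = factorial(k) * factorial(d - k - 1) / factorial(d)
            for subset in combinations(others, k):
                s = set(subset)
                # Marginal contribution of feature i to coalition s.
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


# Hypothetical model with one additive feature and one interaction.
def model(z):
    return 2 * z[0] + z[1] * z[2]


x = [1.0, 2.0, 3.0]
baseline = [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print(phi)
```

The additive term gives feature 0 a value of 2, and the interaction `z[1] * z[2]` (worth 6 at `x`) is split equally, 3 each, between features 1 and 2. The three values sum to f(x) minus f(baseline) = 8, the additivity property that SHAP explanations rely on.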

LIME (Local Interpretable Model-agnostic Explanations)

Source: lime-local-interpretable-model-agnostic-explanations

- Core Principles: LIME explains an individual prediction by fitting a simple, interpretable model that is faithful to the complex model in the neighbourhood of that input. Because it only needs the model’s predictions, it is model-agnostic. For example, if you use a complex ensemble model to predict stock prices, LIME can provide a simple, easy-to-understand model that explains why the ensemble predicts a particular price change for a particular stock on a given day.

- Implementing LIME with Deep Learning: LIME can be integrated into deep learning pipelines to understand individual predictions. For example, if a deep reinforcement learning model controls a robotic arm, LIME can help you understand why the model chose a particular action in a particular state of the environment.

- Case Studies: LIME has been used to improve model interpretability in diverse applications. In autonomous driving, for example, LIME can clarify why a self-driving car decided to change lanes in a complex traffic scenario, making it easier for developers to trust and fine-tune the driving algorithms.
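The core LIME recipe (perturb the input, query the black box, weight samples by proximity, fit a simple model) can be sketched in a few lines of NumPy. This is a simplified from-scratch version for tabular inputs, not the `lime` package: the Gaussian perturbation scale, the exponential kernel width, and the toy `black_box` function are all assumptions.

```python
import numpy as np


def lime_explain(predict, x, n_samples=2000, scale=1.0, kernel_width=0.75, seed=0):
    """LIME-style local explanation for a single tabular input x.

    Fits a proximity-weighted linear model to the black box's outputs on
    random perturbations around x. Returns (coefficients, intercept).
    """
    rng = np.random.default_rng(seed)
    d = x.shape[0]
    # 1. Sample perturbations around the instance being explained.
    Z = x + rng.normal(0.0, scale, size=(n_samples, d))
    y = predict(Z)
    # 2. Weight each sample by its proximity to x (exponential kernel).
    dist = np.linalg.norm((Z - x) / scale, axis=1)
    w = np.exp(-(dist ** 2) / (kernel_width ** 2 * d))
    # 3. Weighted least squares: multiply rows by sqrt(weight).
    A = np.column_stack([Z - x, np.ones(n_samples)])
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return coef[:-1], coef[-1]


# Hypothetical black-box model standing in for, e.g., an ensemble.
def black_box(Z):
    return np.sin(Z[:, 0]) + Z[:, 1] ** 2


x = np.array([0.0, 1.0])
coef, intercept = lime_explain(black_box, x, scale=0.1)
print("local weights:", coef, "intercept:", intercept)
```

With a small perturbation scale, the surrogate’s coefficients approximate the black box’s local slopes at `x` (about 1 for the sine term and about 2 for the squared term). Widening `scale` makes the explanation less local.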

Visualization Tools

Visualization tools play an important role in improving the interpretability of machine learning models. They enable data scientists and stakeholders to better understand model behaviour and predictions. Here we will discuss some widely used visualization tools and how they make models easier to understand.

- Matplotlib

- Seaborn

- TensorBoard

- Plotly

- Tableau

Matplotlib: Matplotlib is a general-purpose Python library for creating static, animated, and interactive visualizations. It is especially useful for visualizing data distributions, feature importance, and model performance metrics. For example, you can use Matplotlib to create a bar chart showing the feature importances of a random forest classifier, making it easier to identify the most influential features in your model.

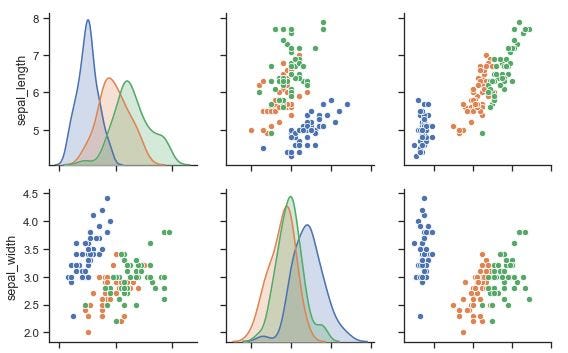
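A minimal Matplotlib sketch of such a chart follows. The feature names and importance values are hypothetical placeholders; in practice they might come from a fitted random forest’s `feature_importances_` attribute or from a permutation-importance run.

```python
import matplotlib

matplotlib.use("Agg")  # render off-screen; omit this line in a notebook
import matplotlib.pyplot as plt
import numpy as np

# Hypothetical importance scores, for illustration only.
features = ["credit_history", "income", "debt_ratio", "age", "num_accounts"]
importances = np.array([0.38, 0.27, 0.18, 0.10, 0.07])

# Sort ascending so that the most important feature is drawn at the top.
order = np.argsort(importances)
fig, ax = plt.subplots(figsize=(6, 3.5))
bars = ax.barh(np.array(features)[order], importances[order], color="steelblue")
ax.set_xlabel("Importance")
ax.set_title("Feature importance (illustrative values)")
ax.bar_label(bars, fmt="%.2f", padding=3)  # print each value next to its bar
fig.tight_layout()
fig.savefig("feature_importance.png", dpi=150)
```

A horizontal layout keeps long feature names readable, and sorting the bars makes the ranking visible at a glance.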

Seaborn:

Source: Seaborn (Python) — Data Visualization tool

Built on Matplotlib, Seaborn provides a high-level interface for creating informative and attractive statistical graphics. It simplifies tasks such as creating heat maps, pair plots, and violin plots. With Seaborn’s pair plots, you can visualize the pairwise relationships between the variables in a dataset, helping you detect patterns and correlations.

TensorBoard:

Source: TensorFlow

Developed by the TensorFlow team, TensorBoard is a powerful tool for visualizing machine learning experiments: it tracks training metrics and visualizes model architecture. You can use TensorBoard to plot a neural network’s training and validation loss curves during training, helping you evaluate its convergence and performance.

Plotly: Plotly is a popular choice for creating interactive, web-based visualizations. It is well suited to presenting model predictions, interactive dashboards, and geospatial data. Plotly is also free and open source: you can view the code, contribute, or report issues on GitHub. For example, you can use Plotly to build an interactive dashboard that displays real-time predictions from a machine learning model, giving stakeholders a user-friendly interface to explore the results.

Tableau: Tableau is a comprehensive data visualization platform that allows you to connect to various data sources, create interactive dashboards, and share insights with stakeholders. You can use Tableau to connect to a database, visualize customer segmentation results, and share that information with a non-technical audience at a business meeting.

Use Cases and Examples

Understanding the practical applications of model interpretability is essential to harnessing its power effectively. In this section, we will explore real-world use cases where interpretability techniques have had a significant impact.

- Credit Scoring

- Healthcare Diagnostics

- E-commerce Recommendations

- Autonomous Vehicles

- Legal and Regulatory Compliance

Credit Scoring: In the financial sector, and especially at credit institutions, credit scoring models are of utmost importance: they determine whether an individual or entity is eligible for a loan, credit card, or other form of credit. Explainable models, such as decision trees, can reveal the key factors behind a credit decision, such as income, credit history, and outstanding debt. Understanding the reasons behind credit decisions is important for both the financial institution and the applicant.
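Decision trees count as explainable because the path an applicant takes through the tree is itself the explanation. The sketch below hand-codes a tiny tree in plain Python and returns the rules applied along that path; the feature names, thresholds, and structure are hypothetical, not taken from any real lender or library.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    decision: str


@dataclass
class Node:
    feature: str
    threshold: float
    left: "Tree"   # branch taken when value <= threshold
    right: "Tree"  # branch taken when value > threshold


Tree = Union[Node, Leaf]


def explain(tree, applicant):
    """Walk the tree for one applicant; return (decision, rules applied)."""
    reasons = []
    while isinstance(tree, Node):
        value = applicant[tree.feature]
        if value <= tree.threshold:
            reasons.append(f"{tree.feature} = {value} <= {tree.threshold}")
            tree = tree.left
        else:
            reasons.append(f"{tree.feature} = {value} > {tree.threshold}")
            tree = tree.right
    return tree.decision, reasons


# Hypothetical tree with illustrative thresholds.
credit_tree = Node(
    "years_of_credit_history", 2,
    left=Leaf("deny"),
    right=Node(
        "debt_to_income", 0.4,
        left=Node("annual_income", 30_000, left=Leaf("deny"), right=Leaf("approve")),
        right=Leaf("deny"),
    ),
)

applicant = {"years_of_credit_history": 5, "debt_to_income": 0.25, "annual_income": 45_000}
decision, reasons = explain(credit_tree, applicant)
print(decision)
for rule in reasons:
    print(" -", rule)
```

The list of rules can be shown to the applicant directly, which is exactly the kind of reason-giving the credit scoring use case calls for.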

Healthcare Diagnostics: Explainable AI is increasingly important in healthcare, where it can help physicians make critical decisions. For example, in medical image analysis, explanation methods applied to convolutional neural networks (CNNs) can highlight the regions of an X-ray image that led to a specific diagnosis, giving doctors valuable information. Healthcare professionals must understand how and why a model arrived at a particular diagnosis to make informed decisions and provide the best possible patient care.
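One model-agnostic way to produce such a highlight map is occlusion sensitivity: mask one patch of the image at a time and record how much the model’s score for the diagnosis drops. The NumPy sketch below assumes a grayscale image and a scoring function; `lesion_score` is a hypothetical stand-in for a CNN’s class probability, and the patch size, stride, and fill value are assumptions to tune.

```python
import numpy as np


def occlusion_map(score_fn, image, patch=4, stride=4, fill=0.0):
    """Occlusion sensitivity for a 2-D grayscale image.

    `score_fn` maps an (H, W) array to a scalar score for the class of
    interest. Each pixel receives the average score drop over all patches
    that covered it; larger values mark more important regions.
    """
    H, W = image.shape
    base = score_fn(image)
    heat = np.zeros((H, W))
    counts = np.zeros((H, W))
    for top in range(0, H - patch + 1, stride):
        for left in range(0, W - patch + 1, stride):
            occluded = image.copy()
            occluded[top:top + patch, left:left + patch] = fill  # hide a patch
            drop = base - score_fn(occluded)
            heat[top:top + patch, left:left + patch] += drop
            counts[top:top + patch, left:left + patch] += 1
    return heat / np.maximum(counts, 1)


# Hypothetical "model": its score depends only on one 4x4 region.
def lesion_score(img):
    return float(img[8:12, 8:12].mean())


image = np.ones((16, 16))
heat = occlusion_map(lesion_score, image)
print(np.round(heat, 2))
```

Only the patch covering the region the toy score depends on receives a nonzero value, so the map points straight at it.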

E-commerce Recommendations: Online retailers use machine learning to make product recommendations. Transparent models can make it clear why a particular product is recommended to a user. Whether recommendations are based on a user’s browsing history, recent purchases, or the behaviour of similar users, transparency builds trust and improves the shopping experience.

Autonomous Vehicles: Self-driving cars rely on complex deep learning models. Explainable AI can show why a vehicle made a particular decision, such as slowing down or changing lanes. This transparency is important for safety and accountability, helping users trust autonomous systems.

Legal and Regulatory Compliance: In a legal context, it is important to understand the logic behind AI-based decisions. For example, making algorithmic hiring systems interpretable can help ensure compliance with anti-discrimination laws. If hiring patterns are biased toward certain demographic groups, interpretable AI can reveal these biases and support more equitable hiring practices.

Best Practices

In the area of AI model interpretability, several best practices ensure not only efficient and responsible interpretation but also seamless integration of these techniques into your AI workflow.

- Choosing the Right Explainability Technique

- Balancing Explainability and Model Performance

- Ethical Considerations

Choosing the Right Explainability Technique: The first important step is to choose the interpretation technique best suited to your specific AI model. Different models and use cases benefit from different approaches. For example, when working with deep learning models for autonomous vehicle control, SHAP values can provide insight into the factors that most influence decisions, helping to improve safety, integrity, and performance.

Balancing Explainability and Model Performance: Finding the right balance between a model’s explanatory power and its performance is an ongoing challenge. Simpler, more interpretable models may be less accurate, while the most accurate models are often the hardest to explain, so the goal is a point where interpretability does not unduly compromise predictive accuracy. In financial fraud detection, for example, maintaining this balance helps ensure that fraudulent activities are accurately identified without excessive errors.

Ethical Considerations: Ethical concerns are paramount when it comes to the interpretive capabilities of AI. Ensure that the interpretations provided by your model do not reinforce bias or discrimination and comply with ethical principles. For example, in the context of AI-based recruitment tools, careful ethical considerations are required to avoid any form of bias that could disadvantage certain groups of candidates.

Implementing Explainability in Practice

Integrating AI model interpretation into your workflow involves hands-on steps, code samples, and integration with popular deep learning frameworks. Here’s an overview of how to do this:

- Step-by-Step Guide: Integrating model interpretability works best as an explicit sequence of steps. In a natural language processing project focused on sentiment analysis, the first step may involve preprocessing the text data. This is followed by encoding the text and training the model, and finally by applying an interpretability technique such as LIME to understand how specific words or phrases influence sentiment predictions.

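- Code Samples: Providing code snippets can make implementation much easier. For example, when using Python with TensorFlow for image recognition, you can use the SHAP library to visualize which regions of an image drive a prediction. This practical approach guides practitioners in adding interpretive capabilities to their projects. The snippet here follows the pattern of SHAP’s image examples (an image masker passed to `shap.Explainer`); the model path, the evaluation budget, and the input data are placeholders to adapt:

```python
import shap
import tensorflow as tf

# Load your trained Keras image model here (placeholder path)
model = tf.keras.models.load_model('my_image_model')

# Assuming image_data is your preprocessed input batch, shape (N, H, W, C)
image_data = ...  # replace with your own images

# The masker hides image regions; "inpaint_telea" fills them by inpainting
masker = shap.maskers.Image("inpaint_telea", image_data[0].shape)

# Initialize the explainer with the model's prediction function and the masker
explainer = shap.Explainer(model.predict, masker)

# Explain the top predicted class; max_evals and batch_size trade speed for fidelity
shap_values = explainer(image_data, max_evals=500, batch_size=50,
                        outputs=shap.Explanation.argsort.flip[:1])

# Visualize the attributions
shap.plots.image(shap_values)
```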

- Integration with Common Deep Learning Frameworks: Many data scientists and engineers work with popular deep learning frameworks like TensorFlow or PyTorch, and interpretability techniques can be integrated directly into these environments. For example, SHAP can be applied to a PyTorch image classification model, helping practitioners understand which parts of an image are important for a prediction.
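The final step of the sentiment-analysis workflow, applying LIME to see which words drive a prediction, can be sketched from scratch: randomly drop words, query the classifier, weight each variant by its similarity to the original text, and fit a weighted linear model on the word-presence masks. This is a simplified illustration rather than the `lime` package (which provides `LimeTextExplainer` for this purpose); the lexicon-based `toy_sentiment` classifier and the kernel width are hypothetical.

```python
import numpy as np


def lime_text(predict_proba, text, n_samples=1000, kernel_width=0.25, seed=0):
    """LIME-style word importances for a single text.

    `predict_proba` maps a list of strings to an array of P(positive).
    Returns (word, weight) pairs sorted by absolute weight.
    """
    rng = np.random.default_rng(seed)
    words = text.split()
    d = len(words)
    # Each row is a binary mask: 1 keeps the word, 0 drops it.
    masks = rng.integers(0, 2, size=(n_samples, d))
    masks[0] = 1  # always include the original, unperturbed text
    texts = [" ".join(w for w, keep in zip(words, m) if keep) for m in masks]
    y = np.asarray(predict_proba(texts), dtype=float)
    # Cosine distance between a mask keeping k words and the all-ones mask
    # is 1 - sqrt(k / d); closer variants get larger weights.
    dist = 1.0 - np.sqrt(masks.sum(axis=1) / d)
    w = np.exp(-(dist ** 2) / kernel_width ** 2)
    # Weighted least squares on the masks plus an intercept column.
    A = np.column_stack([masks, np.ones(n_samples)]).astype(float)
    sw = np.sqrt(w)
    coef, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
    return sorted(zip(words, coef[:d]), key=lambda pair: -abs(pair[1]))


# Hypothetical lexicon-based sentiment model standing in for a trained classifier.
LEXICON = {"great": 2.0, "love": 1.5, "terrible": -2.0, "boring": -1.0}


def toy_sentiment(texts):
    logits = np.array([sum(LEXICON.get(tok, 0.0) for tok in t.split()) for t in texts])
    return 1.0 / (1.0 + np.exp(-logits))


explanation = lime_text(toy_sentiment, "this film felt boring but its ending was great")
for word, weight in explanation:
    print(f"{word:>8}: {weight:+.3f}")
```

Here “great” receives the largest positive weight and “boring” a negative one, while words the classifier ignores get weights near zero.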

Future Trends

The world of AI model interpretation is dynamic, with ever-evolving techniques, growing importance in AI ethics, and highly diverse industrial applications. Let’s take a closer look at future trends in AI model interpretability.

- Emerging Techniques

- The Role of Explainability in AI Ethics

- Industry Applications

Emerging Techniques: As AI research advances, new interpretation techniques continue to emerge. One such technique is Integrated Gradients, which is gaining ground in image classification tasks. Integrated Gradients attributes a prediction to the input features by accumulating the model’s gradients along a straight-line path from a baseline input (such as a black image) to the actual input, providing new insights into model decisions. For example, applying Integrated Gradients to a self-driving car’s image recognition system could reveal why the AI decided to stop at a particular intersection, thereby improving confidence and safety.
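Integrated Gradients averages the model’s gradient along the straight-line path from a baseline b to the input x and multiplies the result, feature by feature, by (x - b). A useful check is completeness: the attributions sum to F(x) - F(b). The sketch below uses a hypothetical logistic model with an analytic gradient so it stays self-contained; in a deep network, `grad_fn` would come from automatic differentiation (for example, `tf.GradientTape` in TensorFlow or `torch.autograd` in PyTorch). The number of steps is an assumption.

```python
import numpy as np


def integrated_gradients(grad_fn, x, baseline, steps=200):
    """Approximate Integrated Gradients with a midpoint Riemann sum.

    IG_i = (x_i - b_i) * integral over a in [0, 1] of dF/dx_i(b + a * (x - b))
    """
    alphas = (np.arange(steps) + 0.5) / steps  # midpoints of [0, 1]
    path = baseline + alphas[:, None] * (x - baseline)  # points from b to x
    grads = np.stack([grad_fn(p) for p in path])
    return (x - baseline) * grads.mean(axis=0)


# Hypothetical differentiable model: logistic regression with fixed weights.
W = np.array([1.5, -2.0, 0.5])
B = -0.2


def model(x):
    return 1.0 / (1.0 + np.exp(-(x @ W + B)))


def model_grad(x):
    p = model(x)
    return p * (1.0 - p) * W  # analytic gradient of the sigmoid output


x = np.array([1.0, 0.5, 2.0])
baseline = np.zeros(3)
attributions = integrated_gradients(model_grad, x, baseline)
print("attributions:", attributions)
print("sum:", attributions.sum(), "F(x) - F(b):", model(x) - model(baseline))
```

Increasing `steps` tightens the approximation; comparing the sum of the attributions with `model(x) - model(baseline)` is a quick sanity check that the path integral was computed correctly.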

The Role of Explainability in AI Ethics: As AI systems become increasingly integrated into our lives, the ethical implications of their decisions are increasingly coming into focus. Explainability plays a central role in ensuring ethical AI. Explainable models can help detect and overcome bias, promote fairness, and support accountability in autonomous systems. For example, in AI recruiting, interpretability can reveal biases in the hiring process, such as preferences for specific demographics. By ensuring transparency, it allows organizations to overcome these biases.

Industry Applications: Explainability has applications in many industries beyond technology, including finance, healthcare, and law. In finance, interpretable models can clarify why loan applications are rejected, leading to fairer lending practices. In healthcare, AI’s interpretive capabilities help doctors understand why an AI diagnostic system recommends a particular treatment. In law, understanding why an AI system flags specific documents as relevant in e-discovery can help attorneys save significant time and resources.

Conclusion

In conclusion, the interpretability of AI models is not a choice but a responsibility. It is the bridge that connects complex AI models with human understanding. On this journey, we explored fundamentals, advanced techniques, and real-world applications. By applying these techniques, we can ensure transparency, correct biases, and make fair, informed decisions. As the role of AI continues to evolve, interpretability becomes paramount to the ethical and equitable deployment of AI. So whether you’re a data scientist, machine learning engineer, or decision maker, embrace interpretability as a way to unlock the full potential of AI while respecting ethical values. Stay informed, stay accountable, and shape the future of AI responsibly.

References

- Lundberg, S. (2018). SHAP (Shapley Additive exPlanations). SHAP documentation.

- LIME (Local Interpretable Model-agnostic Explanations). (2023). C3.ai Data Science Glossary.

- Matplotlib. (2023). Matplotlib documentation.

- Matplotlib. (2023). Matplotlib documentation: Streamplot.

- Genesis. (2018). Seaborn documentation.

- Waskom, M. (2022). Seaborn documentation.

- TensorFlow. (2023). TensorBoard.

- Plotly. (2023). Plotly documentation.

- Plotly. (2023). Plotly documentation: Is Plotly free?

- Plotly. (2023). Plotly GitHub repository.

- Tableau. (2023). Tableau AI.
