When it comes to web scraping and working with data, everyone is interested in the useful information that can be extracted. Data runs the world, and organizations in particular analyze the data they extract to derive insights, make strategic business decisions, shape competitive marketing strategies, and more.

To extract meaningful information from large datasets, you need tools. Web scraping tools provide the functionality needed to automate the process efficiently, and they also help with data parsing, proxy rotation, rate limiting, data storage, error handling, and more. Popular options include Scrapy, Selenium, and Puppeteer. There is no doubt that such tools make scraping more manageable and efficient, and there are plenty of solutions to choose from.

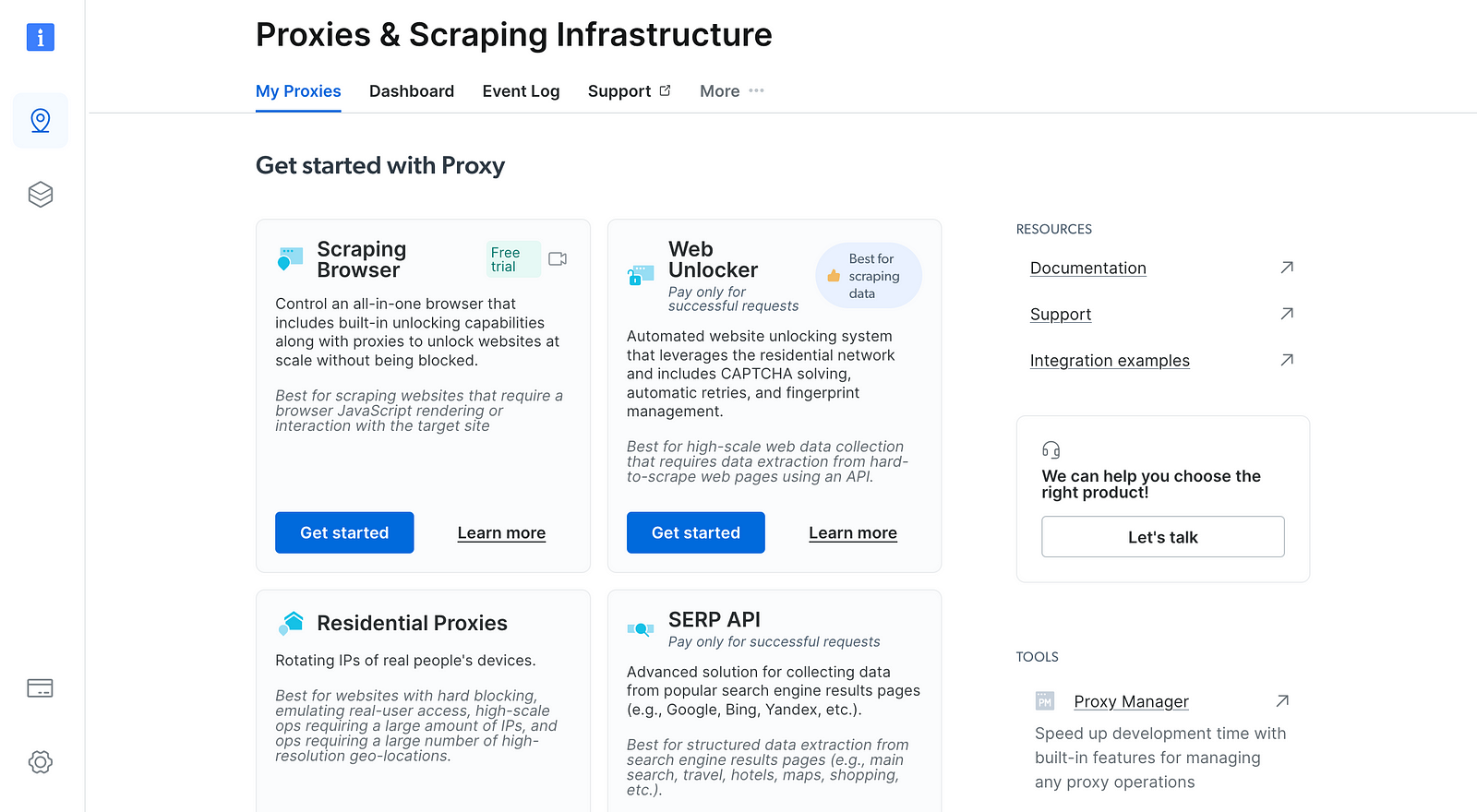

Enter Bright Data, a proxy infrastructure provider that also offers scraping solutions such as Scraping Browser and Scraping IDE.

In this article, we will discuss how to use Bright Data’s Scraping Browser together with Puppeteer to scrape an e-commerce website for data on trending products.

Scraping Browser as a scraping tool

To get started, visit brightdata.com. After registering, you should see your dashboard. Scraping Browser is a proxy-unlocking solution designed to let you focus on multi-step data collection from browsers while it handles the full proxy and unblocking infrastructure, including CAPTCHA solving.

Navigate to the ‘My Proxies’ page and, under ‘Scraping Browser’, click ‘Get started’.

Bright Data’s user dashboard

On the ‘Create a new proxy’ page, enter a name for your new Scraping Browser proxy zone. Note: choose a meaningful name, as a zone’s name cannot be changed once it is created.

To create and save your proxy, click ‘Add proxy’. After your account is verified, you can view your API credentials.

In your proxy zone’s ‘Access parameters’ tab, you’ll find your API credentials which include your Username and Password. You will use them to launch your first Scraping Browser session below.

Choosing a browser navigation library to use with Scraping Browser

There are many popular options, but we’ll be using Puppeteer. Puppeteer is a Node.js library that provides a high-level API to control Chrome/Chromium over the DevTools Protocol. It runs in headless mode by default and can do most things you can do manually in a browser, including generating screenshots and PDFs of pages.

Assuming Node.js is already installed, we create a directory called myproject and install puppeteer-core with npm:

```bash
mkdir myproject
cd myproject
npm install puppeteer-core
```

Set up Puppeteer with Scraping Browser

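We install puppeteer-core rather than the full puppeteer package because we are only connecting to a remote browser, and puppeteer-core does not download a bundled browser. We then create a file called main.js, require puppeteer-core, and write an async function, since Puppeteer’s API is promise-based.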

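```javascript
const puppeteer = require('puppeteer-core');

async function main() {
  // Connect to a remote browser instead of launching a local one.
  const browser = await puppeteer.connect({
    // browserWSEndpoint is still missing at this stage
  });
  try {
    const page = await browser.newPage();
    // Allow up to 2 minutes for the page to load.
    await page.goto('https://www.ebay.com/globaldeals', { timeout: 2 * 60 * 1000 });
  } finally {
    // Always close the browser, even if navigation fails.
    await browser.close();
  }
}
```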

We connect to our remote browser with Puppeteer’s connect method and await the result. We then create a new page and call its goto method with a timeout. Finally, we call close inside the finally block so the browser is always closed, even if navigation fails. Done? Not quite. We still need to supply a browserWSEndpoint to connect to, and then call main.
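Before adding the endpoint, it is worth seeing why close() sits in a finally block: if page.goto rejects (for example, on the two-minute timeout), the error still reaches the caller, but only after browser.close() has run, so the remote session is not left open. The sketch below shows this ordering without a real browser; createFakeBrowser and runSession are hypothetical stand-ins written for illustration, not Puppeteer APIs.

```javascript
// Hypothetical stand-in for a Puppeteer Browser: it only records calls.
function createFakeBrowser() {
  const calls = [];
  return {
    calls,
    async newPage() {
      calls.push('newPage');
      return {
        // Simulate a navigation that fails, e.g. because of a timeout.
        async goto(url) {
          calls.push(`goto ${url}`);
          throw new Error('Navigation timeout');
        },
      };
    },
    async close() {
      calls.push('close');
    },
  };
}

// Same shape as main(): the work goes inside try, the cleanup inside finally.
async function runSession(browser) {
  try {
    const page = await browser.newPage();
    await page.goto('https://www.ebay.com/globaldeals');
  } finally {
    // Runs whether goto resolved or rejected.
    await browser.close();
  }
}

const browser = createFakeBrowser();
runSession(browser).catch((err) => {
  // The original error still arrives here, after close() has been recorded.
  console.log(err.message, browser.calls);
});
```

The recorded calls end with 'close' even though goto threw, which is the guarantee main() relies on.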

```javascript
const puppeteer = require('puppeteer-core');

// Username and Password from the zone's Access parameters tab.
const AUTH = 'USER:PASS';
const SBR_WS_ENDPOINT = `wss://${AUTH}@brd.superproxy.io:9222`;

async function main() {
  const browser = await puppeteer.connect({
    browserWSEndpoint: SBR_WS_ENDPOINT,
  });
  try {
    console.log('Connected! Navigating...');
    const page = await browser.newPage();
    await page.goto('https://www.ebay.com/globaldeals', { timeout: 2 * 60 * 1000 });
    console.log('Navigated! Scraping page content...');
    // Full HTML of the page, including the DOCTYPE.
    const html = await page.content();
    console.log(html);
  } finally {
    await browser.close();
  }
}

// Run main and exit with a non-zero code if anything fails.
main().catch((err) => {
  console.error(err.stack || err);
  process.exit(1);
});
```

We take the Username and Password from the Access parameters tab on our Proxies dashboard and use them in the WebSocket URL, which we pass to connect as browserWSEndpoint. The goto timeout is set to 2 minutes (2 * 60 * 1000 milliseconds). We then call page.content() to get the full HTML of the page, including the DOCTYPE.
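Hard-coding USER:PASS in main.js makes it easy to commit credentials by accident. One option, sketched below under our own assumptions, is to read them from environment variables and fail fast if they are missing. The variable names BRD_USER and BRD_PASS and the helpers buildEndpoint and endpointFromEnv are hypothetical; the host and port are the ones used in the endpoint above.

```javascript
// Host and port of the Scraping Browser endpoint used in main.js.
const SBR_HOST = 'brd.superproxy.io';
const SBR_PORT = 9222;

// page.goto timeout used in main.js: 2 minutes expressed in milliseconds.
const NAV_TIMEOUT_MS = 2 * 60 * 1000;

// Build the WebSocket endpoint from the Username and Password shown in the
// zone's Access parameters tab. Throws if either value is missing.
function buildEndpoint(username, password) {
  if (!username || !password) {
    throw new Error('Missing Scraping Browser credentials');
  }
  return `wss://${username}:${password}@${SBR_HOST}:${SBR_PORT}`;
}

// Hypothetical variable names, e.g.: BRD_USER=... BRD_PASS=... node main.js
function endpointFromEnv(env = process.env) {
  return buildEndpoint(env.BRD_USER, env.BRD_PASS);
}
```

In main.js you would then pass `browserWSEndpoint: endpointFromEnv()` to puppeteer.connect and `{ timeout: NAV_TIMEOUT_MS }` to page.goto.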

```javascript
// ...

async function main() {
  const browser = await puppeteer.connect({
    browserWSEndpoint: SBR_WS_ENDPOINT,
  });
  try {
    console.log('Connected! Navigating...');
    const page = await browser.newPage();
    await page.goto('https://www.ebay.com/globaldeals', { timeout: 2 * 60 * 1000 });
    // page.content() resolves to the page's full HTML as a string.
    const html = await page.content();
    console.log(html);
  } finally {
    await browser.close();
  }
}

// ...
```

Targeting specific classes using query selectors

We can refine the results by using selectors to target the specific classes that hold the data we are interested in. Puppeteer supports this through its evaluate method, which runs a function inside the page itself, where we can use the document object to query and manipulate the DOM.

```javascript
// ...

async function main() {
  console.log('Connecting to Scraping Browser...');
  const browser = await puppeteer.connect({
    browserWSEndpoint: SBR_WS_ENDPOINT,
  });
  try {
    console.log('Connected! Navigating...');
    const page = await browser.newPage();
    await page.goto('https://www.ebay.com/globaldeals', { timeout: 2 * 60 * 1000 });
    // The callback runs inside the page, where `document` is available.
    // The tile selector is passed in as the argument after the callback.
    // Class names reflect eBay's markup at the time of writing and may change.
    const products = await page.evaluate((selector) => {
      const elements = Array.from(document.querySelectorAll(selector));
      return elements.map((el) => {
        // Optional chaining avoids a crash if a tile lacks a title or price.
        const name = el.querySelector('.dne-itemtile-title')?.textContent.trim();
        const price = el.querySelector('.price')?.textContent.trim();
        return { name, price };
      });
    }, '.dne-itemtile-detail');
    console.log({ products });
  } finally {
    await browser.close();
  }
}

// ...
```

Inside Puppeteer’s evaluate method, we query for the dne-itemtile-detail class on eBay’s Global Deals page using querySelectorAll. This returns a NodeList, which we pass to Array.from to get a new array we can iterate over.
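Because page.evaluate serializes its callback and runs it in the browser, that callback cannot call helpers defined in main.js, and it is awkward to test on its own. As a minimal sketch for checking the same logic in plain Node.js, the function below takes the root node as a parameter. fakeTile and fakeRoot are hypothetical stand-ins for DOM nodes, and the item names are made-up sample values, not scraped data.

```javascript
// Same logic as the page.evaluate callback, but the root node is a parameter
// so the function can run in Node.js against stand-in objects.
function extractProducts(root, tileSelector = '.dne-itemtile-detail') {
  // querySelectorAll returns a NodeList; Array.from copies any array-like or
  // iterable value into a real array so that map is available.
  const tiles = Array.from(root.querySelectorAll(tileSelector));
  return tiles.map((tile) => {
    // If a tile has no title or price node, ?. short-circuits and ?? yields null.
    const name = tile.querySelector('.dne-itemtile-title')?.textContent.trim() ?? null;
    const price = tile.querySelector('.price')?.textContent.trim() ?? null;
    return { name, price };
  });
}

// Hypothetical stand-in for an element: maps selectors to child text content.
function fakeTile(fields) {
  return {
    querySelector: (sel) => (sel in fields ? { textContent: fields[sel] } : null),
  };
}

// Hypothetical root whose querySelectorAll returns an array-like object,
// which, like a NodeList, has indexed entries and a length but no map method.
const fakeRoot = {
  querySelectorAll: () => ({
    length: 2,
    0: fakeTile({ '.dne-itemtile-title': ' Sample Item A ', '.price': '$19.99' }),
    1: fakeTile({ '.dne-itemtile-title': 'Sample Item B' }),
  }),
};

console.log(extractProducts(fakeRoot));
```

The second sample tile has no price node, so its price comes back as null; accessing `.textContent` directly on a missing node would throw a TypeError instead.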

We then call map on that array and return a new array of objects with name and price properties. With that in place, we have data we can derive insights from, use to make strategic decisions, and more.
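To derive insights from the scraped prices, the price strings usually need to become numbers first. A minimal sketch, assuming US-style price text such as $1,299.99 (comma as thousands separator, dot as decimal point); parsePrice and sortByPrice are hypothetical helpers, and price ranges or other currency formats would need extra handling.

```javascript
// Assumes US-style price strings such as "$1,299.99". Takes the first number
// found after removing thousands separators; returns null if there is none.
function parsePrice(text) {
  if (typeof text !== 'string') return null;
  const match = text.replace(/,/g, '').match(/\d+(?:\.\d+)?/);
  return match ? Number(match[0]) : null;
}

// Attach a numeric amount to each product and sort cheapest first;
// products whose price could not be parsed are placed last.
function sortByPrice(products) {
  return products
    .map((p) => ({ ...p, amount: parsePrice(p.price) }))
    .sort((a, b) => {
      if (a.amount === null) return b.amount === null ? 0 : 1;
      if (b.amount === null) return -1;
      return a.amount - b.amount;
    });
}
```

Keeping the original price string alongside the numeric amount makes it easy to spot entries the parser could not handle.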

Closing Thoughts

There will always be a need to analyze data, and for efficient tools that can sift through large amounts of it to extract insights, inform strategic decisions, and shape competitive marketing strategies.

With tools like Scraping Browser and Bright Data’s other offerings, you can make the scraping process more efficient and less error-prone.

Thanks for taking the time to read this piece. Connect with me on LinkedIn.
